An Interesting Application of a Likelihood-Based Asymptotic Method


M. Rekkas

Y. She

Y. Sun

A. Wong

Abstract

Fraser (1990) discussed how to obtain statistical inference for a scalar parameter of interest from the

likelihood function. Since then many authors have extended the method and applied it to various models.

In this paper we consider the one-sample normal problem. Using the likelihood-based asymptotic method

described in Fraser (1990), we obtain the p-value function for the mean parameter as well as the variance

parameter. By re-expressing the results, we derive simple and accurate normal approximations to the

Student $t$ and $\chi^2$ cumulative distribution functions.

Keywords: Canonical parameter; Exponential family model; Modified signed log-likelihood ratio

statistic; p-value function.

MSC classification codes: 62E20; 62F30

1 Introduction

Fraser (1990) showed how the statistical significance for a scalar canonical parameter of interest from an

exponential family model could be obtained from the log-likelihood function of the model. Since then,

many authors have extended the method to various models; Reid (2003) gives a summary of some of the

major results. It is interesting to note that the existing literature on likelihood-based higher order

methods has rarely discussed or examined the approximation of the cumulative distribution function of a test statistic.

In this paper we apply a likelihood-based third order method to the one-sample normal problem. With a

simple re-expression of the results, we obtain highly accurate approximations to the cumulative distribution

functions of the Student $t$ and $\chi^2$ distributions.

In Section 2, we first review the approach set out by Fraser (1990) and then we extend the method

for non-canonical parameters of interest. In Section 3, we apply our method to approximate the cumulative

distribution functions of the Student $t$ and $\chi^2$ distributions. Numerical comparisons of the proposed method

with some standard approximations are presented in Section 4. General discussions are given in Section 5.

2 Main Results

Let $x = (x_1, \ldots, x_n)$ be a random sample from a canonical exponential family model with log-likelihood function

$$\ell(\theta) = \ell(\theta; x) = \psi t + \lambda' s + k(\theta), \tag{2.1}$$

where $\theta = (\psi, \lambda')'$ is the $p$-dimensional canonical parameter, with $\psi$ being the scalar parameter of interest and $\lambda$ being the $(p-1)$-dimensional nuisance parameter. The minimal sufficient statistic is $(t, s) = (t(x), s(x))$.

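It is well known that $\hat\theta$, the maximum likelihood estimate, which satisfies

$$\ell_\theta(\hat\theta) = \left.\frac{\partial \ell(\theta)}{\partial \theta}\right|_{\theta=\hat\theta} = 0, \tag{2.2}$$

has asymptotic mean $\theta$ and asymptotic variance-covariance matrix given by $i^{-1}_{\theta\theta'}(\theta)$, where

$$i_{\theta\theta'}(\theta) = -E[\ell_{\theta\theta'}(\theta)] = -E\left(\frac{\partial^2 \ell(\theta)}{\partial\theta\,\partial\theta'}\right) \tag{2.3}$$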

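is the Fisher expected information matrix. As $i_{\theta\theta'}(\theta)$ can be difficult to evaluate, it is often approximated by

$$j_{\theta\theta'}(\hat\theta) = -\left.\frac{\partial^2 \ell(\theta)}{\partial\theta\,\partial\theta'}\right|_{\theta=\hat\theta} = \begin{pmatrix} j_{\psi\psi}(\hat\theta) & j_{\psi\lambda'}(\hat\theta) \\ j'_{\psi\lambda'}(\hat\theta) & j_{\lambda\lambda'}(\hat\theta) \end{pmatrix}, \tag{2.4}$$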

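which is the observed information matrix evaluated at $\hat\theta$. It is well known that, under moderate regularity, $(\hat\theta - \theta)' j_{\theta\theta'}(\hat\theta)(\hat\theta - \theta)$ is asymptotically distributed as $\chi^2$ with $p$ degrees of freedom. And for $\psi = (1, 0, \ldots, 0)\theta$, we have $(\hat\psi - \psi)'[j^{\psi\psi}(\hat\theta)]^{-1}(\hat\psi - \psi)$, which is asymptotically distributed as $\chi^2$ with 1 degree of freedom, where

$$j^{-1}_{\theta\theta'}(\hat\theta) = \begin{pmatrix} j^{\psi\psi}(\hat\theta) & j^{\psi\lambda'}(\hat\theta) \\ j^{\psi\lambda'}(\hat\theta)' & j^{\lambda\lambda'}(\hat\theta) \end{pmatrix}. \tag{2.5}$$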

Alternatively, we can present this result as

$$q = (\hat\psi - \psi)[j^{\psi\psi}(\hat\theta)]^{-1/2}, \tag{2.6}$$

where q is asymptotically distributed as standard normal. Standardizing the maximum likelihood estimate

in this way is generally referred to as Wald’s method. Notice that this method is not parameterization

invariant.

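An alternative likelihood-based method is based on the signed log-likelihood ratio statistic

$$r = r(\psi) = \mathrm{sgn}(\hat\psi - \psi)\{2[\ell(\hat\theta) - \ell(\hat\theta_\psi)]\}^{1/2}, \tag{2.7}$$

which is asymptotically distributed as standard normal. The vector $\hat\theta_\psi = (\psi, \hat\lambda_\psi')'$ denotes the constrained maximum likelihood estimate of $\theta$ for a given $\psi$, which satisfies

$$\ell_\lambda(\hat\theta_\psi) = \left.\frac{\partial \ell(\theta)}{\partial \lambda}\right|_{\theta=\hat\theta_\psi} = 0. \tag{2.8}$$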

The signed log-likelihood ratio statistic is invariant to reparameterization. It is important to note that both Wald's method and the signed log-likelihood ratio statistic method are first order methods, meaning they achieve $O(n^{-1/2})$ accuracy. Based on the Wald and signed log-likelihood ratio statistics, the p-value function for $\psi$, $p(\psi)$, can be approximated by $\Phi(q)$ and $\Phi(r)$, respectively, where $\Phi(\cdot)$ is the cumulative distribution function of the standard normal distribution.
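The contrast between the two first order statistics can be illustrated with a small sketch. It assumes the $N(0, \sigma^2)$ model treated in Section 3, and computes the Wald statistic in two parameter scales ($\sigma^2$ and $1/\sigma^2$) alongside the signed log-likelihood ratio statistic. The function names and the numerical inputs are hypothetical choices made for illustration only.

```python
import math

def norm_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def first_order_statistics(sum_sq, n, sigma2):
    """Wald and signed log-likelihood ratio statistics for sigma2 in the
    N(0, sigma2) model, given sum_sq = sum of x_i^2 (illustrative helper)."""
    sigma2_hat = sum_sq / n
    # Wald statistic in the sigma^2 scale; observed information is n / (2 sigma2_hat^2).
    q_var = (sigma2_hat - sigma2) * math.sqrt(n / 2.0) / sigma2_hat
    # Wald statistic in the 1/sigma^2 scale; observed information is n sigma2_hat^2 / 2.
    # The sign is flipped so that a positive value again means sigma2_hat > sigma2.
    q_prec = -(1.0 / sigma2_hat - 1.0 / sigma2) * math.sqrt(n / 2.0) * sigma2_hat
    # Signed log-likelihood ratio statistic; it is the same in either scale.
    x2 = sum_sq / sigma2
    r = math.copysign(math.sqrt((x2 - n) + n * math.log(n / x2)), x2 - n)
    return q_var, q_prec, r

q_var, q_prec, r = first_order_statistics(sum_sq=20.0, n=10, sigma2=1.0)
print(norm_cdf(q_var), norm_cdf(q_prec), norm_cdf(r))
```

In this example the two Wald statistics differ noticeably even though they describe the same hypothesis, while $r$ does not depend on the scale chosen.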

In the statistics literature, various likelihood-based small-sample asymptotic methods have been proposed that, in theory, achieve a high order of accuracy. One of these methods is given by Barndorff-Nielsen (1986, 1991) and is known as the modified signed log-likelihood ratio method,

$$r^*(\psi) = r(\psi) + \frac{1}{r(\psi)} \log \frac{Q(\psi)}{r(\psi)}, \tag{2.9}$$

where $r(\psi)$ is the signed log-likelihood ratio statistic as defined in (2.7) and $Q(\psi)$ is a standardized maximum likelihood departure measured in a certain parameterization scale. Barndorff-Nielsen (1986, 1991) showed that $r^*(\psi)$ is asymptotically distributed as standard normal with accuracy $O(n^{-3/2})$ provided that $Q(\psi)$ is specially chosen. Fraser (1990) showed that for the canonical exponential family model with canonical parameter $\theta = (\psi, \lambda')'$, $Q(\psi)$ is the standardized maximum likelihood estimate of $\psi$ where the estimated variance of $\hat\psi$ takes into consideration the elimination of the nuisance parameter $\lambda$. More specifically, Fraser (1990) showed that

$$Q(\psi) = (\hat\psi - \psi)\left\{\frac{|j_{\theta\theta'}(\hat\theta)|}{|j_{\lambda\lambda'}(\hat\theta_\psi)|}\right\}^{1/2}. \tag{2.10}$$

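Now consider a general exponential family model, with $\varphi = \varphi(\theta)$ being the canonical parameter and $\psi(\theta)$ being the scalar parameter of interest. The quantity $Q(\psi)$ has to be expressed in the canonical parameter, $\varphi(\theta)$, scale. Let $\varphi_\theta(\theta)$ be the derivative of $\varphi(\theta)$ with respect to $\theta$, and similarly, let $\varphi_\lambda(\theta)$ be the derivative of $\varphi(\theta)$ with respect to $\lambda$. Then by chain rule differentiation, we have

$$|j_{\varphi\varphi'}(\hat\theta)| = |j_{\theta\theta'}(\hat\theta)|\,|\varphi_\theta(\hat\theta)|^{-2} \tag{2.11}$$

and

$$|j_{(\lambda\lambda')}(\hat\theta_\psi)| = |j_{\lambda\lambda'}(\hat\theta_\psi)|\,|\varphi_\lambda'(\hat\theta_\psi)\varphi_\lambda(\hat\theta_\psi)|^{-1}. \tag{2.12}$$

Let $\chi(\theta)$ be a scalar linear version of $\varphi(\theta)$ that corresponds to $d\psi$ at $\theta = \hat\theta_\psi$. Then

$$\chi(\theta) = \frac{\psi_\varphi(\hat\theta_\psi)}{\|\psi_\varphi(\hat\theta_\psi)\|}\,\varphi(\theta), \tag{2.13}$$

where $\psi_\varphi(\theta)$ is the row of $\varphi_\theta^{-1}(\theta)$ that corresponds to $\psi$, and $\|\psi_\varphi(\hat\theta_\psi)\|^2$ is the squared length of the vector $\psi_\varphi(\hat\theta_\psi)$. Note that $\chi(\theta)$ can simply be viewed operationally as the scalar parameter of interest in the $\varphi(\theta)$ scale. Hence $Q(\psi)$ can be re-written as

$$Q(\psi) = \mathrm{sgn}(\hat\psi - \psi)\,|\chi(\hat\theta) - \chi(\hat\theta_\psi)|\left\{\frac{|j_{\varphi\varphi'}(\hat\theta)|}{|j_{(\lambda\lambda')}(\hat\theta_\psi)|}\right\}^{1/2}. \tag{2.14}$$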

Thus inference concerning $\psi(\theta)$ for a general exponential family model with canonical parameter $\varphi(\theta)$ can be obtained by using (2.14) in the $r^*(\psi)$ formula given in (2.9). In particular, the p-value function for $\psi$, $p(\psi)$, can be approximated by $\Phi(r^*(\psi))$ with $O(n^{-3/2})$ accuracy.

An alternative method to improve the accuracy of approximating the p-value function from the likelihood ratio statistic is due to Lugannani and Rice (1980). The p-value function for $\psi$ takes the following form:

$$\Phi(r(\psi)) + \phi(r(\psi))\left\{\frac{1}{r(\psi)} - \frac{1}{Q(\psi)}\right\}, \tag{2.15}$$

where $r(\psi)$ and $Q(\psi)$ are defined in (2.7) and (2.14) respectively, and $\phi(\cdot)$ is the probability density function of the standard normal distribution. It is interesting to note that Barndorff-Nielsen's method adjusts the statistic $r(\psi)$ such that the p-value function obtained from $r^*(\psi)$ is close to the true p-value function, whereas Lugannani and Rice's method adjusts the p-value function obtained from $r(\psi)$ such that it is close to the true p-value function. Fraser (1990) showed that these two adjustments are equivalent up to $O(n^{-3/2})$ accuracy.
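Both adjustments take only the pair $(r, Q)$ as input, so they can be sketched as two small functions. This is a minimal illustration of (2.9) and (2.15) using only the Python standard library. The function names are hypothetical, and the sketch assumes $r$ and $Q$ are nonzero and share the same sign (at $r = 0$ both formulas are of the form 0/0 and are not handled here).

```python
import math

def norm_cdf(z):
    """Standard normal cumulative distribution function Phi(z)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def norm_pdf(z):
    """Standard normal probability density function phi(z)."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def barndorff_nielsen(r, q):
    """Phi(r*) with r* = r + (1/r) log(Q/r), following (2.9)."""
    r_star = r + math.log(q / r) / r
    return norm_cdf(r_star)

def lugannani_rice(r, q):
    """Phi(r) + phi(r) (1/r - 1/Q), following (2.15)."""
    return norm_cdf(r) + norm_pdf(r) * (1.0 / r - 1.0 / q)

# When Q equals r, neither formula changes the first order value Phi(r).
print(barndorff_nielsen(1.2, 1.2), lugannani_rice(1.2, 1.2), norm_cdf(1.2))
# For Q different from r, the two adjusted values are close but not identical.
print(barndorff_nielsen(1.5, 1.1), lugannani_rice(1.5, 1.1))
```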

3 Approximating the cumulative distribution functions of the $t$ and $\chi^2$ distributions

In Section 2, the p-value function for a scalar parameter of interest from a general exponential family model was obtained. We now consider a random sample $x = (x_1, \ldots, x_n)$ from a normal distribution with mean $\mu$ and variance $\sigma^2$. This model is an exponential family model with log-likelihood function given by

$$\ell(\theta) = \ell(\mu, \sigma^2) = -\frac{n}{2}\log\sigma^2 - \frac{1}{2\sigma^2}\sum (x_i - \mu)^2. \tag{3.1}$$

A convenient version of the canonical parameter is

$$\varphi(\theta) = \left(\frac{1}{\sigma^2}, \frac{\mu}{\sigma^2}\right)'. \tag{3.2}$$

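It is easy to obtain the following results:

$$\hat\theta = (\hat\mu, \hat\sigma^2)' = (\bar x, (n-1)s^2/n)' = \left(\frac{\sum x_i}{n}, \frac{\sum (x_i - \bar x)^2}{n}\right)' \quad\text{and}\quad |j_{\theta\theta'}(\hat\theta)| = \frac{n^2}{2\hat\sigma^6}. \tag{3.3}$$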
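As a numerical sanity check of (3.3), the following sketch computes the maximum likelihood estimates and compares the closed-form determinant $n^2/(2\hat\sigma^6)$ with a finite-difference Hessian of the log-likelihood (3.1). The data values, the helper names, and the step size `h` are illustrative assumptions and not part of the paper.

```python
import math

def loglik(mu, s2, xs):
    """Normal log-likelihood (3.1), up to an additive constant."""
    n = len(xs)
    return -0.5 * n * math.log(s2) - sum((x - mu) ** 2 for x in xs) / (2.0 * s2)

def mle(xs):
    """Maximum likelihood estimates (mu_hat, sigma2_hat)."""
    n = len(xs)
    mu_hat = sum(xs) / n
    s2_hat = sum((x - mu_hat) ** 2 for x in xs) / n
    return mu_hat, s2_hat

def closed_form_det(xs):
    """Determinant of the observed information at the MLE, n^2 / (2 sigma2_hat^3)."""
    _, s2_hat = mle(xs)
    return len(xs) ** 2 / (2.0 * s2_hat ** 3)

def finite_difference_det(xs, h=1e-4):
    """Same determinant from central second differences of the log-likelihood."""
    mu, s2 = mle(xs)
    f = lambda a, b: loglik(a, b, xs)
    j_mm = -(f(mu + h, s2) - 2.0 * f(mu, s2) + f(mu - h, s2)) / h ** 2
    j_ss = -(f(mu, s2 + h) - 2.0 * f(mu, s2) + f(mu, s2 - h)) / h ** 2
    j_ms = -(f(mu + h, s2 + h) - f(mu + h, s2 - h)
             - f(mu - h, s2 + h) + f(mu - h, s2 - h)) / (4.0 * h ** 2)
    return j_mm * j_ss - j_ms ** 2

xs = [4.1, 5.3, 3.8, 6.0, 5.1, 4.7]  # hypothetical sample
print(closed_form_det(xs), finite_difference_det(xs))
```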

If the parameter of interest is the mean parameter, $\psi(\theta) = \mu$, it is well known that the exact p-value function of $\mu$ is

$$p(\mu) = F_{t_{n-1}}(t), \tag{3.4}$$

where $F_{t_{n-1}}(\cdot)$ is the cumulative distribution function of the Student $t$ distribution with $(n-1)$ degrees of freedom and

$$t = \frac{\bar x - \mu}{s/\sqrt{n}}. \tag{3.5}$$

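By applying the method discussed in Section 2, we have the constrained maximum likelihood estimate of $\theta$,

$$\hat\theta_\mu = (\mu, \hat\sigma_\mu^2)' = \left(\mu, \frac{\sum (x_i - \mu)^2}{n}\right)' \quad\text{and}\quad |j_{\sigma^2\sigma^2}(\hat\theta_\mu)| = \frac{n}{2\hat\sigma_\mu^4}. \tag{3.6}$$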

The signed log-likelihood ratio statistic can then be simplified to

$$r(\mu) = \mathrm{sgn}(\bar x - \mu)\left\{n \log\left(1 + \frac{t^2}{n-1}\right)\right\}^{1/2}. \tag{3.7}$$
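The simplification leading to (3.7) can be traced in a few lines. The following is a sketch that uses only the estimates in (3.3) and (3.6), the log-likelihood (3.1), and the definition of $t$ in (3.5). Evaluating (3.1) at the two estimates gives

$$\ell(\hat\theta) = -\frac{n}{2}\log\hat\sigma^2 - \frac{n}{2}, \qquad \ell(\hat\theta_\mu) = -\frac{n}{2}\log\hat\sigma_\mu^2 - \frac{n}{2},$$

so that $2[\ell(\hat\theta) - \ell(\hat\theta_\mu)] = n \log(\hat\sigma_\mu^2/\hat\sigma^2)$. Since $\sum (x_i - \mu)^2 = \sum (x_i - \bar x)^2 + n(\bar x - \mu)^2$, we have $\hat\sigma_\mu^2 = \hat\sigma^2 + (\bar x - \mu)^2$. With $\hat\sigma^2 = (n-1)s^2/n$ and $(\bar x - \mu)^2 = t^2 s^2/n$,

$$\frac{\hat\sigma_\mu^2}{\hat\sigma^2} = 1 + \frac{t^2 s^2/n}{(n-1)s^2/n} = 1 + \frac{t^2}{n-1},$$

and substituting this ratio into (2.7) yields the expression for $r(\mu)$.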

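Moreover, with

$$\varphi_\theta(\theta) = (\varphi_\mu(\theta), \varphi_{\sigma^2}(\theta)) = \begin{pmatrix} 0 & -\dfrac{1}{\sigma^4} \\ \dfrac{1}{\sigma^2} & -\dfrac{\mu}{\sigma^4} \end{pmatrix}, \tag{3.8}$$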

$Q(\mu)$ can be simplified to

$$Q(\mu) = \sqrt{n(n-1)}\left[\frac{t}{(n-1) + t^2}\right] \tag{3.9}$$
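The closed form (3.9) can be checked numerically against the general recipe (2.11) to (2.14). The sketch below assembles $Q(\mu)$ step by step from (3.3), (3.6) and (3.8) and compares it with (3.9). The function names and the sample are hypothetical, and the row of $\varphi_\theta^{-1}$ used for $\psi_\varphi$, namely $(-\mu\sigma^2, \sigma^2)$, was obtained by inverting the matrix in (3.8) by hand.

```python
import math

def q_mu_general(xs, mu):
    """Q(mu) built from (2.11)-(2.14) for the normal model."""
    n = len(xs)
    xbar = sum(xs) / n
    s2_hat = sum((x - xbar) ** 2 for x in xs) / n   # unconstrained MLE of sigma^2
    s2_til = sum((x - mu) ** 2 for x in xs) / n     # constrained MLE of sigma^2 given mu
    det_j_theta = n ** 2 / (2.0 * s2_hat ** 3)      # (3.3)
    j_lam = n / (2.0 * s2_til ** 2)                 # (3.6)
    det_phi_theta = 1.0 / s2_hat ** 3               # determinant of (3.8) at theta_hat
    det_j_phi = det_j_theta / det_phi_theta ** 2    # (2.11)
    # phi_lambda: derivative of phi with respect to sigma^2, at the constrained MLE.
    phi_lam = (-1.0 / s2_til ** 2, -mu / s2_til ** 2)
    j_lam_phi = j_lam / (phi_lam[0] ** 2 + phi_lam[1] ** 2)   # (2.12)
    # psi_phi: row of the inverse of phi_theta that corresponds to mu.
    psi_phi = (-mu * s2_til, s2_til)
    length = math.hypot(psi_phi[0], psi_phi[1])

    def chi(m, s2):
        # (2.13) applied to phi(theta) = (1/sigma^2, mu/sigma^2)'.
        return (psi_phi[0] * (1.0 / s2) + psi_phi[1] * (m / s2)) / length

    departure = abs(chi(xbar, s2_hat) - chi(mu, s2_til))
    return math.copysign(departure * math.sqrt(det_j_phi / j_lam_phi), xbar - mu)  # (2.14)

def q_mu_closed(xs, mu):
    """Q(mu) from the closed form (3.9)."""
    n = len(xs)
    xbar = sum(xs) / n
    s2 = sum((x - xbar) ** 2 for x in xs) / (n - 1)
    t = (xbar - mu) / math.sqrt(s2 / n)
    return math.sqrt(n * (n - 1)) * t / ((n - 1) + t * t)

xs = [4.1, 5.3, 3.8, 6.0, 5.1, 4.7]  # hypothetical sample
print(q_mu_general(xs, 4.0), q_mu_closed(xs, 4.0))
```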

and $r^*(\mu)$ can be obtained from (2.9). Thus the p-value function of $\mu$, or equivalently the cumulative distribution function of the Student $t$ distribution with $(n-1)$ degrees of freedom, can be approximated by $\Phi(r^*(\mu))$.

Finally, by re-indexing the above result, the cumulative distribution function for the Student $t$ distribution with $\nu$ degrees of freedom can be approximated by using the Barndorff-Nielsen formula

$$F_{t_\nu}(t) = \Phi\left(r + \frac{1}{r}\log\frac{Q}{r}\right), \tag{3.10}$$

or by using the asymptotically equivalent Lugannani and Rice formula

$$F_{t_\nu}(t) = \Phi(r) + \phi(r)\left\{\frac{1}{r} - \frac{1}{Q}\right\}, \tag{3.11}$$

where

$$r = \mathrm{sgn}(t)\left\{(\nu + 1)\log\left(1 + \frac{t^2}{\nu}\right)\right\}^{1/2}$$

and

$$Q = \sqrt{\nu(\nu + 1)}\left(\frac{t}{\nu + t^2}\right),$$

with $O(n^{-3/2})$ accuracy.
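Formulas (3.10) and (3.11) translate directly into code. The following is a minimal sketch using only the Python standard library. The function names are hypothetical, and returning 0.5 at $t = 0$ is an assumption based on the symmetry of the $t$ distribution, since both formulas are of the form 0/0 there.

```python
import math

def norm_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def norm_pdf(z):
    """Standard normal probability density function."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def t_r_and_q(t, nu):
    """The quantities r and Q that enter (3.10) and (3.11)."""
    r = math.copysign(math.sqrt((nu + 1) * math.log1p(t * t / nu)), t)
    q = math.sqrt(nu * (nu + 1)) * t / (nu + t * t)
    return r, q

def t_cdf_bn(t, nu):
    """Barndorff-Nielsen approximation (3.10) to the Student t cdf."""
    if t == 0:
        return 0.5  # assumed from symmetry; the formula itself is 0/0 here
    r, q = t_r_and_q(t, nu)
    return norm_cdf(r + math.log(q / r) / r)

def t_cdf_lr(t, nu):
    """Lugannani and Rice approximation (3.11) to the Student t cdf."""
    if t == 0:
        return 0.5  # assumed from symmetry; the formula itself is 0/0 here
    r, q = t_r_and_q(t, nu)
    return norm_cdf(r) + norm_pdf(r) * (1.0 / r - 1.0 / q)

# Survivor function 1 - F(t) for 5 degrees of freedom at a few values of t.
for t in (1.5, 2.6667, 6.0849):
    print(t, round(1.0 - t_cdf_bn(t, 5), 4), round(1.0 - t_cdf_lr(t, 5), 4))
```

The values of $t$ in the loop are taken from Table 1, so the printed survivor probabilities can be compared with the BN and LR columns there.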

Consider now the case of a random sample $x = (x_1, \ldots, x_n)$ from the normal distribution with mean 0 and variance $\sigma^2$ for which the parameter of interest is the variance parameter, $\psi(\theta) = \sigma^2$. A convenient canonical parameter is $\varphi(\theta) = 1/\sigma^2$. The exact p-value function of $\sigma^2$ is given by

$$p(\sigma^2) = F_{\chi^2_n}(x^2), \tag{3.12}$$

where $F_{\chi^2_n}(\cdot)$ is the cumulative distribution function of the $\chi^2$ distribution with $n$ degrees of freedom and

$$x^2 = \frac{\sum_{i=1}^{n} x_i^2}{\sigma^2}. \tag{3.13}$$

By applying the method in Section 2, we can obtain

$$r(\sigma^2) = \mathrm{sgn}(x^2 - n)\left[(x^2 - n) + n\log\frac{n}{x^2}\right]^{1/2} \tag{3.14}$$

$$Q(\sigma^2) = \frac{x^2 - n}{\sqrt{2n}} \tag{3.15}$$
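For readers who want to see where these two expressions come from, here is a short sketch of the calculation, assuming the log-likelihood $\ell(\sigma^2) = -\frac{n}{2}\log\sigma^2 - \frac{1}{2\sigma^2}\sum x_i^2$ of the mean-zero normal model. The maximum likelihood estimate is $\hat\sigma^2 = \sum x_i^2/n$, so that $x^2 = n\hat\sigma^2/\sigma^2$ and

$$2[\ell(\hat\sigma^2) - \ell(\sigma^2)] = -n\log\frac{\hat\sigma^2}{\sigma^2} - n + x^2 = (x^2 - n) + n\log\frac{n}{x^2}.$$

In the canonical scale $\varphi = 1/\sigma^2$, the log-likelihood is $\frac{n}{2}\log\varphi - \frac{\varphi}{2}\sum x_i^2$, with observed information $j_{\varphi\varphi}(\hat\varphi) = n/(2\hat\varphi^2) = n\hat\sigma^4/2$. There is no nuisance parameter, so

$$Q(\sigma^2) = \mathrm{sgn}(\hat\sigma^2 - \sigma^2)\,|\hat\varphi - \varphi|\,\{j_{\varphi\varphi}(\hat\varphi)\}^{1/2} = \mathrm{sgn}(x^2 - n)\left|1 - \frac{x^2}{n}\right|\sqrt{\frac{n}{2}} = \frac{x^2 - n}{\sqrt{2n}}.$$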

and $r^*(\sigma^2)$ can be obtained from (2.9). Thus the p-value function of $\sigma^2$, or equivalently the cumulative distribution function of the $\chi^2$ distribution with $n$ degrees of freedom, can be approximated by $\Phi(r^*(\sigma^2))$.

In other words, the cumulative distribution function of the $\chi^2$ distribution with $\nu$ degrees of freedom can be approximated by using the Barndorff-Nielsen formula

$$F_{\chi^2_\nu}(x^2) = \Phi\left(r + \frac{1}{r}\log\frac{Q}{r}\right), \tag{3.16}$$

or by using the asymptotically equivalent Lugannani and Rice formula

$$F_{\chi^2_\nu}(x^2) = \Phi(r) + \phi(r)\left\{\frac{1}{r} - \frac{1}{Q}\right\}, \tag{3.17}$$

where

$$r = \mathrm{sgn}(x^2 - \nu)\left[(x^2 - \nu) + \nu\log\frac{\nu}{x^2}\right]^{1/2} \tag{3.18}$$

and

$$Q = \frac{x^2 - \nu}{\sqrt{2\nu}} \tag{3.19}$$

with $O(n^{-3/2})$ accuracy.
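A minimal sketch of (3.16) to (3.19) follows, again using only the Python standard library. The function names are hypothetical. The sketch raises an error at $x^2 = \nu$, where $r = Q = 0$ and both formulas are of the form 0/0. For comparison it uses the case $\nu = 2$, where the exact $\chi^2$ cumulative distribution function has the closed form $1 - e^{-x^2/2}$.

```python
import math

def norm_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def norm_pdf(z):
    """Standard normal probability density function."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def chi2_r_and_q(x2, nu):
    """The quantities r and Q of (3.18) and (3.19)."""
    if x2 <= 0 or x2 == nu:
        raise ValueError("this sketch needs x2 > 0 and x2 != nu")
    r = math.copysign(math.sqrt((x2 - nu) + nu * math.log(nu / x2)), x2 - nu)
    q = (x2 - nu) / math.sqrt(2.0 * nu)
    return r, q

def chi2_cdf_bn(x2, nu):
    """Barndorff-Nielsen approximation (3.16) to the chi-square cdf."""
    r, q = chi2_r_and_q(x2, nu)
    return norm_cdf(r + math.log(q / r) / r)

def chi2_cdf_lr(x2, nu):
    """Lugannani and Rice approximation (3.17) to the chi-square cdf."""
    r, q = chi2_r_and_q(x2, nu)
    return norm_cdf(r) + norm_pdf(r) * (1.0 / r - 1.0 / q)

# Compare with the exact cdf for nu = 2, which is 1 - exp(-x2 / 2).
for x2 in (0.5, 3.0, 8.0):
    exact = 1.0 - math.exp(-x2 / 2.0)
    print(x2, round(exact, 4), round(chi2_cdf_bn(x2, 2), 4), round(chi2_cdf_lr(x2, 2), 4))
```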

Note that since the cumulant generating function of the chi-square distribution is known, we can directly apply the saddlepoint method and the Lugannani and Rice formula to obtain the following results:

$$r_{SP} = \mathrm{sgn}\left(\frac{x^2 - \nu}{2x^2}\right)\left[(x^2 - \nu) + \nu\log\frac{\nu}{x^2}\right]^{1/2} \tag{3.20}$$

$$Q_{SP} = \frac{x^2 - \nu}{\sqrt{2\nu}} \tag{3.21}$$

and

$$F_{\chi^2_\nu}(x^2) = \Phi(r_{SP}) + \phi(r_{SP})\left\{\frac{1}{r_{SP}} - \frac{1}{Q_{SP}}\right\}. \tag{3.22}$$

Interestingly, the $Q$ given in (3.19) is identical to the $Q_{SP}$ given in (3.21). Moreover, since $x^2 > 0$, we have $\mathrm{sgn}(x^2 - \nu) = \mathrm{sgn}\left(\frac{x^2 - \nu}{2x^2}\right)$, which implies that the $r$ given in (3.18) is also identical to the $r_{SP}$ given in (3.20). Thus for approximating the cumulative distribution function of the $\chi^2$ distribution, the proposed method and the direct saddlepoint method give exactly the same results.
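The equivalence can also be checked numerically by running the saddlepoint recipe from scratch. The sketch below assumes the standard cumulant generating function of the $\chi^2_\nu$ distribution, $K(s) = -\frac{\nu}{2}\log(1 - 2s)$ for $s < 1/2$, solves the saddlepoint equation $K'(\hat s) = x^2$ in closed form, and uses the usual saddlepoint quantities $r_{SP} = \mathrm{sgn}(\hat s)\{2[\hat s x^2 - K(\hat s)]\}^{1/2}$ and $Q_{SP} = \hat s\{K''(\hat s)\}^{1/2}$. The function names are hypothetical.

```python
import math

def chi2_saddlepoint_r_q(x2, nu):
    """r_SP and Q_SP computed from the chi-square cumulant generating function."""
    s_hat = (x2 - nu) / (2.0 * x2)                # solves K'(s) = nu / (1 - 2s) = x2
    k = -(nu / 2.0) * math.log(1.0 - 2.0 * s_hat) # K(s_hat)
    k2 = 2.0 * nu / (1.0 - 2.0 * s_hat) ** 2      # K''(s_hat)
    r_sp = math.copysign(math.sqrt(2.0 * (s_hat * x2 - k)), s_hat)
    q_sp = s_hat * math.sqrt(k2)
    return r_sp, q_sp

def chi2_likelihood_r_q(x2, nu):
    """r and Q from (3.18) and (3.19)."""
    r = math.copysign(math.sqrt((x2 - nu) + nu * math.log(nu / x2)), x2 - nu)
    q = (x2 - nu) / math.sqrt(2.0 * nu)
    return r, q

for x2, nu in ((2.0, 5), (12.0, 5), (0.3, 1)):
    print(chi2_saddlepoint_r_q(x2, nu), chi2_likelihood_r_q(x2, nu))
```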

4 Numerical comparisons

To illustrate the accuracy of our proposed method, we compare it with some recent approximations. Jing et al. (2004) applied the saddlepoint method, without using the moment generating functions, to approximate the cumulative distribution function of the Student $t$ distribution. While their approximation is based on the Lugannani and Rice result, the exact form of their result is very complicated. They provide numerical results comparing their approximation with the exact Student $t$ distribution with 5 degrees of freedom. Their results are reported for the survivor function rather than the cumulative distribution function. Table 1 contains the results from Jing et al. (2004) and the results of our approximations using both the Barndorff-Nielsen (BN) and Lugannani and Rice (LR) formulas given by equations (3.10) and (3.11), respectively. In Figure 1 we plot the relative errors of the three approximations. From Table 1 and Figure 1, we observe that the Jing et al. (2004) method and our methods are almost indistinguishable around the center of the distribution, but our approximations are much better towards the tail of the distribution, which is crucial for inference purposes. Interestingly, the Lugannani and Rice formula seems to provide a better approximation than the Barndorff-Nielsen one. In Figure 2 we plot our proposed approximations for the extreme case of the Student $t$ distribution with 1 degree of freedom. Even in this extreme case our approximations are remarkably accurate, especially the Lugannani and Rice approximation.

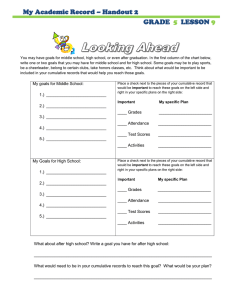

Table 1: Comparisons of exact and approximate values for $1 - F_{t_5}(t)$

| t | Exact | Jing | BN | LR |
|--------|--------|--------|--------|--------|
| 0.1001 | 0.4620 | 0.4621 | 0.4618 | 0.4618 |
| 0.2010 | 0.4243 | 0.4244 | 0.4238 | 0.4238 |
| 0.3034 | 0.3869 | 0.3872 | 0.3861 | 0.3861 |
| 0.4082 | 0.3500 | 0.3505 | 0.3489 | 0.3490 |
| 0.5164 | 0.3138 | 0.3146 | 0.3125 | 0.3126 |
| 0.6290 | 0.2785 | 0.2797 | 0.2771 | 0.2771 |
| 0.7473 | 0.2443 | 0.2460 | 0.2427 | 0.2427 |
| 0.8729 | 0.2113 | 0.2136 | 0.2097 | 0.2097 |
| 1.0078 | 0.1799 | 0.1829 | 0.1782 | 0.1783 |
| 1.1547 | 0.1502 | 0.1539 | 0.1485 | 0.1486 |
| 1.3171 | 0.1225 | 0.1268 | 0.1208 | 0.1209 |
| 1.5000 | 0.0970 | 0.1010 | 0.0954 | 0.0955 |
| 1.7107 | 0.0739 | 0.0793 | 0.0725 | 0.0727 |
| 1.9604 | 0.0536 | 0.0592 | 0.0524 | 0.0525 |
| 2.2678 | 0.0363 | 0.0417 | 0.0353 | 0.0355 |
| 2.6667 | 0.0223 | 0.0271 | 0.0215 | 0.0217 |
| 3.2271 | 0.0116 | 0.0154 | 0.0112 | 0.0113 |
| 4.1295 | 0.0045 | 0.0070 | 0.0043 | 0.0044 |
| 6.0849 | 0.0009 | 0.0018 | 0.0008 | 0.0008 |
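The relative errors discussed in connection with Figure 1 can be recomputed from the tabulated values. The sketch below uses three rows of Table 1 and assumes that relative error means the absolute difference from the exact value, divided by the exact value and expressed as a percentage. That definition is an assumption, since the text does not spell it out. Because the table is rounded to four decimals, the percentages in the far tail are coarse.

```python
def relative_error_pct(approx, exact):
    """Absolute relative error of approx with respect to exact, in percent."""
    return 100.0 * abs(approx - exact) / exact

# Selected rows of Table 1: (t, exact, Jing, BN, LR) for the survivor function.
table_rows = [
    (1.5000, 0.0970, 0.1010, 0.0954, 0.0955),
    (2.6667, 0.0223, 0.0271, 0.0215, 0.0217),
    (6.0849, 0.0009, 0.0018, 0.0008, 0.0008),
]

for t, exact, jing, bn, lr in table_rows:
    errors = [round(relative_error_pct(a, exact), 1) for a in (jing, bn, lr)]
    print(t, errors)
```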

Lin (1988) provides a very simple approximation to the cumulative distribution function of the chi-square distribution. Figures 3 and 4 plot approximations to the cumulative distribution function $F_{\chi^2_\nu}(x^2)$ for $\nu = 5$ and $\nu = 1$, respectively. We observe that the Lin (1988) approximation is not at all satisfactory. We also observe that, even for the extreme case of the $\chi^2$ distribution with 1 degree of freedom, the proposed approximations are remarkably accurate.

[Figure 1: Relative error for approximations to $1 - F_{t_5}(t)$. Vertical axis: relative error (percentage); horizontal axis: t; curves: Jing, BN, LR.]

[Figure 2: Approximations to $F_{t_1}(t)$. Vertical axis: cdf for t with 1 df; horizontal axis: t; curves: Exact, BN, LR.]

[Figure 3: Approximations to $F_{\chi^2_5}(\chi^2)$. Vertical axis: cdf for chi-square with 5 df; horizontal axis: x2; curves: Exact, BN, LR, Lin.]

[Figure 4: Approximations to $F_{\chi^2_1}(\chi^2)$. Vertical axis: cdf for chi-square with 1 df; horizontal axis: x2; curves: Exact, BN, LR, Lin.]

5 Conclusion

When the likelihood-based asymptotic method is applied to the one-sample normal problem, the results can easily be transformed to produce simple yet highly accurate normal approximations to the cumulative distribution functions of the $t$ and $\chi^2$ distributions. These approximations have known $O(n^{-3/2})$ accuracy, and the resulting expressions are remarkably simple.

References

[1] Barndorff-Nielsen, O., 1986, Inference on Full or Partial Parameters Based on the Standardized Signed Log Likelihood Ratio, Biometrika 73, 307-322.

[2] Barndorff-Nielsen, O., 1991, Modified Signed Log-Likelihood Ratio, Biometrika 78, 557-563.

[3] Fraser, D., 1990, Tail Probabilities from Observed Likelihood, Biometrika 77, 65-76.

[4] Jing, B.Y., Shao, Q.M., and Zhou, W., 2004, Saddlepoint Approximation for Student t-Statistic With No Moment Conditions, Annals of Statistics 32, 2679-2711.

[5] Lin, J.T., 1988, Approximating the Cumulative Chi-square Distribution and Its Inverse, The Statistician 37, 3-5.

[6] Lugannani, R., and Rice, S., 1980, Saddlepoint Approximation for the Distribution of the Sums of Independent Random Variables, Advances in Applied Probability 12, 475-490.

[7] Reid, N., 2003, Asymptotics and the Theory of Inference, Annals of Statistics 31, 1695-1731.


Contact Addresses:

Marie Rekkas

Department of Economics

Simon Fraser University

8888 University Drive

Burnaby, BC V5A 1S6

Canada

Y. She

Department of Mathematics and Statistics

York University

4700 Keele Street

Toronto, ON M3J 1P3

Canada

Ye Sun

Department of Statistics

University of Toronto

100 St. George Street

Toronto, ON M5S 1G1

Canada

Augustine Wong (Corresponding Author)

Department of Mathematics and Statistics

York University

4700 Keele Street

Toronto, ON M3J 1P3

Canada

Rekkas and Wong gratefully acknowledge the support of the Natural Sciences and Engineering Research Council of Canada.
