Sparse 3D convolutional neural networks


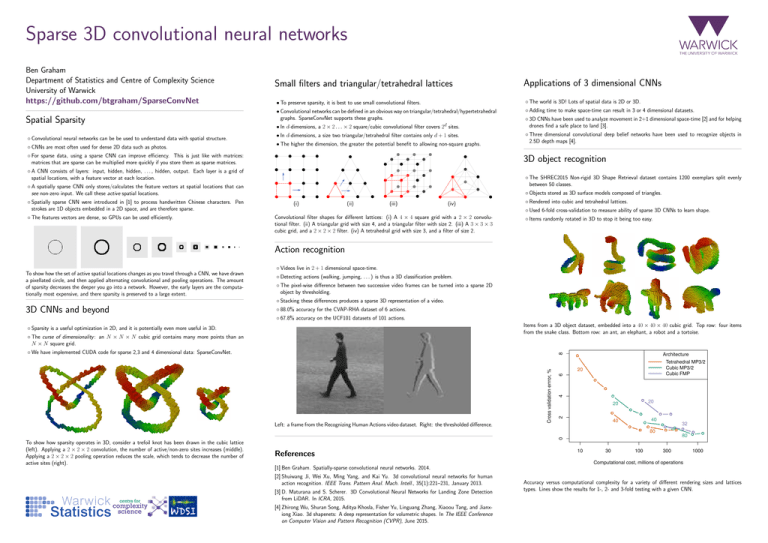

Ben Graham
Department of Statistics and Centre of Complexity Science, University of Warwick
https://github.com/btgraham/SparseConvNet

Spatial Sparsity
◦ Convolutional neural networks can be used to understand data with spatial structure.
◦ CNNs are most often used for dense 2D data such as photos.
◦ Spatially sparse CNNs were introduced in [1] to process handwritten Chinese characters. Pen strokes are 1D objects embedded in a 2D space, and are therefore sparse.
◦ For sparse data, using a sparse CNN can improve efficiency. This is just like with matrices: sparse matrices can be multiplied more quickly if they are stored in a sparse format.
◦ A CNN consists of layers: input, hidden, hidden, . . . , hidden, output. Each layer is a grid of spatial locations, with a feature vector at each location.
◦ A spatially sparse CNN only stores and calculates the feature vectors at spatial locations that can see non-zero input. We call these active spatial locations.
◦ The feature vectors themselves are dense, so GPUs can be used efficiently.

To show how the set of active spatial locations changes as you travel through a CNN, we have drawn a pixellated circle and then applied alternating convolutional and pooling operations. Sparsity decreases the deeper you go into the network. However, the early layers are the most computationally expensive, and there sparsity is largely preserved.

Applications of 3 dimensional CNNs
◦ The world is 3D! Lots of spatial data is 2D or 3D.
◦ Adding time to make space-time can result in 3 or 4 dimensional datasets.
◦ Three dimensional convolutional deep belief networks have been used to recognize objects in 2.5D depth maps [4].
◦ 3D CNNs have been used to analyze movement in 2+1 dimensional space-time [2] and to help drones find a safe place to land [3].

Small filters and triangular/tetrahedral lattices
• To preserve sparsity, it is best to use small convolutional filters.
• Convolutional networks can be defined in an obvious way on triangular/tetrahedral/hypertetrahedral graphs. SparseConvNet supports these graphs.
• In d dimensions, a 2 × 2 × . . . × 2 square/cubic convolutional filter covers 2^d sites.
• In d dimensions, a size-two triangular/tetrahedral filter contains only d + 1 sites (for d = 3, that is 4 sites instead of 8).
• The higher the dimension, the greater the potential benefit of allowing non-square graphs.

Convolutional filter shapes for different lattices: (i) a 4 × 4 square grid with a 2 × 2 convolutional filter; (ii) a triangular grid of size 4 with a triangular filter of size 2; (iii) a 3 × 3 × 3 cubic grid with a 2 × 2 × 2 filter; (iv) a tetrahedral grid of size 3 with a filter of size 2.

3D object recognition
◦ The SHREC2015 Non-rigid 3D Shape Retrieval dataset contains 1200 exemplars split evenly between 50 classes (24 per class).
◦ Objects are stored as 3D surface models composed of triangles.
◦ They are rendered into cubic and tetrahedral lattices.
◦ Items are randomly rotated in 3D so that the task is not too easy.
◦ 6-fold cross-validation is used to measure the ability of sparse 3D CNNs to learn shape.

Items from a 3D object dataset, embedded into a 40 × 40 × 40 cubic grid. Top row: four items from the snake class. Bottom row: an ant, an elephant, a robot and a tortoise.

[Plot: cross-validation error (%) against computational cost (millions of operations, 10 to 1000) for three architectures: Tetrahedral MP3/2, Cubic MP3/2 and Cubic FMP.] Accuracy versus computational complexity for a variety of rendering sizes and lattice types. Lines show the results for 1-, 2- and 3-fold testing with a given CNN.

Action recognition
◦ Videos live in 2 + 1 dimensional space-time.
◦ Detecting actions (walking, jumping, . . . ) is thus a 3D classification problem.
◦ The pixel-wise difference between two successive video frames can be turned into a sparse 2D object by thresholding.
◦ Stacking these differences produces a sparse 3D representation of a video.
◦ 88.0% accuracy on the CVAP-RHA dataset of 6 actions.
◦ 67.8% accuracy on the UCF101 dataset of 101 actions.

Left: a frame from the Recognizing Human Actions video dataset. Right: the thresholded difference.

3D CNNs and beyond
◦ Sparsity is a useful optimization in 2D, and it is potentially even more useful in 3D.
◦ The curse of dimensionality: an N × N × N cubic grid contains N^3 points, N times as many as the N^2 points of an N × N square grid.
◦ We have implemented CUDA code for sparse 2, 3 and 4 dimensional data: SparseConvNet.

To show how sparsity operates in 3D, consider a trefoil knot drawn in the cubic lattice (left). Applying a 2 × 2 × 2 convolution increases the number of active (non-zero) sites (middle). Applying a 2 × 2 × 2 pooling operation reduces the scale, which tends to decrease the number of active sites (right).

References
[1] Ben Graham. Spatially-sparse convolutional neural networks. 2014.
[2] Shuiwang Ji, Wei Xu, Ming Yang, and Kai Yu. 3D convolutional neural networks for human action recognition. IEEE Trans. Pattern Anal. Mach. Intell., 35(1):221–231, January 2013.
[3] D. Maturana and S. Scherer. 3D convolutional neural networks for landing zone detection from LiDAR. In ICRA, 2015.
[4] Zhirong Wu, Shuran Song, Aditya Khosla, Fisher Yu, Linguang Zhang, Xiaoou Tang, and Jianxiong Xiao. 3D ShapeNets: A deep representation for volumetric shapes. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2015.
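The Spatial Sparsity bullets say that a sparse CNN only computes feature vectors at active spatial locations. The following minimal Python/NumPy sketch illustrates that idea and is not SparseConvNet's CUDA implementation. The dictionary-of-active-sites layout, the hypothetical function names `sparse_conv` and `dense_conv`, and the choice of a valid, stride-1 filter of size 2 are all assumptions made for the example. An output site becomes active whenever its filter window sees at least one active input, and the result is checked against an ordinary dense convolution.

```python
import itertools
import numpy as np

def sparse_conv(active, grid_size, weights, filter_size=2):
    """Valid, stride-1 convolution evaluated only at active output sites.

    active:  dict mapping a coordinate tuple to an input feature vector [n_in].
    weights: array of shape [filter_size]*d + [n_out, n_in]; weights[o] is the
             matrix applied to the input at offset o inside the filter window.
    Returns a dict mapping each active output site to its feature vector.
    """
    d = len(grid_size)
    out_size = [n - filter_size + 1 for n in grid_size]
    offsets = list(itertools.product(range(filter_size), repeat=d))
    out = {}
    for x, feat in active.items():
        for o in offsets:
            y = tuple(xi - oi for xi, oi in zip(x, o))
            # Keep y only if a filter placed at y lies inside the input grid.
            if all(0 <= yi < m for yi, m in zip(y, out_size)):
                contrib = weights[o] @ feat
                out[y] = out[y] + contrib if y in out else contrib
    return out

def dense_conv(x, weights, filter_size=2):
    """Reference dense convolution; x has shape grid_size + (n_in,)."""
    grid_size = x.shape[:-1]
    out_size = tuple(n - filter_size + 1 for n in grid_size)
    y = np.zeros(out_size + (weights.shape[-2],))
    for idx in itertools.product(*(range(m) for m in out_size)):
        for o in itertools.product(range(filter_size), repeat=len(grid_size)):
            src = tuple(i + oi for i, oi in zip(idx, o))
            y[idx] += weights[o] @ x[src]
    return y

# A 6 x 6 image with three active "pen stroke" pixels and 3 input features.
rng = np.random.default_rng(0)
N, n_in, n_out = 6, 3, 4
dense_in = np.zeros((N, N, n_in))
active = {}
for site in [(1, 1), (1, 2), (4, 3)]:
    v = rng.standard_normal(n_in)
    dense_in[site] = v
    active[site] = v
w = rng.standard_normal((2, 2, n_out, n_in))

sparse_out = sparse_conv(active, (N, N), w)
dense_out = dense_conv(dense_in, w)
print(f"{len(sparse_out)} of {dense_out.shape[0] * dense_out.shape[1]} output sites are active")
# → 10 of 25 output sites are active
```

The sparse version loops over active inputs rather than over the whole grid, so its cost scales with the number of active sites. The per-site work is still a dense matrix-vector product, which is the part that maps well onto a GPU.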
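The site counts in the Small filters section can be checked by enumerating filter offsets. The sketch below assumes a coordinatization that the poster does not specify. Triangular/tetrahedral lattice points are written in skewed integer coordinates, so that a size-s simplex is the set of non-negative integer vectors whose entries sum to at most s - 1. The helper names `cubic_sites` and `simplex_sites` are hypothetical.

```python
import itertools

def cubic_sites(d, size=2):
    """Sites of a size x ... x size hypercube (a cubic filter or grid)."""
    return list(itertools.product(range(size), repeat=d))

def simplex_sites(d, size=2):
    """Sites of a size-`size` triangular/tetrahedral filter or grid.

    ASSUMPTION: lattice points use skewed integer coordinates, so the simplex
    is {o in Z^d : every o_i >= 0 and sum(o) <= size - 1}.
    """
    return [o for o in itertools.product(range(size), repeat=d)
            if sum(o) <= size - 1]

for d in range(1, 5):
    # Size-2 filters: 2**d sites on the cubic lattice, d + 1 on the simplex lattice.
    print(f"d={d}: cubic {len(cubic_sites(d))}, tetrahedral {len(simplex_sites(d))}")
```

Under the same assumption, the triangular grid of size 4 and the tetrahedral grid of size 3 from the filter-shape caption each contain 10 sites (`simplex_sites(2, 4)` and `simplex_sites(3, 3)`). By comparison, the 4 × 4 square grid has 16 sites and the 3 × 3 × 3 cubic grid has 27.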
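The 3D object recognition bullets mention 6-fold cross-validation on 1200 exemplars split evenly between 50 classes. One way to build such folds is sketched below in Python. Stratifying by class is an assumption, since the poster does not say how the folds were formed, and `stratified_folds` is a hypothetical helper. With 24 exemplars per class, every fold receives exactly 4 items of each class, so each round trains on 1000 items and tests on 200.

```python
import random
from collections import Counter

def stratified_folds(labels, k, seed=0):
    """Return a fold index in range(k) for every example, spreading each class
    as evenly as possible across the k folds (stratification is an assumption)."""
    rng = random.Random(seed)
    by_class = {}
    for i, c in enumerate(labels):
        by_class.setdefault(c, []).append(i)
    fold_of = [0] * len(labels)
    for members in by_class.values():
        rng.shuffle(members)
        for j, i in enumerate(members):
            fold_of[i] = j % k
    return fold_of

# SHREC2015-sized toy labels: 50 classes x 24 exemplars = 1200 items.
labels = [c for c in range(50) for _ in range(24)]
folds = stratified_folds(labels, k=6)
for f in range(6):
    test_idx = [i for i, g in enumerate(folds) if g == f]
    train_idx = [i for i, g in enumerate(folds) if g != f]
    # Train on train_idx and evaluate on test_idx here.
    print(f"fold {f}: {len(train_idx)} train, {len(test_idx)} test")

print(Counter(folds))
```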
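The Action recognition bullets describe turning a video into a sparse 3D object by thresholding the differences between successive frames and stacking them. The following minimal NumPy sketch assumes greyscale frames in a (T, H, W) array. The function name `sparse_motion_sites` and the threshold value are illustrative choices, not taken from the poster. In the toy clip, a small square slides across a blank background, so only its leading and trailing edges survive the threshold.

```python
import numpy as np

def sparse_motion_sites(frames, threshold):
    """Turn a video into a sparse set of active (t, y, x) sites.

    frames: array of shape (T, H, W) holding greyscale intensities.
    The pixel-wise difference between successive frames is thresholded, and
    the surviving pixels of all T - 1 difference images are stacked in time.
    """
    frames = np.asarray(frames, dtype=np.float32)  # avoid uint8 wrap-around
    diff = np.abs(np.diff(frames, axis=0))          # shape (T - 1, H, W)
    return np.argwhere(diff > threshold)            # one (t, y, x) row per active site

# Toy video: a 2 x 2 bright square moving one pixel to the right per frame.
T, H, W = 3, 8, 8
video = np.zeros((T, H, W))
for t in range(T):
    video[t, 2:4, t:t + 2] = 1.0

sites = sparse_motion_sites(video, threshold=0.5)
print(len(sites), "active sites out of", (T - 1) * H * W)
# → 8 active sites out of 128
```

The array of coordinates returned here is the kind of input a spatially sparse 3D CNN would consume. A pixel belonging to a stationary object drops out, because its difference is zero.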
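The trefoil-knot and pixellated-circle captions describe how the set of active sites evolves through a network. A convolution dilates the set, and pooling shrinks the grid. The sketch below tracks only the active sites, not any features. It assumes valid size-2 convolutions and non-overlapping 2 × 2 × 2 pooling. The helper names, the standard trefoil parametrization and its scaling into a 40 × 40 × 40 grid are our own choices, so the printed counts will not match the poster's figure. The sketch also makes the curse-of-dimensionality point concrete. A 40 × 40 × 40 grid has 64,000 sites against 1,600 for a 40 × 40 grid, while the knot touches only a small fraction of them.

```python
import itertools
import math

def conv_active(active, grid_size, f=2):
    """Active sites after a valid, stride-1 convolution with an f x ... x f filter.

    Output site y is active if its window {y + o : 0 <= o_i < f} contains an
    active input site. Returns the new active set and the new grid size.
    """
    out_size = tuple(n - f + 1 for n in grid_size)
    offsets = list(itertools.product(range(f), repeat=len(grid_size)))
    out = set()
    for x in active:
        for o in offsets:
            y = tuple(xi - oi for xi, oi in zip(x, o))
            if all(0 <= yi < m for yi, m in zip(y, out_size)):
                out.add(y)
    return out, out_size

def pool_active(active, grid_size, p=2):
    """Active sites after non-overlapping p x ... x p pooling with stride p.

    A pooled site is active if any site in its block is active; blocks that
    would run off the edge of the grid are dropped.
    """
    out_size = tuple(n // p for n in grid_size)
    out = {tuple(xi // p for xi in x) for x in active}
    out = {y for y in out if all(yi < m for yi, m in zip(y, out_size))}
    return out, out_size

# Rasterize a trefoil knot into an N x N x N grid (our own choice of scaling).
N = 40
knot = set()
for k in range(2000):
    t = 2 * math.pi * k / 2000
    point = (math.sin(t) + 2 * math.sin(2 * t),
             math.cos(t) - 2 * math.cos(2 * t),
             -math.sin(3 * t))
    # Map coordinates in [-3.5, 3.5] onto grid indices 0..N-1.
    knot.add(tuple(int((c + 3.5) / 7 * (N - 1)) for c in point))

grid = (N, N, N)
conv_sites, conv_grid = conv_active(knot, grid)
pool_sites, pool_grid = pool_active(conv_sites, conv_grid)
for name, sites, size in [("input", knot, grid),
                          ("2x2x2 conv", conv_sites, conv_grid),
                          ("2x2x2 pool", pool_sites, pool_grid)]:
    total = math.prod(size)
    print(f"{name:10s} {len(sites):5d} active of {total:6d} sites ({len(sites) / total:.2%})")
```

A single active voxel shows the same pattern in miniature. After the convolution it becomes a 2 × 2 × 2 block of 8 active sites, and after pooling it shrinks back to 1.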