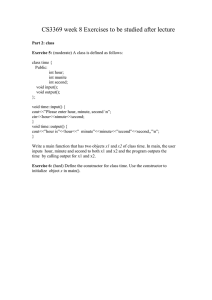

Allocating Memory Ted Baker Andy Wang CIS 4930 / COP 5641

Topics

kmalloc and friends

get_free_page and “friends”

vmalloc and “friends”

Memory usage pitfalls

Linux Memory Manager (1)

Page allocator maintains individual pages

Page allocator

Linux Memory Manager (2)

Zone allocator allocates memory in power-of-two sizes

Zone allocator

Page allocator

Linux Memory Manager (3)

Slab allocator groups allocations by size to reduce internal memory fragmentation

Slab allocator

Zone allocator

Page allocator

kmalloc

Does not clear the memory

Allocates consecutive virtual/physical memory pages

Offset by PAGE_OFFSET

No changes to page tables

Tries its best to fulfill allocation requests

Large memory allocations can degrade system performance significantly

The Flags Argument

kmalloc prototype

#include <linux/slab.h>

void *kmalloc(size_t size, int flags);

GFP_KERNEL is the most commonly used flag

Eventually calls __get_free_pages (the origin of the GFP prefix)

Can put the current process to sleep while waiting for a page in low-memory situations

Cannot be used in atomic context

The Flags Argument

To obtain more memory

Flush dirty buffers to disk

Swapping out memory from user processes

GFP_ATOMIC is used in atomic context

Interrupt handlers, tasklets, and kernel timers

Does not sleep

If the memory is used up, the allocation fails

No flushing and swapping

Other flags are available

Defined in <linux/gfp.h>

The Flags Argument

GFP_USER is used to allocate user pages; it may sleep

GFP_HIGHUSER allocates high memory user pages

GFP_NOIO disallows I/O

GFP_NOFS does not allow making file system calls

Used in file system and virtual memory code

Prevents kmalloc from making recursive calls into file system and virtual memory code

The Flags Argument

Allocation priority flags

Prefixed with __

Used in combination with GFP flags (via ORs)

__GFP_DMA requests allocation to happen in the DMA-capable memory zone

__GFP_HIGHMEM indicates that the allocation may be allocated in high memory

__GFP_COLD requests a cache-cold page, one not used for some time (useful for DMA reads)

__GFP_NOWARN disables printk warnings when an allocation cannot be satisfied

The Flags Argument

__GFP_HIGH marks a high priority request

Not for kmalloc

__GFP_REPEAT

Try harder

__GFP_NOFAIL

Failure is not an option (strongly discouraged)

__GFP_NORETRY

Give up immediately if the requested memory is not available

Memory Zones

DMA-capable memory

Platform dependent

First 16MB of RAM on the x86 for ISA devices

PCI devices have no such limit

Normal memory

High memory

Platform dependent

Memory the kernel cannot map directly on 32-bit platforms

Memory Zones

If __GFP_DMA is specified

Allocation will search only the DMA zone

If nothing is specified

Allocation will search both normal and DMA zones

If __GFP_HIGHMEM is specified

Allocation will search all three zones
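
The three cases above can be sketched as a small lookup in user-space C. This is only an illustration: the SK_ flag and zone bit values and the function zones_searched are made up for the sketch (the real flags live in <linux/gfp.h>), and the sketch assumes the two zone modifiers are never combined.

```c
#include <assert.h>

/* Hypothetical bit values for this sketch, not the kernel's. */
#define SK_GFP_DMA      0x1u
#define SK_GFP_HIGHMEM  0x2u

#define SK_ZONE_DMA     0x1u
#define SK_ZONE_NORMAL  0x2u
#define SK_ZONE_HIGHMEM 0x4u

/* Returns a bitmask of the zones an allocation may be served from. */
unsigned int zones_searched(unsigned int flags)
{
    /* Assumption of this sketch: the two zone modifiers are not combined. */
    assert(!((flags & SK_GFP_DMA) && (flags & SK_GFP_HIGHMEM)));

    if (flags & SK_GFP_DMA)
        return SK_ZONE_DMA;                      /* DMA zone only */
    if (flags & SK_GFP_HIGHMEM)
        return SK_ZONE_DMA | SK_ZONE_NORMAL | SK_ZONE_HIGHMEM;
    return SK_ZONE_DMA | SK_ZONE_NORMAL;         /* nothing specified */
}
```

Calling zones_searched(0) yields the normal and DMA zones, matching the "nothing is specified" case.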

The Size Argument

Kernel manages physical memory in pages

Needs special management to allocate small memory chunks

Linux creates pools of memory objects in predefined fixed sizes (32-byte, 64-byte, 128-byte memory objects)

Smallest allocation unit for kmalloc is 32 or 64 bytes

Largest portable allocation unit is 128KB
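
A rough user-space sketch of how a request could be rounded up to one of the fixed object sizes. The function size_class and the 32-byte minimum are assumptions for illustration; the actual kmalloc size classes depend on the kernel version and configuration.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical minimum object size; the slides say 32 or 64 bytes. */
#define SK_MIN_OBJECT_SIZE 32u
/* Largest portable request according to the slides: 128KB. */
#define SK_MAX_PORTABLE_SIZE (128u * 1024u)

/* Round a request up to the next power-of-two size class.
 * Illustration only; this is not the kernel's actual size table. */
size_t size_class(size_t request)
{
    size_t cls = SK_MIN_OBJECT_SIZE;

    assert(request > 0 && request <= SK_MAX_PORTABLE_SIZE);
    while (cls < request)
        cls <<= 1;
    return cls;
}
```

Under these assumptions a 100-byte request occupies a 128-byte object, so 28 bytes are lost to internal fragmentation.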

Lookaside Caches (Slab Allocator)

Nothing to do with TLB or hardware caching

Useful for USB and SCSI drivers

Improved performance

To create a cache for a tailored size

#include <linux/slab.h>

kmem_cache_t *kmem_cache_create(const char *name, size_t size,
        size_t offset, unsigned long flags,
        void (*constructor)(void *, kmem_cache_t *, unsigned long flags),
        void (*destructor)(void *, kmem_cache_t *, unsigned long flags));

Lookaside Caches (Slab Allocator)

name: memory cache identifier

Allocated string without blanks

size: allocation unit

offset: starting offset in a page to align memory

Most likely 0

Lookaside Caches (Slab Allocator)

flags: control how the allocation is done

SLAB_NO_REAP

Prevents the system from reducing this memory cache (normally a bad idea)

Obsolete

SLAB_HWCACHE_ALIGN

Requires each data object to be aligned to a cache line

Good option for frequently accessed objects on SMP machines

Potential fragmentation problems

Lookaside Caches (Slab Allocator)

SLAB_CACHE_DMA

Requires each object to be allocated in the DMA zone

See <linux/slab.h> for other flags

constructor: initialize newly allocated objects

destructor: clean up objects before an object is released

Constructor/destructor may not sleep due to atomic context

Lookaside Caches (Slab Allocator)

To allocate a memory object from the memory cache, call

void *kmem_cache_alloc(kmem_cache_t *cache, int flags);

cache: the cache created previously

flags: same flags for kmalloc

Failure rate is rather high

Must check the return value

To free a memory object, call

void kmem_cache_free(kmem_cache_t *cache, const void *obj);

Lookaside Caches (Slab Allocator)

To free a memory cache, call

int kmem_cache_destroy(kmem_cache_t *cache);

Need to check the return value

Failure indicates memory leak

Slab statistics are kept in /proc/slabinfo
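
The create/alloc/free/destroy life cycle can be imitated in user space with a free list of fixed-size objects. This is a simplified sketch, not how the slab allocator is implemented; struct obj_cache and the obj_cache_* functions are hypothetical names, and malloc stands in for the page allocator.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical user-space stand-in for a lookaside cache. */
struct obj_cache {
    size_t obj_size;   /* size of every object in this cache */
    void *free_list;   /* singly linked list of released objects */
    size_t in_use;     /* objects handed out and not yet freed */
};

void obj_cache_init(struct obj_cache *c, size_t obj_size)
{
    /* A free object stores the "next" pointer in its own memory. */
    c->obj_size = obj_size < sizeof(void *) ? sizeof(void *) : obj_size;
    c->free_list = NULL;
    c->in_use = 0;
}

void *obj_cache_alloc(struct obj_cache *c)
{
    void *obj = c->free_list;

    if (obj)
        c->free_list = *(void **)obj;   /* reuse a released object */
    else
        obj = malloc(c->obj_size);      /* grow the cache */
    if (obj)
        c->in_use++;
    return obj;                         /* may be NULL: caller must check */
}

void obj_cache_free(struct obj_cache *c, void *obj)
{
    assert(obj != NULL && c->in_use > 0);
    *(void **)obj = c->free_list;       /* push onto the free list */
    c->free_list = obj;
    c->in_use--;
}

/* Returns 0 on success, -1 if objects are still outstanding (a leak). */
int obj_cache_destroy(struct obj_cache *c)
{
    if (c->in_use != 0)
        return -1;
    while (c->free_list) {
        void *next = *(void **)c->free_list;
        free(c->free_list);
        c->free_list = next;
    }
    return 0;
}
```

Freed objects are kept for reuse rather than returned to the system, which is where the performance gain comes from. The destroy step fails while objects are outstanding, mirroring the "failure indicates memory leak" rule.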

A scull Based on the Slab Caches: scullc

Declare slab cache

kmem_cache_t *scullc_cache;

Create a slab cache in the init function

/* no constructor/destructor */
scullc_cache = kmem_cache_create("scullc", scullc_quantum, 0,
                                 SLAB_HWCACHE_ALIGN, NULL, NULL);
if (!scullc_cache) {
    scullc_cleanup();
    return -ENOMEM;
}

A scull Based on the Slab Caches: scullc

To allocate memory quanta

if (!dptr->data[s_pos]) {
    dptr->data[s_pos] = kmem_cache_alloc(scullc_cache, GFP_KERNEL);
    if (!dptr->data[s_pos])
        goto nomem;
    memset(dptr->data[s_pos], 0, scullc_quantum);
}

To release memory

for (i = 0; i < qset; i++) {
    if (dptr->data[i]) {
        kmem_cache_free(scullc_cache, dptr->data[i]);
    }
}

A scull Based on the Slab Caches: scullc

To destroy the memory cache at module unload time

/* scullc_cleanup: release the cache of our quanta */
if (scullc_cache) {
    kmem_cache_destroy(scullc_cache);
}

Memory Pools

Similar to memory cache

Reserve a pool of memory to guarantee the success of memory allocations

Can be wasteful

To create a memory pool, call

#include <linux/mempool.h>

mempool_t *mempool_create(int min_nr,
                          mempool_alloc_t *alloc_fn,
                          mempool_free_t *free_fn,
                          void *pool_data);

Memory Pools

min_nr is the minimum number of allocation objects

alloc_fn and free_fn are the allocation and freeing functions

typedef void *(mempool_alloc_t)(int gfp_mask, void *pool_data);
typedef void (mempool_free_t)(void *element, void *pool_data);

pool_data is passed to the allocation and freeing functions

Memory Pools

To allow the slab allocator to handle allocation and deallocation, use predefined functions

cache = kmem_cache_create(...);
pool = mempool_create(MY_POOL_MINIMUM, mempool_alloc_slab,
                      mempool_free_slab, cache);

To allocate and deallocate a memory pool object, call

void *mempool_alloc(mempool_t *pool, int gfp_mask);

void mempool_free(void *element, mempool_t *pool);

Memory Pools

To resize the memory pool, call

int mempool_resize(mempool_t *pool, int new_min_nr, int gfp_mask);

To deallocate the memory pool, call

void mempool_destroy(mempool_t *pool);
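
The reserve idea behind memory pools can be shown with a small user-space model. Everything here is hypothetical (struct pool_sketch, the pool_* functions, and the switchable backing allocator); the real mempool code differs, for example it may sleep while waiting for an element to come back.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

#define SK_POOL_MAX 16  /* hypothetical cap on the reserve for this sketch */

typedef void *(*sketch_alloc_fn)(void *pool_data);
typedef void (*sketch_free_fn)(void *element, void *pool_data);

struct pool_sketch {
    void *reserve[SK_POOL_MAX]; /* preallocated elements */
    int nr;                     /* elements currently in the reserve */
    int min_nr;                 /* size the reserve is refilled up to */
    sketch_alloc_fn alloc_fn;
    sketch_free_fn free_fn;
    void *pool_data;
};

/* Hypothetical backing allocator whose failures can be switched on. */
struct backing {
    size_t elem_size;
    int fail;
};

void *backing_alloc(void *pool_data)
{
    struct backing *b = pool_data;
    return b->fail ? NULL : malloc(b->elem_size);
}

void backing_free(void *element, void *pool_data)
{
    (void)pool_data;
    free(element);
}

void pool_destroy(struct pool_sketch *p)
{
    while (p->nr > 0)
        p->free_fn(p->reserve[--p->nr], p->pool_data);
}

/* Fill the reserve up front; returns 0 on success, -1 on failure. */
int pool_create(struct pool_sketch *p, int min_nr, sketch_alloc_fn alloc_fn,
                sketch_free_fn free_fn, void *pool_data)
{
    assert(min_nr > 0 && min_nr <= SK_POOL_MAX);
    p->nr = 0;
    p->min_nr = min_nr;
    p->alloc_fn = alloc_fn;
    p->free_fn = free_fn;
    p->pool_data = pool_data;
    while (p->nr < min_nr) {
        void *e = alloc_fn(pool_data);
        if (!e) {
            pool_destroy(p);
            return -1;
        }
        p->reserve[p->nr++] = e;
    }
    return 0;
}

/* Try the backing allocator first; dip into the reserve only on failure. */
void *pool_alloc(struct pool_sketch *p)
{
    void *e = p->alloc_fn(p->pool_data);
    if (!e && p->nr > 0)
        e = p->reserve[--p->nr];
    return e;
}

/* Refill the reserve before handing memory back to the backing allocator. */
void pool_free(struct pool_sketch *p, void *element)
{
    if (p->nr < p->min_nr)
        p->reserve[p->nr++] = element;
    else
        p->free_fn(element, p->pool_data);
}
```

The reserve sits idle while the backing allocator is healthy, which is why pools can be wasteful.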

get_free_page and Friends

For allocating big chunks of memory, it is more efficient to use a page-oriented allocator

To allocate pages, call

/* returns the address of a zeroed page */
unsigned long get_zeroed_page(unsigned int flags);
/* does not clear the page */
unsigned long __get_free_page(unsigned int flags);
/* allocates multiple physically contiguous pages */
unsigned long __get_free_pages(unsigned int flags, unsigned int order);

get_free_page and Friends

flags

Same as flags for kmalloc

order

Allocate 2^order pages

order = 0 for 1 page

order = 3 for 8 pages

Can use get_order(size) to find out order

Maximum allowed value is about 10 or 11

See /proc/buddyinfo statistics
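
A user-space sketch of the size-to-order computation that get_order performs. The name order_for_size, the 4096-byte page size, and the cap of 11 are assumptions for illustration; the real values are platform dependent.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed values for this sketch; both are platform dependent. */
#define SK_PAGE_SIZE 4096u
#define SK_MAX_ORDER 11u

/* Smallest order such that (SK_PAGE_SIZE << order) >= size. */
unsigned int order_for_size(size_t size)
{
    unsigned int order = 0;
    size_t span = SK_PAGE_SIZE;

    assert(size > 0 && size <= ((size_t)SK_PAGE_SIZE << SK_MAX_ORDER));
    while (span < size) {
        span <<= 1;     /* double the span: one more order */
        order++;
    }
    return order;
}
```

A request one byte larger than a page already needs order 1, so half of the second page goes unused.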

get_free_page and Friends

Subject to the same rules as kmalloc

To free pages, call

void free_page(unsigned long addr);

void free_pages(unsigned long addr, unsigned long order);

Make sure to free the same number of pages

Or the memory map becomes corrupted

A scull Using Whole Pages: scullp

Memory allocation

if (!dptr->data[s_pos]) {
    dptr->data[s_pos] =
        (void *) __get_free_pages(GFP_KERNEL, dptr->order);
    if (!dptr->data[s_pos])
        goto nomem;
    memset(dptr->data[s_pos], 0, PAGE_SIZE << dptr->order);
}

Memory deallocation

for (i = 0; i < qset; i++) {
    if (dptr->data[i]) {
        free_pages((unsigned long) (dptr->data[i]), dptr->order);
    }
}

The alloc_pages Interface

Core Linux page allocator function

struct page *alloc_pages_node(int nid, unsigned int flags,
                              unsigned int order);

nid: NUMA node ID

Two higher level macros

struct page *alloc_pages(unsigned int flags, unsigned int order);
struct page *alloc_page(unsigned int flags);

Allocate memory on the current NUMA node

The alloc_pages Interface

To release pages, call

void __free_page(struct page *page);

void __free_pages(struct page *page, unsigned int order);

/* optimized calls for cache-resident or non-cache-resident pages */

void free_hot_page(struct page *page);

void free_cold_page(struct page *page);

vmalloc and Friends

Allocates a virtually contiguous memory region

Not consecutive pages in physical memory

Each page retrieved with a separate alloc_page call

Less efficient

Can sleep (cannot be used in atomic context)

Returns NULL on error, or a pointer to the allocated memory

Its use is discouraged

vmalloc and Friends

vmalloc-related prototypes

#include <linux/vmalloc.h>

void *vmalloc(unsigned long size);
void vfree(void *addr);
void *ioremap(unsigned long offset, unsigned long size);
void iounmap(void *addr);

vmalloc and Friends

Each allocation via vmalloc involves setting up and modifying page tables

Returns an address between VMALLOC_START and VMALLOC_END (defined in <linux/pgtable.h>)

Used for allocating memory for a large sequential buffer

vmalloc and Friends

ioremap builds page tables

Does not allocate memory

Takes a physical address (offset) and returns a virtual address

Useful to map the address of a PCI buffer to kernel space

Should use readb and other functions to access remapped memory

A scull Using Virtual Addresses: scullv

This module allocates 16 pages at a time

To obtain new memory

if (!dptr->data[s_pos]) {
    dptr->data[s_pos] = (void *) vmalloc(PAGE_SIZE << dptr->order);
    if (!dptr->data[s_pos]) {
        goto nomem;
    }
    memset(dptr->data[s_pos], 0, PAGE_SIZE << dptr->order);
}

A scull Using Virtual Addresses: scullv

To release memory

for (i = 0; i < qset; i++) {
    if (dptr->data[i]) {
        vfree(dptr->data[i]);
    }
}

Per-CPU Variables

Each CPU gets its own copy of a variable

Almost no locking for each CPU to work with its own copy

Better performance for frequent updates

Example: networking subsystem

Each CPU counts the number of processed packets by type

When user space requests to see the value, just add up each CPU’s version and return the total
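
The counting scheme can be modeled in user space with one slot per CPU. This is only a sketch of the idea: SK_NR_CPUS, packets_seen, count_packet, and total_packets are hypothetical names, and the CPU ID is passed in by hand instead of coming from the scheduler.

```c
#include <assert.h>

#define SK_NR_CPUS 4  /* hypothetical CPU count for this sketch */

/* One counter slot per CPU; each CPU only ever writes its own slot. */
unsigned long packets_seen[SK_NR_CPUS];

/* Called on the CPU that processed the packet. */
void count_packet(int cpu)
{
    assert(cpu >= 0 && cpu < SK_NR_CPUS);
    packets_seen[cpu]++;    /* no lock: no other CPU touches this slot */
}

/* Called when user space asks for the value: sum every CPU's copy. */
unsigned long total_packets(void)
{
    unsigned long total = 0;

    for (int cpu = 0; cpu < SK_NR_CPUS; cpu++)
        total += packets_seen[cpu];
    return total;
}
```

The update path stays cheap because it touches only local data; the cost of combining the copies is paid on the much rarer read path.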

Per-CPU Variables

To create a per-CPU variable

#include <linux/percpu.h>

DEFINE_PER_CPU(type, name);

If name is an array, include the dimensions in type

DEFINE_PER_CPU(int[3], my_percpu_array);

Declares a per-CPU array of three integers

To access a per-CPU variable

Need to prevent process migration

get_cpu_var(name); /* disables preemption */

put_cpu_var(name); /* enables preemption */

Per-CPU Variables

To access another CPU’s copy of the variable, call

per_cpu(name, int cpu_id);

To dynamically allocate and release per-CPU variables, call

void *alloc_percpu(type);

void *__alloc_percpu(size_t size, size_t align);

void free_percpu(const void *data);

Per-CPU Variables

To access dynamically allocated per-CPU variables, call

per_cpu_ptr(void *per_cpu_var, int cpu_id);

To ensure that a process cannot be moved off its processor, call get_cpu (returns the CPU ID) to disable preemption

int cpu;
cpu = get_cpu();
ptr = per_cpu_ptr(per_cpu_var, cpu);
/* work with ptr */
put_cpu();

Per-CPU Variables

To export per-CPU variables, call

EXPORT_PER_CPU_SYMBOL(per_cpu_var);

EXPORT_PER_CPU_SYMBOL_GPL(per_cpu_var);

To access an exported variable, call

/* instead of DEFINE_PER_CPU() */

DECLARE_PER_CPU(type, name);

More examples in <linux/percpu_counter.h>

Obtaining Large Buffers

First, consider the alternatives

Optimize the data representation

Export the feature to the user space

Use scatter-gather mappings

Allocate at boot time

Acquiring a Dedicated Buffer at Boot Time

Advantages

Least prone to failure

Bypass all memory management policies

Disadvantages

Inelegant and inflexible

Not a feasible option for the average user

Available only for code linked to the kernel

Need to rebuild and reboot the computer to install or replace a device driver

Acquiring a Dedicated Buffer at Boot Time

To allocate, call one of these functions

#include <linux/bootmem.h>

void *alloc_bootmem(unsigned long size);

/* need low memory for DMA */

void *alloc_bootmem_low(unsigned long size);

/* allocated in whole pages */

void *alloc_bootmem_pages(unsigned long size);

void *alloc_bootmem_low_pages(unsigned long size);

Acquiring a Dedicated Buffer at Boot Time

To free, call

void free_bootmem(unsigned long addr, unsigned long size);

Need to link your driver into the kernel

See Documentation/kbuild

Memory Usage Pitfalls

Failure to handle failed memory allocation

Needed for every allocation

Allocating too much memory

No built-in limit on memory usage
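
Both pitfalls can be illustrated in user-space C: every allocation is checked and unwound with goto, and a self-imposed budget stands in for the limit the kernel does not provide. The names allocs_left, limited_alloc, and two_buffers_init are hypothetical, and malloc stands in for kmalloc.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical budget: allocations this "driver" may still make. */
int allocs_left = 2;

/* Wrapper that enforces the self-imposed limit on top of malloc. */
void *limited_alloc(size_t n)
{
    if (allocs_left <= 0)
        return NULL;
    allocs_left--;
    return malloc(n);
}

struct two_buffers {
    char *a;
    char *b;
};

/* Returns 0 on success, -1 on failure; nothing is leaked on failure. */
int two_buffers_init(struct two_buffers *t, size_t a_len, size_t b_len)
{
    assert(t != NULL);

    t->a = limited_alloc(a_len);
    if (!t->a)
        goto fail;
    t->b = limited_alloc(b_len);
    if (!t->b)
        goto fail_free_a;
    return 0;

fail_free_a:
    free(t->a);     /* undo the first allocation */
fail:
    t->a = NULL;
    t->b = NULL;
    return -1;
}
```

The budget in this sketch is only ever decremented; a fuller version would give an allocation back to the budget when the memory is freed.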