Midterm exam for Econometrics 101, Warwick Econ Ph.D, 2015

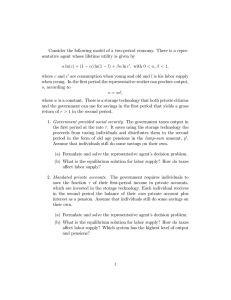

1) (25 marks) Redoing a proof we saw together

Let $(X_i)_{1 \le i \le n}$ be an iid sample of $k \times 1$ random vectors following the same distribution as the $k \times 1$ random vector $X$. Let $(Y_i)_{1 \le i \le n}$ be an iid sample of random variables following the same distribution as the random variable $Y$. Assume that the $k \times k$ matrix $E(XX')$ is invertible. Let

$$\beta = E(XX')^{-1} E(XY) \tag{0.0.1}$$

be the population OLS regression coefficient of $Y$ on $X$. Let

$$\hat\beta = \left(\frac{1}{n}\sum_{i=1}^{n} X_i X_i'\right)^{-1} \frac{1}{n}\sum_{i=1}^{n} X_i Y_i \tag{0.0.2}$$

be the estimator of $\beta$. Redo the proof of the following theorem, which we saw together.

Theorem 0.0.1 Asymptotic normality of $\hat\beta$.

$\hat\beta$ is an asymptotically normal sequence of estimators of $\beta$:

$$\sqrt{n}(\hat\beta - \beta) \hookrightarrow N\left(0,\ E(XX')^{-1} E(XX'e^2) E(XX')^{-1}\right).$$

In the proof, you can use the following result without proving it:

Theorem 0.0.2 The product of a deterministic matrix and a normally distributed vector is a normally distributed vector.

If $U \sim N(0, V(U))$ is a $k \times 1$ normal vector and $B$ is a $k \times k$ deterministic matrix, then $BU \sim N(0, B V(U) B')$.

Solution

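Write $Y_i = X_i'\beta + e_i$. Substituting this into (0.0.2) yields

$$\begin{aligned}
\hat\beta &= \left(\frac{1}{n}\sum_{i=1}^{n} X_i X_i'\right)^{-1} \frac{1}{n}\sum_{i=1}^{n} X_i (X_i'\beta + e_i) \\
&= \beta + \left(\frac{1}{n}\sum_{i=1}^{n} X_i X_i'\right)^{-1} \frac{1}{n}\sum_{i=1}^{n} X_i e_i.
\end{aligned}$$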

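Therefore,

$$\sqrt{n}(\hat\beta - \beta) = \left(\frac{1}{n}\sum_{i=1}^{n} X_i X_i'\right)^{-1} \sqrt{n}\left(\frac{1}{n}\sum_{i=1}^{n} X_i e_i\right). \tag{0.0.3}$$

As $\left(\frac{1}{n}\sum_{i=1}^{n} X_i X_i'\right)^{-1} \to E(XX')^{-1}$ (why?), the Slutsky lemma implies that

$$\left(\frac{1}{n}\sum_{i=1}^{n} X_i X_i'\right)^{-1} \sqrt{n}\left(\frac{1}{n}\sum_{i=1}^{n} X_i e_i\right) \hookrightarrow E(XX')^{-1} \sqrt{n}\left(\frac{1}{n}\sum_{i=1}^{n} X_i e_i\right). \tag{0.0.4}$$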

Since $E(Xe) = 0$ (why?), the central limit theorem implies that

$$\sqrt{n}\left(\frac{1}{n}\sum_{i=1}^{n} X_i e_i\right) \hookrightarrow N(0, V(Xe)). \tag{0.0.5}$$

Because $E(Xe) = 0$,

$$V(Xe) = E\left((Xe - E(Xe))(Xe - E(Xe))'\right) = E(XX'e^2). \tag{0.0.6}$$

Combining Equations (0.0.3), (0.0.4), (0.0.5), (0.0.6) and Theorem 0.0.2 yields the result (once noted that $\left(E(XX')^{-1}\right)' = E(XX')^{-1}$).

QED.
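The theorem can be illustrated numerically. The sketch below is not part of the exam: it simulates a hypothetical heteroskedastic design (the coefficients, sample size, and error structure are all assumptions chosen for illustration), computes the estimator of equation (0.0.2), and forms the sample analogue of the asymptotic variance $E(XX')^{-1} E(XX'e^2) E(XX')^{-1}$.

```python
import numpy as np

def ols_and_sandwich(X, y):
    """Return the OLS estimate and the sample analogue of its asymptotic variance."""
    n = X.shape[0]
    Sxx = X.T @ X / n                              # (1/n) sum_i X_i X_i'
    Sxy = X.T @ y / n                              # (1/n) sum_i X_i Y_i
    beta_hat = np.linalg.solve(Sxx, Sxy)           # equation (0.0.2)
    e_hat = y - X @ beta_hat                       # residuals, standing in for e_i
    meat = (X * e_hat[:, None] ** 2).T @ X / n     # (1/n) sum_i X_i X_i' e_i^2
    Sxx_inv = np.linalg.inv(Sxx)
    avar = Sxx_inv @ meat @ Sxx_inv                # sandwich formula of Theorem 0.0.1
    return beta_hat, avar

# Hypothetical data-generating process, chosen only for illustration.
rng = np.random.default_rng(0)
n = 20_000
beta = np.array([1.0, 2.0])
X = np.column_stack([np.ones(n), rng.normal(size=n)])
e = rng.normal(size=n) * (1.0 + np.abs(X[:, 1]))   # error variance depends on X
y = X @ beta + e

beta_hat, avar = ols_and_sandwich(X, y)
se = np.sqrt(np.diag(avar) / n)                    # standard errors implied by the theorem
print(beta_hat, se)
```

With a large sample, each component of `beta_hat` should fall within a few standard errors of the true coefficient, which is what asymptotic normality predicts.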

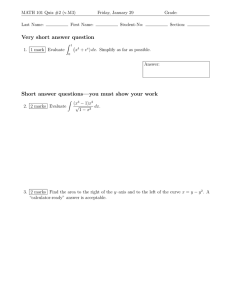

2) Short questions

a) (5 marks) Assume a policy maker offers you the opportunity to run a randomized experiment to measure the effect of a training program for unemployed people. 400 unemployed people are motivated to participate in the experiment, and the policy maker leaves it to you to decide how many will be in the control group and how many will be in the treatment group. To maximize the statistical power of your experiment, how many unemployed people should be in the treatment group? No need to justify your answer, just give a number.

b) (5 marks) Assume a policy maker offers you the opportunity to run a randomized experiment to measure the effect of a class size reduction program in Portugal. Based on estimates from other countries, you anticipate that this policy should increase students' test scores by 20% of a standard deviation. Given the number of students that will be included in the experiment, and the share of them who will receive the treatment, you compute that for $\alpha = 0.05$ and $\lambda = 0.8$, the minimum detectable effect of the experiment is exactly equal to 20% of a standard deviation. If the true effect of that policy is indeed to increase test scores by 20% of a standard deviation, what is the probability that you will incorrectly conclude that the policy has no effect at the end of the experiment? No need to justify your answer, just give a number.

c) (10 marks) Assume you regress wages on a constant, a dummy variable for gender (1 for males, 0 for females) and a dummy variable for black people (1 for black people, 0 for non-black people). The gender and black dummies are respectively denoted by $X_2$ and $X_3$. The following table presents the mean of wages in the four subgroups defined by those two variables.

| | Females | Males |
|---|---|---|
| Non-Black | 2000 | 2500 |
| Black | 1400 | 1600 |

The coefficient of $X_3$, the black dummy, is equal to $w\delta_1 + (1 - w)\delta_2$, where $w$ is a positive weight, and $\delta_1$ and $\delta_2$ are two numbers which you can compute from the table. Give the values of $\delta_1$ and $\delta_2$. No need to justify your answer, just give two numbers.

d) (5 marks) What is the only circumstance in which you might prefer using non-robust instead of robust standard errors when you run a regression? Reply in no more than one sentence.

Solution

a) 200.

b) 20%.

c) δ1 = −600, δ2 = −900.

d) When there are less than 200 units in your sample.
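The numerical answers to a), b) and c) can be checked with a few lines of arithmetic. This is a sketch rather than part of the official solution. For a), it assumes that the outcome has the same variance in both groups, so that the variance of the difference in means is proportional to $1/n_1 + 1/n_0$. For c), it reads $\delta_1$ and $\delta_2$ as the black/non-black wage gaps among females and among males. The function and variable names are hypothetical.

```python
def variance_factor(n_treated, n_total=400):
    """Variance of the difference in means, up to a common factor sigma^2.

    Assumes equal outcome variance in the treatment and control groups.
    """
    n_control = n_total - n_treated
    return 1.0 / n_treated + 1.0 / n_control

# a) Group size that minimizes the variance, hence maximizes power.
best_n_treated = min(range(1, 400), key=variance_factor)

# b) If the true effect equals the minimum detectable effect, the experiment
#    detects it with probability equal to the power, lambda = 0.8.
power = 0.8
prob_type_2_error = 1.0 - power

# c) Mean wages by subgroup, copied from the table in question c).
wages = {
    ("non-black", "female"): 2000,
    ("non-black", "male"): 2500,
    ("black", "female"): 1400,
    ("black", "male"): 1600,
}
delta_1 = wages[("black", "female")] - wages[("non-black", "female")]
delta_2 = wages[("black", "male")] - wages[("non-black", "male")]

print(best_n_treated, round(prob_type_2_error, 2), delta_1, delta_2)
```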

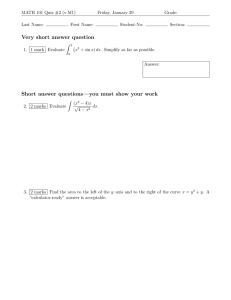

3) Measuring heterogeneous treatment effects.

Assume you are interested in measuring the effect of reducing class size on students' performance. You run an experiment where 500 first graders are allocated to small classes with 10 students, while 500 other first graders are allocated to regular classes with 25 students. The allocation of students to classes is made randomly. Let $D_i$ denote a dummy equal to 1 if student $i$ is in a small class. Let $Y_i$ denote student $i$'s mark in a test at the end of first grade, standardized by the variance of marks. Let $Y_{i1}$ denote the standardized mark that student $i$ will obtain in that test if she is sent to a small class. Let $Y_{i0}$ denote the standardized mark that student $i$ will obtain in that test if she is not sent to a small class. We have $Y_i = D_i Y_{i1} + (1 - D_i) Y_{i0}$. Finally, assume that in their last year of kindergarten, students took a standardized test measuring their readiness for first grade, and let $X_i$ be a dummy equal to 1 for students who scored above the median in this test.

a) (3 marks) Explain in a few sentences why randomization ensures that $D_i \perp\!\!\!\perp (Y_{i0}, Y_{i1}, X_i)$. Your answer should not be longer than 5 lines.

b) (3 marks) Assume you regress $Y_i$ on a constant and $D_i$. Let $\beta$ denote the population coefficient of $D_i$ in that regression. Using one of the three examples of saturated regressions we saw together, give a formula for $\beta$. Your formula should only involve $Y_i$ and $D_i$, not $Y_{i0}$ or $Y_{i1}$, and to justify it you only need to say whether this regression corresponds to the first, second, or third example of a saturated regression we saw together.

c) (4 marks) Using the result from the previous question, show that $\beta = E(Y_{i1} - Y_{i0})$. Hint: you can use the following two facts without proving them:

1. $D_i \perp\!\!\!\perp (Y_{i0}, Y_{i1}, X_i)$ implies that $D_i \perp\!\!\!\perp Y_{i0}$ and $D_i \perp\!\!\!\perp Y_{i1}$.

2. For any random variables $U$ and $V$, if $U \perp\!\!\!\perp V$ then $E(U|V) = E(U)$.

d) (5 marks) Now, assume you regress $Y_i$ on a constant, $D_i$, $X_i$, and $D_i \times X_i$. Let $\beta_0$ and $\beta_1$ denote the population regression coefficients of $D_i$ and $D_i \times X_i$ in that regression. Using one of the three examples of saturated regressions we saw together, give a formula for $\beta_0$ and $\beta_1$. Your formula should only involve $Y_i$, $D_i$, and $X_i$, not $Y_{i0}$ or $Y_{i1}$, and to justify it you only need to say whether this regression corresponds to the first, second, or third example of a saturated regression we saw together.

e) (5 marks) Using the result from the previous question, show that $\beta_0 = E(Y_{i1} - Y_{i0}|X_i = 0)$ and $\beta_1 = E(Y_{i1} - Y_{i0}|X_i = 1) - E(Y_{i1} - Y_{i0}|X_i = 0)$. Hint: you can use the fact that $D_i \perp\!\!\!\perp (Y_{i0}, Y_{i1}, X_i)$ implies that $D_i \perp\!\!\!\perp (Y_{i0}, X_i)$ and $D_i \perp\!\!\!\perp (Y_{i1}, X_i)$. Then, remember that joint independence implies conditional independence. Finally, you can use the fact that for any random variables $(U, V, W)$, $E(V|U = u) - E(W|U = u) = E(V - W|U = u)$.

f) (5 marks) Now, assume you estimate the regression in question d). You find $\hat\beta_1 = -0.3$, and you can also reject the null hypothesis that $\beta_1 = 0$ at the 5% level. Interpret these results. Based on the results of the experiment, which students should benefit from smaller classes?

Solution

a) Because treatment is randomly allocated, the treatment and the control group must be comparable on all dimensions.

b) $\beta = E(Y_i|D_i = 1) - E(Y_i|D_i = 0)$. This follows from the second example of a saturated regression we saw together.

c)

$$\begin{aligned}
E(Y_i|D_i = 1) - E(Y_i|D_i = 0) &= E(Y_{i1}|D_i = 1) - E(Y_{i0}|D_i = 0) \\
&= E(Y_{i1}) - E(Y_{i0}) \\
&= E(Y_{i1} - Y_{i0}).
\end{aligned}$$

d)

$$\beta_0 = E(Y_i|D_i = 1, X_i = 0) - E(Y_i|D_i = 0, X_i = 0)$$

and

$$\beta_1 = E(Y_i|D_i = 1, X_i = 1) - E(Y_i|D_i = 0, X_i = 1) - \left(E(Y_i|D_i = 1, X_i = 0) - E(Y_i|D_i = 0, X_i = 0)\right).$$

This follows from the third example of a saturated regression we saw together.

e)

$$\begin{aligned}
E(Y_i|D_i = 1, X_i = 0) - E(Y_i|D_i = 0, X_i = 0) &= E(Y_{i1}|D_i = 1, X_i = 0) - E(Y_{i0}|D_i = 0, X_i = 0) \\
&= E(Y_{i1}|X_i = 0) - E(Y_{i0}|X_i = 0) \\
&= E(Y_{i1} - Y_{i0}|X_i = 0).
\end{aligned}$$

The proof of the second statement is similar.
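For completeness, here is one way the second statement could be written out. It is a sketch that repeats the same three steps conditional on $X_i = 1$ and then subtracts the expression obtained for $\beta_0$. The notation $Y_{i1}$, $Y_{i0}$ for the two potential outcomes follows the problem statement.

```latex
\begin{aligned}
E(Y_i \mid D_i = 1, X_i = 1) - E(Y_i \mid D_i = 0, X_i = 1)
  &= E(Y_{i1} \mid D_i = 1, X_i = 1) - E(Y_{i0} \mid D_i = 0, X_i = 1) \\
  &= E(Y_{i1} \mid X_i = 1) - E(Y_{i0} \mid X_i = 1) \\
  &= E(Y_{i1} - Y_{i0} \mid X_i = 1), \\[4pt]
\beta_1
  &= E(Y_{i1} - Y_{i0} \mid X_i = 1) - \beta_0 \\
  &= E(Y_{i1} - Y_{i0} \mid X_i = 1) - E(Y_{i1} - Y_{i0} \mid X_i = 0).
\end{aligned}
```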

f) Within the subgroup of students above the median of readiness for first grade, the treatment/control comparison of test scores at the end of first grade is 30% of a standard deviation lower than among students below the median, and this difference is statistically significant. Therefore, the effect of studying in smaller classes is stronger for weak than for strong students. Students below the median of readiness for first grade should benefit from smaller classes.
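The formulas in d) can be checked on simulated data. The sketch below is an illustration under assumed numbers, not the exam's data: the potential outcomes, the effect sizes (0.4 for $X_i = 0$ and 0.1 for $X_i = 1$), and the way $X_i$ is drawn are all hypothetical. It fits the saturated regression of question d) by least squares and compares the coefficients of $D_i$ and $D_i \times X_i$ with the corresponding differences in subgroup means.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 1000

# Random allocation: exactly 500 students in small classes, 500 in regular ones.
D = rng.permutation(np.repeat([0, 1], n // 2))
# Hypothetical readiness dummy (drawn at random here, for illustration only).
X = rng.integers(0, 2, size=n)

# Hypothetical potential outcomes with a smaller effect for high-readiness students.
Y0 = rng.normal(size=n) + 0.5 * X
Y1 = Y0 + 0.4 - 0.3 * X
Y = D * Y1 + (1 - D) * Y0            # observed outcome

# Saturated regression of Y on a constant, D, X and D*X.
Z = np.column_stack([np.ones(n), D, X, D * X])
coef, *_ = np.linalg.lstsq(Z, Y, rcond=None)
b0_hat, b1_hat = coef[1], coef[3]

def cell_mean(d, x):
    """Sample mean of Y among students with D = d and X = x."""
    return Y[(D == d) & (X == x)].mean()

diff_x0 = cell_mean(1, 0) - cell_mean(0, 0)   # treatment/control gap when X = 0
diff_x1 = cell_mean(1, 1) - cell_mean(0, 1)   # treatment/control gap when X = 1

print(b0_hat, diff_x0)
print(b1_hat, diff_x1 - diff_x0)
```

Because the regression is saturated, the two printed pairs coincide up to floating-point error in any sample where all four cells are non-empty.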

4) Performing inference on the upper bound of the support of a random variable in a nonparametric model.

Assume you observe an iid sample of $n$ random variables $(Y_i)$ which follow a continuous distribution on $[0, \theta]$. Let $F$ denote the cumulative distribution function of these random variables: $F(x) = P(Y_i \le x)$. Let us also assume that $F$ is differentiable on $[0, \theta]$. Its derivative is the density of the $(Y_i)$. It is denoted $f$. $\theta$ is the unknown parameter we would like to estimate. Consider the following estimator for $\theta$: $\hat\theta_{ML} = \max_{1 \le i \le n} Y_i$.

a) Show that for any $x \in [0, \theta]$, $P(\hat\theta_{ML} \le x) = (F(x))^n$, for $x < 0$, $P(\hat\theta_{ML} \le x) = 0$, and for $x > \theta$, $P(\hat\theta_{ML} \le x) = 1$.

b) Assume that $f(\theta) > 0$. Use question a) to show that $n(\theta - \hat\theta_{ML}) \hookrightarrow U$, where $U$ follows an exponential distribution with parameter $f(\theta)$. Hint 1: the cdf of a random variable following an exponential distribution with parameter $f(\theta)$ is $1 - \exp(-x f(\theta))$ for $x \ge 0$ and 0 otherwise. Hint 2: to prove this, you can use the following result: if a function $g$ is differentiable at some real number $u$ with derivative $g'(u)$, then for any real number $k$, $g\left(u + \frac{k}{n}\right) = g(u) + g'(u)\frac{k}{n} + \frac{\varepsilon_n}{n}$, where $\varepsilon_n$ is a sequence converging towards 0 when $n$ goes to $+\infty$.

c) Assume that $f(\theta) = 0$ but $f$ is differentiable at $\theta$ and $f'(\theta) \ne 0$. As $f(\theta) = 0$ and $f$ is a density function which must be nonnegative everywhere, if $f'(\theta) \ne 0$ then we must have $f'(\theta) < 0$ (otherwise, $f$ would be strictly negative for values to the left of $\theta$). Use question a) to show that $\sqrt{n}(\theta - \hat\theta_{ML}) \hookrightarrow U$, where $U$ follows a Weibull distribution with parameters 2 and $\sqrt{\frac{2}{-f'(\theta)}}$. Hint 1: the cdf of a random variable following a Weibull distribution with parameters 2 and $\sqrt{\frac{2}{-f'(\theta)}}$ is $1 - \exp\left(-\left(\frac{x}{\sqrt{2/(-f'(\theta))}}\right)^2\right)$ for $x \ge 0$ and 0 otherwise. Hint 2: to prove this, you can use the following result: if a function $g$ is twice differentiable at some real number $u$ with first and second order derivatives $g'(u)$ and $g''(u)$, then for any real number $k$, $g\left(u + \frac{k}{\sqrt{n}}\right) = g(u) + g'(u)\frac{k}{\sqrt{n}} + g''(u)\frac{k^2}{2n} + \frac{\varepsilon_n}{n}$, where $\varepsilon_n$ is a sequence converging towards 0 when $n$ goes to $+\infty$.

d) Question b) shows that if $f(\theta) \ne 0$, $\hat\theta_{ML}$ is an $n$-consistent estimator of $\theta$. Question c) shows that if $f(\theta) = 0$ (but $f'(\theta) \ne 0$), $\hat\theta_{ML}$ is a $\sqrt{n}$-consistent estimator of $\theta$. Explain intuitively why $\hat\theta_{ML}$ converges more slowly towards $\theta$ when $f(\theta) = 0$.

Solution

a) For $x \in [0, \theta]$,

$$\begin{aligned}
P(\hat\theta_{ML} \le x) &= P(Y_1 \le x, \ldots, Y_n \le x) \\
&= P(Y_1 \le x) \cdots P(Y_n \le x) \\
&= (F(x))^n.
\end{aligned}$$

The first step holds because the max of $n$ random variables is at most $x$ if and only if each of those random variables is at most $x$. The second step follows from independence.

For $x < 0$, the result follows from the fact that the max of nonnegative random variables cannot be lower than 0. For $x > \theta$, this follows from the fact that the max of random variables that are at most $\theta$ must be at most $\theta$ as well.

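b) The cdf of an $\exp(f(\theta))$ variable is continuous everywhere. Using the definition of convergence in distribution, we therefore have to show that $P\left(n(\theta - \hat\theta_{ML}) \le x\right)$ converges to the cdf of an $\exp(f(\theta))$ variable for every $x$.

For $x < 0$, $P\left(n(\theta - \hat\theta_{ML}) \le x\right) = 0$ because the random variable $n(\theta - \hat\theta_{ML})$ is positive. This proves the result for $x < 0$.

For $x \ge 0$,

$$\begin{aligned}
P\left(n(\theta - \hat\theta_{ML}) \le x\right) &= P\left(\hat\theta_{ML} > \theta - \frac{x}{n}\right) \\
&= 1 - P\left(\hat\theta_{ML} \le \theta - \frac{x}{n}\right) \\
&= 1 - \left(F\left(\theta - \frac{x}{n}\right)\right)^n \\
&= 1 - \exp\left(n \ln F\left(\theta - \frac{x}{n}\right)\right) \\
&= 1 - \exp\left(n \ln\left(F(\theta) - \frac{x}{n} f(\theta) + \frac{\varepsilon_n}{n}\right)\right) \\
&= 1 - \exp\left(-x f(\theta) + \varepsilon_n\right) \\
&\underset{n \to +\infty}{\longrightarrow} 1 - \exp(-x f(\theta)).
\end{aligned}$$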
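The limit in b) can be checked numerically in a case where everything is available in closed form. The sketch below assumes, purely for illustration, that the $Y_i$ are uniform on $[0, \theta]$, so that $F(y) = y/\theta$ and $f(\theta) = 1/\theta$. It evaluates the exact cdf from part a) and compares it with the exponential limit. The function names and the grid of evaluation points are hypothetical.

```python
import math

def exact_cdf_uniform(x, n, theta):
    """P(n (theta - max Y_i) <= x) for Y_i ~ Uniform[0, theta], using part a)."""
    if x < 0:
        return 0.0
    point = theta - x / n
    F = min(max(point / theta, 0.0), 1.0)    # uniform cdf evaluated at theta - x/n
    return 1.0 - F ** n

def exponential_limit_cdf(x, f_theta):
    """Cdf of an exponential distribution with parameter f(theta)."""
    return 1.0 - math.exp(-x * f_theta) if x >= 0 else 0.0

theta = 2.0
f_theta = 1.0 / theta                        # density of Uniform[0, theta] at theta
grid = [0.1, 0.5, 1.0, 2.0, 5.0]

# Largest gap between the exact and the limiting cdf on the grid, for growing n.
gaps_exponential = {
    n: max(abs(exact_cdf_uniform(x, n, theta) - exponential_limit_cdf(x, f_theta))
           for x in grid)
    for n in (10, 100, 10_000)
}
print(gaps_exponential)
```

The gap shrinks as $n$ grows, in line with the convergence shown above.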

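c) The cdf of a variable following a Weibull distribution is continuous everywhere. Using the definition of convergence in distribution, we therefore have to show that $P\left(\sqrt{n}(\theta - \hat\theta_{ML}) \le x\right)$ converges to the cdf given in the question for every $x$.

For $x < 0$, $P\left(\sqrt{n}(\theta - \hat\theta_{ML}) \le x\right) = 0$ because the random variable $\sqrt{n}(\theta - \hat\theta_{ML})$ is positive. This proves the result for $x < 0$.

For $x \ge 0$,

$$\begin{aligned}
P\left(\sqrt{n}(\theta - \hat\theta_{ML}) \le x\right) &= P\left(\hat\theta_{ML} > \theta - \frac{x}{\sqrt{n}}\right) \\
&= 1 - P\left(\hat\theta_{ML} \le \theta - \frac{x}{\sqrt{n}}\right) \\
&= 1 - \left(F\left(\theta - \frac{x}{\sqrt{n}}\right)\right)^n \\
&= 1 - \exp\left(n \ln F\left(\theta - \frac{x}{\sqrt{n}}\right)\right) \\
&= 1 - \exp\left(n \ln\left(F(\theta) - \frac{x}{\sqrt{n}} f(\theta) + \frac{x^2}{n}\frac{f'(\theta)}{2} + \frac{\varepsilon_n}{n}\right)\right) \\
&= 1 - \exp\left(x^2 \frac{f'(\theta)}{2} + \varepsilon_n\right) \\
&\underset{n \to +\infty}{\longrightarrow} 1 - \exp\left(x^2 \frac{f'(\theta)}{2}\right).
\end{aligned}$$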
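The same kind of numerical check works for c). The sketch below assumes a hypothetical triangular density on $[0, \theta]$, $f(y) = 2(\theta - y)/\theta^2$, for which $F(y) = 1 - ((\theta - y)/\theta)^2$, $f(\theta) = 0$ and $f'(\theta) = -2/\theta^2 < 0$. It compares the exact cdf from part a) with the Weibull cdf given in Hint 1. The function names and the evaluation grid are assumptions made for illustration.

```python
import math

def exact_cdf_triangular(x, n, theta):
    """P(sqrt(n) (theta - max Y_i) <= x) when F(y) = 1 - ((theta - y) / theta)^2."""
    if x < 0:
        return 0.0
    distance = min(x / math.sqrt(n), theta)  # theta minus the evaluation point, capped at theta
    F = 1.0 - (distance / theta) ** 2        # cdf evaluated at theta - x / sqrt(n)
    return 1.0 - F ** n

def weibull_limit_cdf(x, f_prime_theta):
    """Weibull cdf with parameters 2 and sqrt(2 / (-f'(theta))), as in Hint 1."""
    if x < 0:
        return 0.0
    scale = math.sqrt(2.0 / -f_prime_theta)
    return 1.0 - math.exp(-((x / scale) ** 2))

theta = 2.0
f_prime_theta = -2.0 / theta ** 2            # slope of the triangular density at theta
grid = [0.5, 1.0, 2.0, 4.0]

# Largest gap between the exact and the limiting cdf on the grid, for growing n.
gaps_weibull = {
    n: max(abs(exact_cdf_triangular(x, n, theta) - weibull_limit_cdf(x, f_prime_theta))
           for x in grid)
    for n in (10, 100, 10_000)
}
print(gaps_weibull)
```

Here the normalization is $\sqrt{n}$ rather than $n$, which reflects the slower rate of convergence when the density vanishes at $\theta$.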

d) When $f(\theta) = 0$, the probability that a realization of the $Y_i$ is close to $\theta$ is lower than when $f(\theta) > 0$, so the sample maximum tends to lie further from $\theta$.