CEPA

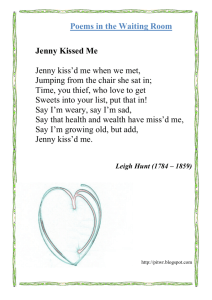
