Replicated Binary Designs Andy Wang CIS 5930-03 Computer Systems

advertisement

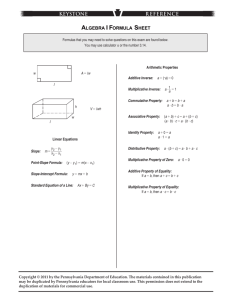

Replicated Binary Designs Andy Wang CIS 5930-03 Computer Systems Performance Analysis 2k Factorial Designs With Replications • 2k factorial designs do not allow for estimation of experimental error – No experiment is ever repeated • Error is usually present – And usually important • Handle issue by replicating experiments • But which to replicate, and how often? 2 k 2r Factorial Designs • Replicate each experiment r times • Allows quantifying experimental error • Again, easiest to first look at case of only 2 factors 3 2 2 r Factorial Designs • 2 factors, 2 levels each, with r replications at each of the four combinations • y = q0 + qAxA + qBxB + qABxAxB + e • Now we need to compute effects, estimate errors, and allocate variation • Can also produce confidence intervals for effects and predicted responses 4 Computing Effects for 22r Factorial Experiments • We can use sign table, as before • But instead of single observations, regress off mean of the r observations • Compute errors for each replication using similar tabular method • Similar methods used for allocation of variance and calculating confidence intervals 5 Example of 22r Factorial Design With Replications • Same parallel system as before, but with 4 replications at each point (r =4) • No DLM, 8 nodes: 820, 822, 813, 809 • DLM, 8 nodes: 776, 798, 750, 755 • No DLM, 64 nodes: 217, 228, 215, 221 • DLM, 64 nodes: 197, 180, 220, 185 6 22r Factorial Example Analysis Matrix I 1 1 1 1 A -1 1 -1 1 B -1 -1 1 1 AB 1 -1 -1 1 y Mean (820,822,813,809) 816 (217,228,215,221) 220.25 (776,798,750,755) 769.75 (197,180,220,185) 195.5 2001.5 -1170 -71 21.5 500.4 -292.5 -17.75 5.4 Total Total/4 q0= 500.4 qA= -292.5 qB= -17.75 qAB= 5.4 7 Estimation of Errors for 22r Factorial Example • Figure differences between predicted and observed values for each replication: eij y ij yˆ i y ij q0 q A x Ai qB xBi q AB x Ai xBi • Now calculate SSE 22 r SSE e 2606 i 1 j 1 2 ij 8 Allocating Variation • We can determine percentage of variation due to each factor’s impact – Just like 2k designs without replication • But we can also isolate variation due to experimental errors • Methods are similar to other regression techniques for allocating variation 9 Variation Allocation in Example • We’ve already figured SSE • We also need SST, SSA, SSB, and SSAB 2 SST y ij y i, j • Also, SST = SSA + SSB + SSAB + SSE • Use same formulae as before for SSA, SSB, and SSAB 10 Sums of Squares for Example • • • • • • • SST = SSY - SS0 = 1,377,009.75 SSA = 1,368,900 SSB = 5041 SSAB = 462.25 Percentage of variation for A is 99.4% Percentage of variation for B is 0.4% Percentage of variation for A/B interaction is 0.03% • And 0.2% (approx.) is due to experimental errors 11 Confidence Intervals for Effects • Computed effects are random variables • Thus would like to specify how confident we are that they are correct • Usual confidence-interval methods • First, must figure Mean Square of Errors SSE 2 se 2 2 r 1 • r - 1 is because errors add up to zero 12 Calculating Variances of Effects • Standard deviation (due to errors) of all effects is the same: 2 se sq0 sq A sqB sq AB 2 2 r • In calculations, use t- or z-value for 22(r-1) degrees of freedom 13 Calculating Confidence Intervals for Example • At 90% level, using t-value for 12 degrees of freedom, 1.782 • Standard deviation of effects is 3.68 • Confidence intervals are qi(1.782)(3.68) • q0 is (493.8,506.9) • qA is (-299.1,-285.9) • qB is (-24.3,-11.2) • qAB is (-1.2,11.9) 14 Predicted Responses • We already have predicted all the means we can predict from this kind of model – We measured four, we can “predict” four • However, we can predict how close we would get to true sample mean if we ran m more experiments 15 Formula for Predicted Means • For m future experiments, predicted mean is yˆ t 1 Where syˆ ;22 r 1 2 m 1 2 1 1 s yˆ m se neff m Also, neff = n/(1 + DoF in y_hat) 16 Example of Predicted Means • What would we predict as confidence interval of response for no dynamic load management at 8 nodes for 7 more 1 tests? 1 2 1 s yˆ 7 14.7 9.95 16 / 5 7 • 90% confidence interval is (798.3,833.7) – We’re 90% confident that mean would be in this range 17 Visual Tests for Verifying Assumptions • What assumptions have we been making? – Model errors are statistically independent – Model errors are additive – Errors are normally distributed – Errors have constant standard deviation – Effects of errors are additive • All boils down to independent, normally distributed observations with constant variance 18 Testing for Independent Errors • Compute residuals and make scatter plot • Trends indicate dependence of errors on factor levels – But if residuals order of magnitude below predicted response, trends can be ignored • Usually good idea to plot residuals vs. experiment number 19 Example Plot of Residuals vs. Predicted Response 30 20 10 0 -10 -20 0 200 400 600 800 1000 20 Example Plot of Residuals vs. Experiment Number 30 20 10 0 -10 -20 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 21 Testing for Normally Distributed Errors • As usual, do quantile-quantile chart against normal distribution • If close to linear, normality assumption is good 22 Quantile-Quantile Plot for Example 2 1 0 y = 0.0731x + 4E-17 2 R = 0.9426 -1 -2 -20 -10 0 10 20 30 23 Assumption of Constant Variance • Checking homoscedasticity • Go back to scatter plot and check for even spread 24 The Scatter Plot, Again 30 20 10 0 -10 -20 0 200 400 600 800 1000 25 Multiplicative Models for 22r Experiments • • • • • Assumptions of additive models Example of a multiplicative situation Handling a multiplicative model When to choose multiplicative model Multiplicative example 26 Assumptions of Additive Models • Last time’s analysis used additive model: – yij = q0+ qAxA+ qBxB+ qABxAxB+ eij • Assumes all effects are additive: – Factors – Interactions – Errors • This assumption must be validated! 27 Example of a Multiplicative Situation • Testing processors with different workloads • Most common multiplicative case • Consider 2 processors, 2 workloads – Use 22r design • Response is time to execute wj instructions on processor that does vi instructions/second • Without interactions, time is yij = viwj 28 Handling a Multiplicative Model • Take logarithm of both sides: yij = viwj so log(yij) = log(vi) + log(wj) • Now easy to solve using previous methods • Resulting model is: y 10 10 q0 qA xA 10 qB x B 10 q AB x A x B 10e 29 Meaning of a Multiplicative Model 10 • Model is y 10 10 10 10 qA • Here, A = 10 is ratio of MIPS ratings of processors, B = 10qb is ratio of workload size • Antilog of q0 is geometric mean of responses: y 10q0 n y1y 2 y n where n = 22r q0 qA xA qB x B q AB x A x B e 30 When to Choose a Multiplicative Model? • Physical considerations (see previous slides) • Range of y is large – Making arithmetic mean unreasonable – Calling for log transformation • Plot of residuals shows large values and increasing spread • Quantile-quantile plot doesn’t look like normal distribution 31 Multiplicative Example • Consider additive model of processors A1 & A2 running benchmarks B1 and B2: y1 y2 85.1 79.5 0.891 1.047 0.955 0.933 0.015 0.013 y3 Mean I A B AB 147.9 104.167 1 -1 -1 1 1.072 1.003 1 1 -1 -1 1.122 1.003 1 -1 1 -1 0.012 0.013 1 1 1 1 Total 106.19 -104.15 -104.15 102.17 Total/4 26.55 -26.04 -26.04 25.54 • Note large range of y values 32 Error Scatter of Additive Model 50 25 Residuals 0 -25 -50 0 20 40 60 80 100 120 Predicted Response 33 Quantile-Quantile Plot of Additive Model 50 25 Residual Quantile 0 -25 -50 -2 -1 0 1 2 Normal Quantile 34 Multiplicative Model • Taking logs of everything, the model is: y1 1.93 -0.05 -0.02 -1.83 y2 y3 Mean 1.9 2.17 2.000 0.02 0.0302 0.000 -0.03 0.05 0.000 -1.9 -1.928 -1.886 Total Total/4 I 1 1 1 1 0.11 0.03 A -1 1 -1 1 -3.89 -0.97 B -1 -1 1 1 -3.89 -0.97 AB 1 -1 -1 1 0.11 0.03 35 Error Residuals of Multiplicative Model 0.20 0.15 0.10 0.05 Residuals 0.00 -0.05 -0.10 -0.15 -3 -2 -1 0 1 2 3 Predicted Response 36 Quantile-Quantile Plot for Multiplicative Model 0.20 0.15 0.10 Residual 0.05 Quantile 0.00 -0.05 -0.10 -0.15 -2 -1 0 1 2 Normal Quantile 37 Summary of the Two Models Factor I A B AB e Effect 26.55 -26.04 -26.04 25.54 Additive Model Percentage Confidence of Variation Interval 16.35 36.74 30.15 -36.23 -15.85 30.15 -36.23 -15.85 29.01 15.35 35.74 10.69 Multiplicative Model Percentage Confidence Effect of Variation Interval 0.03 -0.02 0.07 -0.97 49.85 -1.02 -0.93 -0.97 49.86 -1.02 -0.93 0.03 0.04 -0.02 0.07 0.25 38 General 2kr Factorial Design • • • • Simple extension of 22r See Box 18.1 for summary Always do visual tests Remember to consider multiplicative model as alternative 39 Example of 2kr Factorial Design • Consider a 233 design: y1 y2 14 16 22 18 11 15 34 30 46 42 58 62 50 55 86 80 Total Total/8 y3 Mean 12 14 20 20 19 15 35 33 44 44 60 60 54 53 74 80 I A B C AB AC BC ABC 1 -1 -1 -1 1 1 1 -1 1 1 -1 -1 -1 -1 1 1 1 -1 1 -1 -1 1 -1 1 1 1 1 -1 1 -1 -1 -1 1 -1 -1 1 1 -1 -1 1 1 1 -1 1 -1 1 -1 -1 1 -1 1 1 -1 -1 1 -1 1 1 1 1 1 1 1 1 319 67 43 155 23 19 15 -1 39.88 8.38 5.38 19.38 2.88 2.38 1.88 -0.13 40 ANOVA for 3 2 3 Design • Percent variation explained: A 14.1 B C 5.8 75.3 AB AC 1.7 1.1 BC ABC Errors 0.7 0 1.37 • 90% confidence intervals I 38.7 41.0 A 7.2 9.5 B C 4.2 18.2 6.5 20.5 AB AC 1.7 1.2 4.0 3.5 BC ABC 0.7 -1.3 3.0 1.0 41 Error Residuals for 233 Design 8 6 4 2 0 -2 -4 -6 -8 0 20 40 60 80 100 42 Quantile-Quantile Plot for 233 Design 8 6 4 2 0 -2 -4 -6 -8 -3 -2 -1 0 1 2 3 43 Alternative QuantileQuantile Plot for 233 8 6 4 2 0 -2 -4 -2 -1 0 1 2 44 White Slide