CIS 4930 Data Mining Spring 2016, Assignment 2


Instructor: Peixiang Zhao

TA: Yongjiang Liang

Due date: Monday, 02/29/2016, during class

Problem 1

[20 = 5 ∗ 4]

Consider 4 data points in a 2-D space: (1, 1), (2, 2), (3, 3), and (4, 4).

1. Calculate the covariance matrix Σ (Hint: you need to calculate the centered matrix Z first);

2. Calculate the correlation coefficient for the two dimensions;

3. Calculate the first and the second principal components;

4. What are the coordinates of the 4 data points projected to the 1-D space corresponding to the first principal component?
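A minimal pure-Python sketch for checking the hand calculations in Problem 1 follows. It makes three choices that the problem does not fix. First, it assumes the covariance convention Σ = (1/n) ZᵀZ; use n − 1 instead of n for the sample covariance. Second, it projects the *centered* points onto the first principal component. Third, it uses a closed-form eigendecomposition that only works for 2 × 2 symmetric matrices. The helper names are hypothetical. The sign of an eigenvector is arbitrary, so a flipped sign is equally valid.

```python
import math

def covariance_matrix(points):
    """Return the centered matrix Z and the 2x2 covariance matrix (1/n convention)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    # Centered data matrix Z: subtract the per-dimension mean from every point.
    z = [(x - mean_x, y - mean_y) for x, y in points]
    # Sigma = (1/n) Z^T Z; divide by n - 1 instead for the sample covariance.
    sxx = sum(zx * zx for zx, _ in z) / n
    syy = sum(zy * zy for _, zy in z) / n
    sxy = sum(zx * zy for zx, zy in z) / n
    return z, [[sxx, sxy], [sxy, syy]]

def eigen_2x2_symmetric(m):
    """Eigenpairs (value, unit vector) of a symmetric 2x2 matrix, largest value first."""
    a, b, c = m[0][0], m[0][1], m[1][1]
    if b == 0:  # already diagonal: the coordinate axes are the eigenvectors
        return sorted([(a, (1.0, 0.0)), (c, (0.0, 1.0))], key=lambda p: p[0], reverse=True)
    half_trace = (a + c) / 2
    radius = math.sqrt(((a - c) / 2) ** 2 + b ** 2)
    pairs = []
    for lam in (half_trace + radius, half_trace - radius):
        # (b, lam - a) solves (Sigma - lam * I) v = 0 whenever b != 0.
        vx, vy = b, lam - a
        norm = math.hypot(vx, vy)
        pairs.append((lam, (vx / norm, vy / norm)))
    return pairs

points = [(1, 1), (2, 2), (3, 3), (4, 4)]
z, sigma = covariance_matrix(points)
rho = sigma[0][1] / math.sqrt(sigma[0][0] * sigma[1][1])  # correlation coefficient
(lam1, u1), (lam2, u2) = eigen_2x2_symmetric(sigma)
# 1-D coordinates: dot product of each centered point with the first principal component.
projection = [zx * u1[0] + zy * u1[1] for zx, zy in z]

print("Sigma =", sigma)
print("rho =", rho)
print("PC1:", lam1, u1, "PC2:", lam2, u2)
print("1-D coordinates:", projection)
```

If the uncentered points are projected instead, every coordinate shifts by the same constant, namely the mean projected onto u1.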

Problem 2

[10 = 3 + 5 + 2]

Use the following methods to normalize this group of data: 200, 300, 400, 600, 1000.

1. min-max normalization by setting min = 0 and max = 1;

2. z-score normalization;

3. normalization by decimal scaling.
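A small Python sketch of the three normalizations in Problem 2 follows. Two of its conventions are assumptions, not something the problem fixes. First, `z_score` uses the population standard deviation unless `sample=True`. Second, `decimal_scaling` only searches for j ≥ 0. The function names are hypothetical.

```python
import math

data = [200, 300, 400, 600, 1000]

def min_max(values, new_min=0.0, new_max=1.0):
    """Linearly map [min(values), max(values)] onto [new_min, new_max]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) * (new_max - new_min) + new_min for v in values]

def z_score(values, sample=False):
    """(v - mean) / std; population std by default, sample std if sample=True."""
    n = len(values)
    mean = sum(values) / n
    ss = sum((v - mean) ** 2 for v in values)
    std = math.sqrt(ss / (n - 1 if sample else n))
    return [(v - mean) / std for v in values]

def decimal_scaling(values):
    """Return (j, scaled) where scaled = v / 10**j and j >= 0 is the smallest value
    such that every |scaled value| < 1."""
    max_abs = max(abs(v) for v in values)
    j = 0
    while max_abs / 10 ** j >= 1:
        j += 1
    return j, [v / 10 ** j for v in values]

print(min_max(data))
print(z_score(data))
print(decimal_scaling(data))
```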

Problem 3

[10 = 5 ∗ 2]

Suppose a group of 12 sales price records has been sorted as follows: 5, 10, 11, 13, 15, 35, 50, 55, 72, 92, 204, 215. Partition them into three bins by each of the following methods:

1. equal-frequency (equal-depth) partitioning;

2. equal-width partitioning.
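A sketch of both partitioning methods in Problem 3 follows, using hypothetical helper names. The equal-width bins are assumed to be half-open, [min + i·w, min + (i+1)·w), except for the last bin, which also includes the maximum. A different boundary convention can change which bin an edge value falls into. The equal-frequency helper assumes the input is already sorted, as the problem states.

```python
records = [5, 10, 11, 13, 15, 35, 50, 55, 72, 92, 204, 215]

def equal_frequency_bins(sorted_values, k):
    """Split already-sorted values into k bins holding (nearly) the same count."""
    n = len(sorted_values)
    bins = [[] for _ in range(k)]
    for i, v in enumerate(sorted_values):
        bins[i * k // n].append(v)
    return bins

def equal_width_bins(values, k):
    """Split [min, max] into k intervals of width (max - min) / k (assumes max > min)."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / k
    bins = [[] for _ in range(k)]
    for v in values:
        # The maximum would map to index k, so clamp it into the last bin.
        idx = min(int((v - lo) / width), k - 1)
        bins[idx].append(v)
    return bins, width

print(equal_frequency_bins(records, 3))
print(equal_width_bins(records, 3))
```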


Problem 4

[15 = 7.5 ∗ 2]

The Apriori algorithm makes use of the prior knowledge of subset support properties.

1. Given a frequent itemset l and a subset s of l, prove that the confidence of the rule s′ → (l − s′) cannot be more than the confidence of s → (l − s), where s′ is a subset of s;

2. A partitioning variation of Apriori subdivides the transactions of a database D into n nonoverlapping partitions. Prove that any itemset that is frequent in D must be frequent in at least one partition of D.
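Both properties in Problem 4 can be sanity-checked by brute force. The sketch below is not a proof. It only tests the two claims exhaustively on one small dataset, the Table 1 transactions from Problem 5, and on a few contiguous partitionings. The helper names are hypothetical. Relative minsup is kept as an exact `Fraction` so that floating-point rounding cannot flip a comparison at the threshold.

```python
from fractions import Fraction
from itertools import combinations

# Table 1 transactions (Problem 5), reused here only as test data.
transactions = [frozenset(t) for t in ("abde", "bcd", "abde", "acde", "bcde",
                                       "bde", "cd", "abc", "ade", "bd")]
ITEMS = sorted(set().union(*transactions))

def support(itemset, db):
    """Number of transactions in db that contain every item of itemset."""
    return sum(1 for t in db if itemset <= t)

def all_itemsets(items, min_size=1):
    for r in range(min_size, len(items) + 1):
        for combo in combinations(sorted(items), r):
            yield frozenset(combo)

def proper_subsets(itemset):
    """Non-empty proper subsets of itemset."""
    return [s for s in all_itemsets(itemset) if s != itemset]

def check_confidence_property(db, min_count):
    """Part 1: conf(s' -> l - s') <= conf(s -> l - s) for s' in s in l, l frequent."""
    for l in all_itemsets(ITEMS, min_size=2):
        if support(l, db) < min_count:
            continue
        for s in proper_subsets(l):
            conf_s = support(l, db) / support(s, db)
            for s_prime in proper_subsets(s):
                if support(l, db) / support(s_prime, db) > conf_s:
                    return False
    return True

def check_partition_property(db, minsup, n_parts):
    """Part 2: an itemset frequent in db is frequent in at least one partition."""
    size = -(-len(db) // n_parts)  # ceiling division; yields at most n_parts chunks
    parts = [db[i:i + size] for i in range(0, len(db), size)]
    for x in all_itemsets(ITEMS):
        if support(x, db) >= minsup * len(db):
            if not any(support(x, p) >= minsup * len(p) for p in parts):
                return False
    return True

print(check_confidence_property(transactions, min_count=3))
print(all(check_partition_property(transactions, Fraction(3, 10), n) for n in range(1, 6)))
```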

Problem 5

[30 = 12 + 2 + 2 + 2 + 12]

The Apriori algorithm uses a candidate generation and frequency counting strategy for frequent itemset mining. Candidate itemsets of size (k + 1) are created by joining a pair of frequent itemsets of size k. A candidate is discarded if any one of its subsets is found to be infrequent during the candidate pruning step. Suppose the Apriori algorithm is applied to the transaction database shown in Table 1 with minsup = 30%, i.e., any itemset occurring in fewer than 3 transactions is considered to be infrequent.
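The candidate generation and pruning described above can be sketched in Python on the Table 1 transactions. This minimal sketch assumes the common sorted-prefix join: two frequent k-itemsets are joined when they agree on their first k − 1 items in alphabetical order. It labels each non-empty itemset F, I, or N as in part 1 and tallies the labels. The empty set is left out, so whether to count it in the percentages of parts 2 to 4 is a convention to settle separately. The function names are hypothetical.

```python
from itertools import combinations

# Table 1 transactions; an itemset is frequent if it occurs in at least 3 of them.
transactions = [frozenset(t) for t in ("abde", "bcd", "abde", "acde", "bcde",
                                       "bde", "cd", "abc", "ade", "bd")]
MIN_COUNT = 3

def support(itemset):
    return sum(1 for t in transactions if itemset <= t)

def apriori_labels():
    """Label each non-empty itemset 'F' (frequent), 'I' (infrequent candidate) or 'N' (never a candidate)."""
    items = sorted(set().union(*transactions))
    labels = {}
    candidates = [frozenset([i]) for i in items]  # every 1-itemset is a candidate
    k = 1
    while candidates:
        frequent = []
        for c in candidates:  # support counting
            if support(c) >= MIN_COUNT:
                labels[c] = "F"
                frequent.append(c)
            else:
                labels[c] = "I"
        # Join step: merge two frequent k-itemsets that share their first k - 1 items.
        sorted_freq = sorted(tuple(sorted(f)) for f in frequent)
        joined = set()
        for x, y in combinations(sorted_freq, 2):
            if x[:-1] == y[:-1]:
                joined.add(frozenset(x) | frozenset(y))
        # Prune step: drop a (k + 1)-candidate if any of its k-subsets is not frequent.
        frequent_set = set(frequent)
        candidates = [c for c in joined
                      if all(frozenset(s) in frequent_set for s in combinations(sorted(c), k))]
        k += 1
    # Any itemset never counted was never a candidate (including pruned ones).
    for r in range(1, len(items) + 1):
        for combo in combinations(items, r):
            labels.setdefault(frozenset(combo), "N")
    return labels

labels = apriori_labels()
counts = {tag: sum(1 for v in labels.values() if v == tag) for tag in "FIN"}
print(counts)
for itemset in sorted(labels, key=lambda s: (len(s), sorted(s))):
    print("".join(sorted(itemset)), labels[itemset])
```

A candidate generated by the join and then removed in the prune step is labelled N because its support is never counted.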

Table 1: A Sample of Market Basket Transactions

| Transaction ID | Items Bought |
|---|---|
| 1 | {a, b, d, e} |
| 2 | {b, c, d} |
| 3 | {a, b, d, e} |
| 4 | {a, c, d, e} |
| 5 | {b, c, d, e} |
| 6 | {b, d, e} |
| 7 | {c, d} |
| 8 | {a, b, c} |
| 9 | {a, d, e} |
| 10 | {b, d} |

1. Draw an itemset lattice representing the transaction database in Table 1. Label each node in the lattice with one of the following letters:

• N: if the itemset is not considered to be a candidate itemset by the Apriori algorithm;

• F: if the itemset is frequent;

• I: if the candidate itemset is found to be infrequent after support counting.


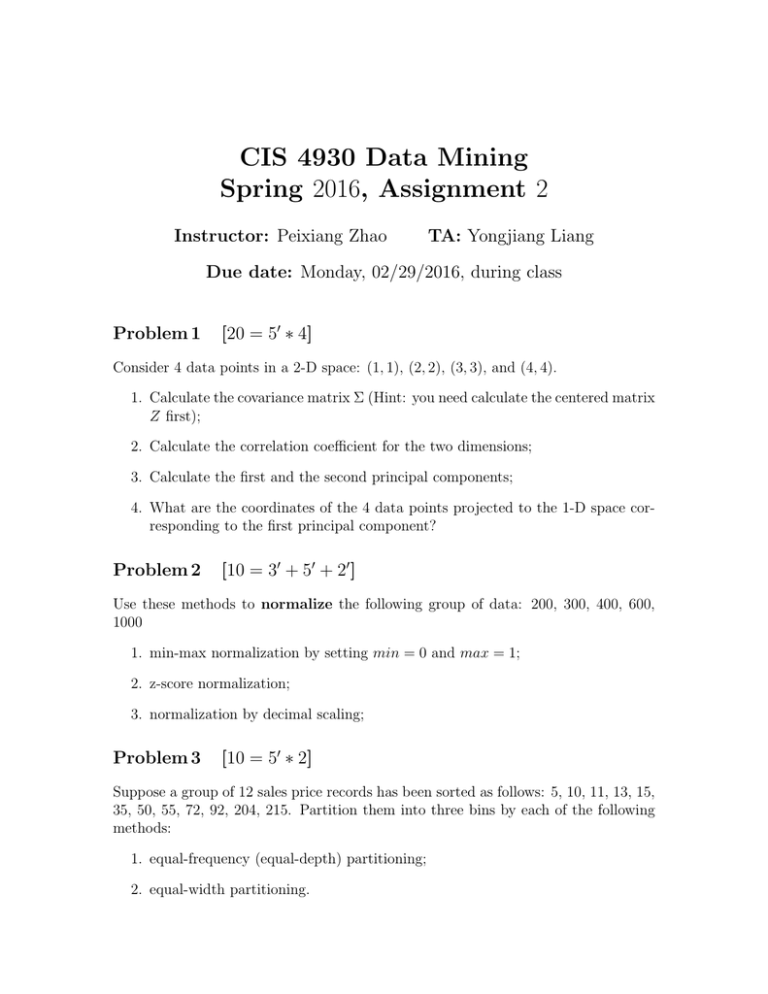

Figure 1: FP-tree of a Transaction Database.

2. What is the percentage of frequent itemsets (w.r.t. all itemsets in the lattice)?

3. What is the pruning ratio of the Apriori algorithm on this database? (The pruning ratio is defined as the percentage of itemsets not considered to be a candidate.)

4. What is the false alarm rate? (The false alarm rate is the percentage of candidate itemsets that are found to be infrequent after performing support counting.)

5. Redraw the itemset lattice representing the transaction database in Table 1. Label each node with the following letter(s):

• M: if the node is a maximal frequent itemset;

• C: if it is a closed frequent itemset;

• N: if it is frequent but neither maximal nor closed;

• I: if it is infrequent.
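For part 5, a brute-force sketch over all 31 non-empty itemsets of Table 1 is enough. It assumes the usual definitions. A frequent itemset is maximal if no proper superset is frequent. It is closed if no proper superset has the same support. Every maximal frequent itemset is also closed, so such nodes are tagged "MC". The helper names are hypothetical.

```python
from itertools import combinations

transactions = [frozenset(t) for t in ("abde", "bcd", "abde", "acde", "bcde",
                                       "bde", "cd", "abc", "ade", "bd")]
MIN_COUNT = 3  # minsup = 30% of 10 transactions
items = sorted(set().union(*transactions))

def support(itemset):
    return sum(1 for t in transactions if itemset <= t)

# Brute force is fine here: the lattice has only 2**5 - 1 = 31 non-empty itemsets.
all_itemsets = [frozenset(c) for r in range(1, len(items) + 1)
                for c in combinations(items, r)]
sup = {s: support(s) for s in all_itemsets}

def label(s):
    """'I' if infrequent; otherwise 'M' and/or 'C' tags, or 'N' if neither applies."""
    if sup[s] < MIN_COUNT:
        return "I"
    supersets = [t for t in all_itemsets if s < t]
    maximal = all(sup[t] < MIN_COUNT for t in supersets)
    closed = all(sup[t] < sup[s] for t in supersets)
    tags = ("M" if maximal else "") + ("C" if closed else "")
    return tags or "N"

labels = {s: label(s) for s in all_itemsets}
for s in sorted(all_itemsets, key=lambda x: (len(x), sorted(x))):
    print("".join(sorted(s)), sup[s], labels[s])
```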

Problem 6

[15 = 5 ∗ 3]

A database with 150 transactions has its FP-tree shown in Figure 1. Let the relative minsup = 0.4 and minconf = 0.7.

1. Show c’s projected database;

2. Present all frequent 3-itemsets and 2-itemsets;

3. Show all the association rules of the form XY → Z, together with their support and confidence values. Here X, Y, and Z are items in the transaction database.
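Figure 1 is only available as an image, so the sketch below runs on a hypothetical stand-in: the Table 1 transactions, with Problem 6's relative minsup = 0.4 and minconf = 0.7. The numbers it prints therefore do not answer Problem 6 itself. All names are hypothetical.

The sketch does two things:

- **c's projected database.** It takes the prefix of each transaction containing c, in FP-tree item order. That order is descending support with ties broken alphabetically, which is an assumption. Aggregating these prefixes gives the same conditional pattern base as following c's node-links in an FP-tree.
- **Rules.** It lists the rules XY → Z that can be formed from the frequent 3-itemsets.

```python
from collections import Counter
from itertools import combinations

# Hypothetical stand-in data (Table 1), since Figure 1's tree is not reproduced as text.
transactions = [frozenset(t) for t in ("abde", "bcd", "abde", "acde", "bcde",
                                       "bde", "cd", "abc", "ade", "bd")]
MIN_SUP = 0.4   # relative minsup, as in Problem 6
MIN_CONF = 0.7  # minconf, as in Problem 6
min_count = MIN_SUP * len(transactions)

item_count = Counter(i for t in transactions for i in t)
# FP-tree item order: frequent items by descending support, ties broken alphabetically.
order = sorted((i for i in item_count if item_count[i] >= min_count),
               key=lambda i: (-item_count[i], i))
rank = {i: r for r, i in enumerate(order)}

def projected_database(item):
    """Prefix paths (in FP-tree order) of the transactions containing `item`, with counts."""
    paths = Counter()
    for t in transactions:
        if item in t:
            paths[tuple(i for i in order if i in t and rank[i] < rank[item])] += 1
    return paths

def support(itemset):
    return sum(1 for t in transactions if itemset <= t)

def rules_xy_to_z():
    """(XY, Z, support, confidence) for each frequent 3-itemset split as XY -> Z with conf >= MIN_CONF."""
    rules = []
    for triple in combinations(order, 3):
        xyz = frozenset(triple)
        sup_xyz = support(xyz)
        if sup_xyz < min_count:
            continue
        for z in sorted(xyz):
            xy = xyz - {z}
            conf = sup_xyz / support(xy)
            if conf >= MIN_CONF:
                rules.append(("".join(sorted(xy)), z, sup_xyz / len(transactions), conf))
    return rules

print("c's projected database:", dict(projected_database("c")))
rules = rules_xy_to_z()
for xy, z, s, conf in rules:
    print(f"{xy} -> {z}: support={s:.2f}, confidence={conf:.2f}")
```

The same steps apply to Figure 1 once its branches are written out as prefix paths with their counts.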
