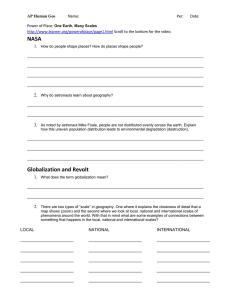

Document 12246630
