Data Warehousing: A Vital Role in the Pursuit of Competitive Advantage Abstract

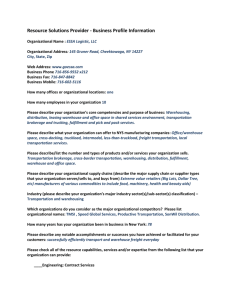

advertisement

Data Warehousing: A Vital Role in the Pursuit of Competitive Advantage

Kimberly Merritt
Dr. Richard Braley
Cameron University

Abstract

Despite vast quantities of data collected in organizations today, many managers have difficulty obtaining the information they need for decision-making. This problem has given rise to the development of data warehouses. Data warehouses support managers’ complex decision making through applications such as the analysis of trends, target marketing, competitive analysis, and many others. Data warehousing has evolved to meet the needs of decision-makers without disturbing existing operational processing. In the past, an organization focused upon information concerning customers, products, inventories, sales and other superficial profile information bites that were readily available. Now, by utilizing data warehousing and data mining technologies, an organization is able to focus upon “affinities” of customers, relationships between marketed products, and minimal inventory-storage windows that maximize movement from the inventory storage area to the shelf and sales. Data about sales, and what is done with data about sales, has changed the face of retail and wholesale marketing. This paper will investigate the strategic importance of data warehouses through an investigation of the applicable technology, growth of the market, and the factors spurring that growth. In addition, future trends in this rapidly expanding segment of information systems will be explored and the resulting strategic implications for modern retail businesses will be addressed.

Introduction

Corporations are increasingly dependent on information technology as a means by which to achieve and retain competitive advantage. In many cases, information technology is no longer seen as a cost of doing business, but rather is being exploited to ensure the success and survival of the firm.
In the increasingly complex business world, massive amounts of relevant internal and external data exist that, if captured, warehoused, mined and analyzed, will provide valuable guidance in the increasingly complex realm of managerial decision-making. And yet, modern organizations are said to be drowning in data but starving for information. Organizations are anxious to take advantage of vast stores of historical operational data, especially information about many aspects of their current business activities.

In the past, an organization focused upon information concerning customers, products, inventories, sales and other superficial profile information bites that were readily available. Now, with data warehousing and data mining, an organization focuses upon “affinities” of customers, relationships between marketed products, and minimal inventory-storage windows that maximize movement from the inventory storage area to the shelf and sales. Data about sales, and what is done with data about sales, has changed the face of retail and wholesale marketing. Using data warehousing and data mining, Wal-Mart Corporation maintains a record of every cash register receipt, from every customer transaction, twenty-four hours every day, every day of the year, from every Wal-Mart store. Wal-Mart’s motto concerning data mining is this: Every store will be treated as if it were the only store owned.

This paper will investigate the strategic importance of data warehouses through an investigation of the applicable technology, growth of the market, and the factors spurring that growth. In addition, future trends in this rapidly expanding segment of information systems will be explored and the resulting strategic implications for modern businesses will be addressed.

Description of Data Warehousing

Until recently, companies did not have enough computing power at their disposal to be able to take advantage of stored data.
Now, however, the emergence of massive parallel processing is enabling organizations to analyze enormous amounts of historical data through the development of data warehouses. Parallel processing has emerged as the supercomputing system of choice for most massive data analysis systems. Older, vector-driven supercomputing has been superseded because of the reliability of parallel processing using hard disk technology called Redundant Array of Independent Disks (or RAID). Where the older systems required large amounts of time for searching and correlating databases, massive parallel processing (MPP) holds the data at multiple hard disk locations, with data mined at all locations simultaneously and then compared with data warehoused and mined throughout the system.

MPP systems utilize hundreds or thousands of hard drives for storage. Multiple processing units (CPUs) break down a query into smaller parts and work on it simultaneously. Coordination of these multiple processors requires additional system capability. The work is assigned to the CPUs by one specialized processing unit called a parsing engine. The CPUs perform their assigned duties and return the result of the analysis back to the parsing engine.

Decisions are then made from the answers to the queries. For example, toothpicks might be shelved next to paper plates because data analysis showed that 99% of the customers who purchased paper plates also had a box of toothpicks in their basket. Hand cream might move to the gardening area during a single month out of a year because warehoused and mined data showed that 88% of customers who purchased gardening shovels during the month of April also purchased a bottle of hand cream. Those relationships are called “affinities.” The objective of an “affinity” analysis is to make it as easy as possible for the customer to arrive in the retail outlet, move to their purchase arena and find all of the items they want to purchase.
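The affinity percentages described above can be illustrated with a minimal sketch. It assumes that an affinity is simply the share of baskets containing one item that also contain another; the function name `affinity` and the sample receipts are hypothetical and invented for illustration, not taken from any retailer's system.

```python
from collections import Counter
from itertools import permutations


def affinity(baskets):
    """Map (a, b) to the share of baskets containing a that also contain b."""
    item_counts = Counter()
    pair_counts = Counter()
    for basket in baskets:
        items = set(basket)  # count each item once per basket
        item_counts.update(items)
        # Every ordered pair (a, b) of distinct items in the same basket
        pair_counts.update(permutations(items, 2))
    return {
        (a, b): pair_counts[(a, b)] / item_counts[a]
        for (a, b) in pair_counts
    }


# Hypothetical cash register receipts (illustrative data only)
receipts = [
    ["paper plates", "toothpicks"],
    ["paper plates", "toothpicks", "napkins"],
    ["paper plates", "napkins"],
    ["garden shovel", "hand cream"],
]

scores = affinity(receipts)
print(scores[("paper plates", "toothpicks")])  # share of plate buyers who also bought toothpicks
```

In a production warehouse the same counts would be computed over millions of receipts, which is where the parallel query processing described above becomes necessary.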
Helping the customer to easily make purchases is the goal of Wal-Mart Corporation, the largest retailer in the world.

This recent, dramatic increase in processing power has empowered corporations to consider information processing opportunities that were previously unavailable. Data warehousing has been called the center of the architecture for information systems of the future (Inmon, 1995). McFadden, Hoffer and Prescott (1999) define a data warehouse as, “An integrated decision support database whose content is derived from the various operational databases” (p. 602). Inmon (1995) defines a data warehouse as a subject-oriented, integrated, time-variant, nonvolatile collection of data used in support of managerial decision-making processes. These are the fundamental data warehousing concepts:

Subject-oriented: A data warehouse is organized around the key subjects (or high-level entities) of the enterprise. Major subjects may include customers, patients, students, and products.
Integrated: The data housed in the data warehouse are defined using consistent naming conventions, formats, encoding structures, and related characteristics.
Time-variant: Data in the data warehouse contain a time dimension so that they may be used as a historical record of the business.
Nonvolatile: Data in the data warehouse are loaded and refreshed from operational systems, but cannot be updated by end users (Inmon & Hackathorn, 1994).

The process of obtaining information from the warehouse and exploiting it for the good of the firm is data mining (Barquin, 1996; Gardner, 1998). The construction and use of a data warehouse affords a number of potential advantages to the firm, including better inventory management, more effectively targeted sales and marketing efforts, and improved customer satisfaction (Whiting, 1999). However, mining the data often forces the manager to resolve interpretation conflicts. Minute relationships emerge that harbor both compelling and irrelevant information.
Potential information advantages surface amid a sea of potentially useless information.

Events Spurring the Increased Use of Data Warehousing

The concept of a data warehouse is a fairly new one. According to McFadden, Hoffer, and Prescott (1999), Devlin and Murphy published the first article describing the architecture of a data warehouse in 1988 (Devlin & Murphy, 1988); Inmon published the first book on data warehousing in 1992 (Inmon, 1992). Hackathorn (1993) described the rise of data warehousing and the proliferation of its use as the result of many separate advances in information technology occurring over many years. In addition to the evolution from vector-driven computing to RAID technology discussed above, these factors are:

1. Improvements in database technology, particularly the development of the relational data model and relational database management systems (DBMS)
2. The emergence of end-user computing, facilitated by powerful, intuitive computer interfaces and tools
3. Advances in middleware products that enable enterprise database connectivity across heterogeneous platforms

Classifications of Data Warehouses

The primary business driver behind the creation of data warehousing is the recognition that there is a distinct difference between operational data and decision support data. Operational data is used to support the day-to-day operations of the firm and is focused on the functional processing requirements of a business user (Gardner, 1998). On the other hand, informational data is generally subject oriented, with an enterprise view, providing information required for sound, strategic business decisions (Gardner, 1998). Informational data may contain historical data as well as operational data from a number of sources, both internal and external. When firms relied on operational data, they built operational databases. These traditional databases were designed based on relational database design principles.
Data was represented as tables, and the goal for the database was to provide short update and query transaction time (Benander & Benander, 2000). The physical structure of the operational database was designed to ensure efficiency and integrity over small sets of clearly related data. In contrast, the data warehouse is designed to support data analysis (Benander & Benander, 2000). The environment is one of ad hoc queries and unstructured data.

There are three main types of data warehouses: operational data stores, enterprise data warehouses, and data marts. Operational data stores (ODS) are the latest development in data warehousing (Benander & Benander, 2000). An ODS is used to support customer information files and contains all information pertaining to current, prospective, and past customers. The ODS is also used to track all recent customer activity and marketing interactions. This data warehouse application is especially valuable in a customer-oriented organizational structure.

An enterprise data warehouse (EDW) is larger in scope than an ODS. This design also contains product, accounting, and organizational information as well as customer information (Benander & Benander, 2000). An EDW may contain up to five years of data. Because of the size and complexity of an EDW, most are not accessed by end users, but are rather used to populate data marts.

A data mart is a subject-oriented or department-oriented data warehouse whose scope is much smaller and more focused than an EDW (McFadden, Hoffer, & Prescott, 1999; Benander & Benander, 2000). The smaller scale of the data mart allows end users to access data pertinent to the area in which they work without the size and complexity associated with an EDW.

Applicable Technology

As previously mentioned, corporations frequently have tremendous amounts of data but little information.
There are many causes for this inconsistency, but two major ones are disparate legacy systems and the practice of developing systems with a focus on operational data. The resulting information gap leaves managers with no support for decision-making. The construction of a data warehouse bridges this information gap by pulling together data from disparate systems and presenting usable information to decision makers. There is, however, a risk in that massive data correlations amplify insignificant relationships, making them appear to be fundamental when they are actually farcical.

Multiple systems architectures exist for the development of a data warehouse. A two-tier architecture involves the operational source systems and the data warehouse. Users query the data warehouse directly (McFadden, Hoffer, & Prescott, 1999). A three-tier architecture exists when the operational source systems load a data warehouse which in turn loads the data marts (McFadden, Hoffer, & Prescott, 1999). A four-tier model results from server-based information access tools that let Internet users interactively query a database and view the results as HTML pages. The four tiers are the web browser, the web server, application servers, and the data warehouse (“Trends,” 1999).

The major steps in the process of building and exploiting a data warehouse depend on the nature of the architecture employed. However, in general terms, Gallegos (1999) defines the steps as data acquisition, data storage, and data access.

Data Acquisition

Data acquisition includes accumulating, cleansing, and standardizing the data (Gallegos, 1999) obtained from operational source systems, also called legacy systems (Kimball, 1998). McFadden, Hoffer, and Prescott (1999) refer to this process as data reconciliation and utilize the terms capture, scrub, and transform.
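The capture, scrub, and transform steps named above can be sketched as three small functions. This is a minimal illustration under stated assumptions, not the method of any cited author: the function names, the field names (`name`, `state`, `amount`, `clerk`), and the sample records are all hypothetical.

```python
def capture(source_rows, wanted_fields):
    """Capture: extract only the relevant fields from each source record."""
    return [{f: row.get(f) for f in wanted_fields} for row in source_rows]


def scrub(rows):
    """Scrub: trim whitespace, drop rows with empty fields, drop duplicates."""
    seen = set()
    clean = []
    for row in rows:
        row = {k: v.strip() if isinstance(v, str) else v for k, v in row.items()}
        if any(v in (None, "") for v in row.values()):
            continue  # an empty field makes the record unusable here
        key = tuple(sorted(row.items()))
        if key in seen:
            continue  # exact duplicate of a record already kept
        seen.add(key)
        clean.append(row)
    return clean


def transform(rows):
    """Transform: convert to the (assumed) warehouse naming and formats."""
    return [
        {
            "customer": r["name"].title(),
            "state": r["state"].upper(),
            "amount": float(r["amount"]),
        }
        for r in rows
    ]


# Hypothetical operational records (illustrative data only)
source = [
    {"name": "ann lee ", "state": "ok", "amount": "12.50", "clerk": "x1"},
    {"name": "ann lee", "state": "ok", "amount": "12.50", "clerk": "x2"},
    {"name": "", "state": "tx", "amount": "3.00", "clerk": "x3"},
    {"name": "bo chen", "state": "tx", "amount": "7", "clerk": "x1"},
]

loaded = transform(scrub(capture(source, ["name", "state", "amount"])))
for record in loaded:
    print(record)
```

Real cleansing tools go well beyond exact-match deduplication, for example by matching near-duplicate names and addresses, but the three-stage shape is the same.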
Regardless of the particular term used, the process involves extracting the relevant data from the source files; removing errors, duplicates, and empty fields, and generally improving the quality of the data; and preparing or converting the data into the format desired for the data warehouse through various functions such as selection and joining. Some authors suggest summarization of the data (Gallegos, 1999), while others suggest maintaining detail-level data (Gardner, 1998). Regardless of the method employed, the focus remains upon keeping “relevant” data relevant and minimizing the appearance of the previously mentioned impurities. Therefore, the value of a data warehouse is dependent on (1) the validity of the data it contains, and (2) the relevancy of the interpretation of that data.

In a recent survey, more than 80% of corporate executives reported that improving customer data quality was a top priority (Faden, 2000). Data cleansing was once an obscure, specialized technology. However, with the increasing focus on the accuracy of data, cleansing has become a core requirement for data warehousing. The technology used for data cleansing was originally designed for use with address lists. Increasingly, the software is also used to help identify other relationships in a company’s data and for adding information from other sources during the cleansing process.

Following acquisition and cleansing, the acquired data is moved to a data staging area, a storage area and set of processes that prepare the source data for use in the data warehouse (Kimball, 1998). The data staging area may be spread over a number of machines.

Data Storage

The acquired data then populates the actual data warehouse on presentation servers. A presentation server is the target physical machine on which the data warehouse data is organized and stored for direct querying by end users, report writers, and other applications (Kimball, 1998). Indexes are also created (McFadden, Hoffer, & Prescott, 1999).
If a three-tier architecture is employed, the data marts will be populated from the data warehouse.

The most commonly used data model for data storage is the star schema (McFadden, Hoffer, & Prescott, 1999; Benander & Benander, 2000), also known as the dimensional model (Kimball, 1998). Another possible storage configuration is a snowflake schema. The strength of either storage schema is its ability to identify affinities by associating pieces of data in unique and multiple ways. Benander and Benander (2000) describe a star schema as including a central “fact” table that contains a large number of rows that correspond to observed business events or facts (factual or quantitative data about the business). Surrounding the “fact” table are multiple “dimension” tables that contain classification and aggregation information about the central fact rows (descriptive data). The dimension tables have a one-to-many relationship with rows in the central fact table. This design provides extremely fast query response time, simplicity, and ease of maintenance for read-only database structures.

Data Access

The existence of a data warehouse does not guarantee a company a strategic advantage. Once the data warehouse is constructed and populated, the challenge becomes access and interpretation of the data and transformation into useful information and knowledge (Chen & Sakaguchi, 2000). Data access refers to the methods used by end users to access data in the data warehouse. Data access can result from traditional query tools, on-line analytical processing (OLAP) tools, data-mining tools, and data-visualization tools (McFadden, Hoffer, & Prescott, 1999; Kimball, 1998), each of which is discussed in the following paragraphs.

Traditional query tools include reporting tools, spreadsheets, and personal computer databases populated from the data warehouse.
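The star schema described above, and the kind of query a traditional reporting tool might issue against it, can be sketched with Python's built-in `sqlite3` module. The table names (`fact_sales`, `dim_product`, `dim_store`), the columns, and the sample rows are assumptions made for illustration; a real warehouse would have many more dimensions and far more fact rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables: descriptive data used to classify and aggregate facts
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, name TEXT, category TEXT);
CREATE TABLE dim_store (store_id INTEGER PRIMARY KEY, city TEXT);

-- Central fact table: one row per observed business event
CREATE TABLE fact_sales (
    product_id INTEGER REFERENCES dim_product(product_id),
    store_id   INTEGER REFERENCES dim_store(store_id),
    sale_month TEXT,
    units      INTEGER,
    revenue    REAL
);

-- Hypothetical sample data (illustrative only)
INSERT INTO dim_product VALUES (1, 'garden shovel', 'gardening'), (2, 'hand cream', 'health');
INSERT INTO dim_store VALUES (1, 'Lawton'), (2, 'Tulsa');
INSERT INTO fact_sales VALUES
    (1, 1, '1999-04', 10, 150.0),
    (2, 1, '1999-04', 8, 32.0),
    (1, 2, '1999-04', 5, 75.0),
    (2, 2, '1999-05', 3, 12.0);
""")

# Each dimension row relates to many fact rows (one-to-many), so a report
# joins the fact table to its dimensions and aggregates the measures.
rows = conn.execute("""
    SELECT p.category, s.city, SUM(f.units), SUM(f.revenue)
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_id = f.product_id
    JOIN dim_store AS s ON s.store_id = f.store_id
    WHERE f.sale_month = '1999-04'
    GROUP BY p.category, s.city
    ORDER BY p.category, s.city
""").fetchall()

for row in rows:
    print(row)
```

The query touches the large fact table once and reaches each dimension through a single key join, which is the property that gives the design its simplicity and query speed.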
On-line analytical processing (OLAP) tools involve the use of a set of graphical tools that provides users with multidimensional views of data and allows users to analyze data based on windowing techniques (McFadden, Hoffer, & Prescott, 1999). Users can slice data to produce a simple two-dimensional table or view, or can drill the data down to a finer level of detail (Gallegos, 1999).

Data mining refers to the discovery of knowledge from the data contained in the warehouse (McFadden, Hoffer, & Prescott, 1999). Some authors consider all data access to be data mining, while others refer to a more limited definition of data mining. The tools associated with data mining can be generally divided into two categories, statistical models and machine learning (Chen & Sakaguchi, 2000; McFadden, Hoffer, & Prescott, 1999). Statistical models contribute to evaluating hypotheses and results and applying those results. Machine learning is a branch of leading-edge artificial intelligence programming. It suggests using a training set of data from which the data mining system learns and finds the parameters for models. According to Chen and Sakaguchi (2000), this inductive reasoning approach can be used for a number of systems, including neural networks, decision trees, and genetic algorithms.

Finally, data access can be facilitated through the use of data visualization tools. These tools are graphical and multimedia software products that provide for visual representation of data (McFadden, Hoffer, & Prescott, 1999). Trends and exceptions are often easier to identify when the data is presented graphically.

Growth of the Market

Although the concept of the data warehouse is barely ten years old, the evolution of the technology has been rapid. With the emergence of cost-effective MPPs using commercial off-the-shelf (COTS) disk drives with RAID III technology, vector systems diminished in value when compared to parallel processing manufacture, installation and start-up.
Data warehousing is now one of the hottest topics in information systems. The market segment for this technology is estimated at over $15 billion (Chen & Frolick, 2000). Currently, over 90 percent of larger companies either have a data warehouse or are starting one (McFadden, Hoffer, & Prescott, 1999). Burwen (1997) reported that 62 data warehousing projects showed an average return on investment of 321 percent, with an average payback of 2.73 years. Users also reported that expenditures on data warehousing technology were expected to reach nearly $200 billion by 2001.

Emerging Technologies and Future Trends

In only ten years, U.S. businesses have rapidly adopted data warehousing technology in an effort to exploit the strategic advantage offered by sophisticated decision support systems. However, there are many challenges inherent in the current technology. According to Chen and Frolick (2000), the drawbacks of the current approach to data warehousing include the expense of client/server infrastructure, the growth of a mobile workforce in which employees will no longer have continuous access to high-speed connections, and system compatibility. The future trends in this technology will address these current limitations.

Data Warehousing via Internet/Intranet

Web technology is increasing access to data warehouses. Data warehousing liberates information, and the Internet makes it easy and less costly to access information from anywhere at any time (Chen & Frolick, 2000). It is estimated that the marriage of these two technologies will make the Internet the primary decision support delivery platform by 2001 (Chen & Frolick, 2000; Chen & Sakaguchi, 2000). Web-based access to the data warehouse has significant advantages: capacity to deliver access to more users; simplicity of use and platform independence; and lower establishment and management costs (Booker, 1999; Chen & Frolick, 2000).
Not long ago, the concept of a data warehouse was known to only the most sophisticated user who accessed the warehouse through expensive, complex, client-side business intelligence applications (Booker, 1999). Almost covert in nature, these business intelligence operations persist as massive parallel processing extends beyond terascale computing to petascale computing. However, the web and related technology provide a method of granting access to these databases to hundreds or thousands of employees, regardless of the computing scale. Company intranets are being employed internally to raise visibility and maximize use of a data warehouse, while the Internet is being exploited externally to extend the benefits of a data warehouse beyond the company to customers, vendors and other partners (“Trends,” 1999; Booker, 1999).

This use of web technology has greatly affected the market for information access tools and will contribute to substantial growth of this market in the future. It has also increased the need for competent, trained programmers for warehousing systems. Additionally, end users now are less systems engineers and more inquisitive entrepreneurs. The emergence of browser- and Java-based analysis tools has reduced the complexity of data analysis and allows users to focus on data interpretation rather than on learning how to use the technology and the language the technology supports. The new web-based access tools are similar in function to the fat client/server systems, but are much simpler to use (Booker, 1999).

There are challenges to implementing web-based data warehousing. The first is user scalability (Chen & Frolick, 2000). It may be difficult to estimate the number of users who will be accessing the data warehouse concurrently. This has significant implications for the software and hardware chosen to support the data warehouse. The second challenge to the development of web-based data warehousing is speed (Chen & Frolick, 2000).
The time consumed by storage-access-query-response cycles is substantial. The complexity of user queries and the large amount of data involved further complicate the often-slow transfer speed associated with the Internet. The only viable solution with today’s technology is to eliminate multiplexing and packet-switching, along with their inherent data checks, and initiate data streams with prioritized data checks of specific data blocks, thus dramatically reducing transfer times. The integrity of the systems and their stored data must be relied upon, rather than relying on a process of checking and re-checking the data during transfer and storage. Selective sampling of data during transfer and selective sampling of stored data will result in faster transfer and storage speeds.

Finally, another challenge to web-based access to data warehouses is security (Chen & Frolick, 2000). Having access to data is not enough. The data must be reliable and therefore capable of generating quality information (McNee et al., 1998). As corporations consider granting external entities access to the data warehouse, the data must be near perfect (Davis, 1999). Currently, as many as 80 percent of U.S. corporations have suffered at least one type of data loss. Data integrity is threatened by unauthorized attempts to access private data, computer viruses that can corrupt data, and simple electronic malfunctions. In order to make good decisions, modern managers need access to complete information, and thus a data warehouse may need to provide access to external data as well as internal data (“Ten Mistakes,” 1999). Chen and Frolick (2000) suggest that security of web-based data warehouses is a matter of balancing the rights of authorized users while preventing the theft or corruption of the information.
Add to that the need for trusted recovery after natural disasters, the need to continuously update warehoused data, and the inherent vulnerability of web-based customers who purchase warehouse time, and security costs skyrocket for warehouse vendors.

In the future, access to the data warehouse will be granted to more and more internal users. Some users will require sophisticated access to allow for complex data analysis, while others will be satisfied with simpler access and predefined queries. Therefore, a combination of client applications is also a new possibility for firms. Those users who require sophisticated on-line analytical capabilities may need to retain a fat client/server application, while those users who require a simpler form of data acquisition and analysis can access the data warehouse through a web-based solution. This concept of dual routes of access is going to increase in importance as firms consider granting broader access to internal users (Booker, 1999). With that access, internal security loosens and vulnerability increases.

External accessibility will also increase because of the potential benefit that can be gained by allowing supply chain partners and customers into the data warehouse. Companies that leveraged the power of the web to provide access to the data warehouse internally are finding that sharing the same information externally can provide tremendous benefits as well (Davis, 1999). Customers allowed access to the data warehouse are empowered, while granting access to supply chain partners can result in a tighter, leaner, more responsive supply chain.

The Importance of Distributed Processing

Although the size of data warehouses is predicted to grow in the future, it is possible that source data will be obtained from input sources that are ever decreasing in size.
The expanding market of microprocessor-equipped devices with small databases will make it possible to gather data at sources never before considered (Whiting & Caldwell, 1999). Until recently, the lack of small database systems has hindered the widespread use of application-specific portable computing devices for collecting data and sending it back to a central data store for analysis. However, database vendors are developing and marketing “lite” versions of database products. Sybase, for example, has a database product that is only 50 Kbytes, or 1/20 the size of its full-size big brother. Other companies have released similar products (Whiting & Caldwell, 1999).

IBM is concentrating resources on the pervasive computing market. Company officials see three markets developing in this area: hardware and software components for device manufacturers; consulting and development work to support business customers; and systems for service providers building security, billing, and management infrastructures. IBM is also developing transcoding technology based on XML that will enable the synchronization of portable and embedded databases with company databases (Whiting & Caldwell, 1999).

The most exciting potential from the trend toward distributed processing lies in the ability to link it with the trend toward increasing the size of data warehouses. These two trends present the possibility of gathering data from mobile and embedded devices, then linking that data to a centralized database for analysis and mining: automated, continuous, massive data replacement from remote sources. Federal Express, already engaged in this practice, has already realized results (Whiting & Caldwell, 1999). Company officials have indicated that they no longer engage in mass marketing, but rather target customers specifically. Other firms that have invested in this technology are also reaping benefits.
Data warehouses will continue to evolve in the future and will be loaded with near real-time data (McNee et al., 1998) to give decision makers access to up-to-the-minute information. Past business entities had to automate, emigrate or evaporate. Future business entities must make massive data marketing commonplace, or perish.

Growth in the Size of Data Warehouses

Data warehouses are very large databases, but they are predicted to get even bigger (McNee et al., 1998). By 2001, the size of data warehouses is predicted to grow by 36 times, while the number of users is predicted to grow by 70 times (Gallegos, 1999).

Application Development

The state of technology in the data warehouse market has now reached a level of maturity sufficient to allow vendors to begin to develop packaged applications (“Trends,” 1999). Packaged applications may save time and money, but they may lack the flexibility of custom development. There is also a trend in the Enterprise Resource Planning (ERP) market to include data warehousing solutions as part of the ERP systems (“Trends,” 1999). These ERP solutions will be attractive to businesses because the vendor has already addressed many issues surrounding data integration, transformation, movement, and modeling.

The transaction processing systems of the past focused on maintaining internal record-oriented data that was relatively structured in nature. Modern executives rely on data that is often unstructured in nature (“Ten Mistakes,” 1999). For example, it may be necessary to store video, audio, and graphics. Future data warehouse systems will incorporate ever-increasing amounts of unstructured data in ever-increasing varieties of formats (McNee et al., 1998).

Conclusion

The potential benefits from sharing a data warehouse are enormous. However, a very efficient, reliable system must be in place before it is opened to customers and suppliers. A data warehouse has no value if the users cannot access the data.
Effective information access tools allow users to intuitively navigate through the database without the need to memorize methods or commands.

Despite vast quantities of data collected in organizations today, many managers have difficulty obtaining the information they need for decision-making. This problem has given rise to the development of data warehouses. Data warehouses support managers’ complex decision making through applications such as the analysis of trends, target marketing, competitive analysis, and many others. Data warehousing has evolved to meet the needs of decision-makers without disturbing existing operational processing. The process of developing a quality data warehouse is long and complicated; however, the potential returns to the firm are enormous. The future of business is uncertain; however, it is certain that to remain competitive, firms must invest in and commit to a form of data warehousing.

References

Barquin, R. (1996). On the first issue of the Journal of Data Warehousing. The Journal of Data Warehousing, 1, 2-6.
Benander, A., & Benander, B. (2000). Data warehouse administration and management. Information Systems Management, 17(1), 71-80.
Booker, E. (1999, June 28). Data warehousing – Unleash the Treasure Trove. InternetWeek, 41.
Burwen, M. (1997). Syndicated study of data warehousing market. DM Review, 7, 58-59.
Chen, L., & Frolick, M. (2000). Web-based data warehousing: Fundamentals, challenges, and solutions. Information Systems Management, 17(2), 80-86.
Chen, L., & Sakaguchi, T. (2000). Data mining methods, applications, and tools. Information Systems Management, 17(1), 65-70.
Davis, B. (1999, June 28). Data warehouses open up. InformationWeek, 42-48.
Devlin, B., & Murphy, P. (1988). An architecture for a business information system. IBM Systems Journal, 27(1), 60-80.
Faden, M. (2000, April 10).
Data cleansing helps e-businesses run more efficiently: Data mining and e-commerce have exposed problems with the management of information. InformationWeek, 781, 136-139.
Gallegos, F. (1999). Data warehousing: A strategic approach. Information Strategy: The Executive’s Journal, 16(1), 41-47.
Gardner, S. (1998). Building the data warehouse. Association for Computing Machinery, Communications of the ACM, 41(9), 52-60.
Hackathorn, R. (1993). Enterprise database connectivity. New York: John Wiley & Sons.
Inmon, W. (1992). Building the data warehouse. Wellesley, MA: QED Information Sciences.
Inmon, W. (1995). Tech topic: What is a data warehouse? Prism Solutions, Inc (On-line). Available: http://www.cait.wustl.edu/cait/papers/prism/vol1_no1.
Inmon, W., & Hackathorn, R. (1994). Using the data warehouse. New York: John Wiley & Sons.
Kimball, R., Reeves, L., Ross, M., & Thornthwaite, W. (1998). The data warehouse lifecycle toolkit: Expert methods for designing, developing, and deploying data warehouses. New York: John Wiley & Sons.
McFadden, F. R., Hoffer, J. A., & Prescott, M. B. (1999). Modern database management (5th ed.). Reading, MA: Addison-Wesley.
McNee, B., Percy, A., Fenn, J., Cassell, J., Hunter, R., Cohen, L., Keller, E., Goodhue, C., Scott, D., Tunick Morello, D., Magee, F., Whitten, D., Schlier, F., Baylock, J., West, M., & Berg, T. (1998). The industry trends scenario: Delivering business value through IT. GartnerGroup Strategic Analysis Report.
Ten mistakes to avoid. (1999). The Data Warehousing Institute (On-line). Available: http://www.dw-institute.com/papers/10mistks.htm.
Trends and developments in data warehousing. (1999, May 15). America’s Network, 22-24.
Whiting, R., & Caldwell, B. (1999, June 14). Data capture grows wider. InformationWeek, 59-72.