Document 12203348

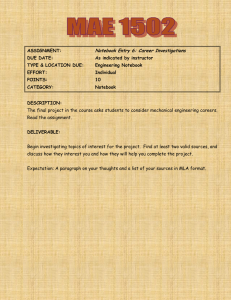
