Observational Cosmology R.H. Sanders

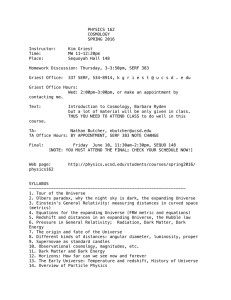
