Stat Mech: Problems II



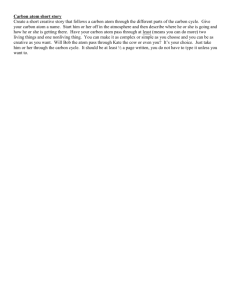

[2.1] A monatomic ideal gas in a piston is cycled around the path in the PV diagram in Fig. 3. Along leg a the gas cools at constant volume by connecting to a cold heat bath at a temperature $T_2$. Along leg b the gas heats at constant pressure by connecting to a hot heat bath at a temperature $T_1$, with $T_1 > T_2$. Along leg c the gas compresses at constant temperature while remaining connected to the bath at $T_1$. Using the ideal gas law $PV = Nk_BT$ and the energy relation $E = \tfrac{3}{2}Nk_BT$, answer the following.

Figure 3: P-V diagram

(a) Calculate the total amount of work $\Delta W$ done by the gas during one cycle. From the sign of $\Delta W$, deduce whether the system acts as an engine or a refrigerator.

(b) Show that an amount of heat $Q_2 = \tfrac{9}{2}P_0V_0$ is released from the gas to the cold heat bath along leg a.

(c) Show that an amount of heat $Q_1^b = \tfrac{15}{2}P_0V_0$ is absorbed by the gas from the hot heat bath along leg b.

(d) Show that an amount of heat $Q_1^c = 4P_0V_0\log 4$ is released from the gas to the hot heat bath along leg c.

(e) Confirm that energy conservation holds.

(f) Is the process reversible?
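Figure 3 is not reproduced here, so the sketch below assumes corner coordinates for the cycle: $(V_0, 4P_0)$, then leg a down to $(V_0, P_0)$, then leg b out to $(4V_0, P_0)$, then leg c back along the isotherm $PV = 4P_0V_0$. These corners are an assumption, chosen because they reproduce the heats quoted in parts (b) to (d). The sketch applies the first law $Q = \Delta E + W$ leg by leg in units where $P_0 = V_0 = 1$; $W$ is work done by the gas, and a positive $Q$ means heat absorbed by the gas.

```python
import math

# Assumed corners of the cycle in units of P0 and V0 (hypothetical: Figure 3
# is not reproduced, but these values give the heats quoted in (b)-(d)).
P_HIGH, P_LOW = 4.0, 1.0
V_SMALL, V_LARGE = 1.0, 4.0


def energy(p, v):
    """Monatomic ideal gas: E = (3/2) N k_B T = (3/2) P V."""
    return 1.5 * p * v


# Leg a: constant volume, pressure falls from P_HIGH to P_LOW (no work done).
W_a = 0.0
Q_a = energy(P_LOW, V_SMALL) - energy(P_HIGH, V_SMALL) + W_a

# Leg b: constant pressure, volume grows from V_SMALL to V_LARGE.
W_b = P_LOW * (V_LARGE - V_SMALL)
Q_b = energy(P_LOW, V_LARGE) - energy(P_LOW, V_SMALL) + W_b

# Leg c: isothermal compression along P V = N k_B T1 = P_LOW * V_LARGE.
NkT1 = P_LOW * V_LARGE
W_c = NkT1 * math.log(V_SMALL / V_LARGE)  # negative: work is done on the gas
Q_c = 0.0 + W_c  # E depends only on T, so Delta E = 0 on the isotherm

W_total = W_a + W_b + W_c
Q_total = Q_a + Q_b + Q_c

print(f"Q_a = {Q_a:+.4f}, Q_b = {Q_b:+.4f}, Q_c = {Q_c:+.4f}  (units of P0 V0)")
print(f"net work by gas = {W_total:+.4f}, net heat absorbed = {Q_total:+.4f}")
print("refrigerator" if W_total < 0 else "engine")
```

With these assumed corners the net work done by the gas is $3P_0V_0 - 4P_0V_0\log 4 \approx -2.55\,P_0V_0$, and the net heat absorbed equals it, which is the energy balance part (e) asks you to confirm.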


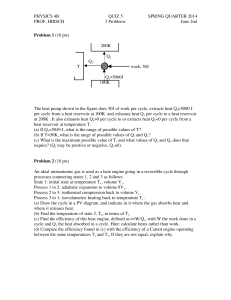

[2.2] Burning information. Consider a really frugal digital memory tape, with one atom used to store each bit (Fig. 4). The tape is a series of boxes, with each box containing one ideal gas atom. The box is split into two equal pieces by a removable central partition. If the atom is in the top half of the box, the tape reads one; if it is in the bottom half, the tape reads zero. The side walls are frictionless pistons that may be used to push the atom around. If we know the atom position in the $n$th box, we can move the other side wall in, remove the partition, and gradually retract the piston to its original position (Fig. 5), thus destroying our information about where the atom is, but extracting useful work.

Figure 4: Minimalist digital memory tape. The position of a single ideal gas atom denotes a bit. If it is in the top half of a partitioned box, the bit is one; otherwise it is zero. The side walls of the box are pistons, which can be used to set, reset, or extract energy from the stored bits. The numbers above the boxes are not part of the tape; they just denote what bit is stored in a given position.

(a) Burning the information. Assuming the gas expands at a constant temperature $T$, how much work $\int P\,dV$ is done by the atom as the piston retracts in Fig. 5? This is also the minimum work needed to set a bit whose state is currently unknown.

Figure 5: Expanding piston. Extracting energy from a known bit is a three-step process: compress the empty half of the box, remove the partition, and retract the piston and extract $P\,dV$ work out of the ideal gas atom. (One may then restore the partition to return to an equivalent, but more ignorant, state.) In the process, one loses one bit of information (which side of the partition is occupied).
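Part (a) treats the single atom as a one-particle ideal gas, so $P = k_BT/V$ along the isotherm. The sketch below is an illustration, not part of the original problem: it integrates $P\,dV$ numerically with a midpoint rule as the accessible volume grows from $V/2$ to $V$ and compares the result with the closed form $k_BT\log 2$. The helper name `isothermal_work` and the 300 K temperature are hypothetical choices.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)


def isothermal_work(temperature, v_initial, v_final, steps=100_000):
    """Midpoint-rule estimate of the integral of P dV for one atom at fixed T.

    The ideal gas law with N = 1 gives P = k_B T / V.
    """
    dv = (v_final - v_initial) / steps
    total = 0.0
    for i in range(steps):
        v_mid = v_initial + (i + 0.5) * dv
        total += K_B * temperature / v_mid * dv
    return total


T = 300.0  # illustrative temperature in kelvin (an assumption)
V = 1.0    # full box volume, arbitrary units; only the ratio V / (V/2) matters
work_numeric = isothermal_work(T, V / 2, V)
work_exact = K_B * T * math.log(2)
print(f"numerical: {work_numeric:.6e} J   k_B T log 2: {work_exact:.6e} J")
```

Because the work depends only on the volume ratio, any box size gives the same answer, which is why one bit is worth $k_BT\log 2$ regardless of the tape's dimensions.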


(b) Rewriting a bit. Give a sequence of partition insertion, partition removal, and adiabatic side-wall motions that will reversibly convert a bit zero (atom on bottom) into a bit one (atom on top), with no net work done on the system. Thus the only irreversible act in using this memory tape occurs when one forgets what is written upon it (equivalent to removing and then reinserting the partition).

(c) Forgetting a bit. Suppose the atom location in the $n$th box is initially known. What is the change in entropy if the partition is removed and the available volume doubles? Give both the thermodynamic entropy (involving $k_B$) and the information entropy (involving $k_S = 1/\log 2$). This entropy is the cost of the missed opportunity of extracting work from the bit (as in part (a)).

[2.3] Reversible computation. A general-purpose computer can be built out of certain elementary logic gates. Many of these gates destroy information. Early workers in the field of computing thought that these logical operations must have a minimum energy cost. It was later recognized that they could be done with no energy cost by adding extra outputs to the gates. Naturally, keeping track of these extra outputs involves the use of extra memory storage, so the energy cost is traded for an increase in entropy. The resulting gates, however, are reversible.

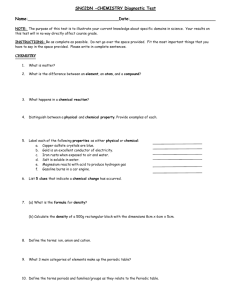

Figure 6: Exclusive-or gate. In logic, an exclusive or (XOR) corresponds to the colloquial English usage of the word: either A or B but not both. An XOR gate outputs a one (true) if the two input bits A and B are different, and outputs a zero (false) if they are the same.

(a) Irreversible logic gates. Consider the XOR gate shown in Fig. 6. How many bits of information are lost during the action of this gate? Presuming the four possible inputs are equally probable, what is the minimum work needed to perform this operation if the gate is run at temperature $T$?
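A short entropy bookkeeping for part (a), assuming the four inputs are equally likely as stated and pricing each erased bit at $k_BT\log 2$, the work found in problem 2.2(a). The helper name `shannon_bits` and the 300 K temperature are illustrative choices. Since the output is a deterministic function of the input, the information lost is the input entropy minus the output entropy.

```python
import math
from collections import Counter
from itertools import product


def shannon_bits(probabilities):
    """Shannon entropy in bits: -sum p log2 p over nonzero p."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


# The four equally likely inputs (A, B) and the XOR output of each.
inputs = list(product((0, 1), repeat=2))
outputs = Counter(a ^ b for a, b in inputs)

h_in = shannon_bits([1 / len(inputs)] * len(inputs))
h_out = shannon_bits([count / len(inputs) for count in outputs.values()])
bits_lost = h_in - h_out

# Each erased bit at temperature T costs at least k_B T log 2.
K_B = 1.380649e-23  # J/K
T = 300.0  # illustrative temperature (an assumption)
min_work = bits_lost * K_B * T * math.log(2)
print(f"bits lost = {bits_lost}, minimum work at {T} K = {min_work:.3e} J")
```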

(b) Reversible gate. Add an extra output to the XOR gate, which just copies the state of input A to the first output ($C = A$, $D = A \oplus B$). (This is the Controlled-Not gate, one of three that Feynman used to assemble a general-purpose computer.) Make a table giving the four possible outputs $(C, D)$ of the resulting gate for the four possible inputs $(A, B) = (00, 01, 10, 11)$. If we run the outputs $(C, D)$ of the gate into the inputs $(A, B)$ of another Controlled-Not gate, what net operation is performed?
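The truth table and the two-gate composition in part (b) can be checked by brute force over all four inputs. The function name `cnot` is illustrative, not taken from the original problem.

```python
from itertools import product


def cnot(a, b):
    """Controlled-Not: C copies A, and D = A XOR B."""
    return a, a ^ b


print(" A B | C D")
for a, b in product((0, 1), repeat=2):
    c, d = cnot(a, b)
    print(f" {a} {b} | {c} {d}")

# Feed the outputs (C, D) of one gate into the inputs (A, B) of a second gate.
composed = {(a, b): cnot(*cnot(a, b)) for a, b in product((0, 1), repeat=2)}
print("two gates in series act as the identity:",
      all(out == inp for inp, out in composed.items()))
```

Because the four outputs are all distinct, the map from $(A, B)$ to $(C, D)$ is one-to-one, so no input information is discarded.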

[2.4] Shannon entropy. Natural languages are highly redundant; the number of intelligible fifty-letter English sentences is many fewer than $26^{50}$, and intelligible speech makes up a tiny fraction of the sound signals that could be generated with frequencies up to 20 000 Hz. This immediately suggests a theory for signal compression. If you can recode the alphabet so that common letters and common sequences of letters are abbreviated, while infrequent combinations are spelled out in lengthy fashion, you can dramatically reduce the channel capacity needed to send the data. (This is lossless compression, like zip, gz, and gif.) An obscure language for long-distance communication has only three sounds: a hoot represented by A, a slap represented by B, and a click represented by C. In a typical message, hoots and slaps occur equally often ($p = 1/4$ each), but clicks are twice as common ($p = 1/2$). Assume the messages are otherwise random.

(a) What is the Shannon entropy in this language?

(b) Show that a communication channel transmitting bits (ones and zeros) can transmit no more than one unit of Shannon entropy per bit.

(c) Calculate the minimum number of bits per letter, on average, needed to transmit messages for the particular case of an ABC communication channel.

(d) Find a compression scheme (a rule that converts an ABC message to zeros and ones, and that can be inverted to give back the original message) that is optimal, in the sense that it saturates the bound you derived in part (b). (Hint: Look for a scheme for encoding the message that compresses one letter at a time. Not all letters need to compress to the same number of bits.)
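A minimal sketch for parts (a), (c), and (d), assuming a letter-by-letter prefix-free code. The particular codewords (C as `0`, A as `10`, B as `11`) are one illustrative choice among several with the same lengths; the names `CODE`, `encode`, and `decode` are hypothetical. The entropy is measured with $k_S = 1/\log 2$, i.e. in bits.

```python
import math
import random

# Letter probabilities from the problem statement.
PROBS = {"A": 0.25, "B": 0.25, "C": 0.5}

# One possible prefix-free code: the common click gets one bit, hoots and
# slaps get two. No codeword is a prefix of another, so decoding is unique.
CODE = {"C": "0", "A": "10", "B": "11"}
DECODE = {bits: letter for letter, bits in CODE.items()}


def shannon_bits_per_letter(probs):
    """Shannon entropy with k_S = 1/log 2, i.e. measured in bits."""
    return -sum(p * math.log2(p) for p in probs.values() if p > 0)


def encode(message):
    return "".join(CODE[letter] for letter in message)


def decode(bits):
    letters, current = [], ""
    for bit in bits:
        current += bit
        if current in DECODE:  # prefix-free, so the first match is correct
            letters.append(DECODE[current])
            current = ""
    if current:
        raise ValueError("trailing bits do not form a complete codeword")
    return "".join(letters)


entropy = shannon_bits_per_letter(PROBS)
mean_length = sum(PROBS[letter] * len(bits) for letter, bits in CODE.items())
print(f"entropy = {entropy} bits/letter, code length = {mean_length} bits/letter")

# Round-trip a random message drawn with the stated letter frequencies.
rng = random.Random(0)
message = "".join(rng.choices(list(PROBS), weights=list(PROBS.values()), k=20))
assert decode(encode(message)) == message
```

The mean codeword length equals the entropy, so this scheme meets the bound of part (b) exactly; that is possible here because every probability is a power of 1/2.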

[2.5] Uniqueness of entropy function. Consider a continuous function $S(p_1, \dots, p_N)$ with $\sum_j p_j = 1$, which satisfies the following three properties:

$$S\left(\tfrac{1}{N}, \dots, \tfrac{1}{N}\right) > S(p_1, \dots, p_N) \quad \text{unless } p_j = 1/N \text{ for all } j, \tag{1}$$

$$S(p_1, \dots, p_{N-1}, 0) = S(p_1, \dots, p_{N-1}), \tag{2}$$

and

$$\sum_l q_l\, S(A|B_l) = S(A, B) - S(B). \tag{3}$$

In the last case

$$\begin{aligned}
S(A) &= S(p_1, \dots, p_N), \\
S(B) &= S(q_1, \dots, q_M), \\
S(A, B) &= S(r_{11}, \dots, r_{NM}), \\
S(A|B_l) &= S(c_{1l}, \dots, c_{Nl}),
\end{aligned}$$

with $r_{kl} = q_l c_{kl}$. We also define the function $L(n) = S(1/n, \dots, 1/n)$.

(a) Suppose that $q_l = n_l/n$ for all $l$ (i.e., $B$ has rational probabilities, with $n$ the least common multiple of the denominators). Take the set $A$ to have $N$ elements with $N = \max_l n_l$, and choose the joint probabilities according to $r_{kl} = 1/n$ for $k = 1, \dots, n_l$ and $r_{kl} = 0$ for $k = n_l + 1, \dots, N$. Using property (3), show that

$$S(B) = L(n) - \sum_l q_l L(n_l).$$
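Before proving part (a), the identity can be checked numerically. The sketch below assumes the Shannon form $S = -\sum p\log p$ (with $K = 1$), which is what the exercise ultimately derives, so it is a consistency check of the construction rather than a proof. The distribution $q = (1/6, 1/3, 1/2)$ and the helper names are hypothetical; `math.lcm` with several arguments needs Python 3.9 or later.

```python
import math
from fractions import Fraction


def shannon(probs):
    """Shannon entropy -sum p log p (natural log, K = 1), skipping zeros."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def L(n):
    """L(n) = S(1/n, ..., 1/n), evaluated with the Shannon form."""
    return shannon([1 / n] * n)


# Hypothetical rational distribution for B, written as q_l = n_l / n.
q = [Fraction(1, 6), Fraction(1, 3), Fraction(1, 2)]
n = math.lcm(*(ql.denominator for ql in q))
n_l = [int(ql * n) for ql in q]
N = max(n_l)
M = len(q)

# Joint probabilities r_kl = 1/n for k < n_l (0-based k), zero otherwise.
r = [[1 / n if k < n_l[l] else 0.0 for l in range(M)] for k in range(N)]
S_AB = shannon([r[k][l] for k in range(N) for l in range(M)])
S_B = shannon([float(ql) for ql in q])

# Conditional distributions c_kl = r_kl / q_l, one entropy per value of l.
S_A_given_B = [shannon([r[k][l] / float(q[l]) for k in range(N)]) for l in range(M)]

lhs = sum(float(q[l]) * S_A_given_B[l] for l in range(M))
rhs_a = L(n) - sum(float(q[l]) * L(n_l[l]) for l in range(M))
print("property (3) holds:", math.isclose(lhs, S_AB - S_B))
print("S(B) = L(n) - sum_l q_l L(n_l):", math.isclose(S_B, rhs_a))
```

The construction works because each conditional distribution $c_{kl}$ is uniform over $n_l$ entries, so $S(A|B_l) = L(n_l)$, while the joint distribution is uniform over $n$ entries, so $S(A, B) = L(n)$.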

(b) If $L(n) = K\log n$ for some constant factor $K$, then $S(B)$ in part (a) reduces to the form given by the non-equilibrium or Shannon entropy. Thus, we have reduced the problem to establishing that $L(n)$ is given by $\log n$ up to a constant.
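The reduction in part (b) can be written out explicitly. Substituting $L(n) = K\log n$ into the result of part (a), and using $\sum_l q_l = 1$ to write $\log n = \sum_l q_l \log n$:

```latex
S(B) = K\log n - \sum_l q_l\, K\log n_l
     = -K\sum_l q_l \log\frac{n_l}{n}
     = -K\sum_l q_l \log q_l .
```

This covers rational $q_l$; the continuity of $S$ assumed at the start then extends it to arbitrary probabilities.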

(c) Show that L(n) is a monotone function of n using properties (1) and (2).

(d) Show that $L(n^m) = mL(n)$ by recursively applying property (3). (Hint: Take the sets $A$ and $B$ to have $n$ elements each, with each element occurring independently with probability $1/n$.)

(e) If $2^m < s^n < 2^{m+1}$, then use parts (c) and (d) to show that

$$\frac{m}{n} < \frac{L(s)}{L(2)} < \frac{m+1}{n}.$$

Using the same arguments, show that these inequalities also hold with $L(s)/L(2)$ replaced by $\log(s)/\log(2)$. Hence, show that $|L(s)/L(2) - \log(s)/\log(2)| < 1/n$ and thus $L(s) = K\log(s)$ for some constant $K$.
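The bracketing in part (e) can be illustrated numerically for $\log(s)/\log(2)$. This only illustrates the inequality for the logarithm, not for a general $L$. Integer arithmetic finds $m$ exactly from the bit length of $s^n$; the choice $s = 3$ and the helper name `bracket` are hypothetical.

```python
import math


def bracket(s, n):
    """Return the integer m with 2**m < s**n < 2**(m+1); s**n must not be a power of 2."""
    power = s ** n
    m = power.bit_length() - 1  # largest m with 2**m <= s**n
    if power == 2 ** m:
        raise ValueError("s**n is a power of 2, so the strict bracket does not exist")
    return m


s = 3
ratio = math.log(s) / math.log(2)
for n in (1, 5, 20, 100):
    m = bracket(s, n)
    assert m / n < ratio < (m + 1) / n
    print(f"n = {n:3d}: {m}/{n} < log(3)/log(2) = {ratio:.6f} < {m + 1}/{n}")
```

The bracket has width $1/n$, so as $n$ grows it pins both $L(s)/L(2)$ and $\log(s)/\log(2)$ into the same shrinking interval.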
