A method for 21 cm power spectrum estimation in the

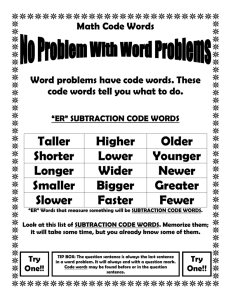
