Studies Addressing Reliability


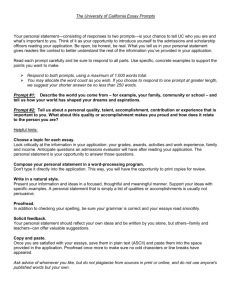

Reliability Handout March 8-9, 2012 CCCCO Assessment Validation Training

Studies Addressing Reliability

Materials needed

None

Persons Supplying Data

1. Students
2. Raters for performance assessments

Test-Retest Method

1. Collect initial time 1 placement test data from a sample of students (at least 50).
2. Wait at least two weeks, and then retest the same group of students with the same or a parallel form of the placement test.
3. Pair the time 1 and time 2 scores for each student.
4. Compute a correlation coefficient between the time 1 and 2 placement test scores.
5. Report the results. The threshold for acceptable test-retest reliability is .75.

Internal Consistency Method (Split Halves Approach)

1. Collect a single set of placement test data from a sample of students (at least 50).
2. Obtain summed scores for the odd numbered items for each student.
3. Obtain summed scores for the even numbered items for each student.
4. Compute a correlation coefficient between the scores based on the odd and even items.
5. This correlation represents an estimate of the internal consistency reliability for a test that contains half the number of items as the actual test. Thus, to estimate the reliability of the full-length test, the correlation coefficient computed in the previous step is adjusted using the following formula (Spearman-Brown). In the formula, the symbol $r_{\text{odd,even}}$ represents the odd/even score correlation computed in step 4 above.

   $$\text{Full Test Reliability} = \frac{2\, r_{\text{odd,even}}}{1 + r_{\text{odd,even}}}$$

   For example, if the correlation coefficient between the odd and even item scores was .60, the estimated full test reliability would be 1.2/1.6 or .75.
6. Many software programs compute correlation coefficients. For example, MS Excel includes the function “correl” which will determine this value.
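The test-retest and split-halves computations above can be sketched in Python as follows. This is a minimal illustration rather than part of the original handout: the function names and the one-list-of-item-scores-per-student layout are assumptions, and the Pearson correlation is written out by hand so that only the standard library is needed.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when scores do not vary")
    return cov / math.sqrt(var_x * var_y)


def spearman_brown(r_half):
    """Adjust an odd/even half-test correlation to full-test length."""
    return 2 * r_half / (1 + r_half)


def split_half_reliability(item_scores):
    """Split-halves estimate from per-student item scores (assumed layout).

    item_scores holds one list per student; items 1, 3, 5, ... sit at
    list positions 0, 2, 4, ... and items 2, 4, 6, ... at 1, 3, 5, ...
    """
    odd_totals = [sum(row[0::2]) for row in item_scores]
    even_totals = [sum(row[1::2]) for row in item_scores]
    return spearman_brown(pearson_r(odd_totals, even_totals))


# Worked example from the handout: an odd/even correlation of .60.
print(round(spearman_brown(0.60), 2))  # 0.75
```

For the test-retest method, calling `pearson_r` on the paired time 1 and time 2 scores gives the coefficient to compare against the .75 threshold.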
If a statistical software package is used to analyze the data and it includes a reliability estimation procedure, the index of reliability it computes is most likely Cronbach’s alpha coefficient. This coefficient is an acceptable alternative to the split-half method for estimating the internal consistency reliability of a test’s scores. The threshold for an acceptable internal consistency reliability coefficient is .80.

Rater Agreement Procedure

When performance assessments such as a direct writing assessment are used, rater scoring consistency needs to be documented: when two or more qualified readers rate the same student papers, do the readers provide the same or similar evaluations of those papers? The procedure is as follows:

1. Train readers in the rating process (remember that high-quality training is the key to successful rater agreement).
2. Working independently and adhering to the scoring guidelines, readers rate the papers. A random sample of at least 60 to 75 papers needs to be scored by two readers independently. Readers must not be aware of ratings assigned by other readers.
3. For papers read by two readers, calculate the percent of exact agreement between the readers. Also compute the percent of agreement when the readers are within one point of each other. The criterion standard is 90% agreement within 1 point.

The table below illustrates how the data should be laid out. As an alternative, the correlation between the ratings from the two raters may be computed. The criterion standard for this coefficient is .70 or higher. When either standard is not met, the college needs to spend more time training readers, improve the precision of the language defining score points on the rubric scale, and, when needed, provide for a third reader to adjudicate scoring discrepancies.
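As a rough illustration of the two agreement indices described above, the sketch below computes the percent of exact agreement and the percent of agreement within one point from paired ratings. The function name and the ten sample ratings are hypothetical and are not data from the handout.

```python
def rater_agreement(first_reader, second_reader):
    """Return (percent exact, percent within one point) for paired ratings."""
    if len(first_reader) != len(second_reader) or not first_reader:
        raise ValueError("need one rating from each reader for every paper")
    n = len(first_reader)
    diffs = [abs(a - b) for a, b in zip(first_reader, second_reader)]
    exact = sum(d == 0 for d in diffs)
    within_one = sum(d <= 1 for d in diffs)  # exact matches count here too
    return 100 * exact / n, 100 * within_one / n


# Hypothetical ratings for ten papers on a six-point rubric.
reader_1 = [1, 2, 3, 4, 5, 6, 3, 4, 2, 5]
reader_2 = [1, 3, 3, 4, 4, 6, 5, 4, 2, 5]

exact_pct, within_one_pct = rater_agreement(reader_1, reader_2)
meets_standard = within_one_pct >= 90  # criterion: 90% within 1 point
print(exact_pct, within_one_pct, meets_standard)
```

The within-one-point figure counts identical scores as well as adjacent ones, which matches how the handout adds the two counts before dividing by the number of papers.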
Illustration for 100 papers each scored by two independent readers using a six-point scoring rubric

Rows are first reader scores; columns are second reader scores.

| First reader score | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| 1 | 7 | 3 | 0 | 0 | 0 | 0 |
| 2 | 2 | 10 | 2 | 2 | 1 | 0 |
| 3 | 1 | 3 | 12 | 2 | 1 | 0 |
| 4 | 0 | 2 | 2 | 13 | 4 | 1 |
| 5 | 0 | 1 | 0 | 1 | 12 | 3 |
| 6 | 0 | 0 | 1 | 1 | 3 | 11 |

Number of papers receiving identical scores = 65 (7+10+12+…)

Number of papers receiving scores within 1 point of agreement = 25 (3+2+2+3+…)

Thus, (65+25)/100 = 90 percent rater consistency.

Common Deficiencies in Reliability Studies Submitted by Local Colleges and Preliminary Report Comment Examples

Common Errors or Deficiencies in Evidence Submitted

1. A sufficient sample size is not used. A sample size of 50 is required.
2. The values reported do not meet minimal criteria stated in the Standards. The minimum criterion for a test-retest index value is .75; for an internal consistency index, the minimum value is .80; for a correlation coefficient between raters’ scores in a writing assessment, the minimum value is .70; and for the percent agreement index (within one score value) between raters’ scores in a writing assessment, the minimum value is 90 percent.
3. The college needlessly conducts their own reliability study when they could have used the information reported in the test’s Technical Manual.
4. At the time of renewal for a writing assessment, the rubric, scoring procedures or the prompts may have changed, but the college does not conduct a new reliability study.
5. When more than one prompt is being used in a single occasion of a writing assessment, no evidence on the equivalency of placement across prompts is provided.

Comment Example 1: DRP

The reliability coefficient reported by the test publishers in the test’s technical manual may be referenced to satisfy the requirement for this area of the Standards. The criterion value for an internal consistency index is .80 and for a test-retest index, .75.
Comment Example 2: Computer Adaptive Placement Test

The reliability coefficients reported are not sufficiently high to satisfy this standard. It is also unclear what types of items were on the four configured forms of the test and what the exact design for data collection was. Given the low coefficients and the placement decision model used, it is suggested that the college consider implementing a test-retest decision (classification) consistency study as the reliability study. It is the consistency of the placement given the decision rules that is the important reliability evidence.

Comment Example 3: English Writing Sample

The study and analyses referenced call for two or more raters to rate the same set of student response papers (a minimum of 50 papers is needed), so that each paper has at least two scores. It is these pairs of scores for the sample of papers that would be correlated if that were the reliability index to be reported. The Standards identify a minimum value of .70 if this is the design used and index reported. The other index that can be computed and reported for the same set of data (scores for two raters on the sample of papers) is the extent to which two raters agree within 1 point of each other across the sample of papers (percent agreement index). The Standards identify a minimum value of 90% agreement if this is the design used and index reported.

Comment Example 4: English Writing Sample

If prompts or scoring procedures have changed since the last review, then new data on inter-scorer and inter-prompt reliability need to be provided. Please confirm whether the prompts used and scoring procedures have changed over time or remain the same as they were during the initial approval review.

Comment Example 5: Writing Sample

The data provided are for only 23 ratings using two raters.
At least 50 ratings should be in the sample set of data, and if several raters are used in the rating process, data on more than two raters should be provided.

Comment Example 6: English Placement Writing Sample (ENGL)

There is no indication whether the prompts are old or new. For the ENGL, it is implied that the prompts come from a "topics" pool, with no indication whether the topics pool has remained constant and unchanged from past years. The college needs to address and clarify the prompt issue. If prompts are new, then their equivalency needs to be addressed.

Comment Example 7: ESL Writing Sample

It is unclear whether multiple prompts are used during any one assessment time, i.e., whether prompts are assigned at random or students have a choice. If multiple prompts are used during any one testing period, then evidence showing prompt equivalency is required.

Reliability Reporting Examples

The minimum split-halves correlation needed to meet the reliability estimate criterion of .80 for a test’s total score is .67.

Inter-prompt Equivalency Designs

Preferred Design (Double Testing, Counterbalanced): Have students respond to two prompts in a randomized, counterbalanced order (approximately half get Prompt A first and Prompt B second; the others get the reverse order, Prompt B first and Prompt A second). This design would be used for all pairings of prompts available for use as part of a college’s writing assessment. If six prompts were available for use, this would result in 15 separate pairings to be studied. The preferred way to summarize the data collected from this design would be to report placement agreement rates for the paired prompts. Also, during the assessment period when the study is conducted, the highest score from the two prompts should be used for placement.

Sample 1 – See the next two pages.
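To make the preferred design concrete, the sketch below enumerates every pairing of prompts and computes a placement agreement rate for one pair. It is only an illustration under stated assumptions: the function names, prompt labels, and course labels are hypothetical, and the agreement rate is taken to be the percent of students placed into the same course by both prompts.

```python
from itertools import combinations


def prompt_pairings(prompts):
    """Every unordered pair of prompts that the design would study."""
    return list(combinations(prompts, 2))


def placement_agreement_rate(placements_a, placements_b):
    """Percent of students placed into the same course by both prompts."""
    if len(placements_a) != len(placements_b) or not placements_a:
        raise ValueError("need both placements for every student")
    same = sum(a == b for a, b in zip(placements_a, placements_b))
    return 100 * same / len(placements_a)


# Six available prompts give 15 pairings, as stated in the handout.
pairs = prompt_pairings(["A", "B", "C", "D", "E", "F"])
print(len(pairs))  # 15

# Hypothetical placements for four students who wrote on both prompts.
from_prompt_a = ["Course X", "Course Y", "Course Y", "Course Z"]
from_prompt_b = ["Course X", "Course Y", "Course Z", "Course Z"]
print(placement_agreement_rate(from_prompt_a, from_prompt_b))  # 75.0
```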
Optional Design (Single Testing, Random Assignment): Prompts are assigned to students at random during the data collection study. The primary concern is the effect of random assignment on the individual student’s score and placement if any one prompt results in a different distribution of placement into courses. If this design were to be used, the college would be required to submit placement rate data (frequencies across the rubric score values) for the different prompts. Examining only the equivalency of the mean ratings for the independent groups of test takers is not sufficient, as one or more of the prompts may result in a restriction of range for the score values (no 1’s or 6’s, therefore no placement of students into those courses) or result in a different distribution across score values, thus resulting in differential placement by prompt. If a prompt is found to produce a non-equivalent distribution of placement scores, adjustments should be made for students receiving that prompt so that they are not advantaged or disadvantaged in the placement recommendation during the assessment period when the study is conducted.

Sample 2

The equivalency of essay prompts was demonstrated in the initial validation study with the distributions of essay scores for each prompt. The graph below shows these distributions. The considerable overlap of the distributions shows that the set of prompts results in approximately equivalent essays.

[Figure: distributions of essay scores for Prompts 1 through 12. Horizontal axis: essay score, 0 to 6. Vertical axis: 0% to 60%.]
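The placement rate data required for the optional design (frequencies across the rubric score values for each prompt) could be tabulated along the following lines. This is a sketch under assumptions: the function names, the default six-point score range, and the sample scores are hypothetical, and judging whether two distributions are equivalent is left to the reviewer.

```python
from collections import Counter


def score_distribution(scores, score_values=range(1, 7)):
    """Percent of papers at each rubric score value for one prompt."""
    if not scores:
        raise ValueError("no scores supplied")
    counts = Counter(scores)  # missing score values count as 0
    return {v: 100 * counts[v] / len(scores) for v in score_values}


def unused_score_values(distribution):
    """Score values no paper received (a possible restriction of range)."""
    return [v for v, pct in distribution.items() if pct == 0]


# Hypothetical scores from two randomly assigned prompts.
scores_by_prompt = {
    "Prompt 1": [1, 2, 3, 3, 4, 4, 5, 6],
    "Prompt 2": [2, 3, 3, 3, 4, 4, 4, 5],
}

for prompt, scores in scores_by_prompt.items():
    dist = score_distribution(scores)
    print(prompt, dist, "unused:", unused_score_values(dist))
```

In this made-up data, Prompt 2 produces no 1's or 6's, which is the kind of restriction of range that a comparison of mean ratings alone would miss.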