Infants’ Understanding of the Link Between Visual Perception and

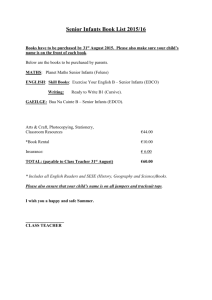

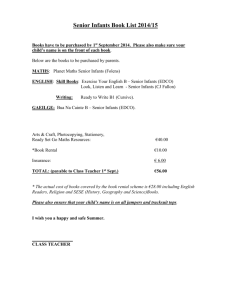
