Psychological Science The New Statistics: Why and How

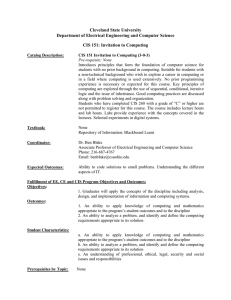

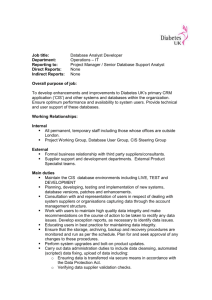
