Learning Hidden Markov Models using Probabilistic Matrix Factorization

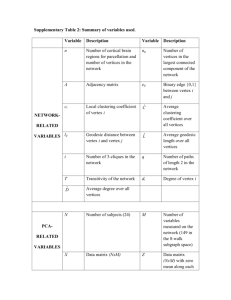

April 30, 2011
Ashutosh Tewari, Decision Support and Machine Intelligence
M. Giering, M. Shashanka (collaborators)

CONTENT
1. Hidden Markov Models
2. Parameter Estimation (Baum-Welch)
3. Probabilistic Matrix Factorization
4. HMM parameter estimation using PMF
5. Experiments

[Figure: Hidden Markov process. Hidden states S1, S2, S3 with initial probabilities π1, π2, π3 and transition probabilities a12, a13, a21, a23, a31, a32; state Si emits the symbols V1, V2, V3 with emission probabilities bi(1), bi(2), bi(3). Observed sequence: V3 V2 V2 V1 V2 V3 V2 V1 V1]

HIDDEN MARKOV MODELS
A hidden Markov model is a generative model: the observed symbols are emitted by hidden states, so every observed sequence is produced by an unobserved hidden sequence.
Model parameters: transition probabilities, emission probabilities, and the initial state distribution.
Applications:
1. Speech recognition
2. Character recognition
3. Intrusion detection
4. Bioinformatics
5. Remaining useful life prediction
6. Source separation

HIDDEN MARKOV MODELS: PROBLEM CLASSES
Class 1: Given the model parameters, compute the probability of a symbol sequence. Solved by the forward/backward recursive algorithm.
Class 2: Given the model parameters and an observed symbol sequence, determine the most likely hidden state sequence. Solved by the Viterbi algorithm.
Class 3: Given the observed symbol sequence, compute the model parameters. Solved by the Baum-Welch EM algorithm. We address this problem!!

BAUM-WELCH ALGORITHM: EXPECTATION MAXIMIZATION
The EM algorithm gives the maximum-likelihood (ML) estimate of the parameters when the generative model has hidden variables**.
Initialization.
Iterations (till convergence):
E Step: Estimate the distribution of the hidden variables, given the model parameters and the observed data.
M Step: Re-estimate the model parameters, given the distribution computed in the E step and the observed data.
Termination.
EM guarantees only a local maximum of the observed-data likelihood with respect to the model parameters.
Complexity: O(Iter_EM N^2 T) for N hidden states and sequence length T!!
** R.M. Neal, G.E. Hinton, A View of the EM Algorithm that Justifies Incremental, Sparse, and Other Variants.
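The first two problem classes have textbook dynamic-programming solutions. As a minimal sketch in Python with NumPy, the code below computes log P(O|λ) with a rescaled forward recursion (Class 1) and the most likely state path with the Viterbi algorithm (Class 2). The 3-state, 3-symbol parameter values are hypothetical, made up for illustration; the observed sequence is the one in the hidden Markov process figure, with V1, V2, V3 mapped to indices 0, 1, 2.

```python
import numpy as np

# Hypothetical 3-state, 3-symbol HMM (values made up for illustration).
pi = np.array([0.5, 0.3, 0.2])                  # initial state distribution
A = np.array([[0.1, 0.8, 0.1],                  # A[i, j] = P(S_t+1 = j | S_t = i)
              [0.2, 0.1, 0.7],
              [0.6, 0.3, 0.1]])
B = np.array([[0.7, 0.2, 0.1],                  # B[i, v] = P(O_t = v | S_t = i)
              [0.1, 0.6, 0.3],
              [0.2, 0.2, 0.6]])
obs = [2, 1, 1, 0, 1, 2, 1, 0, 0]               # V3 V2 V2 V1 V2 V3 V2 V1 V1


def forward_log_likelihood(pi, A, B, obs):
    """Class 1: log P(O | lambda) via the forward recursion, rescaled at each step."""
    alpha = pi * B[:, obs[0]]
    log_lik = 0.0
    for t in range(1, len(obs)):
        c = alpha.sum()                          # scaling factor prevents underflow
        log_lik += np.log(c)
        alpha = ((alpha / c) @ A) * B[:, obs[t]]
    return log_lik + np.log(alpha.sum())


def viterbi(pi, A, B, obs):
    """Class 2: most likely hidden state path and its log joint probability."""
    log_A, log_B = np.log(A), np.log(B)
    delta = np.log(pi) + log_B[:, obs[0]]
    backpointers = []
    for t in range(1, len(obs)):
        scores = delta[:, None] + log_A          # scores[i, j]: best path ending in i, then i -> j
        backpointers.append(scores.argmax(axis=0))
        delta = scores.max(axis=0) + log_B[:, obs[t]]
    path = [int(delta.argmax())]
    for bp in reversed(backpointers):            # trace the best path backwards
        path.append(int(bp[path[-1]]))
    return path[::-1], float(delta.max())


print(forward_log_likelihood(pi, A, B, obs))
print(viterbi(pi, A, B, obs))
```

Both recursions cost O(N^2 T). Because the Viterbi score is the probability of a single path, it can never exceed log P(O|λ), which sums over all paths.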
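The Baum-Welch iteration described above can be sketched in the same style. This is one common formulation, not necessarily the one benchmarked in the deck: a scaled forward-backward pass for the E step and normalized expected counts for the M step. The training sequence is sampled from a hypothetical 3-state HMM, and the helper names `sample_hmm`, `forward_backward`, and `baum_welch_step` are illustrative.

```python
import numpy as np


def sample_hmm(pi, A, B, T, rng):
    """Draw T symbols from a discrete HMM by inverse-CDF sampling."""
    cum_pi, cum_A, cum_B = pi.cumsum(), A.cumsum(axis=1), B.cumsum(axis=1)
    cum_pi[-1] = 1.0                              # guard against round-off in the sums
    cum_A[:, -1] = 1.0
    cum_B[:, -1] = 1.0
    u = rng.random((T, 2))
    obs = np.empty(T, dtype=int)
    s = int(np.searchsorted(cum_pi, u[0, 0], side="right"))
    for t in range(T):
        if t > 0:
            s = int(np.searchsorted(cum_A[s], u[t, 0], side="right"))
        obs[t] = np.searchsorted(cum_B[s], u[t, 1], side="right")
    return obs


def forward_backward(pi, A, B, obs):
    """Scaled forward-backward pass; returns alpha_hat, beta_hat, scaling factors c."""
    T, N = len(obs), len(pi)
    alpha, beta, c = np.zeros((T, N)), np.ones((T, N)), np.zeros(T)
    alpha[0] = pi * B[:, obs[0]]
    c[0] = alpha[0].sum()
    alpha[0] /= c[0]
    for t in range(1, T):
        alpha[t] = (alpha[t - 1] @ A) * B[:, obs[t]]
        c[t] = alpha[t].sum()
        alpha[t] /= c[t]
    for t in range(T - 2, -1, -1):
        beta[t] = A @ (B[:, obs[t + 1]] * beta[t + 1]) / c[t + 1]
    return alpha, beta, c


def baum_welch_step(pi, A, B, obs):
    """One EM iteration; returns updated (pi, A, B) and log P(O | old parameters)."""
    obs = np.asarray(obs)
    alpha, beta, c = forward_backward(pi, A, B, obs)
    gamma = alpha * beta                          # E step: P(S_t = i | O)
    # Expected i -> j transition counts summed over t: the O(N^2 T) part.
    xi = (alpha[:-1].T @ (B[:, obs[1:]].T * beta[1:] / c[1:, None])) * A
    new_A = xi / xi.sum(axis=1, keepdims=True)    # M step
    new_B = np.stack([gamma[obs == v].sum(axis=0) for v in range(B.shape[1])], axis=1)
    new_B /= new_B.sum(axis=1, keepdims=True)
    return gamma[0], new_A, new_B, float(np.log(c).sum())


rng = np.random.default_rng(0)
true_pi = np.array([0.5, 0.3, 0.2])               # hypothetical ground-truth HMM
true_A = np.array([[0.1, 0.8, 0.1], [0.2, 0.1, 0.7], [0.6, 0.3, 0.1]])
true_B = np.array([[0.7, 0.2, 0.1], [0.1, 0.6, 0.3], [0.2, 0.2, 0.6]])
obs = sample_hmm(true_pi, true_A, true_B, 1000, rng)

# Random initialization, then a fixed Iter_EM = 30 iterations.
pi, A, B = rng.dirichlet(np.ones(3)), rng.dirichlet(np.ones(3), size=3), rng.dirichlet(np.ones(3), size=3)
log_liks = []
for _ in range(30):
    pi, A, B, ll = baum_welch_step(pi, A, B, obs)
    log_liks.append(ll)
print(log_liks[0], log_liks[-1])                  # EM never decreases the likelihood
```

The `xi` line visits every (i, j) state pair at every time step, which is where the O(Iter_EM N^2 T) cost on the slide comes from.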
PROBABILISTIC MATRIX FACTORIZATION
D ≈ W H, where the entries of all matrices are non-negative!
D = matrix whose columns are the data vectors.
W = matrix whose columns are the basis vectors.
H = matrix whose columns are the mixing weights.
An observed data vector can therefore be represented as a linear combination of basis vectors.
Which matrix is factorized?
If D is a doubly stochastic matrix, it can be factorized symmetrically (PLCA).
If D is a left stochastic matrix, it can be factorized asymmetrically (PLSA).
Generative model of PLSA (k = hidden topic): a document d selects a topic k with probability P(k|d), and topic k emits a word w with probability P(w|k).
M.V.S. Shashanka et al., Probabilistic Latent Variable Models as Non-Negative Factorizations, Computational Intelligence and Neuroscience, May 2008.

APPLICATIONS OF PMF
Topic modeling. Goal: identifying hidden topics in a document corpus!
Popular models:
1. Probabilistic Latent Semantic Analysis (Hofmann)
2. Latent Dirichlet Allocation (Blei et al.)
P(w|d) = Σ_k P(k|d) P(w|k)
Topic modeling of a corpus of 16,333 news articles with 23,075 unique words:

| Topic 1 | Topic 2 | Topic 3 | Topic 4 |
|---|---|---|---|
| NEW | MILLION | CHILDREN | SCHOOL |
| FILM | TAX | WOMEN | STUDENTS |
| SHOW | PROGRAM | PEOPLE | SCHOOLS |
| MUSIC | BUDGET | CHILD | EDUCATION |
| MOVIE | BILLION | YEARS | TEACHERS |
| PLAY | FEDERAL | FAMILIES | HIGH |
| MUSICAL | YEAR | WORK | PUBLIC |
| BEST | SPENDING | PARENTS | TEACHER |
| ACTOR | NEW | SAYS | BENNETT |
| FIRST | STATE | FAMILY | MANIGAT |
| YORK | PLAN | WELFARE | NAMPHY |
| OPERA | MONEY | MEN | STATE |
| THEATER | PROGRAMS | PERCENT | PRESIDENT |
| ACTRESS | GOVERNMENT | CARE | ELEMENTARY |
| LOVE | CONGRESS | LIFE | HAITI |

HMM ESTIMATION USING PMF: PARAMETER ESTIMATION
Which matrix to factorize? Convert the sequence into a count matrix of consecutive symbol pairs (O_t, O_{t+1}).
The normalized count matrix is doubly stochastic, so it can be factorized symmetrically.
How is a pair generated? A hidden state emits both symbols of the pair.
The HMM parameters are then estimated from the factors.

HMM ESTIMATION USING PMF: EXPECTATION MAXIMIZATION ALGORITHM
Initialize, iterate the E step and the M step until convergence, then terminate.
Computational complexity (M observed symbols, N hidden states, sequence length T):
1) Count matrix generation: O(T)
2) EM algorithm: O(Iter_EM M^2 N)
Overall: O(T + Iter_EM M^2 N) ~ O(T) for long sequences.
Baum-Welch complexity: O(Iter_EM N^2 T)!!
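For the asymmetric (PLSA) case, EM on a word-by-document count matrix n(w, d) reduces to multiplicative updates of W = P(w|k) and H = P(k|d). A minimal NumPy sketch follows; the function name `plsa` and the synthetic 2-topic, 6-word corpus are hypothetical.

```python
import numpy as np


def plsa(counts, K, iters=100, seed=0):
    """Asymmetric PMF (PLSA) fitted by EM.

    counts[w, d] is the count of word w in document d; the model is
    P(w | d) = sum_k P(w | k) P(k | d), i.e. each column of counts ~ W @ H.
    """
    rng = np.random.default_rng(seed)
    V, D = counts.shape
    W = rng.dirichlet(np.ones(V), size=K).T       # (V, K): column k is P(w | k)
    H = rng.dirichlet(np.ones(K), size=D).T       # (K, D): column d is P(k | d)
    nz = counts > 0
    history = []
    for _ in range(iters):
        P = W @ H                                 # current P(w | d)
        history.append(float((counts[nz] * np.log(P[nz])).sum()))
        R = np.divide(counts, P, out=np.zeros(P.shape), where=nz)
        # E and M steps combined: sum_d n(w,d) P(k|w,d) and sum_w n(w,d) P(k|w,d).
        W_new, H_new = W * (R @ H.T), H * (W.T @ R)
        W = W_new / W_new.sum(axis=0, keepdims=True)
        H = H_new / H_new.sum(axis=0, keepdims=True)
    return W, H, history


rng = np.random.default_rng(1)
true_W = np.array([[0.4, 0.0], [0.3, 0.0], [0.3, 0.1],   # hypothetical P(w | k): 6 words, 2 topics
                   [0.0, 0.3], [0.0, 0.3], [0.0, 0.3]])
true_H = rng.dirichlet([0.5, 0.5], size=50).T              # P(k | d) for 50 documents
counts = np.stack([rng.multinomial(200, true_W @ true_H[:, d]) for d in range(50)], axis=1)

W, H, history = plsa(counts, K=2)
print(np.round(W, 2))    # compare with true_W; the topic order may be swapped
```

Updating W and H from the same ratio matrix R keeps this an exact EM step, so `history` is non-decreasing.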
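The count-matrix approach can be sketched as follows, under the assumption (suggested by "How is a pair generated?") that a single hidden state k emits both symbols of a consecutive pair, so that P(O_t = i, O_{t+1} = j) = Σ_k P(k) P(i|k) P(j|k), i.e., the symmetric (PLCA) factorization. The EM updates, the helper names `pair_count_matrix` and `symmetric_pmf`, and the toy HMM are our assumptions, not the deck's code. Building C is one O(T) pass; each EM iteration then costs O(M^2 N), independent of T.

```python
import numpy as np


def sample_hmm(pi, A, B, T, rng):
    """Draw T symbols from a discrete HMM by inverse-CDF sampling."""
    cum_pi, cum_A, cum_B = pi.cumsum(), A.cumsum(axis=1), B.cumsum(axis=1)
    cum_pi[-1] = 1.0                              # guard against round-off in the sums
    cum_A[:, -1] = 1.0
    cum_B[:, -1] = 1.0
    u = rng.random((T, 2))
    obs = np.empty(T, dtype=int)
    s = int(np.searchsorted(cum_pi, u[0, 0], side="right"))
    for t in range(T):
        if t > 0:
            s = int(np.searchsorted(cum_A[s], u[t, 0], side="right"))
        obs[t] = np.searchsorted(cum_B[s], u[t, 1], side="right")
    return obs


def pair_count_matrix(obs, M):
    """C[i, j] = number of times symbol i is immediately followed by symbol j; O(T)."""
    C = np.zeros((M, M))
    np.add.at(C, (obs[:-1], obs[1:]), 1)
    return C


def symmetric_pmf(C, N, iters=200, seed=0):
    """EM for C[i, j] ~ sum_k P(k) P(i | k) P(j | k); each iteration costs O(M^2 N)."""
    rng = np.random.default_rng(seed)
    M = C.shape[0]
    p = np.full(N, 1.0 / N)                       # P(k)
    B = rng.dirichlet(np.ones(M), size=N)         # B[k, v] = P(v | k)
    nz = C > 0
    history = []
    for _ in range(iters):
        Q = (B.T * p) @ B                         # model P(i, j), an M x M matrix
        history.append(float((C[nz] * np.log(Q[nz])).sum()))
        R = np.divide(C, Q, out=np.zeros(C.shape), where=nz)
        # Expected count of state k emitting v, from both positions of the pair.
        S = p[:, None] * B * (B @ R.T + B @ R)
        p = S.sum(axis=1) / S.sum()
        B = S / S.sum(axis=1, keepdims=True)
    return p, B, history


rng = np.random.default_rng(2)
true_pi = np.array([0.5, 0.3, 0.2])               # hypothetical ground-truth HMM
true_A = np.array([[0.1, 0.8, 0.1], [0.2, 0.1, 0.7], [0.6, 0.3, 0.1]])
true_B = np.array([[0.7, 0.2, 0.1], [0.1, 0.6, 0.3], [0.2, 0.2, 0.6]])
obs = sample_hmm(true_pi, true_A, true_B, 10_000, rng)

C = pair_count_matrix(obs, M=3)                   # one pass over the sequence
p, B_hat, history = symmetric_pmf(C, N=3)         # cost does not depend on T
print(np.round(B_hat, 2))
```

Because this pair model has no transition probabilities, `B_hat` is not expected to reproduce `true_B` exactly; that gap is what the improved generative model addresses.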
HMM ESTIMATION USING PMF: IMPROVED GENERATIVE MODEL
The above generative model does not include the transition probabilities explicitly. The generative model is improved by including the transition probabilities**.
** M. Shashanka, A Fast Algorithm for Discrete HMM Parameter using Observed Transitions, ICASSP, 2011.

EXPERIMENT
State transition matrix:

|    | S1 | S2  | S3  |
|----|----|-----|-----|
| S1 | 0  | 0.9 | 0.1 |
| S2 | 0  | 0   | 1   |
| S3 | 1  | 0   | 0   |

[Figure panels: emission probabilities; observed sequence after rounding; estimated emission probabilities; count matrix (color map); factorization of the count matrix using the PMF]

COMPARISON WITH BAUM-WELCH ALGORITHM
Accuracy and time complexity, sequence length = 10^4.
[Figure: estimated emission probabilities]

| Method | Time taken (s) | Log-likelihood |
|--------|----------------|----------------|
| PMF    | 0.03           | -29910         |
| BW     | 28.41          | -24397         |

Log-likelihood is log P(O|λ) of the observed sequence under the estimated model.
The PMF was faster by almost 3 orders of magnitude (0.03 s vs. 28.41 s, a factor of about 950)!!! BW, however, reached the higher log-likelihood.
B. Lakshminarayanan and R. Raich, "Nonnegative Matrix Factorization for Parameter Estimation in Hidden Markov Models," in Workshop on Machine Learning for Signal Processing, 2010.

EFFECT OF SEQUENCE LENGTH
The empirically estimated joint distribution is poor at short sequence lengths, resulting in a bad model!!
[Figure: Hellinger distance between the estimated and true emission probabilities]

APPROXIMATING P(O_t, O_{t+1})
Different options:
1. Soft discretization
2. Non-parametric estimation
3. Parametric mixture model
We used Gaussian Mixture Copula Models (GMCM)**, an extension of GMMs that handles non-Gaussian component densities.
** A. Tewari, A. Raghunathan, Gaussian Mixture Copula Models, In Preparation, 2011.
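One way to fit the improved pair model by EM is sketched below, assuming P(O_t = i, O_{t+1} = j) = Σ_{k,l} P(S_t = k, S_{t+1} = l) b_k(i) b_l(j), with the transition matrix recovered by row-normalizing the estimated state-pair joint. These update rules are our own derivation and may differ from the cited ICASSP 2011 algorithm. The transition matrix is the one from the EXPERIMENT slide; the four-symbol discrete emission matrix is hypothetical, since the experiment's rounded observations are not available. EM recovers hidden states only up to relabelling, so the Hellinger distance is taken under the best permutation of states. All function names are illustrative.

```python
import itertools

import numpy as np


def sample_hmm(pi, A, B, T, rng):
    """Draw T symbols from a discrete HMM by inverse-CDF sampling."""
    cum_pi, cum_A, cum_B = pi.cumsum(), A.cumsum(axis=1), B.cumsum(axis=1)
    cum_pi[-1] = 1.0                              # guard against round-off in the sums
    cum_A[:, -1] = 1.0
    cum_B[:, -1] = 1.0
    u = rng.random((T, 2))
    obs = np.empty(T, dtype=int)
    s = int(np.searchsorted(cum_pi, u[0, 0], side="right"))
    for t in range(T):
        if t > 0:
            s = int(np.searchsorted(cum_A[s], u[t, 0], side="right"))
        obs[t] = np.searchsorted(cum_B[s], u[t, 1], side="right")
    return obs


def pair_count_matrix(obs, M):
    """C[i, j] = number of times symbol i is immediately followed by symbol j."""
    C = np.zeros((M, M))
    np.add.at(C, (obs[:-1], obs[1:]), 1)
    return C


def transition_pmf(C, N, iters=300, seed=0):
    """EM for C[i, j] ~ sum_{k,l} J[k, l] B[k, i] B[l, j], J[k, l] = P(S_t = k, S_t+1 = l)."""
    rng = np.random.default_rng(seed)
    M = C.shape[0]
    J = rng.dirichlet(np.ones(N * N)).reshape(N, N)
    B = rng.dirichlet(np.ones(M), size=N)
    nz = C > 0
    history = []
    for _ in range(iters):
        Q = B.T @ J @ B                           # model P(O_t = i, O_t+1 = j)
        history.append(float((C[nz] * np.log(Q[nz])).sum()))
        R = np.divide(C, Q, out=np.zeros(C.shape), where=nz)
        J_new = J * (B @ R @ B.T)                 # expected counts of state pairs (k, l)
        S = B * (J @ B @ R.T + J.T @ B @ R)       # expected emissions, first + second position
        J, B = J_new / J_new.sum(), S / S.sum(axis=1, keepdims=True)
    pi = J.sum(axis=1)                            # P(S_t = k)
    return pi, J / pi[:, None], B, history        # A[k, l] = J[k, l] / P(S_t = k)


def hellinger(p, q):
    """Hellinger distance between discrete distributions: 0 identical, 1 disjoint."""
    return float(np.sqrt(0.5 * np.sum((np.sqrt(p) - np.sqrt(q)) ** 2)))


def matched_hellinger(B_true, B_est):
    """Mean per-state Hellinger distance under the best relabelling of hidden states."""
    N = len(B_true)
    return min(np.mean([hellinger(B_true[k], B_est[perm[k]]) for k in range(N)])
               for perm in itertools.permutations(range(N)))


A_exp = np.array([[0.0, 0.9, 0.1],                # transition matrix from the EXPERIMENT slide
                  [0.0, 0.0, 1.0],
                  [1.0, 0.0, 0.0]])
B_true = np.array([[0.8, 0.1, 0.1, 0.0],          # hypothetical emissions over 4 symbols
                   [0.1, 0.8, 0.0, 0.1],
                   [0.0, 0.1, 0.1, 0.8]])
rng = np.random.default_rng(4)
obs = sample_hmm(np.array([1.0, 0.0, 0.0]), A_exp, B_true, 10_000, rng)

pi_hat, A_hat, B_hat, history = transition_pmf(pair_count_matrix(obs, M=4), N=3)
print(np.round(A_hat, 2))
print(matched_hellinger(B_true, B_hat))
```

Like any EM fit, this can stop at a local optimum, so several random restarts (different `seed` values) are a reasonable precaution.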
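The sequence-length effect can be reproduced in a few lines: draw sequences of increasing length T from an HMM and compare the empirical pair distribution with the exact one, Σ_{k,l} π_k a_kl b_k(i) b_l(j), where π is the stationary distribution of the chain. This sketch reuses the EXPERIMENT slide's transition matrix with hypothetical emissions, and it measures the Hellinger distance on the joint pair distribution rather than on the emission probabilities plotted in the deck. Function names are illustrative.

```python
import numpy as np


def sample_hmm(pi, A, B, T, rng):
    """Draw T symbols from a discrete HMM by inverse-CDF sampling."""
    cum_pi, cum_A, cum_B = pi.cumsum(), A.cumsum(axis=1), B.cumsum(axis=1)
    cum_pi[-1] = 1.0                              # guard against round-off in the sums
    cum_A[:, -1] = 1.0
    cum_B[:, -1] = 1.0
    u = rng.random((T, 2))
    obs = np.empty(T, dtype=int)
    s = int(np.searchsorted(cum_pi, u[0, 0], side="right"))
    for t in range(T):
        if t > 0:
            s = int(np.searchsorted(cum_A[s], u[t, 0], side="right"))
        obs[t] = np.searchsorted(cum_B[s], u[t, 1], side="right")
    return obs


def empirical_pair_distribution(obs, M):
    """Normalized count matrix of consecutive symbol pairs (O_t, O_t+1)."""
    C = np.zeros((M, M))
    np.add.at(C, (obs[:-1], obs[1:]), 1)
    return C / C.sum()


def exact_pair_distribution(A, B):
    """P(O_t = i, O_t+1 = j) = sum_{k,l} pi_k a_kl b_k(i) b_l(j), with pi stationary."""
    evals, evecs = np.linalg.eig(A.T)
    pi = np.real(evecs[:, np.argmin(np.abs(evals - 1.0))])
    pi = pi / pi.sum()                            # left eigenvector for eigenvalue 1: pi A = pi
    return B.T @ (pi[:, None] * A) @ B


def hellinger(P, Q):
    """Hellinger distance between two discrete distributions (0 = identical)."""
    return float(np.sqrt(0.5 * np.sum((np.sqrt(P) - np.sqrt(Q)) ** 2)))


A_exp = np.array([[0.0, 0.9, 0.1],                # transition matrix from the EXPERIMENT slide
                  [0.0, 0.0, 1.0],
                  [1.0, 0.0, 0.0]])
B_true = np.array([[0.8, 0.1, 0.1, 0.0],          # hypothetical emissions over 4 symbols
                   [0.1, 0.8, 0.0, 0.1],
                   [0.0, 0.1, 0.1, 0.8]])
P_exact = exact_pair_distribution(A_exp, B_true)

rng = np.random.default_rng(3)
distances = {}
for T in (100, 1_000, 10_000, 100_000):
    obs = sample_hmm(np.array([1.0, 0.0, 0.0]), A_exp, B_true, T, rng)
    distances[T] = hellinger(empirical_pair_distribution(obs, 4), P_exact)
print(distances)
```

The distance shrinks as T grows (roughly like 1/sqrt(T)); with only a few hundred pairs, many cells of the count matrix are poorly estimated, which is the failure mode described on the slide.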