Large-Scale Text Mining
SIAM Conference on Data Mining, Text Mining 2010

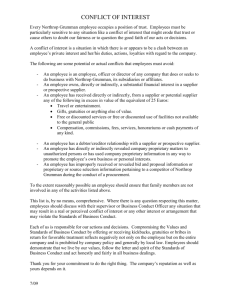

Alan Ratner
Northrop Grumman Information Systems
NORTHROP GRUMMAN PRIVATE / PROPRIETARY LEVEL I

Aim
• Identify topic and language/script/coding of real-world informal text at highest speed possible
• Informal text
  – Blogs, posts, tweets
  – Don’t necessarily follow conventional rules of spelling & grammar
  – Transliterated language usually ad hoc
  – In typical web documents far more bytes of HTML & JavaScript than content, making everything look like English. Can be parsed out but time-consuming.
  – Does not look like newswire (written by journalists, rich in named entities, summarized in first paragraph)
• High speed
  – Want to process documents as quickly as possible
  – Trillions of web pages
  – Gigabytes per second speed desired

What is Text?

6 Fundamental Questions in Mining Text
• Detection
  1. Does a document contain text in any language? (Or is it audio, video, …?)
  2. If so, does the text have a topic? (41% of tweets are “pointless babble”.)
• Clustering
  3. Which documents are in the same (but not necessarily known) language?
  4. Which documents are on the same (but not necessarily known) topic?
• Identification
  5. What is the language? (Is it a language the system has been trained to recognize?)
  6. What is the topic? (Is it a topic the system has been trained to recognize?)

Specific Goals
• Identify specific language(s) of documents and identify documents on specific topics
• Accuracy requirements
  – False negatives OK: users don’t know what has been missed
  – Precision needs to be high enough so we don’t annoy users with lots of false alarms
  – Extremely low false positive rate for topic id (<< 1 ppm)
• Speed requirement
  – Fast hardware or software; simple algorithms
• Ideally use same algorithm for both language and topic id
• Language-neutral
  – Work on all languages, including Asian languages that do not separate words with spaces

Text Analysis Algorithms
• Many algorithms have been used for topic id
  – Bayesian
  – Markov
  – Markov Orthogonal Sparse Bi-word
  – Hyperspace
  – Correlative
  – Entropy (Optimal Compressor) (longest string match)
  – Minimum Description Length
  – Term Frequency*Inverse Document Frequency
  – Morphological
  – Centroid-based
  – Logistic Regression (similar to SVM and single-layer NN)
• Most can be expressed using additive weights of detected tokens
• In the domain of interest (low false alarms on informal text) logistic regression worked best and is computationally efficient

Additive Weight Algorithms
• Training
  – Define & select tokens (e.g., words, words with spaces before & after, phrases, N-grams)
  – Assign weights (for LR, weights range from roughly -1 to +1)
• Testing
  – Detect tokens
  – Add weight to summer (S)
  – For each document, convert weight sum to likelihood score & compare to threshold
    • P(on-topic or on-language) = $1/(1 + e^{-S})$

Token Detection
• In hardware
  – Load tokens and ternary bit masks into CAM (Content Addressable Memory)
  – Stream data through CAM to automatically identify token(s)
• In software
  – Aho-Corasick algorithm creates a large state machine
    • e.g., 50K tokens with 130K states
  – “Terminal” states indicate detection of a token
  – For each byte of data
    • Next_state = TableLookup[Previous_state][New_byte]
    • If Terminal_state[Next_state] == TRUE then
      – Retrieve weight and add to summer
  – Execution time is relatively independent of number of tokens or average length of tokens

4-Token State Machine
[Figure: state machine that detects the four tokens “ the ”, “ then ”, “ they ” and “ and ” (sp = space). Legend: transition to orange, green or pink state if space, t or a; else transition to blue state.]

Solving Text Analysis Problems using Hadoop
• Hadoop is a framework for writing and running applications that process vast amounts of data in parallel on large clusters (up to thousands of nodes) of commodity hardware in a reliable, fault-tolerant manner.
• Hadoop is free/open-source software that emulates Google’s proprietary MapReduce
  – The master node takes the input, chops it up into smaller sub-problems, and distributes those to slave nodes where the “Map” tasks ingest and transform the input
  – The “Reduce” task(s) then aggregate or summarize the Map output to deliver the final output.

Hadoop Software
• Hadoop will run on anything from a laptop to a vast cluster of computers
  – Program stacks: Windows/Cygwin/Hadoop, Windows/VM/Linux/Hadoop, Linux/Hadoop, Linux/VM/Linux/Hadoop
• Software packages work with Hadoop to provide:
  – scalable distributed file systems and data warehouses (HBase, CloudBase)
  – data summarization, ad hoc querying, scripting (Pig, Hive)
  – massive matrix math, graph computation, machine learning, social network analysis (Hama, Mahout, X-Rime, Pegasus)

Hadoop Data Flow with 1 Reduce
[Figure: on Slave 1, Input File 1 feeds Map, then Combine; on Slave 2, Input File 2 feeds Map, then Combine; …; the Combine outputs feed a single Reduce.]

Hadoop/MapReduce
• Hadoop automatically distributes blocks of data to slave nodes and then lines of text to Maps
• Main/Run code defines interfaces & loads globals into distributed cache
• Your Map code transforms one line of text & outputs KVPs
  – Your Map: Configure code defines Map globals
• Hadoop automatically sorts and groups outputs of 1 node’s Maps by key
• Your Combine code transforms sorted & grouped output of all Maps for one node & outputs KVPs
  – Your Combine: Configure code defines Combine globals
• Hadoop automatically sorts and groups outputs of all Combines by key
• Your Reduce code summarizes sorted and grouped output of the Combines & outputs KVPs
  – Your Reduce: Configure code defines Reduce globals
• One output file from each Reduce
KVP = Key Value Pair

Original Hardware-Based Text Analyzer
• High speed solution with expensive special hardware
• HW limitations (# & length of tokens, wildcarding in ternary CAM)
• Few people with FPGA/VHDL skills

New Improved Hadoop Text Analyzer
• High speed software solution on generic hardware
• Enabled use of a very sophisticated detection algorithm (Aho-Corasick Trie, as in “information reTRIEval”); unlimited token length; speed relatively independent of number of tokens
• Many people with Java skills

Cluster Configuration
[Figure: Master & Slave 1, Slave 2, Slave 3, …, Slave N]
• Each server may host more than one slave
• Each slave may run many Maps
• Master finds slaves using IPs in Host Table
• Cluster may have 1 or many Reduces
• Our servers each have 2 quad-core Nehalem Xeons, 24-48GB RAM, four 1TB drives
• Per rack: 328 cores, 1TB RAM, 164TB drives, 30 kW, 1700 pounds

Language Identification Results
• Performance varies
  – No standard data set or procedure for testing language identification
  – Worked very well overall except on documents with just a few words
  – Mutually intelligible languages such as Dutch/Afrikaans, Indonesian/Malay, or Norwegian/Swedish are harder to distinguish than dissimilar languages
  – Because relatively few tokens (most common words in informal language) are used for each language (7 for Hindi, 55 for English, 95 for Spanish), it is possible to construct difficult documents
  – Could not distinguish random words from real language
    • Language is defined as words and grammar

Topic Identification Results
Based on incremental content of newsgroup posts (quoted prior posts and metadata such as newsgroup, thread, and author removed).

The Bottom Line
• Text analysis readily performed on a cluster of commodity computers using Hadoop
• Comparison between software and hardware solutions
  – In hardware achieved 2.5 Gb/s real-time throughput (but most such links operate at a fraction of their capacity)
  – In software achieved 1.3 Gb/s off-line throughput on a cluster of 64 ancient servers (on new but unoptimized servers 1.6 Gb/s)
  – In a properly configured Hadoop cluster performance scales linearly
    • Elapsed time = 15-20 seconds + C/(number of servers)

Alan.Ratner@ngc.com
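
Illustrative sketch (not from the talk): the software token-detection loop and the additive-weight scoring from the Token Detection and Additive Weight Algorithms slides can be sketched roughly as below. This is a minimal Python illustration under stated assumptions, not the Java/Hadoop implementation the slides refer to. The four tokens are those of the 4-Token State Machine slide; the weights and the names `build_automaton`, `weight_sum`, `likelihood` and `TOKEN_WEIGHTS` are hypothetical.

```python
import math
from collections import deque


def build_automaton(token_weights):
    """Build Aho-Corasick tables from a {token_bytes: weight} mapping.

    Returns (goto, weight): goto[state][byte] is the next state, and
    weight[state] is the summed weight of every token ending at that state.
    """
    children = [{}]  # trie edges per state; state 0 is the root
    weight = [0.0]   # weight of tokens that end exactly at each state
    for token, w in token_weights.items():
        state = 0
        for b in token:
            if b not in children[state]:
                children[state][b] = len(children)
                children.append({})
                weight.append(0.0)
            state = children[state][b]
        weight[state] += w

    # Breadth-first pass: compute failure links and fill a dense table so
    # that scanning needs exactly one table lookup per input byte.
    goto = [[0] * 256 for _ in children]
    fail = [0] * len(children)
    queue = deque()
    for b, nxt in children[0].items():
        goto[0][b] = nxt
        queue.append(nxt)
    while queue:
        state = queue.popleft()
        # Tokens ending at the failure state are suffixes that also end here.
        weight[state] += weight[fail[state]]
        for b in range(256):
            nxt = children[state].get(b)
            if nxt is None:
                goto[state][b] = goto[fail[state]][b]
            else:
                goto[state][b] = nxt
                fail[nxt] = goto[fail[state]][b]
                queue.append(nxt)
    return goto, weight


def weight_sum(data, goto, weight):
    """Stream bytes through the state machine and accumulate the sum S."""
    state, s = 0, 0.0
    for byte in data:
        state = goto[state][byte]  # Next_state = TableLookup[Previous_state][New_byte]
        s += weight[state]         # 0.0 unless a token ends at this state
    return s


def likelihood(s):
    """Logistic function 1 / (1 + e^(-S)), arranged to avoid overflow."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


# Hypothetical weights for the four tokens shown in the state-machine figure.
TOKEN_WEIGHTS = {b" the ": 0.5, b" then ": 0.25, b" they ": 0.25, b" and ": 0.5}
goto, weight = build_automaton(TOKEN_WEIGHTS)
s = weight_sum(b" the cat and the dog ", goto, weight)
print(s, likelihood(s))
```

In the sample input, “ the ”, “ and ” and a second “ the ” are detected even though adjacent tokens share a space, so S is 1.5 with these assumed weights. The scan does one table lookup per byte regardless of how many tokens are loaded, which is the property the slides rely on for speed; a production table for 130K states would need a more compact layout than the dense lists used here.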