EDLSI with PSVD Updating April Kontostathis Ursinus College Erin Moulding, Raymond J. Spiteri


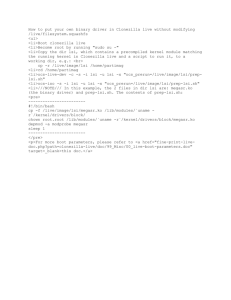

EDLSI with PSVD Updating

April Kontostathis, Ursinus College
Erin Moulding, Raymond J. Spiteri, University of Saskatchewan

Outline
• Overview of Latent Semantic Indexing (LSI)
• Updating methods for PSVD
• Essential Dimensions of LSI (EDLSI)
• Description of our experiments
• Results
• Conclusions

Vector Space Retrieval
• Documents represented by vectors
• Entries represent importance of term (word)
  ▫ Binary or raw frequencies
  ▫ May be weighted, locally or globally
  ▫ Normalized
  ▫ Common words (stop-words), infrequent words removed

Vector Space Retrieval
• Vectors combined into term-document matrix $A$
• Query $q$ also represented as vector, same rules
• Scores computed by: $w = q^T A$
  ▫ Entries of $w$ are relevance of document to $q$
• Same with multiple queries
• Problems:
  ▫ Synonymy: use wrong synonym, miss documents
  ▫ Polysemy: multiple meanings, get wrong one

Latent Semantic Indexing (LSI)
• Approximate term-document matrix by rank-$k$ partial singular value decomposition (PSVD):
  $A = U \Sigma V^T \approx A_k = U_k \Sigma_k V_k^T$
• $U_k$, $V_k$ orthonormal, $\Sigma$ diagonal matrix of singular values
• Closest approximation to $A$ in 2-norm
• Captures term relationship information
• $k$ chosen empirically, usually 100-300

Updating methods for PSVD
• Computation of PSVD is expensive
  ▫ May be reused for many queries if collection is stable
  ▫ When collection changes, must add new information
  ▫ Recomputing PSVD very expensive
• Methods of adding new information:
  ▫ Folding-in, updating, folding-up

Folding-in
• Project new documents $D$ into $k$-dimensional space, then add to bottom of $V_k$:
  $D_k = D^T U_k \Sigma_k^{-1}$
  $[A, D] \approx U_k \Sigma_k [V_k^T, D_k^T]$
• Fast and easy
• Generally corrupts orthogonality of $U_k$, $V_k$
• Not recommended if collection changes often

Updating
• Finds exact PSVD of $[A_k, D]$ to roundoff error
• Uses a smaller QR decomposition and PSVD calculations
• Slower than folding-in, but much more accurate
• Still faster than recomputing, and gives same result to roundoff error

Folding-up
• Hybrid method of folding-in and updating
• New documents are folded-in until a threshold is reached, then updated with all new documents
• Two threshold methods:
  ▫ Number of documents added reaches pre-selected percentage of current term-document matrix
  ▫ Error threshold based on the accumulated loss of orthogonality in $V_k$

Essential Dimensions of LSI (EDLSI)
• As $k$ approaches the rank $r$ of $A$, LSI approaches traditional vector-space retrieval
  ▫ But LSI outperforms vector-space for some collections, even for small $k$
• Hypothesis: LSI captures term relationship information in first few dimensions, then continues to add dimensions to capture data from vector-space methods.

Essential Dimensions of LSI (EDLSI)
• Idea: use both vector-space retrieval and LSI with very small $k$
• Score is a weighted sum of scores: $w = x (q^T A_k) + (1 - x)(q^T A)$
• Optimal $k$ with this method usually under 50
• Optimal $x$ small, usually 0.2 or less
• Outperforms LSI (both run-time and retrieval performance)

Our experiments
• Combining EDLSI with PSVD updating methods
  ▫ Each provides improvement over LSI alone, will combination provide further improvement?
• Collections:
  ▫ Small SMART datasets, used often for LSI
  ▫ Two subsets of TREC AQUAINT, of 15000 and 30000 documents (referred to as HARD-1 and HARD-2, respectively)

Our experiments
• Metrics for evaluation:
  ▫ Precision: number of retrieved relevant documents divided by total number of retrieved documents
  ▫ Recall: number of retrieved relevant documents divided by total relevant documents in dataset
  ▫ 11-point precision: average of precision at 11 standard recall levels (0%, 10%, … , 100%)
  ▫ Mean Average Precision (MAP): average of 11-point precision over all queries

Our experiments
• Partition each dataset into initial set of 50% of documents
• Add documents incrementally, 3% of documents at a time
• For each dataset and each method, determine optimal $k$ for LSI, optimal $k$ and $x$ for EDLSI
  ▫ Optimal in terms of MAP
  ▫ If multiple runs give same MAP, smallest $k$ and $x$ values chosen

Our experiments
• EDLSI: tested $k$ from 5 to 50 for small datasets and 5 to 100 for HARD-1, in increments of 5
• EDLSI: tested $x$ from 0.1 to 0.9 by 0.1
• LSI: tested $k$ from 25 to 200 for small datasets and 25 to 500 for HARD-1, in increments of 25
• For HARD-2, same parameters as HARD-1 used
• Each dataset tested with recomputing, folding-in, updating, and both folding-up methods

Results
• EDLSI generally matched or outperformed LSI in terms of MAP
• EDLSI always outperformed LSI in terms of run time and memory requirements
• EDLSI reaches optimal MAP at small $k$, then changes little as $k$ increases further
• EDLSI MAP does not change much near optimal $x$ value of 0.1

Conclusions
• EDLSI in combination with PSVD updating techniques provides an improvement in MAP over both EDLSI alone and LSI with PSVD updating
• EDLSI improves on LSI in terms of run time and memory considerations
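
The three scoring rules from the slides (vector-space $w = q^T A$, LSI $q^T A_k$, and the EDLSI weighted sum $w = x (q^T A_k) + (1 - x)(q^T A)$) can be sketched with NumPy as follows. This is a minimal illustration, not the authors' implementation: the function names, the toy 5-term by 4-document matrix, and the query are all made up for the example, and a dense full SVD stands in for whatever sparse PSVD solver would be used on a real collection.

```python
import numpy as np

def rank_k_factors(A, k):
    """Return U_k, the k largest singular values, and V_k^T for a terms x documents matrix A."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    return U[:, :k], s[:k], Vt[:k, :]

def vector_space_scores(q, A):
    """Vector-space retrieval: w = q^T A."""
    return q @ A

def lsi_scores(q, U_k, s_k, Vt_k):
    """LSI retrieval: q^T A_k, computed from the factors without forming A_k."""
    return ((q @ U_k) * s_k) @ Vt_k

def edlsi_scores(q, A, U_k, s_k, Vt_k, x):
    """EDLSI: weighted sum x * (q^T A_k) + (1 - x) * (q^T A)."""
    return x * lsi_scores(q, U_k, s_k, Vt_k) + (1.0 - x) * vector_space_scores(q, A)

# Hypothetical toy data: 5 terms (rows) x 4 documents (columns).
A = np.array([
    [1.0, 0.0, 1.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
    [1.0, 0.0, 0.0, 1.0],
])
q = np.array([1.0, 1.0, 0.0, 0.0, 0.0])  # query containing the first two terms

U_k, s_k, Vt_k = rank_k_factors(A, k=2)
w = edlsi_scores(q, A, U_k, s_k, Vt_k, x=0.2)
ranking = np.argsort(-w)  # document indices, highest score first
print(w, ranking)
```

Setting `x = 0` recovers plain vector-space scores and `x = 1` recovers LSI scores, which makes the weighting parameter easy to sanity-check before tuning it.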
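
To make the contrast between folding-in and updating concrete, the sketch below implements folding-in exactly as written on the slide ($D_k = D^T U_k \Sigma_k^{-1}$, appended to the bottom of $V_k$) and one possible form of updating. The slides only say that updating uses a smaller QR decomposition and PSVD, so the specific construction here (a QR of the part of $D$ outside the span of $U_k$, followed by an SVD of a small $(k+p) \times (k+p)$ matrix) is an assumption on my part, as are all function names and the random test data. The `orthogonality_loss` helper is likewise a hypothetical stand-in for the kind of error measure a folding-up threshold could use.

```python
import numpy as np

def truncated_svd(A, k):
    """Return U_k, the k largest singular values, and V_k (documents x k)."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    return U[:, :k], s[:k], Vt[:k, :].T

def fold_in(D, U_k, s_k):
    """Folding-in: D_k = D^T U_k Sigma_k^{-1}, one row per new document."""
    return (D.T @ U_k) / s_k

def orthogonality_loss(V):
    """2-norm of V^T V - I; zero when the columns of V are orthonormal."""
    return np.linalg.norm(V.T @ V - np.eye(V.shape[1]), 2)

def update_documents(U_k, s_k, V_k, D):
    """Rank-k PSVD of [A_k, D] via a small QR and a small SVD (assumed construction)."""
    k, p = s_k.size, D.shape[1]
    n = V_k.shape[0]
    UtD = U_k.T @ D
    Q, R = np.linalg.qr(D - U_k @ UtD)        # component of D orthogonal to span(U_k)
    K = np.block([[np.diag(s_k), UtD],
                  [np.zeros((p, k)), R]])     # small (k+p) x (k+p) matrix
    Uh, sh, Vht = np.linalg.svd(K)
    U_new = np.hstack([U_k, Q]) @ Uh[:, :k]
    V_big = np.block([[V_k, np.zeros((n, p))],
                      [np.zeros((p, k)), np.eye(p)]])
    V_new = V_big @ Vht.T[:, :k]
    return U_new, sh[:k], V_new

# Hypothetical data: 8 terms, 6 initial documents, 2 new documents, k = 3.
rng = np.random.default_rng(0)
A = rng.random((8, 6))
D = rng.random((8, 2))
U_k, s_k, V_k = truncated_svd(A, k=3)

V_folded = np.vstack([V_k, fold_in(D, U_k, s_k)])       # folding-in
U_up, s_up, V_up = update_documents(U_k, s_k, V_k, D)   # updating

print("loss after folding-in:", orthogonality_loss(V_folded))
print("loss after updating:  ", orthogonality_loss(V_up))
```

Folding-in leaves $U_k$ and $\Sigma_k$ untouched and only appends rows to $V_k$, which is why it is cheap and why the columns of the enlarged $V_k$ drift away from orthonormality. The update recomputes all three factors from a matrix whose size depends on $k$ and the number of new documents rather than on the collection size.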
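
The evaluation metrics listed on the slides can be written out in a few lines. The sketch below follows the slide definitions: 11-point precision is the average of precision at the recall levels 0%, 10%, …, 100%, and MAP is the average of that value over all queries. One detail is an assumption, because the slides do not state it: precision at a recall level is taken here as the highest precision at any rank whose recall is at least that level (the usual interpolation rule). Function names and the toy rankings are hypothetical, and each query is assumed to have at least one relevant document.

```python
def precision_recall_at_ranks(ranking, relevant):
    """Return (precision, recall) after each rank of a ranked list of document ids."""
    hits = 0
    points = []
    for rank, doc in enumerate(ranking, start=1):
        if doc in relevant:
            hits += 1
        points.append((hits / rank, hits / len(relevant)))
    return points

def eleven_point_precision(ranking, relevant):
    """Average of interpolated precision at recall 0.0, 0.1, ..., 1.0."""
    points = precision_recall_at_ranks(ranking, relevant)
    levels = [i / 10 for i in range(11)]
    total = 0.0
    for level in levels:
        # Interpolation (assumed): best precision at any rank reaching this recall.
        candidates = [p for p, r in points if r >= level - 1e-12]
        total += max(candidates) if candidates else 0.0
    return total / len(levels)

def mean_average_precision(rankings, relevant_sets):
    """MAP as defined on the slides: mean of 11-point precision over all queries."""
    scores = [eleven_point_precision(r, rel) for r, rel in zip(rankings, relevant_sets)]
    return sum(scores) / len(scores)

# Hypothetical example: two queries over documents 0..3.
rankings = [[0, 1, 2, 3], [0, 2, 1, 3]]
relevant_sets = [{0, 2}, {0, 2}]
print(mean_average_precision(rankings, relevant_sets))
```

In a parameter sweep like the one described in the slides, this MAP value would be computed for every tested $k$ (and $x$ for EDLSI), with the smallest parameter values kept when several runs tie.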