Chapter #7 – Properties of Expectation Question #4:

advertisement

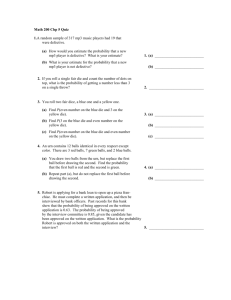

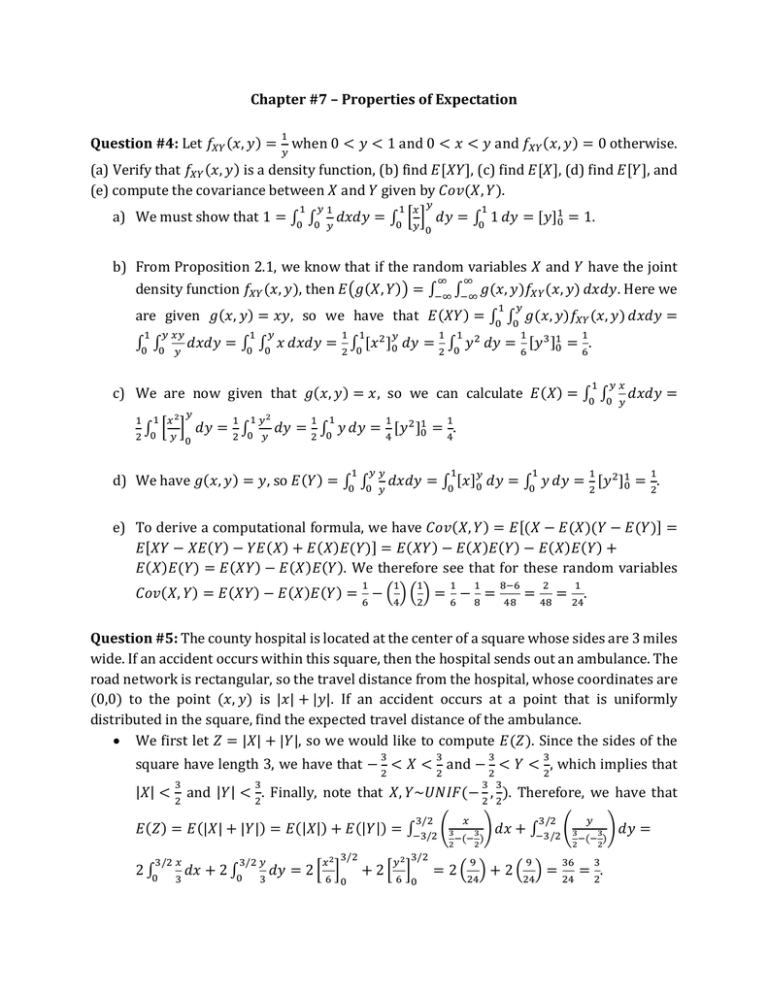

Chapter #7 – Properties of Expectation 1 Question #4: Let 𝑓𝑋𝑌 (𝑥, 𝑦) = 𝑦 when 0 < 𝑦 < 1 and 0 < 𝑥 < 𝑦 and 𝑓𝑋𝑌 (𝑥, 𝑦) = 0 otherwise. (a) Verify that 𝑓𝑋𝑌 (𝑥, 𝑦) is a density function, (b) find 𝐸[𝑋𝑌], (c) find 𝐸[𝑋], (d) find 𝐸[𝑌], and (e) compute the covariance between 𝑋 and 𝑌 given by 𝐶𝑜𝑣(𝑋, 𝑌). 1 1 𝑥 𝑦 𝑦1 a) We must show that 1 = ∫0 ∫0 1 𝑑𝑥𝑑𝑦 = ∫0 [𝑦] 𝑑𝑦 = ∫0 1 𝑑𝑦 = [𝑦]10 = 1. 𝑦 0 b) From Proposition 2.1, we know that if the random variables 𝑋 and 𝑌 have the joint ∞ ∞ density function 𝑓𝑋𝑌 (𝑥, 𝑦), then 𝐸(𝑔(𝑋, 𝑌)) = ∫−∞ ∫−∞ 𝑔(𝑥, 𝑦)𝑓𝑋𝑌 (𝑥, 𝑦) 𝑑𝑥𝑑𝑦. Here we 1 𝑦 are given 𝑔(𝑥, 𝑦) = 𝑥𝑦, so we have that 𝐸(𝑋𝑌) = ∫0 ∫0 𝑔(𝑥, 𝑦)𝑓𝑋𝑌 (𝑥, 𝑦) 𝑑𝑥𝑑𝑦 = 1 𝑦 𝑥𝑦 ∫0 ∫0 𝑦 1 𝑦 1 1 1 1 𝑦 1 1 𝑑𝑥𝑑𝑦 = ∫0 ∫0 𝑥 𝑑𝑥𝑑𝑦 = 2 ∫0 [𝑥 2 ]0 𝑑𝑦 = 2 ∫0 𝑦 2 𝑑𝑦 = 6 [𝑦 3 ]10 = 6. 1 𝑦𝑥 c) We are now given that 𝑔(𝑥, 𝑦) = 𝑥, so we can calculate 𝐸(𝑋) = ∫0 ∫0 1 1 𝑥2 𝑦 1 𝑦2 1 ∫ [ ] 𝑑𝑦 = 2 ∫0 2 0 𝑦 𝑦 0 1 1 1 𝑦 𝑑𝑥𝑑𝑦 = 1 𝑑𝑦 = 2 ∫0 𝑦 𝑑𝑦 = 4 [𝑦 2 ]10 = 4. 1 𝑦𝑦 d) We have 𝑔(𝑥, 𝑦) = 𝑦, so 𝐸(𝑌) = ∫0 ∫0 1 1 𝑦 1 1 𝑑𝑥𝑑𝑦 = ∫0 [𝑥]0 𝑑𝑦 = ∫0 𝑦 𝑑𝑦 = 2 [𝑦 2 ]10 = 2. 𝑦 e) To derive a computational formula, we have 𝐶𝑜𝑣(𝑋, 𝑌) = 𝐸[(𝑋 − 𝐸(𝑋)(𝑌 − 𝐸(𝑌)] = 𝐸[𝑋𝑌 − 𝑋𝐸(𝑌) − 𝑌𝐸(𝑋) + 𝐸(𝑋)𝐸(𝑌)] = 𝐸(𝑋𝑌) − 𝐸(𝑋)𝐸(𝑌) − 𝐸(𝑋)𝐸(𝑌) + 𝐸(𝑋)𝐸(𝑌) = 𝐸(𝑋𝑌) − 𝐸(𝑋)𝐸(𝑌). We therefore see that for these random variables 1 1 1 1 1 𝐶𝑜𝑣(𝑋, 𝑌) = 𝐸(𝑋𝑌) − 𝐸(𝑋)𝐸(𝑌) = 6 − (4) (2) = 6 − 8 = 8−6 48 2 1 = 48 = 24. Question #5: The county hospital is located at the center of a square whose sides are 3 miles wide. If an accident occurs within this square, then the hospital sends out an ambulance. The road network is rectangular, so the travel distance from the hospital, whose coordinates are (0,0) to the point (𝑥, 𝑦) is |𝑥| + |𝑦|. If an accident occurs at a point that is uniformly distributed in the square, find the expected travel distance of the ambulance. We first let 𝑍 = |𝑋| + |𝑌|, so we would like to compute 𝐸(𝑍). Since the sides of the 3 3 3 3 square have length 3, we have that − 2 < 𝑋 < 2 and − 2 < 𝑌 < 2, which implies that |𝑋| < 3 2 3 3 3 and |𝑌| < 2. Finally, note that 𝑋, 𝑌~𝑈𝑁𝐼𝐹(− 2 , 2). Therefore, we have that 3/2 𝐸(𝑍) = 𝐸(|𝑋| + |𝑌|) = 𝐸(|𝑋|) + 𝐸(|𝑌|) = ∫−3/2 (3 2 3/2 𝑥 2 ∫0 3/2 𝑦 𝑑𝑥 + 2 ∫0 3 𝑥2 𝑑𝑦 = 2 [ 6 ] 3 3/2 0 𝑦2 3/2 + 2[ 6 ] 0 3/2 𝑥 3 2 ) 𝑑𝑥 + ∫−3/2 (3 −(− ) 9 2 9 36 𝑦 3 2 ) 𝑑𝑦 = −(− ) 3 = 2 (24) + 2 (24) = 24 = 2. Question #6: A fair die is rolled 10 times. Calculate the expected sum of the 10 rolls. We have a sum 𝑋 of 10 independent random variables 𝑋𝑖 . We therefore have that 1 1 10 10 10 𝐸(𝑋) = 𝐸(∑10 𝑖=1 𝑋𝑖 ) = ∑𝑖=1 𝐸(𝑋𝑖 ) = ∑𝑖=1 [1 ∗ 6 + ⋯ + 6 ∗ 6] = ∑𝑖=1 21 6 21 = 10 ( 6 ) = 35. We could also have found this from noting that 𝐸(𝑋𝑖 ) = 3.5 for all 𝑖 = 1,2, … ,10, so 10 that 𝐸(𝑋) = ∑10 𝑖=1 𝐸(𝑋𝑖 ) = ∑𝑖=1 3.5 = 10(3.5) = 35. Question #9: A total of 𝑛 balls, numbered 1 through 𝑛, are put into 𝑛 urns, also numbered 1 through 𝑛 in such a way that ball 𝑖 is equally likely to go into any of the urns 1,2, … , 𝑖. Find (a) the expected number of urns that are empty; (b) probability none of the urns is empty. a) Let 𝑋 be the number of empty urns and define an indicator variable 𝑋𝑖 = 1 if urn 𝑖 is empty and 𝑋𝑖 = 0 otherwise. We must find the expected value of this indicator random variable, which is simply the probability that it is one: 𝐸(𝑋𝑖 ) = 𝑃(𝑋𝑖 = 1). Since a ball can land in any of the 𝑖 urns with equal probability, the probability that 1 the ith ball will not land in urn 𝑖 is (1 − 𝑖 ). Similarly, the probability that the i + 1st 1 ball will not land in urn 𝑖 is (1 − 𝑖+1). Using this reasoning, we calculate that 𝐸(𝑋𝑖 ) = 1 1 1 1 𝑃(𝑋𝑖 = 1) = (1 − 𝑖 ) (1 − 𝑖+1) (1 − 𝑖+2) … (1 − 𝑛) = ( 𝑖−1 𝑖 𝑖 ) (𝑖+1) … ( 𝑛−1 𝑛 )= can then use this to calculate the 𝐸(𝑋) = 𝐸(∑𝑛𝑖=1 𝑋𝑖 ) = ∑𝑛𝑖=1 𝐸(𝑋𝑖 ) = 1 𝑛 1 1 𝑛(𝑛−1) 𝑛 𝑛 ∑𝑛𝑖=1 𝑖 − 1 = ∑𝑛−1 𝑖=0 𝑖 = ( 2 )= 𝑖−1 . We 𝑛 𝑖−1 ∑𝑛𝑖=1 𝑛 = 𝑛−1 2 . b) For all of the urns to have at least one ball in them, the nth ball must be dropped into 1 the nth urn, which occurs with probability 𝑛. Similarly, the n – 1st ball must be dropped into the n – 1st urn, which has a probability of 1 1 1 , and so on. We can therefore 𝑛−1 1 1 1 calculate that 𝑃(𝑛𝑜 𝑒𝑚𝑝𝑡𝑦 𝑢𝑟𝑛𝑠) = (𝑛) (𝑛−1) … (2) (1) = 𝑛!. Question #11: Consider 𝑛 independent flips of a coin having probability 𝑝 of landing on heads. Say that a changeover occurs whenever an outcome differs from the one preceding it. For instance, if 𝑛 = 5 and the outcome is 𝐻𝐻𝑇𝐻𝑇, then there are 3 changeovers. Find the expected number of changeovers. Hint: Express the number of changeovers as the sum of a total of 𝑛 − 1 Bernoulli random variables. Let 𝑋𝑖 = 1 if a changeover occurs on the ith flip and 𝑋𝑖 = 0 otherwise. Thus, 𝐸(𝑋𝑖 ) = 𝑃(𝑋𝑖 = 1) = 𝑃(𝑖 − 1 𝑖𝑠 𝐻, 𝑖 𝑖𝑠 𝑇) + 𝑃(𝑖 − 1 𝑖𝑠 𝑇, 𝑖 𝑖𝑠 𝐻) = 𝑝(1 − 𝑝) + (1 − 𝑝)𝑝 = 2𝑝(1 − 𝑝) whenever 𝑖 ≥ 2. If we let 𝑋 denote the number of changeovers, then we have that 𝐸(𝑋) = 𝐸(∑𝑛𝑖=2 𝑋𝑖 ) = ∑𝑛𝑖=2 𝐸(𝑋𝑖 ) = ∑𝑛𝑖=2 2𝑝(1 − 𝑝) = 2(𝑛 − 1)(1 − 𝑝)𝑝. Question #20: In an urn containing 𝑛 balls, the ith ball has weight 𝑊(𝑖), 𝑖 = 1, … , 𝑛. The balls are removed without replacement, one at a time, according to: At each selection, the probability that a given ball in the urn is chosen is equal to its weight divided by the sum of the weights remaining in the urn. For instance, if at some time 𝑖1 , … , 𝑖𝑟 is the set of balls remaining in the urn, then the next selection will be 𝑖𝑗 with probability ∑𝑟 𝑊(𝑖𝑗 ) 𝑘=1 𝑊(𝑖𝑘 ) , 𝑗 = 1, … , 𝑟. Compute the expected number of balls that are withdrawn before ball number 1 is removed. Let 𝑋𝑗 = 1 if ball 𝑗 is removed before ball 1 and 𝑋𝑗 = 0 otherwise. Then we see that 𝑊(𝑗) 𝐸(∑𝑗≠1 𝑋𝑗 ) = ∑𝑗≠1 𝐸(𝑋𝑗 ) = ∑𝑗≠1 𝑃(𝑋𝑗 = 1) = ∑𝑗≠1 𝑊(𝑗)+𝑊(1). Question #33: If 𝐸(𝑋) = 1 and 𝑉𝑎𝑟(𝑋) = 5, find (a) 𝐸[(2 + 𝑋)2 ], and (b) 𝑉𝑎𝑟(4 + 3𝑋). a) We calculate that the 𝐸[(2 + 𝑋)2 ] = 𝐸(4 + 4𝑋 + 𝑋 2 ) = 𝐸(4) + 4𝐸(𝑋) + 𝐸(𝑋 2 ) = 4 + 4(1) + 𝑉𝑎𝑟(𝑋) + [𝐸(𝑋)]2 = 4 + 4 + 5 + 12 = 14, which can calculate using the identity that 𝑉𝑎𝑟(𝑋) = 𝐸(𝑋 2 ) − [𝐸(𝑋)]2. b) 𝑉𝑎𝑟(4 + 3𝑋) = 𝑉𝑎𝑟(3𝑋) = 32 𝑉𝑎𝑟(𝑋) = 9(5) = 45. Question #38: If the joint density 𝑓𝑋𝑌 (𝑥, 𝑦) = 2𝑒 −2𝑥 𝑥 when 1 ≤ 𝑥 < ∞ and 0 ≤ 𝑦 ≤ 𝑥 and 𝑓𝑋𝑌 (𝑥, 𝑦) = 0 otherwise, compute 𝐶𝑜𝑣(𝑋, 𝑌). Since 𝐶𝑜𝑣(𝑋, 𝑌) = 𝐸(𝑋𝑌) − 𝐸(𝑋)𝐸(𝑌), we must compute the following: ∞ 𝑥 o 𝐸(𝑋𝑌) = ∫0 ∫0 𝑥𝑦 ∗ ∞ 1 2𝑒 −2𝑥 𝑥 1 2 ∞ 𝑥 ∞ ∫0 𝑥 2 𝑒 −2𝑥 𝑑𝑥 = (2) (2) ∫0 𝑡 2 𝑒 −𝑡 𝑑𝑡 = 𝑥 2𝑒 −2𝑥 o Since 𝑓𝑋 (𝑥) = ∫0 𝑥 ∞ 𝑑𝑦𝑑𝑥 = ∫0 ∫0 2𝑦 𝑒 −2𝑥 𝑑𝑦𝑑𝑥 = ∫0 [𝑦 2 𝑒 −2𝑥 ]0𝑥 𝑑𝑥 = Γ(3) 8 1 = 4. 𝑥 1 𝑑𝑦 = 2𝑒 −2𝑥 , we have 𝐸(𝑋) = ∫0 2𝑥𝑒 −2𝑥 𝑑𝑥 = 2 by doing 1 integration by parts with 𝑢 = 𝑥, 𝑑𝑣 = 2𝑒 −2𝑥 so that 𝑑𝑢 = 1𝑑𝑥, 𝑣 = − 2 𝑒 −2𝑥 . ∞ ∞ o 𝐸(𝑌) = ∫0 ∫𝑦 2𝑦 ∗ ∞ ∫0 𝑥𝑒 −2𝑥 𝑑𝑥 = 𝑒 −2𝑥 𝑥 ∞ 𝑡 −𝑡 ∫ 𝑒 2 0 2 1 ∞ 𝑥 𝑑𝑥𝑑𝑦 = ∫0 ∫0 2𝑦 ∗ 𝑑𝑡 = Γ(2) 4 𝑒 −2𝑥 𝑥 ∞ 𝑦 2 𝑒 −2𝑥 𝑑𝑥𝑑𝑦 = ∫0 [ 1 𝑥 = 4 with 𝑡 = 2𝑥 and 𝑑𝑡 = 2𝑑𝑥. 1 1 1 1 1 1 Thus, 𝐶𝑜𝑣(𝑋, 𝑌) = 𝐸(𝑋𝑌) − 𝐸(𝑋)𝐸(𝑌) = (4) − (2) (4) = 4 − 8 = 8. 𝑥 ] 𝑑𝑦 = 0 Question #41: A pond contains 100 fish, of which 30 are carp. If 20 fish are caught, what are the mean and variance of the number of carp among the 20? Let 𝑋 denote the number of carp caught of the 20 fish caught. We assume that each of the 𝐶(100,20) ways to catch 20 fish from 100 are equally likely, so it is clear that 𝑋~𝐻𝑌𝑃𝐺(𝑛 = 20, 𝑁 = 100, 𝑚 = 30). This implies that 𝐸(𝑋) = 𝑉𝑎𝑟(𝑋) = 𝑛𝑚 (𝑛−1)(𝑚−1) 𝑁 [ 𝑁−1 +1− 𝑛𝑚 𝑁 19∗29 ] = 6( 99 + 5) = 𝑛𝑚 𝑁 = 20∗30 100 = 6, while 112 33 . Question #22: Suppose that 𝑋1, 𝑋2 and 𝑋3 are independent Poisson random variables with respective parameters 𝜆1 , 𝜆2 and 𝜆3 . Let 𝑋 = 𝑋1 + 𝑋2 and 𝑌 = 𝑋2 + 𝑋3 . Calculate (a) 𝐸(𝑋) and 𝐸(𝑌), and (b) 𝐶𝑜𝑣(𝑋, 𝑌). a) 𝐸(𝑋) = 𝐸(𝑋1 + 𝑋2 ) = 𝐸(𝑋1 ) + 𝐸(𝑋2 ) = 𝜆1 + 𝜆2 and 𝐸(𝑌) = 𝐸(𝑋2 + 𝑋3 ) = 𝜆2 + 𝜆3 since whenever a random variable 𝑍~𝑃𝑂𝐼𝑆(𝜆), we have that 𝐸(𝑍) = 𝑉𝑎𝑟(𝑍) = 𝜆. b) 𝐶𝑜𝑣(𝑋, 𝑌) = 𝐶𝑜𝑣(𝑋1 + 𝑋2 , 𝑋2 + 𝑋3 ) = 𝐶𝑜𝑣(𝑋1 , 𝑋2 ) + 𝐶𝑜𝑣(𝑋1 , 𝑋3 ) + 𝐶𝑜𝑣(𝑋2 . 𝑋2 ) + 𝐶𝑜𝑣(𝑋2 , 𝑋3 ) = 0 + 0 + 𝑉𝑎𝑟(𝑋2 ) + 0 = 𝜆2 since the three random variables are independent (implying that their covariance is zero) and 𝐶𝑜𝑣(𝑍, 𝑍) = 𝑉𝑎𝑟(𝑍).