Accomplishments and New Directions for 2004:

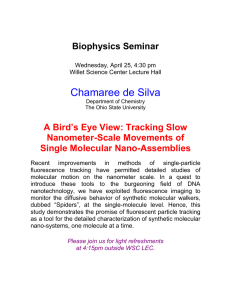

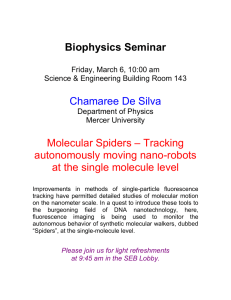
