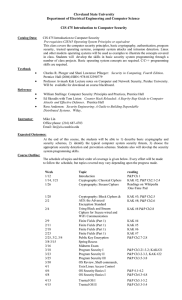

Numerical Linear Algebra: Matrix Norms & Condition


Introduction to Numerical Analysis I

Handout 14

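1 Numerical Linear Algebra (cont)

1.2 Norm of Matrix

Reminder: The vector norms we use are

$$\|v\|_1 = \sum_{i=1}^{n} |v_i|, \qquad \|v\|_2 = \sqrt{\sum_{i=1}^{n} |v_i|^2}, \qquad \|v\|_\infty = \max_{i} |v_i|.$$

Definition 1.1 (Spectral Radius). Let $A$ be an $n \times n$ matrix and let $\lambda_j$ be the $j$-th eigenvalue of $A$. The spectral radius $\rho$ is

$$\rho(A_{n,n}) = \max_{1 \le j \le n} |\lambda_j|.$$

Definition 1.2 (An induced norm of matrix). A matrix norm induced from a vector norm $\|\cdot\|$ is given by

$$\|A\| = \max_{0 \ne \vec{v} \in \mathbb{R}^n} \frac{\|A\vec{v}\|}{\|\vec{v}\|}.$$

Theorem 1.3.

$$\|A\| = \max_{\vec{v} \in \mathbb{R}^n,\ \|\vec{v}\| = 1} \|A\vec{v}\|.$$

Proof:

$$\|A\| = \max_{0 \ne \vec{v} \in \mathbb{R}^n} \frac{\|A\vec{v}\|}{\|\vec{v}\|} = \max_{0 \ne \vec{v} \in \mathbb{R}^n} \left\| \frac{A\vec{v}}{\|\vec{v}\|} \right\| = \max_{0 \ne \vec{v} \in \mathbb{R}^n} \left\| A \frac{\vec{v}}{\|\vec{v}\|} \right\| = \max_{\vec{v} \in \mathbb{R}^n,\ \|\vec{v}\| = 1} \|A\vec{v}\|.$$

Properties:

• $\|A\vec{v}\| \le \|A\| \, \|\vec{v}\|$, $\forall \vec{v} \in \mathbb{R}^n$

Proof: $\forall \vec{v} \in \mathbb{R}^n \setminus \{0\}$:

$$\frac{\|A\vec{v}\|}{\|\vec{v}\|} \le \|A\| \ \Rightarrow\ \|A\vec{v}\| \le \|A\| \, \|\vec{v}\|.$$

For $\vec{v} = 0$ both sides are zero.

• $\|AB\| \le \|A\| \, \|B\|$

Proof:

$$\|AB\| = \max_{\|\vec{v}\| = 1} \|AB\vec{v}\| \le \max_{\|\vec{v}\| = 1} \|A\| \, \|B\vec{v}\| \le \max_{\|\vec{v}\| = 1} \|A\| \, \|B\| \, \|\vec{v}\| = \|A\| \, \|B\|.$$

The norms we will use:

1. $\|A\|_1 = \max_{\text{columns } j} \|A_{\downarrow j}\|_1 = \max_{j} \sum_{i} |a_{ij}|$, where $A_{\downarrow j}$ denotes the $j$-th column of $A$.

Proof:

$$\|Av\|_1 = \Big\| \sum_{j=1}^{n} v_j A_{\downarrow j} \Big\|_1 \le \sum_{j=1}^{n} |v_j| \, \|A_{\downarrow j}\|_1 \le \max_{\text{columns } j} \|A_{\downarrow j}\|_1 \, \|v\|_1.$$

Which implies

$$\|A\|_1 = \max_{\|v\|_1 = 1} \|Av\|_1 \le \max_{\text{columns } j} \|A_{\downarrow j}\|_1.$$

From the other side, by definition $\|A\|_1 \ge \|Av\|_1$ for any $\|v\|_1 = 1$. Choose $v = e_m = (0, \dots, 1, \dots, 0)^T$, where $m$ satisfies

$$\|A_{\downarrow m}\|_1 = \max_{\text{columns } j} \|A_{\downarrow j}\|_1,$$

to get $Ae_m = A_{\downarrow m}$, that is $\|A\|_1 \ge \|A_{\downarrow m}\|_1$.

2. $\|A\|_\infty = \max_{\text{rows } i} \{ \|A_{i \rightarrow}\|_1 \} = \max_{i} \Big\{ \sum_{j} |a_{ij}| \Big\}$, where $A_{i \rightarrow}$ denotes the $i$-th row of $A$; the proof is similar.

3. $\|A\|_2 = \sqrt{\rho(AA^T)}$

Proof: Since $A^T A$ is a symmetric matrix, one can write $A^T A = Q^T \Lambda Q$, where $Q$ is an orthogonal matrix and $\Lambda = \mathrm{diag}(\mu_1, \dots, \mu_n)$ is the diagonal matrix of the eigenvalues $\mu_i \ge 0$ of $A^T A$. (These equal the squared eigenvalues of $A$ when $A$ is symmetric, but not for a general matrix.) Thus, with $y = Qv$,

$$\|Av\|_2^2 = (Av, Av) = Av \cdot Av = v^T A^T A v = v^T Q^T \Lambda Q v = y^T \Lambda y,$$

and $\|y\|_2 = \|v\|_2$ because $Q$ is orthogonal. Therefore

$$\|A\|_2 = \max_{\|v\|_2 = 1} \|Av\|_2 = \max_{\|y\|_2 = 1} \sqrt{y^T \Lambda y} = \sqrt{\rho(A^T A)} = \sqrt{\rho(AA^T)},$$

where the last equality holds because $A^T A$ and $AA^T$ have the same eigenvalues.

Example 1.4. Let $A = \begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix}$, then

1. $\|A\|_1 = \max \{ \|(1, 3)^T\|_1, \|(2, 4)^T\|_1 \} = 6$

2. $\|A\|_\infty = \max \{ \|(1, 2)\|_1, \|(3, 4)\|_1 \} = 7$

3. $\|A\|_2 = \sqrt{\rho(AA^T)} = \sqrt{\rho\left( \begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix} \begin{pmatrix} 1 & 3 \\ 2 & 4 \end{pmatrix} \right)} = \sqrt{\rho \begin{pmatrix} 5 & 11 \\ 11 & 25 \end{pmatrix}}$

The eigenvalues of $AA^T$ are the roots of

$$\left| \lambda I - AA^T \right| = \begin{vmatrix} \lambda - 5 & -11 \\ -11 & \lambda - 25 \end{vmatrix} = (\lambda - 5)(\lambda - 25) - 121 = \lambda^2 - 30\lambda + 4,$$

$$\lambda_{1,2} = \frac{30 \pm \sqrt{900 - 16}}{2} = 15 \pm \sqrt{221}.$$

Thus,

$$\|A\|_2 = \sqrt{\rho(AA^T)} = \sqrt{15 + \sqrt{221}} \approx 5.465.$$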
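The three matrix norms used in Example 1.4 can be checked numerically. The following is a minimal sketch, not part of the handout: the helper names `norm_1`, `norm_inf` and `norm_2_2x2` are assumptions introduced here, and the 2-norm routine handles only the 2 × 2 case by solving the characteristic polynomial of $AA^T$ in closed form, as in the example.

```python
import math

def norm_1(A):
    """Induced 1-norm: the largest absolute column sum."""
    return max(sum(abs(row[j]) for row in A) for j in range(len(A[0])))

def norm_inf(A):
    """Induced infinity-norm: the largest absolute row sum."""
    return max(sum(abs(a) for a in row) for row in A)

def norm_2_2x2(A):
    """Induced 2-norm of a 2x2 matrix, computed as sqrt(rho(A A^T))."""
    (a, b), (c, d) = A
    # Entries of the symmetric matrix A A^T = [[p, q], [q, r]].
    p, q, r = a * a + b * b, a * c + b * d, c * c + d * d
    # Its eigenvalues solve lambda^2 - (p + r) lambda + (p r - q^2) = 0.
    trace, det = p + r, p * r - q * q
    largest = (trace + math.sqrt(trace * trace - 4 * det)) / 2
    return math.sqrt(largest)

A = [[1, 2], [3, 4]]
print(norm_1(A), norm_inf(A), round(norm_2_2x2(A), 3))  # 6 7 5.465
```

For larger matrices the 1-norm and infinity-norm helpers work unchanged, while the 2-norm would need a general eigenvalue solver in place of the quadratic formula.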

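1.2.1 Matrix Condition Number

Consider the linear system $Ax = b$, where $b$ was obtained with error. That is, instead of the original system we solve

$$A\tilde{x} = A(x + \delta x) = \tilde{b} = b + \delta b.$$

We want to analyze the sensitivity of the solution $x$ to small changes in the data $b$. The relative error in $x$ is given by

$$\begin{aligned}
\frac{\|\tilde{x} - x\|}{\|x\|} = \frac{\|\delta x\|}{\|x\|} &= \frac{\|A^{-1}\tilde{b} - A^{-1}b\|}{\|x\|} = \frac{\|A^{-1}\delta b\|}{\|x\|} \le \frac{\|A^{-1}\| \, \|\delta b\|}{\|x\|} \\
&= \frac{\|A^{-1}\| \, \|\delta b\| \, \|A\|}{\|A\| \, \|x\|} \le \frac{\|A^{-1}\| \, \|\delta b\|}{\|Ax\|} \, \|A\| = \|A^{-1}\| \, \|A\| \, \frac{\|\delta b\|}{\|b\|}.
\end{aligned}$$

Definition 1.5 (Matrix Condition Number). Let $A_{n \times n}$ be a non-singular matrix, then the condition number of the matrix is given by

$$\operatorname{cond} A = \|A\| \, \|A^{-1}\|.$$

With this notation the estimate above reads

$$\frac{\|\delta x\|}{\|x\|} \le \operatorname{cond}(A) \, \frac{\|\delta b\|}{\|b\|}.$$

Example 1.6. Let

$$A = \begin{pmatrix} 100 & 99 \\ 99 & 98 \end{pmatrix}, \quad b = \begin{pmatrix} 1.005 \\ 0.995 \end{pmatrix}, \quad \delta b = \begin{pmatrix} 0.1 \\ -0.1 \end{pmatrix}.$$

1. Find the solution to

$$A\tilde{x} = b + \delta b = \tilde{b} \approx \begin{pmatrix} 1.1 \\ 0.9 \end{pmatrix},$$

where $\tilde{b}$ is rounded to one decimal place. For this right-hand side,

$$\tilde{x} = \begin{pmatrix} -18.7 \\ 18.9 \end{pmatrix}.$$

2. Compute the relative error in input and output. The exact solution is

$$x = \frac{1}{100 \cdot 98 - 99^2} \begin{pmatrix} 1.005 \cdot 98 - 99 \cdot 0.995 \\ 100 \cdot 0.995 - 99 \cdot 1.005 \end{pmatrix} = \begin{pmatrix} 0.015 \\ -0.005 \end{pmatrix},$$

so that

$$\frac{\|\delta b\|_\infty}{\|b\|_\infty} \approx 0.1, \qquad \delta x = \tilde{x} - x \approx \begin{pmatrix} -19 \\ 19 \end{pmatrix}, \qquad \frac{\|\delta x\|_\infty}{\|x\|_\infty} \approx \frac{19}{0.015} \approx 1267.$$

Note that the problem is hard, that is, the problem is ill-conditioned: a small error in the input causes a very big error in the output. This is usually the case in inverse problems.

3. Bound the error in output as a function of the error in input.

$$A^{-1} = \begin{pmatrix} 100 & 99 \\ 99 & 98 \end{pmatrix}^{-1} = \begin{pmatrix} -98 & 99 \\ 99 & -100 \end{pmatrix}$$

Thus $\operatorname{cond}(A, \infty) = \|A^{-1}\|_\infty \, \|A\|_\infty = 199^2 \approx 40000$, therefore

$$1267 \approx \frac{\|\delta x\|_\infty}{\|x\|_\infty} \le \operatorname{cond}(A, \infty) \, \frac{\|\delta b\|_\infty}{\|b\|_\infty} \approx 40000 \cdot 0.1 = 4000.$$

Another way to see the problem is via eigenvalues. For the matrix $A$ in this example there is a big gap between the two eigenvalues:

$$\lambda_1 \approx -\frac{1}{200} = -0.005, \quad v_1 \approx \begin{pmatrix} 1 \\ -1 \end{pmatrix} \qquad \text{and} \qquad \lambda_2 \approx 200, \quad v_2 \approx \begin{pmatrix} 1 \\ 1 \end{pmatrix}.$$

This causes the following problem:

$$Ax = v_2 \ \Rightarrow\ x = \frac{1}{\lambda_2} v_2 \approx \frac{1}{200} v_2,$$

but if we add a small error to the RHS, that is

$$Ax \approx 0.01 v_1 + v_2,$$

we will get

$$x = 0.01 A^{-1} v_1 + A^{-1} v_2 = 0.01 \frac{1}{\lambda_1} v_1 + \frac{1}{\lambda_2} v_2 \approx -200 \cdot 0.01 v_1 + \frac{1}{200} v_2 = -2 v_1 + \frac{1}{200} v_2 \approx -2 v_1.$$

That is, the error was magnified more than the exact part.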
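The numbers in Example 1.6 can be reproduced with a short script. This is an illustrative sketch only, not the handout's own code: the helper names (`inverse_2x2`, `matvec`, `norm_inf_vec`, `norm_inf_mat`) are assumptions introduced here. It uses the unrounded right-hand side $b + \delta b$, so the output error ratio comes out somewhat larger than the rounded figure quoted in the example, and the computed eigenvalues are the unrounded counterparts of $-1/200$ and $200$.

```python
import math

def norm_inf_vec(v):
    """Infinity-norm of a vector: the largest absolute entry."""
    return max(abs(x) for x in v)

def norm_inf_mat(M):
    """Induced infinity-norm of a matrix: the largest absolute row sum."""
    return max(sum(abs(a) for a in row) for row in M)

def inverse_2x2(M):
    """Inverse of a non-singular 2x2 matrix via the adjugate formula."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def matvec(M, v):
    """Matrix-vector product."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

A = [[100, 99], [99, 98]]
b = [1.005, 0.995]
db = [0.1, -0.1]

A_inv = inverse_2x2(A)
x = matvec(A_inv, b)      # exact solution of A x = b
dx = matvec(A_inv, db)    # delta x = A^{-1} delta b

cond = norm_inf_mat(A) * norm_inf_mat(A_inv)
rel_in = norm_inf_vec(db) / norm_inf_vec(b)
rel_out = norm_inf_vec(dx) / norm_inf_vec(x)

# Eigenvalues of the symmetric 2x2 matrix A from its trace and determinant.
trace = A[0][0] + A[1][1]
det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
disc = math.sqrt(trace * trace - 4 * det)
lam_small, lam_large = (trace - disc) / 2, (trace + disc) / 2

print("cond =", cond)
print("relative input error  =", rel_in)
print("relative output error =", rel_out)
print("bound cond * input    =", cond * rel_in)
print("eigenvalues:", lam_small, lam_large)
```

The relative output error stays below `cond * rel_in`, as the estimate with the condition number requires, while exceeding the relative input error by four orders of magnitude.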