Determining Probabilities

Product Rule for Ordered Pairs/k-Tuples:

Proposition

If the first element or object of an ordered pair can be selected in $n_1$ ways, and for each of these $n_1$ ways the second element of the pair can be selected in $n_2$ ways, then the number of pairs is $n_1 \cdot n_2$.

Proposition

Suppose a set consists of ordered collections of $k$ elements ($k$-tuples) and that there are $n_1$ possible choices for the first element; for each choice of the first element, there are $n_2$ possible choices of the second element; . . . ; for each possible choice of the first $k - 1$ elements, there are $n_k$ choices of the $k$th element. Then there are $n_1 \cdot n_2 \cdots n_k$ possible $k$-tuples.
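As a small sanity check of the product rule, the sketch below enumerates every 3-tuple built from three hypothetical choice sets with `itertools.product` and compares the count with $n_1 \cdot n_2 \cdot n_3$. It assumes the simplest setting, where the set of choices at each stage does not depend on earlier choices.

```python
from itertools import product
from math import prod

# Hypothetical choice sets: n1 = 2, n2 = 3, n3 = 4 choices per position.
choices = [["a", "b"], [1, 2, 3], ["w", "x", "y", "z"]]

# Explicitly list every ordered 3-tuple (first, second, third).
tuples = list(product(*choices))

# Product rule: the count should equal n1 * n2 * n3.
predicted = prod(len(c) for c in choices)

print(len(tuples), predicted)  # 24 24
```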

Proposition

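The number of permutations of size $k$ (ordered selections of $k$ objects) that can be formed from $n$ distinct objects is

$$P_{k,n} = n \cdot (n-1) \cdots (n-(k-1)) = \frac{n!}{(n-k)!},$$

where $k! = k \cdot (k-1) \cdots 2 \cdot 1$ is $k$ factorial (by convention $0! = 1$).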
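A minimal Python sketch of $P_{k,n}$: the helper `p_kn` (a hypothetical name) multiplies the $k$ factors $n, n-1, \ldots, n-(k-1)$, and the result is cross-checked against $n!/(n-k)!$, the standard-library `math.perm` (Python 3.8+) and a brute-force count with `itertools.permutations`.

```python
from itertools import permutations
from math import factorial, perm

def p_kn(k: int, n: int) -> int:
    """Number of ordered selections of k objects from n distinct objects."""
    result = 1
    for i in range(k):       # factors n, n - 1, ..., n - (k - 1)
        result *= n - i
    return result

n, k = 6, 3
count = p_kn(k, n)
assert count == factorial(n) // factorial(n - k) == perm(n, k)
# Brute force: list every ordered 3-tuple of distinct elements of {0, ..., 5}.
assert count == sum(1 for _ in permutations(range(n), k))
print(count)  # 120
```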

Proposition

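The number of combinations of size $k$ (unordered subsets of $k$ objects) that can be formed from $n$ distinct objects is

$$\binom{n}{k} = \frac{P_{k,n}}{k!} = \frac{n!}{k!\,(n-k)!},$$

where $k! = k \cdot (k-1) \cdots 2 \cdot 1$ is $k$ factorial.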
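The same kind of check works for combinations. In the sketch below, `n_choose_k` is a hypothetical helper that divides $P_{k,n}$ by $k!$; it is compared with `math.comb` (Python 3.8+) and, for a small case, with a brute-force count from `itertools.combinations`. The 5-card-hand count is only an illustration.

```python
from itertools import combinations
from math import comb, factorial

def n_choose_k(n: int, k: int) -> int:
    """Number of unordered subsets of size k from n distinct objects."""
    p_kn = factorial(n) // factorial(n - k)   # ordered selections P_{k,n}
    return p_kn // factorial(k)               # each subset is counted k! times

# Small case checked by brute force: 3-element subsets of {0, ..., 5}.
assert n_choose_k(6, 3) == sum(1 for _ in combinations(range(6), 3)) == 20

# Number of 5-card hands from a 52-card deck.
print(n_choose_k(52, 5))  # 2598960
assert n_choose_k(52, 5) == comb(52, 5)
```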

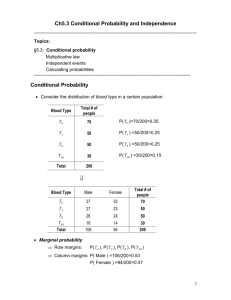

Conditional Probability

Definition

For any two events $A$ and $B$ with $P(B) > 0$, the conditional probability of $A$ given that $B$ has occurred is defined by

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)}.$$

Event $B$ represents prior knowledge: once we know that $B$ has occurred, the probability assigned to event $A$ may change.

The Multiplication Rule

P(A ∩ B) = P(A | B) · P(B)
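For equally likely outcomes, both the definition and the multiplication rule can be checked by counting. The sketch below uses two fair dice with two hypothetical events, $A$ = {the sum is 8} and $B$ = {the first die shows 3}; exact fractions avoid floating-point round-off.

```python
from fractions import Fraction
from itertools import product

# Sample space: 36 equally likely ordered pairs (assumes two fair dice).
S = list(product(range(1, 7), repeat=2))

def prob(event):
    """P(E) = |E| / |S| for equally likely outcomes."""
    return Fraction(sum(1 for s in S if event(s)), len(S))

def cond(a, b):
    """P(A | B) = P(A ∩ B) / P(B); undefined when P(B) = 0."""
    p_b = prob(b)
    if p_b == 0:
        raise ValueError("P(B) must be positive")
    return prob(lambda s: a(s) and b(s)) / p_b

def sum_is_8(s):       # hypothetical event A
    return s[0] + s[1] == 8

def first_is_3(s):     # hypothetical event B
    return s[0] == 3

print(prob(sum_is_8), cond(sum_is_8, first_is_3))  # 5/36 1/6

# Multiplication rule: P(A ∩ B) = P(A | B) · P(B)
joint = prob(lambda s: sum_is_8(s) and first_is_3(s))
assert joint == cond(sum_is_8, first_is_3) * prob(first_is_3) == Fraction(1, 36)
```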

Example 2.29 A chain of video stores sells three different brands of DVD players. Of its DVD player sales, 50% are brand 1, 30% are brand 2, and 20% are brand 3. Each manufacturer offers a 1-year warranty on parts and labor. It is known that 25% of brand 1's DVD players require warranty repair work, whereas the corresponding percentages for brands 2 and 3 are 20% and 10%, respectively.

1. What is the probability that a randomly selected purchaser has bought a brand 1 DVD player that will need repair while under warranty?

2. What is the probability that a randomly selected purchaser has a DVD player that will need repair while under warranty?

3. If a customer returns to the store with a DVD player that needs warranty work, what is the probability that it is a brand 1 DVD player? A brand 2 DVD player? A brand 3 DVD player?
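The three parts can be worked numerically. The sketch below writes $A_i$ = {brand $i$} and $B$ = {needs warranty repair}, uses the multiplication rule for part 1, and then the law of total probability and Bayes' theorem (both stated below) for parts 2 and 3. Exact fractions are used so that the answers come out as ratios.

```python
from fractions import Fraction

# P(A_i): share of DVD player sales for brand i.
prior = {1: Fraction("0.50"), 2: Fraction("0.30"), 3: Fraction("0.20")}
# P(B | A_i): probability that a brand-i player needs warranty repair.
repair_given_brand = {1: Fraction("0.25"), 2: Fraction("0.20"), 3: Fraction("0.10")}

# Part 1 (multiplication rule): P(A_i ∩ B) = P(B | A_i) · P(A_i)
joint = {i: repair_given_brand[i] * prior[i] for i in prior}
print(joint[1])            # 1/8, i.e. 0.125

# Part 2 (law of total probability): P(B) = sum of P(B | A_i) · P(A_i)
p_repair = sum(joint.values())
print(p_repair)            # 41/200, i.e. 0.205

# Part 3 (Bayes' theorem): P(A_i | B) = P(A_i ∩ B) / P(B)
posterior = {i: joint[i] / p_repair for i in prior}
for i, p in posterior.items():
    print(f"brand {i}: {p} ≈ {float(p):.3f}")
# brand 1: 25/41 ≈ 0.610, brand 2: 12/41 ≈ 0.293, brand 3: 4/41 ≈ 0.098
```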

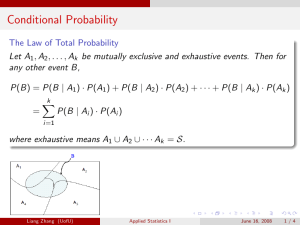

The Law of Total Probability

Let $A_1, A_2, \ldots, A_k$ be mutually exclusive and exhaustive events. Then for any other event $B$,

$$P(B) = P(B \mid A_1) \cdot P(A_1) + P(B \mid A_2) \cdot P(A_2) + \cdots + P(B \mid A_k) \cdot P(A_k) = \sum_{i=1}^{k} P(B \mid A_i) \cdot P(A_i),$$

where exhaustive means $A_1 \cup A_2 \cup \cdots \cup A_k = S$.
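A minimal sketch of the law of total probability as a function. The name `total_probability` and the two-urn numbers are hypothetical illustrations: a fair coin picks urn 1 (3 red balls out of 5) or urn 2 (1 red ball out of 4), and $B$ = {a red ball is drawn}.

```python
import math
from fractions import Fraction

def total_probability(priors, likelihoods):
    """P(B) = sum over i of P(B | A_i) · P(A_i).

    priors[i] = P(A_i) and likelihoods[i] = P(B | A_i), where A_1, ..., A_k
    are mutually exclusive and exhaustive, so the priors must sum to 1.
    """
    if len(priors) != len(likelihoods):
        raise ValueError("need one likelihood per event A_i")
    if not math.isclose(sum(priors), 1):
        raise ValueError("priors of a partition must sum to 1")
    return sum(p_b_a * p_a for p_a, p_b_a in zip(priors, likelihoods))

# Hypothetical two-urn example: P(A_1) = P(A_2) = 1/2.
priors = [Fraction(1, 2), Fraction(1, 2)]
likelihoods = [Fraction(3, 5), Fraction(1, 4)]   # P(red | urn 1), P(red | urn 2)
print(total_probability(priors, likelihoods))     # 17/40
```

The partition check is deliberately loose (`math.isclose`) so that the same function also accepts ordinary floats.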

Bayes’ Theorem

Let $A_1, A_2, \ldots, A_k$ be a collection of $k$ mutually exclusive and exhaustive events with prior probabilities $P(A_i)$ ($i = 1, 2, \ldots, k$). Then for any other event $B$ with $P(B) > 0$, the posterior probability of $A_j$ given that $B$ has occurred is

$$P(A_j \mid B) = \frac{P(A_j \cap B)}{P(B)} = \frac{P(B \mid A_j) \cdot P(A_j)}{\sum_{i=1}^{k} P(B \mid A_i) \cdot P(A_i)}, \qquad j = 1, 2, \ldots, k.$$
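Bayes' theorem can be sketched the same way: compute each joint probability $P(B \mid A_j) P(A_j)$, add them to get $P(B)$ (the law of total probability), and divide. The helper name `bayes_posteriors` and the two-urn numbers (a fair coin picks urn 1 with 3 red balls out of 5, or urn 2 with 1 red ball out of 4) are hypothetical.

```python
from fractions import Fraction

def bayes_posteriors(priors, likelihoods):
    """Return [P(A_j | B) for each j] for a partition A_1, ..., A_k.

    priors[j] = P(A_j), likelihoods[j] = P(B | A_j).
    """
    joints = [p_b_a * p_a for p_a, p_b_a in zip(priors, likelihoods)]  # P(A_j ∩ B)
    p_b = sum(joints)                     # law of total probability
    if p_b == 0:
        raise ValueError("P(B) must be positive")
    return [joint / p_b for joint in joints]

# Hypothetical example: which urn was used, given that a red ball was drawn?
posteriors = bayes_posteriors([Fraction(1, 2), Fraction(1, 2)],
                              [Fraction(3, 5), Fraction(1, 4)])
print(posteriors)  # [Fraction(12, 17), Fraction(5, 17)]
```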

Application of Bayes’ Theorem

Example 2.30 Incidence of a rare disease

Only 1 in 1000 adults is afflicted with a rare disease for which a diagnostic test has been developed. The test is such that when an individual actually has the disease, a positive result will occur 99% of the time, whereas an individual without the disease will show a positive test result only 2% of the time. If a randomly selected individual is tested and the result is positive, what is the probability that the individual has the disease?
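Applying Bayes' theorem with $A_1$ = {has the disease}, $A_2 = A_1'$ and $B$ = {test is positive} gives a surprisingly small answer. The short sketch below does the arithmetic with exact fractions.

```python
from fractions import Fraction

p_disease = Fraction(1, 1000)        # P(A1): prevalence
p_pos_given_d = Fraction(99, 100)    # P(B | A1): positive result when diseased
p_pos_given_not = Fraction(2, 100)   # P(B | A2): positive result when healthy

# Law of total probability: P(B) = P(B | A1) P(A1) + P(B | A2) P(A2)
p_pos = p_pos_given_d * p_disease + p_pos_given_not * (1 - p_disease)

# Bayes' theorem: P(A1 | B) = P(B | A1) P(A1) / P(B)
posterior = p_pos_given_d * p_disease / p_pos
print(posterior, round(float(posterior), 4))  # 11/233 0.0472
```

Even after a positive test, the probability of having the disease is below 5%: healthy people vastly outnumber sick ones, so the 2% of healthy people who test positive dominate the positive results.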

Independence

Example: A fair die is tossed and we want to guess the outcome. The outcomes 1, 2, 3, 4, 5, 6 are equally likely, each with probability $\frac{1}{6}$. If we are interested in the events $A = \{1, 3, 5\}$, $B = \{1, 2, 3\}$ and $C = \{3, 4, 5, 6\}$, then we can calculate the probability of each event:

$$P(A) = P(B) = \frac{3}{6} = \frac{1}{2}, \qquad P(C) = \frac{4}{6} = \frac{2}{3}.$$

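If someone tells you that, after one toss, event $C$ happened, i.e. the outcome is one of $\{3, 4, 5, 6\}$, then what is the probability of event $A$, and what is the probability of event $B$? Since $A \cap C = \{3, 5\}$ and $B \cap C = \{3\}$,

$$P(A \mid C) = \frac{P(A \cap C)}{P(C)} = \frac{1/3}{2/3} = \frac{1}{2}; \qquad P(B \mid C) = \frac{P(B \cap C)}{P(C)} = \frac{1/6}{2/3} = \frac{1}{4}.$$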

Thus $P(A \mid C) = P(A)$, while $P(B \mid C) \neq P(B)$.
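The die example can be reproduced by counting the outcomes in each event. The sketch below represents events as Python sets (an implementation choice, not from the slides) and assumes a fair die.

```python
from fractions import Fraction

S = {1, 2, 3, 4, 5, 6}                 # fair die: equally likely outcomes
A, B, C = {1, 3, 5}, {1, 2, 3}, {3, 4, 5, 6}

def prob(event):
    return Fraction(len(event), len(S))

def cond(a, b):
    """P(a | b) = P(a ∩ b) / P(b)."""
    return prob(a & b) / prob(b)

print(prob(A), cond(A, C))   # 1/2 1/2  -> knowing C does not change P(A)
print(prob(B), cond(B, C))   # 1/2 1/4  -> knowing C changes P(B)
```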

Definition

Two events $A$ and $B$ are independent if $P(A \mid B) = P(A)$, and are dependent otherwise.

Remark:

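1. $P(A \mid B) = P(A) \Rightarrow P(B \mid A) = P(B)$. This is natural, since the definition of independence should be symmetric. Indeed, when $P(A) > 0$,

$$P(B \mid A) = \frac{P(A \cap B)}{P(A)} = \frac{P(A \mid B) \cdot P(B)}{P(A)} = \frac{P(A) \cdot P(B)}{P(A)} = P(B).$$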

2. If events $A$ and $B$ are mutually exclusive (disjoint), then they cannot be independent. Intuitively, if we know that event $B$ happens, we then know that $A$ does not happen, since $A \cap B = \emptyset$. Mathematically,

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)} = \frac{P(\emptyset)}{P(B)} = 0 \neq P(A),$$

unless $P(A) = 0$, which is the trivial case.

For example, in the die-tossing example, if $A = \{1, 3, 5\}$ and $B = \{2, 4, 6\}$, then $P(A \cap B) = P(\emptyset) = 0$, so $P(A \mid B) = 0$. However, $P(A) = 0.5$.

The Multiplication Rule for Independent Events

The general multiplication rule tells us

P(A ∩ B) = P(A | B) · P(B).

However, if $A$ and $B$ are independent, then the above equation becomes $P(A \cap B) = P(A) \cdot P(B)$, since $P(A \mid B) = P(A)$.

Furthermore, we have the following

Proposition

Events A and B are independent if and only if

P(A ∩ B) = P(A) · P(B)

In words, events $A$ and $B$ are independent iff (if and only if) the probability that both occur ($A \cap B$) is the product of the two individual probabilities.
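The product form is the easiest one to check in practice. The sketch below (hypothetical helper `independent`, fair-die events from the earlier example) tests $P(A \cap B) = P(A) \cdot P(B)$ with exact fractions.

```python
from fractions import Fraction

S = {1, 2, 3, 4, 5, 6}   # fair die

def prob(event):
    return Fraction(len(event), len(S))

def independent(a, b):
    """True iff P(a ∩ b) == P(a) · P(b)."""
    return prob(a & b) == prob(a) * prob(b)

A, B, C = {1, 3, 5}, {1, 2, 3}, {3, 4, 5, 6}
print(independent(A, C))          # True:  1/3 == 1/2 · 2/3
print(independent(B, C))          # False: 1/6 != 1/2 · 2/3
print(independent(A, {2, 4, 6}))  # False: disjoint events, 0 != 1/4
```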

In real life, we often use this multiplication rule without noticing it.

The probability of getting {HH} when you toss a fair coin twice is $\frac{1}{4}$, which is obtained as $\frac{1}{2} \cdot \frac{1}{2}$;

The probability of getting {6,5,4,3,2,1} when you toss a fair die six times is $\left(\frac{1}{6}\right)^6$, which is simply obtained as $\frac{1}{6} \cdot \frac{1}{6} \cdot \frac{1}{6} \cdot \frac{1}{6} \cdot \frac{1}{6} \cdot \frac{1}{6}$;

The probability of getting {♠♠♠} when you draw three cards with replacement from a well-shuffled deck is $\frac{1}{64}$, which is simply obtained as $\frac{1}{4} \cdot \frac{1}{4} \cdot \frac{1}{4}$.
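Each of these products can be confirmed by brute-force enumeration of the equally likely outcomes, which is feasible here because the sample spaces are small ($2^2$, $6^6$ and $52^3$ outcomes). The sketch assumes a fair coin, a fair die and a standard 52-card deck in which each drawn card is replaced and the deck reshuffled; the card encoding is an illustrative choice.

```python
from fractions import Fraction
from itertools import product

def prob(outcomes, event):
    """P(event) when every outcome in `outcomes` is equally likely."""
    outcomes = list(outcomes)
    return Fraction(sum(1 for o in outcomes if event(o)), len(outcomes))

# Fair coin tossed twice: P(HH)
p_hh = prob(product("HT", repeat=2), lambda o: o == ("H", "H"))

# Fair die tossed six times: P(6, 5, 4, 3, 2, 1 in that order)
p_die = prob(product(range(1, 7), repeat=6), lambda o: o == (6, 5, 4, 3, 2, 1))

# Three draws with replacement: each card is (rank, suit); suit "S" means spade.
deck = list(product(range(13), "SHDC"))
p_spades = prob(product(deck, repeat=3), lambda o: all(card[1] == "S" for card in o))

print(p_hh, p_die, p_spades)  # 1/4 1/46656 1/64
assert p_hh == Fraction(1, 2) ** 2
assert p_die == Fraction(1, 6) ** 6
assert p_spades == Fraction(1, 4) ** 3
```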

However, if you draw the cards without replacement, then the multiplication rule for independent events fails, since the event {the first card is ♠} is no longer independent of the event {the second card is ♠}. In fact,

$$P(\text{the second card is } \spadesuit \mid \text{the first card is } \spadesuit) = \frac{12}{51},$$

whereas $P(\text{the second card is } \spadesuit) = \frac{13}{52} = \frac{1}{4}$.
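Without replacement, the two draws form an ordered pair of distinct cards ($52 \cdot 51$ equally likely outcomes). The sketch below counts those outcomes to recover $12/51$ and compares it with the unconditional probability that the second card is a spade; the deck encoding is an assumption for illustration.

```python
from fractions import Fraction
from itertools import permutations, product

deck = list(product(range(13), "SHDC"))   # (rank, suit); "S" = spade
draws = list(permutations(deck, 2))       # ordered pairs of distinct cards

first_spade = [d for d in draws if d[0][1] == "S"]
both_spade = [d for d in first_spade if d[1][1] == "S"]
second_spade = [d for d in draws if d[1][1] == "S"]

p_cond = Fraction(len(both_spade), len(first_spade))   # P(2nd spade | 1st spade)
p_second = Fraction(len(second_spade), len(draws))     # P(2nd spade)
print(p_cond, p_second)  # 4/17 1/4  (Fraction reduces 12/51 to 4/17)
```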

Example: Exercise 89

Suppose identical tags are placed on both the left ear and the right ear of a fox. The fox is then let loose for a period of time. Consider the two events $C_1$ = {left ear tag is lost} and $C_2$ = {right ear tag is lost}. Let $\pi = P(C_1) = P(C_2)$, and assume $C_1$ and $C_2$ are independent events. Derive an expression (involving $\pi$) for the probability that exactly one tag is lost given that at most one is lost.
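One way to derive the expression: by independence, $P(\text{exactly one lost}) = P(C_1 \cap C_2') + P(C_1' \cap C_2) = 2\pi(1-\pi)$ and $P(\text{at most one lost}) = 1 - P(C_1 \cap C_2) = 1 - \pi^2$. Since {exactly one lost} is contained in {at most one lost}, the conditional probability is $\frac{2\pi(1-\pi)}{1-\pi^2} = \frac{2\pi}{1+\pi}$ for $\pi < 1$. The sketch below checks this by enumerating the four outcomes for a few hypothetical values of $\pi$.

```python
from fractions import Fraction
from itertools import product

def p_exactly_one_given_at_most_one(pi):
    """Enumerate (left lost?, right lost?) outcomes, assuming C1 and C2 independent."""
    exactly_one = at_most_one = Fraction(0)
    for left, right in product([True, False], repeat=2):
        p = (pi if left else 1 - pi) * (pi if right else 1 - pi)  # independence
        lost = left + right                                       # number of tags lost
        if lost == 1:
            exactly_one += p
        if lost <= 1:
            at_most_one += p
    # {exactly one} is a subset of {at most one}, so the intersection is {exactly one}.
    return exactly_one / at_most_one

for pi in (Fraction(1, 5), Fraction(1, 2), Fraction(9, 10)):   # hypothetical values
    assert p_exactly_one_given_at_most_one(pi) == 2 * pi / (1 + pi)
print(p_exactly_one_given_at_most_one(Fraction(1, 5)))  # 1/3
```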

Remark:

1. If events $A$ and $B$ are independent, then so are events $A'$ and $B$, events $A$ and $B'$, as well as events $A'$ and $B'$. For example, for $A'$ and $B$ (with $P(B) > 0$):

$$P(A' \mid B) = \frac{P(A' \cap B)}{P(B)} = \frac{P(B) - P(A \cap B)}{P(B)} = 1 - \frac{P(A \cap B)}{P(B)} = 1 - P(A \mid B) = 1 - P(A) = P(A').$$

2. We can equivalently use the condition $P(A \cap B) = P(A) \cdot P(B)$ as the definition of independence of two events $A$ and $B$.
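Remark 1 can be checked on the die example: $A = \{1, 3, 5\}$ and $C = \{3, 4, 5, 6\}$ were found to be independent, so all four pairings with complements should satisfy the product condition. The set-based encoding is an illustrative assumption.

```python
from fractions import Fraction

S = frozenset(range(1, 7))   # fair die

def prob(event):
    return Fraction(len(event), len(S))

def independent(a, b):
    return prob(a & b) == prob(a) * prob(b)

A, C = frozenset({1, 3, 5}), frozenset({3, 4, 5, 6})
A_c, C_c = S - A, S - C     # complements A' = {2, 4, 6}, C' = {1, 2}

for x, y in [(A, C), (A_c, C), (A, C_c), (A_c, C_c)]:
    print(sorted(x), sorted(y), independent(x, y))  # True for every pair
```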

Independence of More Than Two Events

Definition

Events $A_1, A_2, \ldots, A_n$ are mutually independent if for every $k$ ($k = 2, 3, \ldots, n$) and every subset of $k$ distinct indices $i_1, i_2, \ldots, i_k$,

$$P(A_{i_1} \cap A_{i_2} \cap \cdots \cap A_{i_k}) = P(A_{i_1}) \cdot P(A_{i_2}) \cdots P(A_{i_k}).$$

In words, n events are mutually independent if the probability of

the intersection of any subset of the n events is equal to the

product of the individual probabilities.
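The definition translates directly into a check over every subset of at least two events. The sketch below (hypothetical helper `mutually_independent`, equally likely outcomes assumed) applies it to three tosses of a fair coin with $A_i$ = {toss $i$ is heads}.

```python
from fractions import Fraction
from itertools import combinations, product
from math import prod

# Three fair coin tosses: 8 equally likely outcomes.
S = list(product("HT", repeat=3))

def prob(event):
    return Fraction(len(event), len(S))

def mutually_independent(events):
    """Check P(intersection) == product of P's for every subset of size k >= 2."""
    for k in range(2, len(events) + 1):
        for subset in combinations(events, k):
            inter = frozenset.intersection(*subset)
            if prob(inter) != prod(prob(e) for e in subset):
                return False
    return True

# A_i = {toss i is heads}, i = 1, 2, 3
events = [frozenset(s for s in S if s[i] == "H") for i in range(3)]
print(mutually_independent(events))  # True
```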

A very interesting example: Exercise 113

A box contains the following four slips of paper, each having

exactly the same dimensions: (1) win prize 1; (2) win prize 2; (3)

win prize 3; and (4) win prize 1, 2 and 3. One slip will be randomly

selected. Let A1 = {win prize 1}, A2 = {win prize 2}, and A3 =

{win prize 3}. Are these three events mutually independent?
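Counting slips answers the question. With the four slips equally likely, each $P(A_j) = 2/4$ and each pairwise intersection contains only slip (4), so the events are pairwise independent; the triple intersection, however, fails the product condition. The sketch below encodes each slip as the set of prizes it wins (an illustrative encoding).

```python
from fractions import Fraction
from itertools import combinations
from math import prod

# Slips (1)-(4), each drawn with probability 1/4; value = set of prizes won.
slips = {1: {1}, 2: {2}, 3: {3}, 4: {1, 2, 3}}

# A[j] = set of slips that win prize j.
A = {j: frozenset(s for s, prizes in slips.items() if j in prizes) for j in (1, 2, 3)}

def prob(event):
    return Fraction(len(event), len(slips))

for i, j in combinations((1, 2, 3), 2):
    print(i, j, prob(A[i] & A[j]) == prob(A[i]) * prob(A[j]))   # True: 1/4 == 1/2 · 1/2

triple = A[1] & A[2] & A[3]
print(prob(triple), prod(prob(A[j]) for j in (1, 2, 3)))       # 1/4 1/8
```

So $A_1$, $A_2$, $A_3$ are pairwise independent but not mutually independent, which is why the definition requires the product condition for every subset, not only for pairs.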

Example:

Consider a system of seven identical components connected as follows. For the system to work properly, the current must be able to go through the system from the left end to the right end. If components work independently of one another and P(component works) = 0.9, then what is the probability that the system works?

Let $A$ = {the system works} and $A_i$ = {component $i$ works}. Then

$$A = (A_1 \cup A_2) \cap \big((A_3 \cap A_4) \cup (A_5 \cap A_6)\big) \cap A_7.$$
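Because the three blocks $A_1 \cup A_2$, $(A_3 \cap A_4) \cup (A_5 \cap A_6)$ and $A_7$ involve different components, they are independent, so $P(A) = [1 - 0.1^2] \cdot [1 - (1 - 0.9^2)^2] \cdot 0.9 = 0.99 \cdot 0.9639 \cdot 0.9 = 0.8588349$. The sketch below checks this closed form by enumerating all $2^7$ component states, assuming the connection structure given by the expression for $A$.

```python
from fractions import Fraction
from itertools import product

p = Fraction(9, 10)   # P(component works), same for all seven components

def system_works(w):
    """w[i] is True if component i+1 works; encodes A = (A1 ∪ A2) ∩ ((A3 ∩ A4) ∪ (A5 ∩ A6)) ∩ A7."""
    return (w[0] or w[1]) and ((w[2] and w[3]) or (w[4] and w[5])) and w[6]

# Brute force over all 2**7 states; independence lets us multiply per-component probabilities.
p_enum = Fraction(0)
for w in product([True, False], repeat=7):
    p_state = Fraction(1)
    for works in w:
        p_state *= p if works else 1 - p
    if system_works(w):
        p_enum += p_state

# Closed form using independence of the three blocks.
p_formula = (1 - (1 - p) ** 2) * (1 - (1 - p ** 2) ** 2) * p

print(float(p_enum))  # 0.8588349
assert p_enum == p_formula
```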