Pitfalls of Participatory Programs: Evidence from a

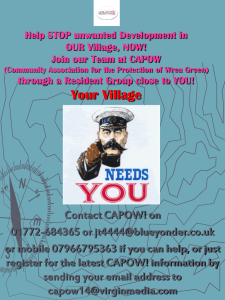
