NBER WORKING PAPER SERIES ROLE FOR POLICY EVALUATION

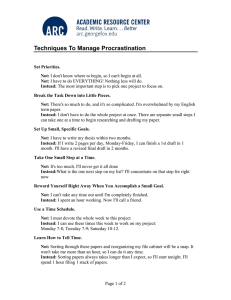
