Conditional Random Fields Dietrich Klakow


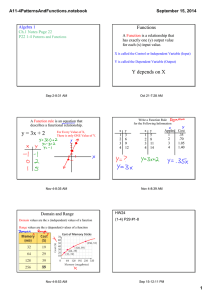

Warning

• I might have to leave during the lecture for a meeting

Overview

• Sequence Labeling
• Bayesian Networks
• Markov Random Fields
• Conditional Random Fields
• Software example

Background Reading

Hanna M. Wallach. Conditional Random Fields: An Introduction. Technical Report MS-CIS-04-21, Department of Computer and Information Science, University of Pennsylvania, 2004. http://www.inference.phy.cam.ac.uk/hmw26/papers/crf_intro.pdf

Sequence Labeling Tasks

Sequence: a sentence

Pierre Vinken , 61 years old , will join the board as a nonexecutive director Nov. 29 .

POS Labels

Pierre Vinken , 61 years old , will join the board as a nonexecutive director Nov. 29 .
NNP NNP , CD NNS JJ , MD VB DT NN IN DT JJ NN NNP CD .

Chunking

Task: find phrase boundaries

Pierre Vinken , 61 years old , will join the board as a nonexecutive director Nov. 29 .
B-NP I-NP O B-NP I-NP B-ADJP O B-VP I-VP B-NP I-NP B-PP B-NP I-NP I-NP B-NP I-NP O

Named Entity Tagging

Pierre Vinken , 61 years old , will join the board as a nonexecutive director Nov. 29 .
B-PERSON I-PERSON O B-DATE:AGE I-DATE:AGE I-DATE:AGE O O O O B-ORG_DESC:OTHER O O O B-PER_DESC B-DATE:DATE I-DATE:DATE O

Supertagging

Pierre Vinken , 61 years old , will join the board as a nonexecutive director Nov. 29 .
N/N N , N/N N (S[adj]\NP)\NP , (S[dcl]\NP)/(S[b]\NP) ((S[b]\NP)/PP)/NP NP[nb]/N N PP/NP NP[nb]/N N/N N ((S\NP)\(S\NP))/N[num] N[num] .

Hidden Markov Model

HMM: just an application of a Bayes classifier

$$(\hat{\pi}_1, \hat{\pi}_2, \ldots, \hat{\pi}_N) = \arg\max_{\pi_1, \pi_2, \ldots, \pi_N} P(x_1, x_2, \ldots, x_N, \pi_1, \pi_2, \ldots, \pi_N)$$

$x_1, x_2, \ldots, x_N$: observation/input sequence
$\pi_1, \pi_2, \ldots, \pi_N$: label sequence

Decomposition of Probabilities

$$P(x_1, x_2, \ldots, x_N, \pi_1, \pi_2, \ldots, \pi_N) = \prod_{i=1}^{N} P(x_i \mid \pi_i) \, P(\pi_i \mid \pi_{i-1})$$

$P(\pi_i \mid \pi_{i-1})$: transition probability
$P(x_i \mid \pi_i)$: emission probability

Graphical view of the HMM

[Figure: observation sequence X1, X2, X3, …, XN drawn above the label sequence π1, π2, π3, …, πN]
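The HMM decomposition into emission and transition probabilities can be tried out on a toy model. The sketch below is only an illustration: the two labels, the three words, every probability value and the `<s>` start label (standing in for π0) are made-up assumptions, and the arg max is found by exhaustive enumeration rather than by an efficient algorithm.

```python
from itertools import product

# Hypothetical toy model: two labels, three words, made-up probabilities.
LABELS = ["N", "V"]
START = "<s>"  # assumed start label playing the role of pi_0

# transition[(previous label, label)] = P(label | previous label)
transition = {
    ("<s>", "N"): 0.7, ("<s>", "V"): 0.3,
    ("N", "N"): 0.4, ("N", "V"): 0.6,
    ("V", "N"): 0.8, ("V", "V"): 0.2,
}
# emission[(label, word)] = P(word | label)
emission = {
    ("N", "fish"): 0.5, ("N", "swim"): 0.1, ("N", "fast"): 0.4,
    ("V", "fish"): 0.3, ("V", "swim"): 0.6, ("V", "fast"): 0.1,
}

def joint_probability(words, labels):
    """P(x_1..x_N, pi_1..pi_N) = prod_i P(x_i | pi_i) * P(pi_i | pi_{i-1})."""
    prob = 1.0
    previous = START
    for word, label in zip(words, labels):
        prob *= emission[(label, word)] * transition[(previous, label)]
        previous = label
    return prob

def brute_force_decode(words):
    """Arg max over all label sequences by trying every one of them."""
    return max(product(LABELS, repeat=len(words)),
               key=lambda labels: joint_probability(words, labels))
```

Calling `brute_force_decode(["fish", "swim"])` compares all four label sequences; the number of candidates grows exponentially with the sentence length, which is why practical decoders use dynamic programming instead.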
Criticism

• HMMs model only limited dependencies
→ come up with more flexible models
→ come up with graphical description

Bayesian Networks

Example for Bayesian Network

From Russell and Norvig (1995), Artificial Intelligence: A Modern Approach

Corresponding joint distribution

$$P(C, S, R, W) = P(W \mid S, R) \, P(S \mid C) \, P(R \mid C) \, P(C)$$

Naïve Bayes

Observations $x_1, \ldots, x_D$ are assumed to be conditionally independent given $z$

$$P(x_1, \ldots, x_D \mid z) = \prod_{i=1}^{D} P(x_i \mid z)$$

Markov Random Fields

• Undirected graphical model
• New term:
  • clique in an undirected graph:
    • set of nodes such that every node is connected to every other node
  • maximal clique: there is no node that can be added without destroying the clique property

Example

cliques: green and blue
maximal clique: blue

Factorization

$x$: all nodes $x_1 \ldots x_N$
$x_C$: nodes in clique $C$
$C_M$: set of all maximal cliques
$\Psi_C(x_C)$: potential function ($\Psi_C(x_C) \geq 0$)

Joint distribution described by graph

$$p(x) = \frac{1}{Z} \prod_{C \in C_M} \Psi_C(x_C)$$

Normalization

$$Z = \sum_{x} \prod_{C \in C_M} \Psi_C(x_C)$$

$Z$ is sometimes called the partition function

Example

[Figure: undirected graph over the nodes x1, x2, x3, x4, x5]

What are the maximal cliques?
Write down the joint probability described by this graph → white board

Energy Function

Define

$$\Psi_C(x_C) = e^{-E(x_C)}$$

Insert into joint distribution

$$p(x) = \frac{1}{Z} \, e^{-\sum_{C \in C_M} E(x_C)}$$

Conditional Random Fields

Definition

Markov random field where each random variable $y_i$ is conditioned on the complete input sequence $x_1, \ldots, x_n$

[Figure: chain of label nodes y1, y2, y3, …, yn-1, yn, with y = (y1…yn), each connected to the complete input x = (x1…xn)]
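As a worked step, the Markov random field factorization can be specialized to a chain of labels. This is a sketch under the assumption of a linear chain: the maximal cliques are then the neighbouring pairs $(y_{i-1}, y_i)$, each taken together with the input $x$. The per-position symbols $\Psi_i$ and $E_i$ are notation introduced here for illustration, not taken from the slides.

```latex
\begin{align*}
p(y \mid x)
  &= \frac{1}{Z(x)} \prod_{i} \Psi_i(y_{i-1}, y_i, x)
   = \frac{1}{Z(x)} \prod_{i} e^{-E_i(y_{i-1}, y_i, x)} \\
  &= \frac{1}{Z(x)} \, e^{-\sum_{i} E_i(y_{i-1}, y_i, x)},
\qquad
Z(x) = \sum_{y} \prod_{i} \Psi_i(y_{i-1}, y_i, x)
\end{align*}
```

The normalization now depends on $x$ because the sum runs only over label sequences $y$, while the input stays fixed.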
Distribution

For the input sequence $x = (x_1 \ldots x_n)$ and the label sequence $y = (y_1 \ldots y_n)$:

$$p(y \mid x) = \frac{1}{Z(x)} \, e^{-\sum_{i=1}^{n} \sum_{j=1}^{N} \lambda_j f_j(y_{i-1}, y_i, x, i)}$$

$\lambda_j$: parameters to be trained
$f_j(y_{i-1}, y_i, x, i)$: feature function

Example feature functions

Modeling transitions

$$f_1(y_{i-1}, y_i, x, i) = \begin{cases} 1 & \text{if } y_{i-1} = \text{IN and } y_i = \text{NNP} \\ 0 & \text{else} \end{cases}$$

Modeling emissions

$$f_2(y_{i-1}, y_i, x, i) = \begin{cases} 1 & \text{if } y_i = \text{NNP and } x_i = \text{September} \\ 0 & \text{else} \end{cases}$$

Training

• Like in maximum entropy models
  • Generalized iterative scaling
• Convergence: the log-likelihood of $p(y \mid x)$ is a concave function of the parameters $\lambda_j$ → no local maxima other than the global maximum
• Convergence is slow
• Improved algorithms exist

Decoding: Auxiliary Matrix

Define additional start symbol $y_0 = \text{START}$ and stop symbol $y_{n+1} = \text{STOP}$

Define matrix $M^i(x)$ such that

$$[M^i(x)]_{y_{i-1} y_i} = M^i_{y_{i-1} y_i}(x) = e^{-\sum_{j=1}^{N} \lambda_j f_j(y_{i-1}, y_i, x, i)}$$

Reformulate Probability

With that definition we have

$$p(y \mid x) = \frac{1}{Z(x)} \prod_{i=1}^{n+1} M^i_{y_{i-1} y_i}(x)$$

with

$$Z(x) = \sum_{y_1} \sum_{y_2} \sum_{y_3} \ldots \sum_{y_n} M^1_{y_0 y_1}(x) \, M^2_{y_1 y_2}(x) \ldots M^{n+1}_{y_n y_{n+1}}(x)$$

Use Matrix Properties

Use matrix product

$$[M^1(x) M^2(x)]_{y_0 y_2} = \sum_{y_1} M^1_{y_0 y_1}(x) \, M^2_{y_1 y_2}(x)$$

with

$$Z(x) = [M^1(x) M^2(x) \ldots M^{n+1}(x)]_{y_0 = \text{START},\, y_{n+1} = \text{STOP}}$$

Viterbi Decoding

• Matrix M replaces the product of transition and emission probability
• Decoding can be done in Viterbi style
• Effort:
  • linear in length of sequence
  • quadratic in the number of labels

Software

CRF++
• See http://crfpp.sourceforge.net/

Summary

• Sequence labeling problems
• CRFs are
  • flexible
  • expensive to train
  • fast to decode
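The matrix formulation of Z(x) and the Viterbi-style decoding can be sketched in plain Python. Everything specific here is an assumption for illustration: the three-tag label set, the weights in `LAMBDAS` and all helper names are made up, and only the two feature functions follow the slide examples. Because of the minus sign in the exponent, a negative weight makes a firing feature more likely. This sketch is independent of CRF++ and does not use its API.

```python
import math
from itertools import product

LABELS = ["IN", "NNP", "CD"]   # hypothetical small tag set
START, STOP = "START", "STOP"

def f1(y_prev, y, x, i):
    """Transition feature from the slides: IN followed by NNP."""
    return 1.0 if y_prev == "IN" and y == "NNP" else 0.0

def f2(y_prev, y, x, i):
    """Emission feature from the slides: NNP on the word 'September'."""
    return 1.0 if y == "NNP" and i <= len(x) and x[i - 1] == "September" else 0.0

FEATURES = [f1, f2]
LAMBDAS = [-1.5, -2.0]  # made-up weights, not trained

def entry(y_prev, y, x, i):
    """M^i_{y_prev y}(x) = exp(-sum_j lambda_j f_j(y_prev, y, x, i))."""
    return math.exp(-sum(l * f(y_prev, y, x, i) for l, f in zip(LAMBDAS, FEATURES)))

def candidates(i, n):
    """Labels allowed at position i: START at 0, STOP at n+1, tags in between."""
    if i == 0:
        return [START]
    return [STOP] if i == n + 1 else LABELS

def partition_function(x):
    """Z(x) = [M^1 M^2 ... M^(n+1)]_{START, STOP}, multiplied left to right."""
    n = len(x)
    alpha = {START: 1.0}  # row vector selecting y_0 = START
    for i in range(1, n + 2):
        alpha = {y: sum(a * entry(y_prev, y, x, i) for y_prev, a in alpha.items())
                 for y in candidates(i, n)}
    return alpha[STOP]

def path_score(x, labels):
    """Unnormalized score prod_{i=1}^{n+1} M^i_{y_{i-1} y_i}(x)."""
    path = [START, *labels, STOP]
    return math.prod(entry(path[i - 1], path[i], x, i) for i in range(1, len(path)))

def brute_force_partition(x):
    """Z(x) as the explicit sum over all label sequences (for checking only)."""
    return sum(path_score(x, labels) for labels in product(LABELS, repeat=len(x)))

def viterbi(x):
    """Best label sequence: the same recursion as Z(x) with max instead of sum.

    Whole paths are stored instead of back-pointers to keep the sketch short.
    """
    n = len(x)
    best = {START: (1.0, [])}  # label -> (best score, best path ending there)
    for i in range(1, n + 2):
        new_best = {}
        for y in candidates(i, n):
            score, path = max(
                ((s * entry(y_prev, y, x, i), p) for y_prev, (s, p) in best.items()),
                key=lambda t: t[0])
            new_best[y] = (score, path + [y])
        best = new_best
    score, path = best[STOP]
    return path[:-1], score  # drop the STOP symbol
```

For a sentence such as `["in", "September", "1991"]`, `partition_function` and `brute_force_partition` should agree, and dividing the score returned by `viterbi` by Z(x) gives p(y|x) for the best sequence. Each position touches every pair of labels once, which matches the effort stated on the slide: linear in the sequence length and quadratic in the number of labels.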