Trouble in the Tails? Earnings Non-Response and Response Bias across...

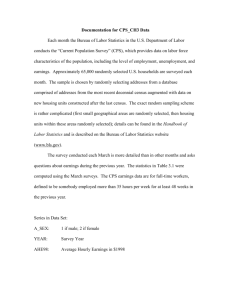
