Slide set 25 Stat 330 (Spring 2015) Last update: March 22, 2015


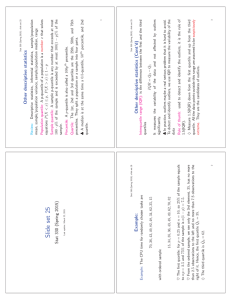

Other descriptive statistics

Review: descriptive statistics, inferential statistics, sample/population mean, sample/population variance, sample/population median, range.

Population quantile: A p-quantile of a population is a number x that satisfies the inequalities $P(X < x) \le p$ and $P(X > x) \le 1 - p$.

Sample quantile: A sample p-quantile is any number that exceeds at most 100p% of the sample and is exceeded by at most 100(1 − p)% of the sample.

Percentile: A p-quantile is also called a 100p-th percentile.

Quartile: The 1st, 2nd, and 3rd quartiles are the 25th, 50th, and 75th percentiles. They split a population or a sample into four parts.

♣ A median is at the same time a 0.5-quantile, a 50th percentile, and a 2nd quartile.

Example: The CPU times for 10 randomly chosen tasks are

70, 36, 43, 49, 82, 48, 34, 62, 35, 15

with ordered sample

15, 34, 35, 36, 43, 48, 49, 62, 70, 82

♥ The first quartile: for p = 0.25 and n = 10, 25% of the sample is np = 2.5 observations and 75% of the sample is n(1 − p) = 7.5 observations.

♥ From the ordered sample, only the 3rd element, 35, has no more than 2.5 observations to its left and no more than 7.5 observations to its right. Hence the first quartile is $\hat{Q}_1 = 35$.

♦ The third quartile is $\hat{Q}_3 = 62$: with p = 0.75, only the 8th element has no more than 7.5 observations to its left and no more than 2.5 observations to its right.

Other descriptive statistics (Cont’d)

Interquartile range (IQR): the difference between the third and the first quartiles,

$$\mathrm{IQR} = Q_3 - Q_1.$$

It measures the variability of the data and is not significantly affected by outliers.

♣ In practice, outliers can be a serious problem that is hard to avoid. To detect and identify them, we use the IQR as the measure of the variability of the data.

Rule of thumb: the rule of 1.5(IQR) is used to detect and identify outliers.

♦ Measure 1.5(IQR) down from the first quartile and up from the third quartile.
All data points outside this range are considered suspiciously extreme. They are candidate outliers.

Previous example: The ordered sample is

15, 34, 35, 36, 43, 48, 49, 62, 70, 82

with $Q_1 = 35$ and $Q_3 = 62$. Then $\mathrm{IQR} = Q_3 - Q_1 = 62 - 35 = 27$, and measuring 1.5 interquartile ranges from each quartile gives

$$Q_1 - 1.5(\mathrm{IQR}) = 35 - 1.5 \cdot 27 = -5.5$$

$$Q_3 + 1.5(\mathrm{IQR}) = 62 + 1.5 \cdot 27 = 102.5$$

♣ None of the data in the sample lies outside the interval [−5.5, 102.5]. No outliers are suspected.

Graphical Statistics

♠ To illustrate the graphical tools, consider a data set consisting of measurements of the girth, height, and volume of timber in 31 felled black cherry trees.

♠ Note that girth is the diameter of the tree (in inches) measured at 4 ft 6 in above the ground.

♠ We can collect the data and draw plots to illustrate how the data are distributed.

Histogram: A histogram shows the shape of the pmf or pdf of the data, helps check for homogeneity, and suggests possible outliers.

♥ To construct a histogram, we split the range of the data into equal intervals ("bins") and count how many (or what proportion of) observations fall into each bin.

Stem-and-leaf: A stem-and-leaf plot is similar to a histogram. However, it also shows how the data are distributed within each column.

♥ To construct a stem-and-leaf plot, we split each observation into a stem and a leaf.

♥ The first one or several digits form a stem, and the next digit forms a leaf. Other digits are dropped. For example, 239 ⇔ 23 | 9 and 23 ⇔ 2 | 3.

Example: cherry tree (again). Stem-and-leaf plot for height (leaf unit = 1; 6 | 34 represents 63, 64):

    5 |
    6 | 34569
    7 | 01224455566789
    8 | 000001123567

Boxplot: To construct a boxplot, we draw a box between the first and the third quartiles, a line inside the box for the median, and extend whiskers to the smallest and the largest observations.
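The quartiles that define the box of a boxplot, and the 1.5(IQR) fences used to flag outliers, can be checked numerically on the CPU-time sample. The following is a minimal sketch, not a standard library routine: the helper names `sample_quantiles` and `iqr_fences` are hypothetical, and the quantile search applies the slides' definition directly and looks only among the observed values (library functions usually interpolate instead and may give slightly different quartiles).

```python
def sample_quantiles(data, p):
    """Observed values q that satisfy the sample p-quantile definition:
    at most 100p% of the sample is below q and at most 100(1-p)% is above q.
    Assumption: only observed values are tested as candidates."""
    n = len(data)
    candidates = []
    for q in sorted(set(data)):
        below = sum(1 for x in data if x < q)
        above = sum(1 for x in data if x > q)
        if below <= n * p and above <= n * (1 - p):
            candidates.append(q)
    return candidates


def iqr_fences(q1, q3):
    """Lower and upper fences of the 1.5(IQR) rule."""
    iqr = q3 - q1
    return q1 - 1.5 * iqr, q3 + 1.5 * iqr


cpu = [70, 36, 43, 49, 82, 48, 34, 62, 35, 15]

q1 = sample_quantiles(cpu, 0.25)[0]   # first quartile
q3 = sample_quantiles(cpu, 0.75)[0]   # third quartile
low, high = iqr_fences(q1, q3)

# Points outside [low, high] are the candidate outliers.
suspects = [x for x in cpu if x < low or x > high]

print("Q1 =", q1, "Q3 =", q3)
print("fences =", (low, high))
print("candidate outliers =", suspects)
print("min =", min(cpu), "max =", max(cpu))
```

For p = 0.5 the search returns two observed values, 43 and 48, because with an even sample size any number between the two middle observations satisfies the definition of a median.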
♠ The boxplot representation is also called the five-point summary ($x_i$ is the sample value obtained for random variable $X_i$):

$$\text{five points} = \left(\min x_i,\ \hat{Q}_1,\ \hat{M},\ \hat{Q}_3,\ \max x_i\right)$$

Example: cherry tree (again). [Figure: boxplot of girth.]

Scatter plot and time series plot: Scatter plots are used to see and understand a relationship between two variables. In particular, if one of the variables is time, the plot is referred to as a time plot.

♣ A scatter plot consists of n points on an (x, y)-plane, with the x- and y-coordinates representing the two recorded variables.

Example: cherry tree (again). [Figure: scatter plot of girth vs. height; the x-coordinate is girth, the y-coordinate is height.]

Parameter Estimation

♠ Why do we need estimators, and what is an estimator?

Some motivation: Suppose we are interested in the average annual income of people in the U.S. We use a parameter $\theta$ to denote it.

♠ Ideally, if we knew all the data in the population, say $x_1, \dots, x_N$ (the observed values of $X_1, \dots, X_N$), then

$$\theta = \frac{1}{N}\sum_{i=1}^{N} x_i.$$

However, we are not able to record the annual income of every individual!

♠ What we do instead is select a good and appropriate sample, a subset of the whole population, say $X_1, \dots, X_n$ with sample size $n < N$.

♣ Their values are $x_1, \dots, x_n$, and we can compute the sample mean $\bar{x}$ from those values. We hope that $\bar{x}$ is a good representation of $\theta$, i.e., that it estimates $\theta$.

♥ $\bar{X}$ is then an estimator for $\theta$, and $\bar{x}$ is a value of this estimator.

Estimators

Estimator: Let $X_1, \dots, X_n$ be i.i.d. random variables with distribution $F_\theta$ with (unknown) parameter $\theta$. A statistic $\hat{\theta} = \hat{\theta}(X_1, \dots, X_n)$ used to estimate the value of $\theta$ is called an estimator of $\theta$.

Estimate: For each realization $x_1, \dots, x_n$, the number $\hat{\theta}(x_1, \dots, x_n)$ is called an estimate of $\theta$.

A very natural question: is that estimate good or bad?

♠ We need some terminology to compare our estimators.
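♣ Unbiasedness: An estimator $\hat{\theta}$ for $\theta$ is unbiased if its expected value is the true parameter, i.e.,

$$E(\hat{\theta}) = \theta.$$

♣ Efficiency: For two estimators of $\theta$, say $\hat{\theta}_1$ and $\hat{\theta}_2$, $\hat{\theta}_1$ is considered more efficient than $\hat{\theta}_2$ if

$$E\left[(\hat{\theta}_1 - \theta)^2\right] < E\left[(\hat{\theta}_2 - \theta)^2\right].$$

♠ $E\left[(\hat{\theta} - \theta)^2\right]$ is called the MSE (mean squared error).

♣ Consistency: With a large sample size n, we want the estimator $\hat{\theta}$ to be close to the true parameter in the sense that

$$\lim_{n \to \infty} P\left(|\hat{\theta} - \theta| > \varepsilon\right) = 0 \quad \text{for any } \varepsilon > 0.$$

♥ Example: The sample mean $\bar{X}$ is unbiased for the population mean $\mu$, and the sample variance $S^2$ is unbiased for the population variance $\sigma^2$.

♥ Reason: We have

$$E(\bar{X}) = E\left(n^{-1}\sum_{i=1}^{n} X_i\right) = n^{-1}\sum_{i=1}^{n} E(X_i) = n^{-1}\sum_{i=1}^{n} \mu = n^{-1} n \mu = \mu$$

and

$$S^2 = (n-1)^{-1}\sum_{i=1}^{n} (X_i - \bar{X})^2 = (n-1)^{-1}\left(\sum_{i=1}^{n} X_i^2 - n\bar{X}^2\right),$$

so

$$S^2 = \frac{1}{n-1}\left[\sum_{i=1}^{n} (X_i - \mu)^2 - n(\bar{X} - \mu)^2\right],$$

where $\mu = E(X_i)$. Since $E\left[(X_i - \mu)^2\right] = \sigma^2$ and $E\left[(\bar{X} - \mu)^2\right] = \mathrm{Var}(\bar{X}) = \sigma^2/n$, we get

$$E(S^2) = \frac{1}{n-1}\left(n\sigma^2 - n\,\mathrm{Var}(\bar{X})\right) = \frac{1}{n-1}\left(n\sigma^2 - \frac{n}{n}\sigma^2\right) = \sigma^2.$$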
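The unbiasedness of $S^2$ can also be illustrated by simulation. The sketch below is only an illustration under stated assumptions, not part of the original slides: it assumes normally distributed data with mean 0 and $\sigma = 2$ (so $\sigma^2 = 4$), samples of size n = 5, and a hypothetical helper name `average_variance_estimates`. It averages the sample variance (divisor n − 1) and, for contrast, the version with divisor n over many simulated samples.

```python
import random
import statistics


def average_variance_estimates(n=5, sigma=2.0, reps=20000, seed=0):
    """Average two variance estimators over many simulated samples.

    Assumption: data are i.i.d. Normal(0, sigma^2); n, sigma, reps and
    seed are illustrative choices.
    """
    rng = random.Random(seed)
    total_unbiased = 0.0
    total_biased = 0.0
    for _ in range(reps):
        sample = [rng.gauss(0.0, sigma) for _ in range(n)]
        total_unbiased += statistics.variance(sample)   # divides by n - 1
        total_biased += statistics.pvariance(sample)    # divides by n
    return total_unbiased / reps, total_biased / reps


mean_s2, mean_biased = average_variance_estimates()
print("average of S^2 (divisor n - 1):", mean_s2)
print("average with divisor n:        ", mean_biased)
```

The first average should land close to $\sigma^2 = 4$, while the second should land close to $\frac{n-1}{n}\sigma^2 = 3.2$, which is why the divisor n − 1 is used in $S^2$.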