The interpretation of the linear regression model

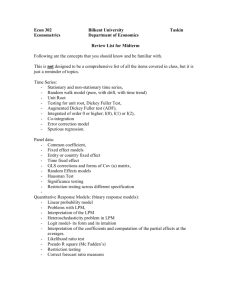

advertisement

The interpretation of the linear regression model

Estimation of the regression model

Properties of the OLS estimator

Inference in the regression model

The linear regression model

Ragnar Nymoen

Department of Economics, UiO

9 January 2009

ECON 4610: Lecture 1


Overview

We review the linear regression model: its interpretation, estimation by OLS, and the properties of OLS estimators.

The main reference is Greene, Ch. 1-5.5. See the teaching plan for overlapping references in Biørn and Kennedy.


Model and assumptions

Most of the statistical properties of the linear regression model can usefully first be illustrated for the regression model with only one explanatory variable. So we start with the simple regression model, also known as the bivariate regression model.

We use the notation in Greene’s book.

As you remember from earlier courses, it is important to distinguish between the population and the sample. The regression model we define refers to the population. Greene writes it as

$$y_i = \beta_1 + \beta_2 x_i + \varepsilon_i, \quad i = 1, 2, \ldots, n,$$

where $y_i$ and $\varepsilon_i$ are random (stochastic) variables. $x_i$ admits two interpretations: it can be either a deterministic variable or a stochastic variable.


In the population model

$$y_i = \beta_1 + \beta_2 x_i + \varepsilon_i, \quad i = 1, 2, \ldots, n,$$

the parameters $\beta_1$ and $\beta_2$ are the coefficients of the model.

Because economic theory contains hypotheses about how changes in one variable affect another, the main parameter of interest is the slope coefficient $\beta_2$, often called the derivative coefficient (a term that covers the mathematical derivative, the elasticity, etc., depending on the functional form; see below). For the same reason $y_i$ is referred to as the dependent variable, or regressand, and $x_i$ as the independent variable, or regressor.


Assumptions of the regression model (Table 2.1 in Greene)

A1 Linearity

A2 Full rank (absence of perfect collinearity)

A3 Exogeneity of $x_i$: the conditional mean of the stochastic disturbance term $\varepsilon_i$, which we write $E[\varepsilon_i \mid x_i]$, is zero.

A4 Homoscedasticity and non-autocorrelation

A5 Analysis is conditional on the observed $x_i$'s (meaning that all properties of the model estimators can be derived as if the $x_i$'s were deterministic, even in the stochastic regressor case).

A6 Normal distribution of the disturbances.


It is clear that A1-A6 is a mix of assumptions about the sample (A2) and about the population (A1, A3-A6). Logically, assumptions about the regression model should be about the population, so A2 could be postponed until estimation of the parameters, but listing it among the model assumptions has become customary.

Another feature, more worth deliberating, is the close relationship between A3 and A5: in the light of A5, A3 is implied. Conversely, when A3 holds, A5 also holds automatically.


Exogenous regressors (A3 and A5)

The case of exogeneity of a deterministic $x_i$ is trivial, since the covariance between $\varepsilon_i$ and the fixed, deterministic $x_i$ is zero. Then $E[\varepsilon_i \mid x_i] = E[\varepsilon_i]$, which is zero under the assumption $E[\varepsilon_i] = 0$; in many presentations this assumption replaces A3 (in the case of a deterministic regressor).

If both $x_i$ and $y_i$ are random variables, they have a joint probability density function (PDF), which we denote $f(x_i, y_i)$. For simplicity we assume that this PDF is bivariate normal (see Greene, Appendix B.9, p. 1009).

All the main results below also hold for distributions other than the normal, with the important exception of homoscedasticity (A4).


The binormal case

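The PDF of $\{x_i, y_i\}$ is

$$f(x_i, y_i) = \frac{1}{2\pi\sigma_x\sigma_y\sqrt{1-\rho^2}} \exp\left[-\frac{1}{2(1-\rho^2)}\left\{\frac{(x_i-\mu_x)^2}{\sigma_x^2} - 2\rho\frac{(x_i-\mu_x)(y_i-\mu_y)}{\sigma_x\sigma_y} + \frac{(y_i-\mu_y)^2}{\sigma_y^2}\right\}\right] \tag{1}$$

where $-\infty < \mu_x, \mu_y < \infty$, $0 < \sigma_x, \sigma_y < \infty$ and

$$\rho = \frac{E[(x_i-\mu_x)(y_i-\mu_y)]}{\sigma_x\sigma_y} = \frac{\sigma_{xy}}{\sigma_x\sigma_y}, \quad -1 < \rho < 1,$$

where $\sigma_{xy}$ is the covariance of $x_i$ and $y_i$ (denoted $\operatorname{Cov}[x_i, y_i]$).

$\sigma_x = \sqrt{\sigma_x^2}$ and $\sigma_y = \sqrt{\sigma_y^2}$, where $\sigma_x^2$ and $\sigma_y^2$ are the marginal variances of $x_i$ and $y_i$.

$\mu_x$ and $\mu_y$ are the marginal means of $x_i$ and $y_i$.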


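The marginal PDF of $x_i$ is a normal PDF:

$$f(x_i) = \frac{1}{\sqrt{2\pi\sigma_x^2}} \exp\left[-\frac{(x_i-\mu_x)^2}{2\sigma_x^2}\right] \tag{2}$$

The conditional PDF of $y_i$ is given by

$$f(y_i \mid x_i) = \frac{f(x_i, y_i)}{f(x_i)} \tag{3}$$

together with (1) and (2). It is not difficult to show that

$$f(y_i \mid x_i) = A \exp\left[-\frac{1}{2\sigma_y^2(1-\rho^2)}\left\{y_i - \mu_y + \rho\frac{\sigma_y}{\sigma_x}\mu_x - \rho\frac{\sigma_y}{\sigma_x}x_i\right\}^2\right], \tag{4}$$

where

$$A = \frac{1}{\sqrt{2\pi}\sqrt{\sigma_y^2(1-\rho^2)}}.$$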
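As a numerical sanity check of (1), (2) and (4), the short Python sketch below codes the three densities directly from the formulas and confirms that the joint density factors as $f(x_i, y_i) = f(x_i) f(y_i \mid x_i)$, as (3) requires. Inside (4) it uses the conditional mean $\mu_y - \rho\frac{\sigma_y}{\sigma_x}\mu_x + \rho\frac{\sigma_y}{\sigma_x}x_i$ and variance $\sigma_y^2(1-\rho^2)$. The parameter values are arbitrary illustrations and the function names are hypothetical.

```python
import math


def f_joint(x, y, mu_x, mu_y, s_x, s_y, rho):
    """Bivariate normal density f(x, y), eq. (1)."""
    zx = (x - mu_x) / s_x
    zy = (y - mu_y) / s_y
    quad = zx**2 - 2.0 * rho * zx * zy + zy**2
    norm = 2.0 * math.pi * s_x * s_y * math.sqrt(1.0 - rho**2)
    return math.exp(-quad / (2.0 * (1.0 - rho**2))) / norm


def f_marginal_x(x, mu_x, s_x):
    """Marginal normal density f(x), eq. (2)."""
    return math.exp(-((x - mu_x) ** 2) / (2.0 * s_x**2)) / math.sqrt(2.0 * math.pi * s_x**2)


def f_conditional_y(y, x, mu_x, mu_y, s_x, s_y, rho):
    """Conditional density f(y | x), eq. (4)."""
    cond_var = s_y**2 * (1.0 - rho**2)
    cond_mean = mu_y - rho * s_y / s_x * mu_x + rho * s_y / s_x * x
    A = 1.0 / (math.sqrt(2.0 * math.pi) * math.sqrt(cond_var))
    return A * math.exp(-((y - cond_mean) ** 2) / (2.0 * cond_var))


# Illustrative parameter values (not from the lecture)
params = dict(mu_x=2.0, mu_y=-1.0, s_x=1.5, s_y=0.7, rho=0.6)

for x, y in [(2.8, -0.4), (0.0, 1.0), (4.5, -2.2)]:
    joint = f_joint(x, y, **params)
    factored = f_marginal_x(x, params["mu_x"], params["s_x"]) * f_conditional_y(y, x, **params)
    print(f"x={x:4.1f} y={y:5.1f}  f(x,y)={joint:.6f}  f(x)f(y|x)={factored:.6f}")
```

Any point $(x, y)$ can be tried; the two columns agree up to floating-point rounding.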


Next, define the conditional expectation of $y_i$, $E[y_i \mid x_i]$, as

$$E[y_i \mid x_i] = \mu_y - \rho\frac{\sigma_y}{\sigma_x}\mu_x + \rho\frac{\sigma_y}{\sigma_x}x_i \tag{5}$$

and the conditional variance of $y_i$ as

$$\operatorname{Var}[y_i \mid x_i] = \sigma_y^2(1-\rho^2). \tag{6}$$

We then have the following important results:

1. The conditional PDF of $y_i$ given by (4) is a normal PDF.
2. If $y_i$ and $x_i$ are correlated, $\rho^2 \neq 0$, the conditional mean of $y_i$ given by (5) is a deterministic (linear) function of $x_i$. (5) is called the regression function.
3. If $y_i$ and $x_i$ are correlated, $\rho^2 \neq 0$, the conditional variance of $y_i$ given by (6) is less than the marginal variance $\sigma_y^2$:

$$\operatorname{Var}[y_i \mid x_i] < \sigma_y^2 \iff \rho^2 \neq 0 \tag{7}$$


The regression model in the binormal case

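We now define the disturbance $\varepsilon_i$ by

$$\varepsilon_i = y_i - E[y_i \mid x_i], \tag{8}$$

which is a stochastic variable with a normal distribution. We obtain the regression model as

$$y_i = E[y_i \mid x_i] + \varepsilon_i = \beta_1 + \beta_2 x_i + \varepsilon_i, \tag{9}$$

where the parameters of the model are linked to the PDF of the stochastic variables in the following way:

$$\beta_1 = \mu_y - \rho_{xy}\frac{\sigma_y}{\sigma_x}\mu_x = \mu_y - \frac{\sigma_{xy}}{\sigma_x^2}\mu_x \tag{10}$$

$$\beta_2 = \rho_{xy}\frac{\sigma_y}{\sigma_x} = \frac{\sigma_{xy}}{\sigma_x^2} \tag{11}$$

Finally, $\varepsilon_i \sim N(0, \sigma^2)$ with $\sigma^2 = \sigma_y^2(1-\rho_{xy}^2)$.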
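A minimal simulation sketch of (10) and (11), assuming NumPy is available: draw a large sample from a binormal PDF with illustrative moments, fit the simple regression by OLS, and compare the estimates with $\beta_1$, $\beta_2$ and $\sigma^2 = \sigma_y^2(1-\rho_{xy}^2)$ computed from the moments. The chosen means, standard deviations and correlation are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(seed=1)

# Illustrative population moments of (x_i, y_i)
mu_x, mu_y = 2.0, 5.0
s_x, s_y, rho = 1.5, 2.0, 0.6
s_xy = rho * s_x * s_y
cov = np.array([[s_x**2, s_xy],
                [s_xy, s_y**2]])

# Population regression parameters implied by (10)-(11), and the disturbance variance
beta2 = s_xy / s_x**2
beta1 = mu_y - beta2 * mu_x
sigma2 = s_y**2 * (1.0 - rho**2)   # smaller than s_y**2 because rho != 0, cf. (7)

# A large sample from the binormal PDF, then OLS on the simple regression
n = 200_000
draws = rng.multivariate_normal([mu_x, mu_y], cov, size=n)
x, y = draws[:, 0], draws[:, 1]
X = np.column_stack([np.ones(n), x])
b, *_ = np.linalg.lstsq(X, y, rcond=None)
s2 = np.sum((y - X @ b) ** 2) / (n - 2)

print("population:", beta1, beta2, sigma2)
print("OLS:       ", b[0], b[1], s2)
```

With a sample this large the OLS estimates land close to the population values, illustrating that OLS targets the parameters of the regression function (5).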


Above we have derived the population regression model for the case where the PDF of $\{x_i, y_i\}$ is binormal.

The results generalize to the multivariate case where $\{y_i, x_{1i}, x_{2i}, \ldots, x_{Ki}\}$ have a multivariate normal PDF.

This shows that A3, A5 and A6 are inherent properties of the model in this case.

A3 and A5 also hold for other PDFs, along with more specific results such as $\beta_2 = \rho_{xy}\sigma_y/\sigma_x$ (where the moments refer to that other PDF).

A4 may not hold for other PDFs: the variance of the disturbance may be non-constant and may also depend on $x_i$, i.e. the disturbance is heteroscedastic. With time-series data we may also have $\operatorname{Cov}[\varepsilon_t, \varepsilon_{t-j}] \neq 0$ for $j = 1, 2, \ldots$. This is called autocorrelation.


Status of the listed assumptions (#1)

Our analysis shows that it is (only) A1, A4 and A6 that are assumptions about the regression model (for the population).

A2 is an assumption about sample variability, not about the population.

A3 and A5 are properties (not assumptions) of the regression model.

For A3 (exogeneity) this seems paradoxical, since we will spend much time in this course finding ways of doing valid econometric analysis of linear relationships when $E[\varepsilon_i \mid x_i] = 0$ does not hold.

The resolution is that whenever the parameters of interest for our study are parameters of the regression function (5), the regression model, with OLS as the associated estimation method, is the appropriate methodology.


Status of the listed assumptions (#2)

Ideally, economic theory should tell us when the linear regression model contains the parameters of interest, and when another econometric model is needed.

Often, the theory does not take into account the stochastic nature of the variables, and alternative econometric models then need to be considered, for example with the aid of statistical tests of exogeneity. This will be covered later in the course.

Linearity (A1) is not very restrictive, because linearity in the parameters can be retained even though the data are non-linearly transformed prior to modelling.

This gives rise to a wide range of non-linear functional forms that are linear in the parameters: log-log, semilog and reciprocal functional forms.

The transformation affects the interpretation of the derivative coefficients.
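To illustrate how a non-linear relationship can still satisfy A1, the sketch below assumes a hypothetical multiplicative model $y_i = A x_i^{\beta_2} e^{\varepsilon_i}$. Taking logs gives the log-log form $\ln y_i = \ln A + \beta_2 \ln x_i + \varepsilon_i$, which is linear in the parameters, so OLS applies directly and the slope is interpreted as an elasticity. All numbers are made up for illustration; NumPy is assumed.

```python
import numpy as np

rng = np.random.default_rng(seed=2)
n = 5_000
A_true, elasticity = 3.0, 0.7          # hypothetical "true" values

x = rng.uniform(1.0, 50.0, size=n)
# Non-linear in x: y = A * x**elasticity * exp(eps)
y = A_true * x**elasticity * np.exp(rng.normal(0.0, 0.1, size=n))

# Linear in the parameters after the log transformation
X = np.column_stack([np.ones(n), np.log(x)])
b, *_ = np.linalg.lstsq(X, np.log(y), rcond=None)
ln_A_hat, elasticity_hat = b
print(f"ln A: {ln_A_hat:.3f} (true {np.log(A_true):.3f}), "
      f"elasticity: {elasticity_hat:.3f} (true {elasticity})")
```

In a semilog form, $\ln y_i = \beta_1 + \beta_2 x_i + \varepsilon_i$, the slope would instead be read as the approximate relative change in $y$ per unit change in $x$.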


Another assumption: constancy of parameters

Constancy of the parameters of the model cannot be taken for granted.

In the case of time-series data, where there is a natural ordering of the variables (from $t = 1$ to $t = T$, for example), it is easy to envisage that one or more parameters of the PDF can change at a given point in time.

It is possible to formalize this by working with the PDF of the whole sequence of variables $\{y_1, y_2, \ldots, y_T, x_1, x_2, \ldots, x_T\}$, which is called the Haavelmo distribution.

Non-constancy of parameters can be due to regime shifts (changes in economic behaviour), which can invalidate the model for policy analysis and damage forecast accuracy. On the other hand, regime shifts can help identify the direction of causality. We will show this at a later stage.


The multivariate regression model

$$y_i = \beta_1 x_{1i} + \beta_2 x_{2i} + \cdots + \beta_K x_{Ki} + \varepsilon_i, \quad i = 1, 2, \ldots, n.$$

Greene uses $K$ to denote the number of explanatory variables including the constant term (if $x_{1i} = 1$ for all $i$). In Biørn's notation, the number of variables is $K + 1$.

Defining two $n \times 1$ vectors $y$ and $\varepsilon$, an $n \times K$ matrix $X$ with all the variables, and a $K \times 1$ vector $\beta$ with the coefficients, we can write the model in matrix form:

$$y = X\beta + \varepsilon$$

The regression function is

$$E[y_i \mid x_i] = x_i'\beta = \beta_1 x_{1i} + \beta_2 x_{2i} + \cdots + \beta_K x_{Ki},$$

where the row vector $x_i'$ is made up of the elements in the $i$th row of the $X$ matrix.


Usually, the first variable will be specified as a vector of 1's (to include an intercept term). The other $K - 1$ coefficients are partial derivatives or elasticities.

In matrix notation the assumption/result about the variance of $\varepsilon$ is written as

$$\operatorname{Var}[\varepsilon] = E[\varepsilon\varepsilon'] = \sigma^2 I,$$

where $I$ is the $n \times n$ identity matrix.


OLS estimators

The OLS estimators of the coefficients in $\beta$ are given by

$$b = (X'X)^{-1}X'y. \tag{12}$$

This presumes that the inverse of the information matrix $(X'X)$ exists, which it does when assumption A2 holds.

Invertibility requires $\det(X'X) \neq 0$. As an illustration, assume

$$X = \begin{pmatrix} a & c \\ d & b \end{pmatrix},$$

then

$$\det(X'X) = \det(X)^2 = (ab - cd)^2.$$

If $b = \alpha d$ and $c = \alpha a$, the second column of $X$ is $\alpha$ times the first, $\det(X'X) = 0$, and the inverse of $X'X$ does not exist. This is a case of perfect multicollinearity, which is synonymous with reduced rank of $X$.
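A minimal NumPy sketch of (12), assuming simulated data with illustrative coefficients. It solves the normal equations $X'Xb = X'y$ rather than forming $(X'X)^{-1}$ explicitly (numerically preferable, same estimator), checks A2 through the rank of $X$, and reproduces the $2 \times 2$ example in which the second column of $X$ is $\alpha$ times the first. The helper name `ols` is hypothetical.

```python
import numpy as np


def ols(X, y):
    """OLS estimator b = (X'X)^{-1} X'y, eq. (12), computed from the normal equations."""
    if np.linalg.matrix_rank(X) < X.shape[1]:
        raise np.linalg.LinAlgError("X has reduced rank, so X'X is singular (A2 fails)")
    return np.linalg.solve(X.T @ X, X.T @ y)


rng = np.random.default_rng(seed=3)
n = 100
X = np.column_stack([np.ones(n), rng.normal(size=n), rng.normal(size=n)])
beta = np.array([1.0, 2.0, -0.5])      # illustrative coefficients
y = X @ beta + rng.normal(size=n)
b = ols(X, y)
print(b)

# The 2x2 illustration with b = alpha*d and c = alpha*a: the columns are proportional
a, d, alpha = 2.0, 3.0, 4.0
X_bad = np.array([[a, alpha * a],
                  [d, alpha * d]])
print(np.linalg.matrix_rank(X_bad))    # rank 1 < K = 2
print(np.linalg.det(X_bad.T @ X_bad))  # zero up to floating-point rounding
```

Calling `ols(X_bad, y_any)` on the proportional-column matrix raises the rank error instead of returning meaningless numbers.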


Assumption A2 states that $X$ has rank $K$. We have seen that this is an assumption about variability in the population and sample.

In the simple regression

$$y_i = \beta_1 + \beta_2 x_i + \varepsilon_i, \quad i = 1, 2, \ldots, n,$$

$x_i$ cannot be a constant, and in general no single variable can be an exact (deterministic) linear function of the other variables in $X$.

A classic example of inadvertently creating reduced rank is modelling quarterly seasonal effects with four seasonal dummies in a regression model that already includes an intercept.
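The seasonal-dummy trap can be seen directly in a sketch like the following (NumPy assumed, ten years of hypothetical quarterly observations): the four dummies sum to the intercept column, so $X$ loses one rank, while dropping one dummy restores full rank.

```python
import numpy as np

n_years = 10
quarter = np.tile(np.arange(4), n_years)                    # 0, 1, 2, 3, 0, 1, ...
dummies = (quarter[:, None] == np.arange(4)).astype(float)  # n x 4 seasonal dummies
const = np.ones((quarter.size, 1))

X_trap = np.hstack([const, dummies])        # intercept + all four dummies
X_ok = np.hstack([const, dummies[:, 1:]])   # intercept + three dummies (Q1 as base)

print(X_trap.shape[1], np.linalg.matrix_rank(X_trap))   # 5 4
print(X_ok.shape[1], np.linalg.matrix_rank(X_ok))       # 4 4
```

Dropping the intercept and keeping all four dummies would also give full rank; the choice only changes how the seasonal coefficients are interpreted.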


Consider the case of $K = 3$, with an intercept ($x_{1i} = 1$ for all $i$) and two explanatory variables. The OLS estimators of the two derivative coefficients can be written as

$$b_2 = \frac{s_{x_3}^2 s_{yx_2} - s_{yx_3} s_{x_2x_3}}{s_{x_2}^2 s_{x_3}^2 - s_{x_2x_3}^2}, \tag{13}$$

$$b_3 = \frac{s_{x_2}^2 s_{yx_3} - s_{yx_2} s_{x_2x_3}}{s_{x_2}^2 s_{x_3}^2 - s_{x_2x_3}^2}, \tag{14}$$

where

$$s_{x_k}^2 = \frac{1}{n}\sum_{i=1}^{n}(x_{ki} - \bar{x}_k)^2, \quad k = 2, 3,$$

$$s_{x_2x_3} = \frac{1}{n}\sum_{i=1}^{n}(x_{2i} - \bar{x}_2)(x_{3i} - \bar{x}_3),$$

$$s_{yx_k} = \frac{1}{n}\sum_{i=1}^{n}(y_i - \bar{y})(x_{ki} - \bar{x}_k), \quad k = 2, 3.$$


(13) and (14) have the same denominator, which can be written as

$$s_{x_2}^2 s_{x_3}^2 - s_{x_2x_3}^2 = s_{x_2}^2 s_{x_3}^2 (1 - r_{x_2x_3}^2),$$

where $r_{x_2x_3}^2$ is the squared correlation coefficient between $x_2$ and $x_3$.

$r_{x_2x_3}^2 < 1$ (absence of perfect collinearity) and $s_{x_k}^2 > 0$, $k = 2, 3$ (variability of the regressors) ensure the existence of the estimators.

$r_{x_2x_3}^2 = 0$ (no collinearity) reduces the expressions for $b_2$ and $b_3$ to

$$b_2 = \frac{s_{yx_2}}{s_{x_2}^2}, \quad b_3 = \frac{s_{yx_3}}{s_{x_3}^2},$$

which are the OLS slope estimators in the simple regressions of $y$ on $x_2$ and of $y$ on $x_3$. The regressors are then said to be orthogonal.

If $r_{x_2x_3}^2 > 0$, estimation of the partial effects of $x_2$ and $x_3$ on $y$ requires multiple regression (they cannot be estimated by simple regression).
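A quick numerical check of (13) and (14), under illustrative assumptions (NumPy, simulated correlated regressors): the moment formulas with divisor $n$ reproduce the slope coefficients from the matrix formula (12), while the simple-regression slope $s_{yx_2}/s_{x_2}^2$ differs from $b_2$ because $r_{x_2x_3}^2 > 0$. The helper `s` is a hypothetical name.

```python
import numpy as np


def s(u, v):
    """Sample (co)variance with divisor n, as in the definitions accompanying (13)-(14)."""
    return np.mean((u - u.mean()) * (v - v.mean()))


rng = np.random.default_rng(seed=4)
n = 200
x2 = rng.normal(size=n)
x3 = 0.5 * x2 + rng.normal(size=n)          # correlated regressors, r^2 > 0
y = 1.0 + 2.0 * x2 - 1.0 * x3 + rng.normal(size=n)

den = s(x2, x2) * s(x3, x3) - s(x2, x3) ** 2
b2 = (s(x3, x3) * s(y, x2) - s(y, x3) * s(x2, x3)) / den   # (13)
b3 = (s(x2, x2) * s(y, x3) - s(y, x2) * s(x2, x3)) / den   # (14)

# The same estimates from the matrix formula (12) with an intercept column
X = np.column_stack([np.ones(n), x2, x3])
b = np.linalg.solve(X.T @ X, X.T @ y)
print(np.allclose([b2, b3], b[1:]))         # True

# Simple-regression slope of y on x2: differs from b2 because x2 and x3 are correlated
b2_simple = s(y, x2) / s(x2, x2)
print(b2, b2_simple)
```

Here the simple regression picks up part of the (negative) effect of the omitted $x_3$, so `b2_simple` is pulled towards 1.5 while `b2` stays near 2.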


Frisch-Waugh theorem

First regress $y_i$ on $x_{3i}$ (using OLS), then regress $x_{2i}$ on $x_{3i}$, and then regress the residuals from the first regression ($e_{yx_3,i}$) on the residuals from the second regression ($e_{x_2x_3,i}$). Let $\tilde{b}_2$ denote the OLS estimate of the derivative coefficient in the regression between the residuals.

Straightforward algebra shows that $\tilde{b}_2 = b_2$.

This result is due to Frisch and Waugh (1933) and shows that an alternative to multiple regression is to first "filter out" the effects of the third variable from both the dependent variable and the $x_2$ variable, and then perform simple regression on the "filtered" variables.

The correlation coefficient between $e_{yx_3,i}$ and $e_{x_2x_3,i}$ is the partial correlation coefficient between $y$ and $x_2$, controlling for the influence of $x_3$.
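The Frisch-Waugh result is easy to confirm numerically. The sketch below (NumPy assumed, simulated data with illustrative coefficients) filters the intercept and $x_3$ out of both $y$ and $x_2$, regresses residuals on residuals, and compares the slope $\tilde b_2$ with $b_2$ from the multiple regression; it also computes the partial correlation as the correlation between the two residual series. The helper `residuals` is hypothetical.

```python
import numpy as np


def residuals(v, Z):
    """OLS residuals from regressing v on the columns of Z."""
    coef, *_ = np.linalg.lstsq(Z, v, rcond=None)
    return v - Z @ coef


rng = np.random.default_rng(seed=5)
n = 300
x3 = rng.normal(size=n)
x2 = 0.8 * x3 + rng.normal(size=n)                  # x2 and x3 correlated
y = 0.5 + 1.5 * x2 + 2.0 * x3 + rng.normal(size=n)
ones = np.ones(n)

# Multiple regression of y on (1, x2, x3)
b = np.linalg.lstsq(np.column_stack([ones, x2, x3]), y, rcond=None)[0]

# Frisch-Waugh: filter (1, x3) out of y and out of x2, then regress residuals on residuals
Z = np.column_stack([ones, x3])
e_y = residuals(y, Z)
e_x2 = residuals(x2, Z)
b2_tilde = (e_x2 @ e_y) / (e_x2 @ e_x2)

# Partial correlation between y and x2, controlling for x3
partial_corr = np.corrcoef(e_y, e_x2)[0, 1]
print(b[1], b2_tilde, partial_corr)
```

Because both residual series have mean zero, the residual-on-residual slope is the same with or without an intercept.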


Residuals and multiple correlation

Least squares residuals:

$$e = y - Xb = My, \qquad M = I - X(X'X)^{-1}X',$$

where $M$ is dubbed the "residual maker" by Greene.

Next, consider the value of $y$ predicted by the regression, call it $\hat{y}$:

$$\hat{y} = y - e = (I - M)y = Py, \qquad P = X(X'X)^{-1}X',$$

where $P$ is the projection matrix. Note that

$$y = \hat{y} + e = \text{projection} + \text{residual}.$$

If the variable $x_1$ is a constant, then $\sum_i e_i = 0$ and $\bar{y} = \bar{\hat{y}}$.
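The algebra of $M$ and $P$ can be checked on a small simulated example. This NumPy sketch forms both matrices explicitly (fine for small $n$, wasteful for large $n$) and verifies $e = y - Xb = My$, $MX = 0$, idempotency of $M$, $y = \hat y + e$, and, because the first column of $X$ is a constant, $\sum_i e_i = 0$ and $\bar y = \bar{\hat y}$. The data-generating values are illustrative.

```python
import numpy as np

rng = np.random.default_rng(seed=6)
n, K = 50, 3
X = np.column_stack([np.ones(n), rng.normal(size=(n, K - 1))])
y = X @ np.array([1.0, -2.0, 0.5]) + rng.normal(size=n)

XtX_inv = np.linalg.inv(X.T @ X)
P = X @ XtX_inv @ X.T          # projection matrix
M = np.eye(n) - P              # residual maker

b = XtX_inv @ X.T @ y
e = M @ y
y_hat = P @ y

checks = {
    "e = y - Xb": np.allclose(e, y - X @ b),
    "MX = 0": np.allclose(M @ X, 0.0),
    "M idempotent": np.allclose(M @ M, M),
    "y = y_hat + e": np.allclose(y, y_hat + e),
    "sum of e = 0": np.isclose(e.sum(), 0.0),
    "mean y = mean y_hat": np.isclose(y.mean(), y_hat.mean()),
}
for name, ok in checks.items():
    print(f"{name:22s} {ok}")
```

Removing the column of ones from `X` makes the last two checks fail in general, which is why the result is stated for a constant $x_1$.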


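We can therefore write $(y_i - \bar{y})$ as

$$y_i - \bar{y} = \hat{y}_i + e_i - \bar{\hat{y}} = x_i'b - \bar{x}'b + e_i = (x_i - \bar{x})'b + e_i.$$

For the $K = 2$ case we know that $\sum_{i=1}^{n} e_i(x_{2i} - \bar{x}_2) = 0$ by virtue of the OLS first-order conditions, and this generalizes to the multivariate case: $\sum_{i=1}^{n} (x_i - \bar{x})'b\, e_i = 0$. Hence

$$\underbrace{\sum_{i=1}^{n}(y_i - \bar{y})^2}_{SST} = \underbrace{\sum_{i=1}^{n}(\hat{y}_i - \bar{\hat{y}})^2}_{SSR} + \underbrace{\sum_{i=1}^{n}e_i^2}_{SSE},$$

motivating

$$R^2 = \frac{SSR}{SST} = 1 - \frac{SSE}{SST}.$$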


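Properties of the coefficient of determination

$0 \le R^2 \le 1$.

$R^2$ is at least as large as the square of any simple or partial correlation coefficient between $y$ and a regressor.

$R^2$ never decreases when a regressor is added (it is non-decreasing in $K$), so it is not useful for determining $K$. For that purpose, use the adjusted $R^2$,

$$\bar{R}^2 = 1 - \frac{n-1}{n-K}(1 - R^2),$$

or do a formal test of significance.

$R^2$ is not invariant to "trivial changes in the model".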
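A minimal sketch of the decomposition $SST = SSR + SSE$, of $R^2$ and of the adjusted $\bar R^2$, assuming NumPy and simulated data: adding a regressor that is unrelated to $y$ by construction cannot lower $R^2$, while $\bar R^2$ applies the degrees-of-freedom penalty. The function name `r2_and_adjusted` and all numbers are hypothetical.

```python
import numpy as np


def r2_and_adjusted(X, y):
    """R^2 and adjusted R^2 for an OLS fit whose first column of X is a constant."""
    n, K = X.shape
    b, *_ = np.linalg.lstsq(X, y, rcond=None)
    y_hat = X @ b
    e = y - y_hat
    sst = np.sum((y - y.mean()) ** 2)
    ssr = np.sum((y_hat - y_hat.mean()) ** 2)   # regression sum of squares
    sse = e @ e                                  # residual (error) sum of squares
    assert np.isclose(sst, ssr + sse)            # SST = SSR + SSE needs the intercept
    r2 = 1.0 - sse / sst
    r2_adj = 1.0 - (n - 1) / (n - K) * (1.0 - r2)
    return r2, r2_adj


rng = np.random.default_rng(seed=7)
n = 40
x = rng.normal(size=n)
y = 1.0 + 0.5 * x + rng.normal(size=n)
irrelevant = rng.normal(size=n)                  # unrelated to y by construction

X_small = np.column_stack([np.ones(n), x])
X_big = np.column_stack([X_small, irrelevant])
r2_s, r2a_s = r2_and_adjusted(X_small, y)
r2_b, r2a_b = r2_and_adjusted(X_big, y)
print(f"K=2: R2={r2_s:.4f}, adjusted={r2a_s:.4f}")
print(f"K=3: R2={r2_b:.4f}, adjusted={r2a_b:.4f}")
```

Whether $\bar R^2$ falls in a particular sample depends on how much the irrelevant regressor happens to explain; $R^2$ itself cannot fall.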


Unbiasedness

Conditional unbiasedness

For the $K$-variable regression model we have

$$b = (X'X)^{-1}X'y = (X'X)^{-1}X'[X\beta + \varepsilon] = \beta + (X'X)^{-1}X'\varepsilon,$$

meaning that each element in $b$ is the sum of the true coefficient and a weighted sum of the $n$ disturbances. The weights, the elements of $(X'X)^{-1}X'$, are constructed from the explanatory variables in $X$ and their second-order moments. Since $\varepsilon$ is random, $b$ is also a stochastic variable: an estimator.

If we condition on a particular set of observations (a particular realization of all the possible $X$'s), the conditional expectation of $b$ is $\beta$, since by A3

$$E[b \mid X] = \beta + E[(X'X)^{-1}X'\varepsilon \mid X] = \beta + (X'X)^{-1}X'E[\varepsilon \mid X] = \beta.$$

Note that we can show the conditional results by thinking as if the $x$'s were deterministically generated!


Unbiasedness

Unconditionally

Conditional on $X$, $b$ has expectation $\beta$ for any given set of observations. Therefore, when we average over all possible realizations (all possible data sets), the mean of all the conditional expectations must be $\beta$.

Formally, we show (unconditional) unbiasedness by the law of iterated expectations:

$$E[b] = E_X\{E[b \mid X]\} = E_X[\beta] = \beta,$$

where $E_X$ denotes that we take the average over variations of $X$.


Conditional distribution

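If assumption A6 holds (together with A3 and A4), $\varepsilon$ has a multivariate normal PDF with mean $0$ and variance $\sigma^2 I$:

$$\varepsilon \sim N(0, \sigma^2 I).$$

Since each $b_k$ in $b$ is a linear combination of the $\varepsilon_i$'s, it follows that

$$b \mid X \sim N(\beta, \sigma^2(X'X)^{-1}).$$

The variance is derived from

$$\begin{aligned}
E[(b-\beta)(b-\beta)' \mid X] &= E[(X'X)^{-1}X'\varepsilon\varepsilon'X(X'X)^{-1} \mid X] \\
&= (X'X)^{-1}X'E[\varepsilon\varepsilon' \mid X]X(X'X)^{-1} \\
&= \sigma^2(X'X)^{-1}.
\end{aligned}$$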
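A Monte Carlo sketch of $E[b \mid X] = \beta$ and $\operatorname{Var}[b \mid X] = \sigma^2(X'X)^{-1}$, assuming NumPy and illustrative values: $X$ is drawn once and then held fixed (we condition on it), and only $\varepsilon$ is redrawn in each replication. It uses the representation $b = \beta + (X'X)^{-1}X'\varepsilon$ directly.

```python
import numpy as np

rng = np.random.default_rng(seed=8)
n, sigma = 30, 1.5
beta = np.array([2.0, 0.8])                                        # illustrative
X = np.column_stack([np.ones(n), rng.uniform(0.0, 10.0, size=n)])  # drawn once, then fixed
XtX_inv = np.linalg.inv(X.T @ X)
A = XtX_inv @ X.T                        # K x n weight matrix: b = beta + A @ eps

R = 20_000                               # replications
eps = rng.normal(0.0, sigma, size=(R, n))
b_draws = beta + eps @ A.T               # row r is the OLS estimate in replication r

mc_mean = b_draws.mean(axis=0)
mc_cov = np.cov(b_draws, rowvar=False)
theory_cov = sigma**2 * XtX_inv
print("E[b | X]:", mc_mean, "vs beta", beta)
print("Var[b | X]:\n", mc_cov, "\nvs sigma^2 (X'X)^{-1}:\n", theory_cov)
```

Redrawing `X` inside each replication as well would instead illustrate the unconditional result $E[b] = \beta$.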


OLS variance and consistency

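For $b_k$ in the $K = 3$ model we have

$$\operatorname{Var}[b_k \mid X] = \frac{\sigma^2}{n s_{x_k}^2 (1 - r_{x_2x_3}^2)}, \quad k = 2, 3. \tag{15}$$

The higher the degree of multicollinearity, the larger the variances.

The variances are decreasing functions of $n$.

$\operatorname{Var}[b_k \mid X] \to 0$ as $n \to \infty$ (provided $s_{x_k}^2$ and $r_{x_2x_3}^2$ settle down to limits with $s_{x_k}^2 > 0$ and $r_{x_2x_3}^2 < 1$).

Together with unbiasedness, this implies consistency: $\operatorname{plim} b = \beta$.

These results generalize to any number of variables.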


A (first) Monte Carlo experiment

We let the computer generate 1000 data series according to

$$C_i = 54.5 + 0.8 I_i + \varepsilon_i, \quad i = 1, 2, \ldots, n.$$

$C$ symbolizes consumption, and $I$ income.

We generate the 1000 replications by "drawing from" a normal PDF for the disturbance $\varepsilon_i$.

In the experiment we vary the sample length $n$, the sample variance of $I_i$, and $\sigma^2$.

$I_i$ is first treated as deterministic, so we first look at the conditional properties of OLS. Then $I_i$ is treated as random.
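The design can be sketched in a few lines of Python (NumPy assumed). The lecture does not give the income series, so the sketch invents a hypothetical one: $I_i$ is drawn from a normal distribution with mean 100 and a standard deviation that stands in for "high" or "low" sample variance. With `stochastic_income=False` the same $I_i$ are reused in every replication (conditional analysis); with `True` they are redrawn each time. The function name `mc_slope` and the income process are assumptions, so the output will not reproduce the numbers in the tables.

```python
import numpy as np


def mc_slope(n, sigma2, income_sd, stochastic_income, R=1000, seed=0):
    """Mean and standard deviation of the OLS slope over R replications of
    C_i = 54.5 + 0.8 I_i + eps_i, eps_i ~ N(0, sigma2).
    The income process N(100, income_sd^2) is a hypothetical stand-in."""
    rng = np.random.default_rng(seed)

    def draw_income():
        return 100.0 + income_sd * rng.standard_normal(n)

    income = draw_income()                 # reused in every replication if deterministic
    slopes = np.empty(R)
    for r in range(R):
        if stochastic_income:
            income = draw_income()         # fresh regressor values in each replication
        eps = rng.normal(0.0, np.sqrt(sigma2), size=n)
        consumption = 54.5 + 0.8 * income + eps
        X = np.column_stack([np.ones(n), income])
        slopes[r] = np.linalg.lstsq(X, consumption, rcond=None)[0][1]
    return slopes.mean(), slopes.std(ddof=1)


# (n, sigma^2, sd of income): the "high"/"low" regressor variances are assumptions
designs = {"MC-1": (47, 3.0, 5.0), "MC-2": (28, 3.0, 5.0),
           "MC-3": (47, 1.5, 5.0), "MC-4": (47, 3.0, 1.5)}

results = {}
for name, (n, s2, sd_income) in designs.items():
    for stochastic in (False, True):
        results[name, stochastic] = mc_slope(n, s2, sd_income, stochastic)
        mean, sd = results[name, stochastic]
        print(f"{name} stochastic={stochastic!s:5}: E[b2]={mean:.5f} sd(b2)={sd:.5f}")
```

The qualitative pattern to look for is the one in (15): a smaller $\sigma^2$ or a larger sample variance of $I_i$ lowers the dispersion of $\hat\beta_2$, while its mean stays close to 0.8 in both the deterministic and the stochastic case.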


Deterministic $I_i$

| | MC-1 | MC-2 | MC-3 | MC-4 |
|---|---|---|---|---|
| $n$ | 47 | 28 | 47 | 47 |
| $\sigma^2$ | 3 | 3 | 1.5 | 3 |
| $\hat{\sigma}_X^2$ | "high" | "high" | "high" | "low" |
| $E[\hat{\beta}_2]$ | 0.79940 | 0.80601 | 0.79958 | 0.80595 |
| $\sqrt{\operatorname{Var}[\hat{\beta}_2]}$ | 0.0616 | 0.072909 | 0.04356 | 0.18622 |


$I_i$ is stochastic

| | MC-1 | MC-2 | MC-3 | MC-4 |
|---|---|---|---|---|
| $n$ | 47 | 28 | 47 | 47 |
| $\sigma^2$ | 3 | 3 | 1.5 | 3 |
| $\hat{\sigma}_X^2$ | "high" | "high" | "high" | "low" |
| $E[\hat{\beta}_2]$ | 0.80192 | 0.80601 | 0.80136 | 0.79731 |
| $\sqrt{\operatorname{Var}[\hat{\beta}_2]}$ | 0.066575 | 0.072909 | 0.047076 | 0.16021 |


Equipped with the regression model and a data sample, we obtain OLS estimates and can test hypotheses about the population parameters.

Student-t distributed test statistics: hypotheses about single parameters, confidence/prediction intervals.

$\chi^2$ and $F$ distributed test statistics: joint hypotheses of the form $H_0: R\beta = q$ (Greene Ch. 5.3).

These statistics (and modifications of them) also play a role in testing for functional form and structural breaks, a point we shall return to because of its importance for modelling.


Robustness of OLS inference (#1)

Important premises for the above procedure:

That the OLS estimators are conditionally normally distributed.

That the sum of squared and standardized OLS residuals, where

$$e_i = y_i - x_i'b = \varepsilon_i - x_i'(b - \beta),$$

has a conditional $\chi^2$ distribution:

$$\frac{\sum_{i=1}^{n} e_i^2}{\sigma^2} \,\Big|\, X \sim \chi^2(n - K).$$

That all inference is conditional on $X$ is not entirely convincing: after all, the explanatory variables are a mix of deterministic and random variables, and the sample only gives one realization of the random variables. Inference seeks to generalize from the sample results to the population and should not depend on the observations of the $x$'s that the data generation happened to deliver.


Robustness of OLS inference (#2)

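Heuristically, this problem is not so serious. For example, the $t$ distribution used for testing $H_0: \beta_k = \beta_k^0$,

$$\frac{\hat{\beta}_k - \beta_k^0}{\sqrt{\widehat{\operatorname{Var}}(\hat{\beta}_k)}} \sim t(n - K), \tag{16}$$

where the variance is estimated with $s^2 = e'e/(n-K)$ in place of $\sigma^2$, depends on the degrees of freedom, but not on $X$.

In this sense, OLS inference is unconditional.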
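A small sketch of (16) and of the claim that its null distribution does not depend on $X$ (NumPy assumed, illustrative design): $X$ is redrawn in every replication, $H_0: \beta_2 = 0$ is true, and the rejection frequency at the nominal 5% level should be close to 0.05. The critical value 2.0017 is approximately the 97.5% quantile of $t(58)$; with SciPy available, `scipy.stats.t.ppf(0.975, 58)` computes it. The helper `t_stat` is hypothetical.

```python
import numpy as np


def t_stat(X, y, k, beta0_k=0.0):
    """t statistic (16) for H0: beta_k = beta0_k, with s^2 = e'e/(n - K) replacing sigma^2."""
    n, K = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    b = XtX_inv @ X.T @ y
    e = y - X @ b
    s2 = e @ e / (n - K)
    return (b[k] - beta0_k) / np.sqrt(s2 * XtX_inv[k, k])


rng = np.random.default_rng(seed=9)
n, R = 60, 4000
crit = 2.0017                               # approx. 97.5% quantile of t(n - K) = t(58)
rejections = 0
for _ in range(R):
    X = np.column_stack([np.ones(n), rng.normal(size=n)])   # new X in each replication
    y = 1.0 + rng.normal(size=n)                            # beta_2 = 0, so H0 is true
    rejections += int(abs(t_stat(X, y, k=1)) > crit)
rejection_rate = rejections / R
print(rejection_rate)                       # should be near 0.05
```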


Robustness of OLS inference: asymptotics

Even if the disturbances are not normally distributed (departure from A6), or if they are heteroscedastic (departure from A4), inference based on OLS remains approximately correct, and the statistical quality of the inference improves as the sample size $n$ increases. Also, $b$ is asymptotically normally distributed even though it may depart from the normal distribution in finite samples.

This builds on the insight of the central limit theorem: sequences of averages of non-normal but independent variables converge in distribution to a normal PDF.

Since OLS estimates take the form of averages of a similar nature, the result holds in many cases.


Limitations of the regression model and OLS estimation.

Despite the above, improved inference may still be achieved in small samples by modifying the estimators and/or the test statistics (e.g., correction for heteroscedasticity or autocorrelation). You will learn about that in this course.

The first serious limitation is that the parameters of interest are not always "in" the regression model. We then need a different econometric model. This is also covered by this course.

A second limitation lies in the assumption that the random variables $x_{k1}, x_{k2}, \ldots, x_{kn}$ are independent. This too is not as serious as it first seems; cf. Greene's discussion of "well behaved data" on page 65.

Moreover, OLS performs relatively well even in the case with a "lagged dependent variable" mentioned by Greene at the bottom of page 73.


Lagged endogenous regressor (#1)

The essence of the model discussed by Greene is

$$y_t = \beta_2 y_{t-1} + \varepsilon_t, \quad \varepsilon_t \sim N(0, 1), \quad t = 1, \ldots, T. \tag{17}$$

The problematic feature here is that $y_{t-1}$ is correlated with $\varepsilon_{t-1}$ and all "older" $\varepsilon_t$'s.

This creates a finite-sample bias in the OLS estimate (the Hurwicz bias).

For the simplest case, model (17), we have:

| Function | Asymptotic | Finite sample |
|---|---|---|
| $E[b_2]$ | $\beta_2$ | $\beta_2 - 2\beta_2/(T-1)$ |
| $\operatorname{Var}[b_2]$ | $0$ | $(1 - \beta_2^2)/T$ |
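The Hurwicz bias is easy to see in a small simulation. The NumPy sketch below assumes model (17) with an illustrative $\beta_2 = 0.5$, $T = 25$, a stationary starting value $y_0 \sim N(0, 1/(1-\beta_2^2))$ (an assumption; it requires $|\beta_2| < 1$), and OLS without an intercept. It compares the simulated mean and variance of $b_2$ with the finite-sample expressions in the table. The function name `ar1_ols_slopes` is hypothetical.

```python
import numpy as np


def ar1_ols_slopes(beta2, T, R=20_000, seed=10):
    """OLS estimates of beta2 in y_t = beta2 * y_{t-1} + eps_t, eps_t ~ N(0, 1),
    t = 1, ..., T (no intercept), over R replications. Requires |beta2| < 1."""
    rng = np.random.default_rng(seed)
    y = np.empty((R, T + 1))
    y[:, 0] = rng.normal(0.0, 1.0 / np.sqrt(1.0 - beta2**2), size=R)  # stationary start
    eps = rng.standard_normal((R, T))
    for t in range(1, T + 1):
        y[:, t] = beta2 * y[:, t - 1] + eps[:, t - 1]
    y_lag, y_now = y[:, :-1], y[:, 1:]
    return np.sum(y_lag * y_now, axis=1) / np.sum(y_lag**2, axis=1)


beta2, T = 0.5, 25
b2 = ar1_ols_slopes(beta2, T)
print("mean:", b2.mean(), "approximation:", beta2 - 2 * beta2 / (T - 1))
print("variance:", b2.var(), "approximation:", (1 - beta2**2) / T)
```

Increasing `T` shrinks both the bias and the variance, in line with the asymptotic column.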


Lagged endogenous regressor (#2)

These results can be illustrated by Monte Carlo simulation using PcNaive, which is part of the PcGive family of programs.

The limiting case, where this extension of OLS inference breaks down, is $\beta_2 = 1$. We are then in the realm of non-stationary random variables, i.e. assumption AD5 on page 74 in Greene does not hold.

One of the best-known pitfalls of non-stationarity arises when we estimate

$$y_t = \beta_1 + \beta_2 x_t + \varepsilon_t$$

with $y_t$ and $x_t$ non-stationary and unrelated. Standard OLS inference then "always" leads to rejection of $H_0: \beta_2 = 0$ even though $H_0$ is true: spurious regression.

The solution requires another model, cointegration, which is not covered in this course.
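A minimal sketch of the spurious regression problem (NumPy assumed; $T = 100$ and 2000 replications are illustrative choices): $y_t$ and $x_t$ are generated as independent random walks, so $H_0: \beta_2 = 0$ is true, yet the conventional $t$ test at a nominal 5% level rejects far more often than 5%. The critical value 1.96 is the normal approximation to $t(98)$, and the function name is hypothetical.

```python
import numpy as np


def spurious_rejection_rate(T=100, R=2000, crit=1.96, seed=11):
    """Share of replications in which the usual t test rejects H0: beta2 = 0
    when y_t and x_t are independent random walks (so H0 is true)."""
    rng = np.random.default_rng(seed)
    rejections = 0
    for _ in range(R):
        y = np.cumsum(rng.standard_normal(T))    # random walk
        x = np.cumsum(rng.standard_normal(T))    # independent random walk
        X = np.column_stack([np.ones(T), x])
        XtX_inv = np.linalg.inv(X.T @ X)
        b = XtX_inv @ X.T @ y
        e = y - X @ b
        s2 = e @ e / (T - 2)
        t = b[1] / np.sqrt(s2 * XtX_inv[1, 1])
        rejections += int(abs(t) > crit)
    return rejections / R


rate = spurious_rejection_rate()
print(rate)   # far above the nominal 0.05
```

Replacing the cumulative sums by the raw normal draws (stationary series) brings the rejection frequency back to roughly 0.05.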
