GraphPad StatMate 2 Copyright (C) 2004, GraphPad Software, Inc.

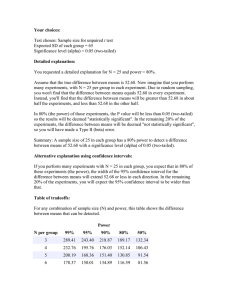
