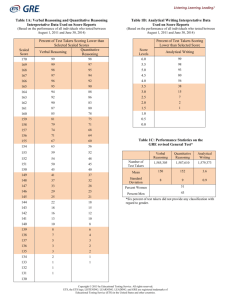

ETS Standards 2014
