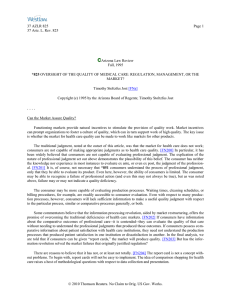

Published in the International Journal of Law and Information Technology,... Journal website at

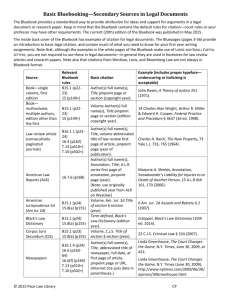
