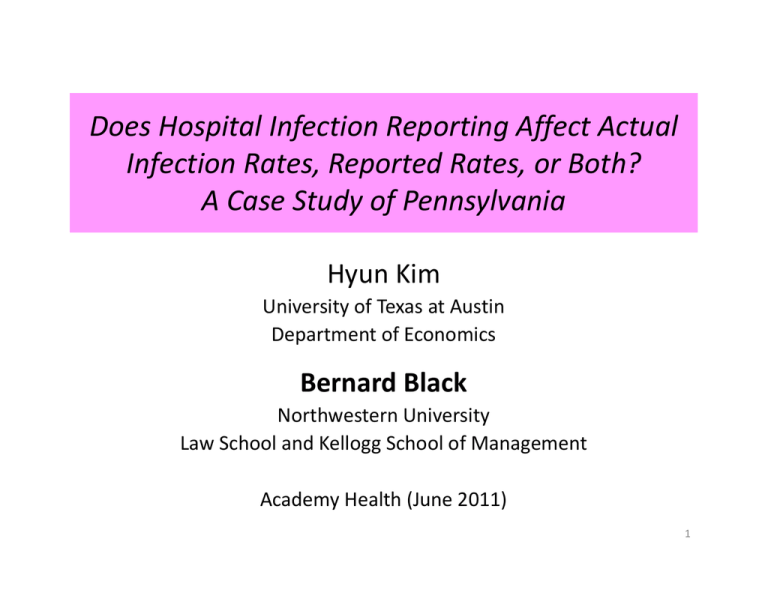

Does Hospital Infection Reporting Affect Actual Infection Rates, Reported Rates, or Both? A Case Study of Pennsylvania


Hyun Kim, University of Texas at Austin, Department of Economics
Bernard Black, Northwestern University Law School and Kellogg School of Management
Academy Health (June 2011)

Background
• Roughly 1.7 million annual Healthcare Associated Infections (HAIs)
  – ~100,000 result in death
• Direct U.S. healthcare costs of HAIs ~$45 billion
  – total annual costs > $100 billion
• We study Central-Line Associated Bloodstream Infections (CLABSI)
  – Roughly 80,000 infections; 30,000 deaths
  – often preventable (Pronovost et al., 2006)

Does Public Reporting Help?
• 20 states: hospitals must publicly report HAI rates
  – More state reports coming
  – National: first CLABSI reports late 2011
• Effect of reporting on infection rates: unknown
• Pro: “You manage what you measure”
  – High rates → patient and doctor pressure, professional pride, internal resources
• Con:
  – Can measuring distract from controlling?
  – Do patients, doctors or insurers use the data?

Broader Context
• US healthcare is:
  – Wildly expensive . . . and getting more so
  – Of only middling quality
• How can we fix those twin problems?
  – What role for public reporting of outcomes?

Three main results
• Public reporting →
  (1) lower actual infection rates
  (2) “gaming” (time-inconsistent public reporting)
  (3) Threshold effect: both effects mostly for high-rate hospitals
• Key methodology innovation: Study proxy rates (“inpatient” rates), not reported rates
  – Based on AHRQ Patient Safety Indicator (PSI)-7
• PSI-7 is AHRQ’s measure of CLABSI
  – correlates strongly with reported rates
  – not reported → hopefully not gamed

Correlation between Reported and Inpatient Infections
[Figure: scatter plot of PHC4 (reported) infections against inpatient infections, with fitted line and outliers marked. Slope = 0.848***; correlation = 0.77. Data for PA for 2005, the first reporting year.]

Why use inpatient rates?
Lets us come closer to the “gold standard”: the drug trial protocol.

| Drug Trial Design | Before | After |
| --- | --- | --- |
| Treatment group | Data | Data |
| Control group | Data | Data |

• “Difference in differences” (DiD) research design: treated vs. controls; after vs. before
• Random assignment to treatment
• “Double blind”: researchers can’t manipulate outcomes

Compare public infection reporting

| | Before | After |
| --- | --- | --- |
| Treated (hospitals in reporting states) | ? | Reported data |
| Controls (hospitals in non-reporting states) | ? | ? |

Usual (weak) research design:
• Assume reporting is accurate (time-consistent)
• Study reported infection rates:
  – only for treated group
  – only for treatment period

DiD research design for inpatient rate

| | Before | After |
| --- | --- | --- |
| Treated (hospitals in PA) | Inpatient data (PA) | Publicly reported data; inpatient data (PA) |
| Controls (10 non-reporting states) | Inpatient data (NIS) | Inpatient data (NIS) |

• Difference-in-Differences design (“natural” experiment)
• Also assess gaming:
  – Within treated group: compare reported rates to inpatient rates
  – Divergence → evidence of gaming
• Control states: CA, IL, IA, MA, MD, NJ, OR, UT, WA, WI
• Exclude: < 40 beds; gov’t owned

Mean CLABSI inpatient rates, 1998-2008 (per 1,000 discharges)
[Figure: yearly mean inpatient rates for Pennsylvania and the control states, 1998-2008, with the start of mandatory reporting marked.]

Inpatient and Reported Rates, 1998-2008
[Figure: Pennsylvania reported rate and Pennsylvania inpatient rate, 1998-2008, with the start of mandatory reporting marked. Reported rate is extrapolated, based on inpatient rate, to 1998-2004 and 2008.]

Impact of Reporting: Regression Analysis
1. Compare Trends
2. DiD
3. Structural break (not shown)
• Controls and econometric method:
  – Hospitals (X_it): beds; teaching, rural, for-profit dummies
  – State and year fixed effects
  – Exclude outliers by state and year
  – Standard errors with hospital clusters
  – Lots of robustness checks

Compare Trends in Inpatient Rates

| Period | Year | PA*Year |
| --- | --- | --- |
| Pre-reporting (1998-2003) | 0.041 (3.92)*** | 0.005 (0.37) |
| Reporting (2003-2008) | 0.014 (1.04) | -0.047 (-2.79)*** |

Similar results with year dummies.

DiD Estimates (t-stats in parentheses)

| | (1) | (2) |
| --- | --- | --- |
| Dependent variable | Inpatient rate | ln((CLABSI+1)/1,000 discharges) |
| Reporting dummy | -0.181 (-4.09)*** | -0.141 (-3.52)*** |
| beds/100 | 0.239 (16.7)*** | 0.121 (10.9)*** |
| Teaching hospital | 0.227 (4.35)*** | 0.146 (3.27)*** |
| Rural hospital | -0.286 (7.16)*** | -0.125 (2.36)** |
| For-profit hospital | 0.238 (3.69)*** | 0.209 (3.62)*** |
| Year and state fixed effects, constant | Yes | Yes |

Note: Reporting dummy = 1 if state = PA and year ≥ 2004; no. of PA hospitals = 151

DiD by Hospital Type
A. Dependent Variable: CLABSI inpatient rate

| | Rural; Non-teaching (mostly small) | Urban; Small Non-teaching | Urban; Large Non-teaching | Teaching (large, mostly urban) |
| --- | --- | --- | --- | --- |
| Reporting dummy | -0.084 [-1.68]* | -0.022 [-0.24] | -0.319 [-3.25]*** | -0.289 [-2.94]*** |
| No. of PA hospitals | 38 | 37 | 33 | 43 |

Gaming: proxy vs. reported rates (% change, 2005-2007)

| | No. of hospitals | Inpatient rate | Reported rate | t-test, diff in mean log ratios |
| --- | --- | --- | --- | --- |
| All hospitals | 151 | -17% | -40% | 3.76*** |
| Teaching (all large) | 43 | -20% | -41% | 3.11*** |
| Non-Teaching | 108 | -14% | -38% | 2.40** |
| Urban large | 33 | -19% | -32% | 1.00 |
| Urban small | 37 | -12% | -45% | 2.30** |
| Rural | 38 | -1% | -35% | 0.87 |

Lower correlation (inpatient vs. reported infections)
[Figure: two scatter plots of PHC4 (reported) infections against inpatient infections, with regression lines and outliers marked. Year 2005: correlation = 0.77, regression coefficient = 0.848***. Year 2007: correlation = 0.67, regression coefficient = 0.570***.]

Threshold Effect(s)?
Predict:
  – High rate → stronger incentives; lower marginal cost
  – Low rate → weaker benefit; higher marginal cost
  → threshold rate for impact of reporting on rates
Practical and theoretical issues:
• Graphical assessment
• Plausible threshold: top third based on infection rates
• Control for regression to threshold

Trends of Mean Inpatient Rate by Cohort, Pennsylvania 1998~2008
[Figure: mean CLABSI rate against event time (-4 to 4) for the bottom, middle, and top thirds of hospitals. Panel A: Pre-reporting (1998-2003). Panel B: Public reporting (2004-2008).]

Threshold Effect; Pennsylvania Hospitals
Dependent variable: de-thresholded rate_{t+2} - de-thresholded rate_t

| | [1] | [2] | [3] |
| --- | --- | --- | --- |
| Type of CLABSI rate | Inpatient | Reported | Reported |
| De-thresholded reported rate | -0.291 [7.96]** | -0.420 [9.53]*** | -0.556 [5.10]*** |
| Reporting dummy | -0.082 [2.57]** | -0.008 [0.24] | 0.403 [1.98]** |
| Report * middle third | 0.007 [0.14] | -0.220 [3.59]*** | -0.607 [2.64]*** |
| Report * top third | -0.172 [2.36]** | -0.703 [9.67]*** | -0.764 [3.53]*** |
| Report * de-thresholded rate | | | 0.415 [2.00]** |
| Report * middle * de-thresholded rate | | | -0.376 [1.25] |
| Report * top * de-thresholded rate | | | -0.898 [4.20]*** |
| Other controls | Yes | Yes | Yes |

Partial Scatter Plot, PA 2004~2008
[Figure: partial residuals against (reported rate_t - threshold rate_t), with a linear fit for each third. Bottom third: slope = 0.415** (t=2.37). Middle third: slope = 0.040 (t=0.22). Top third: slope = -0.483*** (t=9.34).]

Conclusions; Future Research
• Public reporting → lower “actual” CLABSI rates
  – ~20% decline (relative to controls) during public reporting period
  – But *only* 20% (much more is achievable)
• Threshold Effect
  – Hospitals with highest third of reported rates → lower inpatient rates
  – Smaller effect for bottom 2/3rds
• Reported rates: time-inconsistent reporting
  – reported rate drop >> inpatient rate drop → can’t measure impact of reporting using only reported rates
• Extensions:
  – Other states; other HAIs
  – Other areas of public reporting

Placeholder, extra slides follow

State of the Art Example: Stricof et al. (2011)
[Figure: Central Line Associated Bloodstream Infection (CLABSI) rates in New York State surgical intensive care units, 2007-2009, compared by participation in the Greater New York Collaborative (series: Greater NY Collaborative; Not in Collaborative). H* = significantly higher outside of collaborative.]
• No change in CLABSI rate for 10 hospitals in collaborative
• Decrease in CLABSI rate for 25 hospitals outside of collaborative (p<0.0001)

Pennsylvania Infection Reporting
• Early mandate for HAI reporting
  – Nov. 2003: Law adopted
  – 2004: Private reporting to Pennsylvania Health Care Cost Containment Council (PHC4), not publicly released.
  – 2005-2007: HAI rates reported on PHC4’s website. Rate = Infections/1,000 discharges
  – 2008~: Infection reporting through NHSN; annual reports by PaDoH. Different reporting methodology.

Summary Statistics

| | Pre-reporting 1998~2003: Control states | Pre-reporting 1998~2003: PA | Reporting 2004~2008: Control states | Reporting 2004~2008: PA |
| --- | --- | --- | --- | --- |
| Hospitals | 696 | 151 | 592 | 151 |
| Sample size | 1,435 | 904 | 981 | 754 |
| Mean (median) beds | 204 (171) | 222 (182) | 202 (166) | 228 (179) |
| % Teaching | 25.9% | 27.7% | 26.8% | 28.2% |
| % Urban | 25.1% | 26.5% | 20.0% | 26.5% |
| % For-profit | 12.1% | 7.3% | 15.1% | 11.4% |
| Mean proxy rate (s.d.) | 1.08 (1.26) | 0.95 (1.00) | 1.29 (1.73) | 0.94 (0.93) |
| Median proxy rate | 0.78 | 0.70 | 0.97 | 0.75 |
| % zero proxy rate | 23.8% | 18.3% | 19.3% | 18.2% |

Structural Break in Inpatient Rates

| | [1] Pennsylvania | [2] Control states | [3] All |
| --- | --- | --- | --- |
| Year | 0.045 (4.65)*** | 0.040 (3.86)*** | 0.041 (5.54)*** |
| Post2004 | 0.543 (4.20)*** | 0.228 (1.45) | 0.226 (1.68)* |
| Post2004*Year | -0.087 (-4.77)*** | -0.039 (-1.95)* | -0.038 (-2.08)*** |
| PA*Post2004 | | | 0.189 (1.11) |
| PA*Post2004*Year | | | -0.046 (-2.12)** |
| Post2004*Hospital characteristics | Yes | Yes | No |
| Hospital characteristics, constant | Yes | Yes | Yes |
| State fixed effects | No | Yes | Yes |
| F-test for joint significance: terms tested | Post2004, Post2004*Year, Post2004*Hospital characteristics | Post2004, Post2004*Year, Post2004*Hospital characteristics | PA*Post2004, PA*Post2004*Year |
| F-test for joint significance: result | F = 6.10, p = 0.0000 | F = 1.33, p = 0.2407 | F = 8.28, p = 0.0003 |
| Sample size | 1,582 | 2,321 | 3,903 |
| Adjusted R2 | 0.462 | 0.4351 | 0.4419 |
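
Illustrative sketch of the DiD setup
The DiD slides define a reporting dummy equal to 1 if state = PA and year ≥ 2004, and use ln((CLABSI+1)/1,000 discharges) as one dependent variable. The Python sketch below shows how such a dummy and a basic DiD coefficient could be computed. It is a simplified illustration, not the authors' code: the function names are hypothetical, the data are synthetic and noise-free, the log rate is read here as the log of (infections + 1) per 1,000 discharges, and the hospital controls, state and year fixed effects, outlier exclusion, and hospital-clustered standard errors listed in the slides are all omitted.

```python
import numpy as np


def log_rate(infections, discharges):
    """Log of (infections + 1) per 1,000 discharges (assumed reading of the slide)."""
    infections = np.asarray(infections, dtype=float)
    discharges = np.asarray(discharges, dtype=float)
    return np.log((infections + 1.0) / (discharges / 1000.0))


def reporting_dummy(state, year, treated_state="PA", first_year=2004):
    """1.0 for treated-state observations in the reporting period, else 0.0."""
    state = np.asarray(state)
    year = np.asarray(year)
    return ((state == treated_state) & (year >= first_year)).astype(float)


def did_estimate(y, state, year, treated_state="PA", first_year=2004):
    """OLS of y on a constant, a treated-group dummy, a post-period dummy,
    and the reporting dummy; returns the reporting-dummy coefficient."""
    y = np.asarray(y, dtype=float)
    treated = (np.asarray(state) == treated_state).astype(float)
    post = (np.asarray(year) >= first_year).astype(float)
    report = reporting_dummy(state, year, treated_state, first_year)
    X = np.column_stack([np.ones_like(y), treated, post, report])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return float(beta[3])


# Synthetic, noise-free data (not from the study): a built-in effect of -0.2
# on treated-state observations in the reporting period.
state = np.array(["PA"] * 4 + ["CA"] * 4)
year = np.array([2002, 2003, 2005, 2006] * 2)
treated = (state == "PA").astype(float)
post = (year >= 2004).astype(float)
y = 1.0 + 0.1 * treated + 0.3 * post - 0.2 * treated * post

effect = did_estimate(y, state, year)
print(round(effect, 6))
```

Because the synthetic outcome has no noise, the estimate recovers the built-in effect up to floating-point error. With real hospital data, the regression would also need the controls and clustered standard errors described in the slides.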