A stereo video imaging system for surface particle tracking

advertisement

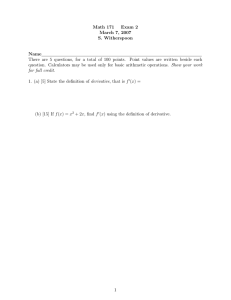

Klaus Hoyer¹ and Marshall P. Tulin²
Ocean Engineering Lab, University of California, Santa Barbara

A two-camera stereoscopic Particle Tracking Velocimetry (PTV) system has been developed for the quantitative measurement of Lagrangian surface velocities in water waves. Floating particles are imaged with this system and processed off-line by computer using appropriate algorithms. The system has been designed for and installed in a large (180 L, 14 W, 8 D) wind wave tank in the Ocean Engineering Laboratory, UCSB. The procedures involve i) calibration, ii) stereoscopic matching, iii) tracking in image space, and iv) transformation of the matched and tracked particle positions into physical space. The system and the procedures are detailed below, and an example of surface motion in wind waves is shown.

Introduction

Surface particle tracking allows quantitative measurement of the positions of individual particles over time. The observed particles are buoyant and are assumed to follow the local surface flow field, with the topological constraint that they remain on the water surface. The system consists of a pair of synchronized cameras, a computer with frame grabber, a three-dimensional calibration grid, and the buoyant tracer particles to be recorded.

Experimental setup and procedure

The experimental procedure requires two cameras, which are positioned on tripods directly on opposite sides of the wave tank, looking perpendicular to the wave propagation direction from an elevation of approximately 30° (see Figure 1). The tracer particles and the calibration grid targets were painted with fluorescent paint and illuminated by UV-intensive black light lamps. This ensured a very high signal-to-noise ratio in the particle images.

Calibration

With the cameras in place (they must not be moved again), a single frame is taken of a regular calibration grid comprising 72 targets at known positions.
The three-dimensional calibration target grid is positioned in the region to be calibrated (see Figure 1). The outer extent of the grid should cover the entire region of interest, so that the geometric analysis of the particle images is obtained by interpolation rather than extrapolation. An example of this calibration image is depicted in Figure 2. After the image of the calibration targets has been acquired, the frame is removed from the tank, taking care not to move the cameras in any way.

¹ kvg5@cox.net  ² mpt6@cox.net

Figure 1: Experimental setup for the calibration of the surface particle tracking system. The cameras (a) are positioned on opposite sides of the wave tank, facing each other; the overhead light (b) provides the black light illumination of the floating calibration frame (c) with mounted calibration targets coated with fluorescent paint (d), shown magnified in (e).

The calibration targets have to be at precisely known locations with respect to each other, but need not have an absolutely known location with respect to the cameras. Once the calibration images are taken, a zero reference is assigned to one of the grid points. The optical calibration of the system is necessary to map the particle images, or tracks, of the flow field from pixel space to physical 3D space. Taking a single shot of a three-dimensional grid of known x, y and z coordinates allows a multivariate regression that is nonlinear in the pixel coordinates (constant, linear, interaction and quadratic terms) to be performed for each physical coordinate as a function of the measured pixel coordinates of both cameras. The system of equations is over-determined, since four pixel coordinates (two per camera) are available to find three physical coordinates in space. The fitted parameters of the mapping function later allow the physical coordinates to be calculated from the pixel coordinates of the tracked particles.
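A minimal sketch of the calibration regression in Python with NumPy, assuming the 13-term basis (constant, four linear, four interaction and four quadratic terms) that the paper arrives at below for f1, f2 and f3. The function names `design_matrix`, `fit_calibration` and `apply_calibration` are hypothetical, and solving the fit by ordinary least squares with `numpy.linalg.lstsq` is an assumption; the paper does not name a solver. Because the model is linear in the coefficients, one least-squares solve fits all three physical coordinates at once.

```python
import numpy as np

def design_matrix(x1, y1, x2, y2):
    """Rows of the 13-term basis in the order a0..a12 (same for b and c):
    1, x1, y1, x2, y2, x1*y1, x2*y2, x1*x2, y1*y2, x1^2, x2^2, y1^2, y2^2."""
    x1, y1, x2, y2 = map(np.asarray, (x1, y1, x2, y2))
    return np.column_stack([
        np.ones_like(x1, dtype=float),
        x1, y1, x2, y2,
        x1 * y1, x2 * y2, x1 * x2, y1 * y2,
        x1**2, x2**2, y1**2, y2**2,
    ])

def fit_calibration(pix, phys):
    """pix: (n, 4) pixel coordinates (x1, y1, x2, y2) of the grid targets.
    phys: (n, 3) known physical coordinates (x, y, z).
    Returns a (13, 3) array whose columns are the a, b and c coefficients."""
    pix = np.asarray(pix, dtype=float)
    phys = np.asarray(phys, dtype=float)
    A = design_matrix(*pix.T)
    coeffs, *_ = np.linalg.lstsq(A, phys, rcond=None)  # over-determined fit
    return coeffs

def apply_calibration(coeffs, pix):
    """Map (n, 4) pixel coordinates to (n, 3) physical coordinates."""
    pix = np.asarray(pix, dtype=float)
    return design_matrix(*pix.T) @ coeffs
```

The same `apply_calibration` call maps matched pixel tracks to physical tracks, and `phys - apply_calibration(coeffs, pix)` evaluated on the grid points gives residuals of the kind plotted in Figure 6.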
The image coordinates of a calibration target, as long as the target appears larger than a single pixel, can be estimated with sub-pixel accuracy by calculating the center of light intensity over the particle image. The first step in the image analysis involves identifying the grid points and determining their respective centers. The magnified inlay in the bottom left corner of Figure 2 shows the digitized image of some of the 72 calibration targets. The calibration targets are approximately 2×2 pixels in size, and the different gray values correspond to individual pixels of the camera CCD chip. This magnification also shows the result of that first step: the centers of the grid points are marked with small dotted squares. Since the physical coordinates of the calibration grid points are known, a multivariate regression allows the functions f1, f2 and f3 to be fitted with respect to the image coordinates x1, y1 (camera 1) and x2, y2 (camera 2).

Figure 2: Image of a 64 inch (l) by 24 inch (w) by 12 inch (h) calibration grid with the 72 calibration targets, as seen by the camera. The individual particles were identified by a threshold level, and each particle center is calculated by integration over the surroundings of the peak values. Wave tank coordinates are as indicated. The grid spacing is 16 inches in ∆x, 2 inches in ∆y and 12 inches in ∆z; the lowest points are 1 inch above the water surface, which is defined as y = 0.

Optimizing the residual errors of the curve-fitting function resulted in a form for xph, yph and zph containing constant, linear, interaction and quadratic terms.
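The threshold-and-centroid step can be sketched as follows, assuming a grayscale image held in a NumPy array. The peak test (a pixel above `threshold` that is the first maximum of its window in raster order), the window half-width `half`, and subtracting the threshold before weighting are illustrative choices, not details taken from the paper; `subpixel_centers` is a hypothetical name. Centers are returned as (row, col), i.e. (y, x).

```python
import numpy as np

def subpixel_centers(img, threshold, half=2):
    """Intensity-weighted (row, col) centers of bright spots.

    A pixel starts a spot if it exceeds `threshold` and is the first
    maximum of its (2*half+1)^2 window; the center is the centroid of the
    above-threshold intensity inside that window (center of light)."""
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    size = 2 * half + 1
    centers = []
    for r in range(half, h - half):
        for c in range(half, w - half):
            if img[r, c] <= threshold:
                continue
            win = img[r - half:r + half + 1, c - half:c + half + 1]
            # np.argmax returns the first maximum in raster order, so a
            # flat-topped spot (e.g. a 2x2 target) is detected only once.
            if np.argmax(win) != half * size + half:
                continue
            wgt = np.clip(win - threshold, 0.0, None)
            rows, cols = np.mgrid[r - half:r + half + 1, c - half:c + half + 1]
            total = wgt.sum()  # > 0 because img[r, c] > threshold
            centers.append((float((wgt * rows).sum() / total),
                            float((wgt * cols).sum() / total)))
    return centers
```

For a uniform 2×2 target the centroid falls exactly between the four pixels, which is where the sub-pixel accuracy comes from.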
The optimal curve-fitting functions were thus of the form

$$x_{ph} = f_1(x_1, y_1, x_2, y_2) = a_0 + a_1 x_1 + a_2 y_1 + a_3 x_2 + a_4 y_2 + a_5 x_1 y_1 + a_6 x_2 y_2 + a_7 x_1 x_2 + a_8 y_1 y_2 + a_9 x_1^2 + a_{10} x_2^2 + a_{11} y_1^2 + a_{12} y_2^2$$

$$y_{ph} = f_2(x_1, y_1, x_2, y_2) = b_0 + b_1 x_1 + b_2 y_1 + b_3 x_2 + b_4 y_2 + b_5 x_1 y_1 + b_6 x_2 y_2 + b_7 x_1 x_2 + b_8 y_1 y_2 + b_9 x_1^2 + b_{10} x_2^2 + b_{11} y_1^2 + b_{12} y_2^2$$

$$z_{ph} = f_3(x_1, y_1, x_2, y_2) = c_0 + c_1 x_1 + c_2 y_1 + c_3 x_2 + c_4 y_2 + c_5 x_1 y_1 + c_6 x_2 y_2 + c_7 x_1 x_2 + c_8 y_1 y_2 + c_9 x_1^2 + c_{10} x_2^2 + c_{11} y_1^2 + c_{12} y_2^2$$

The result of this curve fit is a set of coefficients a0 to a12, b0 to b12 and c0 to c12. Inserting the measured image coordinates of the calibration grid back into these fitting functions gives an estimate of the quality of the curve fit. This result is presented in Figure 6, which shows the absolute errors (xph − xfit, yph − yfit and zph − zfit) of the curve-fitting procedure.

2.2 Finding the stereoscopic correspondences

When more than one particle is imaged, determining the stereoscopic correspondences (pairing) of the particle images in the two camera frames requires additional algorithms and processing. The optical arrangement for a two-camera perspective is shown in Figure 3. The epipole e12 is the point of intersection of the image plane of camera 1 with the line joining the optical centers O1 and O2. The epipolar plane Π12 is defined by the location of the point pi and the two epipoles e12 and e21.

Figure 3: Optical arrangement of a two-camera system displaying the epipolar geometry used to find the stereoscopic correspondences. The epipolar line l12 in camera 1 is defined as the line between the particle image pi1 and the image of the origin O2 of camera 2. The camera origins O1, O2 and the particle location pi form the epipolar plane Π12. The epipolar line l12 is the intersection between the epipolar plane and the image plane.
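With F defined by the epipolar equation of this section, p_i1^T F p_i2 = 0, the line in image 2 on which the partner of an image-1 point must lie is F^T p_i1. The sketch below (hypothetical function names) computes that line and picks the image-2 candidate closest to it; the nearest-to-line rule and the pixel tolerance `tol` are assumptions for illustration, not the paper's exact pairing criterion.

```python
import numpy as np

def epipolar_line_in_2(F, p1):
    """Line (a, b, c) with a*x + b*y + c = 0 in image 2 for image-1 point
    p1 = (x1, y1), using the convention p1^T F p2 = 0."""
    return F.T @ np.array([p1[0], p1[1], 1.0])

def point_line_distance(line, p):
    """Perpendicular distance (pixels) from point p = (x, y) to the line."""
    a, b, c = line
    return abs(a * p[0] + b * p[1] + c) / np.hypot(a, b)

def best_partner(F, p1, candidates2, tol):
    """Index of the image-2 candidate closest to the epipolar line of p1,
    or None if there are no candidates or none lies within `tol` pixels."""
    if len(candidates2) == 0:
        return None
    line = epipolar_line_in_2(F, p1)
    d = [point_line_distance(line, q) for q in candidates2]
    i = int(np.argmin(d))
    return i if d[i] <= tol else None
```

For example, the matrix F = [[0, 0, 0], [0, 0, −1], [0, 1, 0]] describes an idealized pair in which the constraint reduces to y2 = y1, so the epipolar lines are horizontal.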
The epipolar line is the projection, in one camera, of the line connecting the optical center and the image point of the other camera. A point in one image thus generates a line in the other image on which its corresponding point must lie. Two image points pi1 = [x1, y1, 1] and pi2 = [x2, y2, 1] in camera images 1 and 2, respectively, that form a conjugate pair of the ith particle must satisfy the epipolar equation

$$p_{i1}^{T} F \, p_{i2} = 0 .$$

This can be rewritten as a linear equation whose unknowns are the entries of the matrix F,

$$U_i^{T} F = 0 ,$$

where i is the particle index and

$$U_i = [x_{i1} x_{i2},\ x_{i1} y_{i2},\ x_{i1},\ y_{i1} x_{i2},\ y_{i1} y_{i2},\ y_{i1},\ x_{i2},\ y_{i2},\ 1]^{T}, \qquad F = [F_{11}, F_{12}, F_{13}, F_{21}, F_{22}, F_{23}, F_{31}, F_{32}, F_{33}]^{T} .$$

If we have n matched calibration grid points, we form the n × 9 matrix Un whose rows are the U_i^T and solve the system Un F = 0, which yields an estimate of F and hence of the epipolar geometry used for stereoscopic matching. Since F is defined only up to scale, at least eight point pairs are needed; if the number of calibration targets is larger than this minimum, the linear system is solved as a least-squares minimization

$$\min \lVert U_n F \rVert \quad \text{subject to} \quad \lVert F \rVert = 1 .$$

Having solved for the matrix F, we can now apply the algorithm to find stereoscopic pairs of particles, as shown in Figure 4.

Figure 4: Actual example of the epipolar geometry displayed with the two-camera system. The cameras face each other and look at the water surface at an angle (e.g. the top left of the left image is far away and matches the bottom right (near) of the right image). The corresponding image of the particle marked with the cross-hair in the left image lies on the epipolar line shown in the right image.

2.3 Tracking and stereoscopic matching of particles

Particle tracking amounts to finding the positions of an individual particle as a function of time. For this task it is generally advantageous to have fairly short time intervals between measured particle positions, so that the particle displacement between frames is on average significantly less than the mean distance between particles.
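The constrained least-squares problem of Section 2.2, min ||Un F|| subject to ||F|| = 1, is solved exactly by the right singular vector of Un belonging to its smallest singular value. A minimal NumPy sketch follows, with hypothetical function names; it builds the rows U_i in the same order as the equation above. Two common refinements that the paper does not mention, normalizing the image coordinates before the fit and enforcing rank 2 on F afterwards, are deliberately left out.

```python
import numpy as np

def constraint_rows(p1, p2):
    """Matrix U_n for p1^T F p2 = 0, with F flattened row-wise as
    [F11, F12, F13, F21, F22, F23, F31, F32, F33].
    p1, p2: (n, 2) arrays of matched (x, y) image points."""
    x1, y1 = np.asarray(p1, dtype=float).T
    x2, y2 = np.asarray(p2, dtype=float).T
    one = np.ones_like(x1)
    return np.column_stack([x1 * x2, x1 * y2, x1,
                            y1 * x2, y1 * y2, y1,
                            x2, y2, one])

def estimate_F(p1, p2):
    """Solve min ||U_n F|| subject to ||F|| = 1 via the SVD of U_n."""
    U = constraint_rows(p1, p2)
    if U.shape[0] < 8:
        raise ValueError("need at least 8 matched point pairs")
    _, _, vt = np.linalg.svd(U)
    # Rows of vt are unit vectors; the last one belongs to the smallest
    # singular value and therefore minimizes ||U F|| on the unit sphere.
    return vt[-1].reshape(3, 3)
```

With noise-free correspondences the smallest singular value is essentially zero and every matched pair satisfies the epipolar equation to rounding error; with real calibration data it measures how well a single F explains all targets.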
In the present system, particle tracking is performed in image space. The corresponding particle in the next frame is found by trying the neighbors within a given search radius and choosing the candidate with the largest cross-correlation between boxes of a given size centered on the particle at t = ti and on the candidate particle at t = ti+1. This is done simultaneously in both camera views, and matching tracks are verified by minimizing the cumulative tracking error.

2.4 Transformation from the image space into physical 3D space

The output of the camera calibration routine is the set of fitted parameters from the nonlinear multivariate regression. Since the two-camera system is over-determined, providing four image variables to obtain three physical coordinates, the estimate of the true particle position in space is redundant. The functional relationship between the pixel coordinates of the two images and the physical coordinates in 3D space has the form presented earlier in the calibration section, where the variables xi, yi refer to pixel coordinates in the camera with index i. For a matched particle track, inserting the tracked particle positions in image coordinates into the best-fit calibration equations yields the physical x-y-z track of the given particle as a function of time. For an optical path with low distortion this fitting function should yield a very good fit. Optical distortions originate, e.g., from lenses or from viewing through the glass walls of a water tunnel. In the present system only lens distortions apply, since the particles are viewed through a single medium, namely air. Nevertheless, the perspective projection alone necessitates a higher-order fitting function. This approach needs at least 36 calibration points to determine all the unknowns. One example of a single particle track in physical space is presented in Figure 5 below.
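One frame-to-frame step of the image-space tracking described in Section 2.3 might look as follows for a single camera. The sketch assumes particle centers have already been detected in both frames as (row, col) pairs and uses zero-mean normalized cross-correlation; the paper says only "cross correlation", so the normalization, the function names and the parameters `radius` and `half` are assumptions.

```python
import numpy as np

def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equal-size boxes."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0

def box(img, p, half):
    """(2*half+1)^2 box centered on the pixel nearest to p = (row, col)."""
    r, c = int(round(p[0])), int(round(p[1]))
    return img[r - half:r + half + 1, c - half:c + half + 1]

def next_position(img0, img1, p0, candidates, radius, half):
    """Index of the candidate in frame t_{i+1} that best matches the particle
    at p0 in frame t_i, or None if no candidate lies within `radius`."""
    ref = box(img0, p0, half)
    best, best_score = None, -np.inf
    for k, q in enumerate(candidates):
        if np.hypot(q[0] - p0[0], q[1] - p0[1]) > radius:
            continue  # outside the search radius
        win = box(img1, q, half)
        if win.shape != ref.shape:
            continue  # box clipped (or emptied) by the image border
        score = ncc(ref, win)
        if score > best_score:
            best, best_score = k, score
    return best
```

Running this step in both camera views and keeping only the track pairs that stay consistent with the epipolar geometry corresponds to the verification by cumulative tracking error mentioned above.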
The system used was the two-camera system described above, where the tracking was achieved by minimizing the cumulative tracking error derived from the distance of the particle center to the epipolar line.

Figure 5: Example of a single particle track taken from a previous version of the tracking code. The particle marks the orbital path of a wind-generated wave in a wave tank. All three projections of the 3D track (top right corner) are presented. The axis units are inches.

Limitations

A two-camera stereoscopic system has the following limitation. When determining the matched stereoscopic pair in a truly 3D flow field with high particle density, images of different particles may be projected onto the same image pixels (e.g. when multiple particles lie on the line pi O2, see Figure 3). In this case a third camera usually resolves these ambiguities. In the present system, the topological constraint that the particles stay on the water surface excludes this possibility as long as the surface slopes are smaller than the camera elevation angle.

Figure 6: Absolute error of the calibration curve fit in inches for the calibration grid points, plotted for the different cross-channel planes (σx = 0.09 in, σy = 0.05 in). This translates into a relative error (based on the calibration box size) of εx < 0.2 % and εy < 0.4 %.
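The matching criterion used for the Figure 5 track, a cumulative tracking error built from the distances of particle centers to epipolar lines, might be sketched as follows. Assumptions not stated in the paper: both image tracks are sampled on the same frames, the error is the plain sum of per-frame distances measured in image 2, and F follows the convention p_i1^T F p_i2 = 0. The function names are hypothetical.

```python
import numpy as np

def cumulative_epipolar_error(F, track1, track2):
    """Sum over frames of the distance (pixels, image 2) between the
    track-2 point and the epipolar line of the track-1 point.
    track1, track2: (n_frames, 2) arrays of (x, y) on the same frames."""
    t1 = np.asarray(track1, dtype=float)
    t2 = np.asarray(track2, dtype=float)
    if t1.shape != t2.shape:
        raise ValueError("tracks must cover the same frames")
    h1 = np.column_stack([t1, np.ones(len(t1))])  # homogeneous image-1 points
    lines = h1 @ F  # row k equals (F^T p1_k)^T, a line (a, b, c) in image 2
    num = np.abs(lines[:, 0] * t2[:, 0] + lines[:, 1] * t2[:, 1] + lines[:, 2])
    return float(np.sum(num / np.hypot(lines[:, 0], lines[:, 1])))

def match_track(F, track1, candidates2):
    """Return (index, error) of the image-2 track with the smallest
    cumulative epipolar error with respect to track1."""
    errs = [cumulative_epipolar_error(F, track1, t2) for t2 in candidates2]
    k = int(np.argmin(errs))
    return k, errs[k]
```

Summing over the whole track makes the pairing robust against a single frame in which an unrelated particle happens to lie close to the epipolar line.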