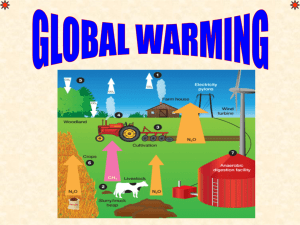

THE GLOBAL WARMING SCAM
