Discrete Event Simulation of a large OBS Network
Stein Gjessing, Arne Maus


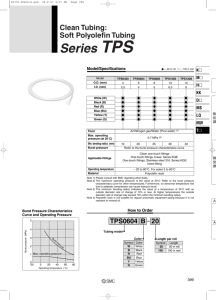

Discrete Event Simulation of a large OBS Network
by
Stein Gjessing, Simula Research Lab, Dept. of Informatics, University of Oslo
Arne Maus, Dept. of Informatics, University of Oslo
IEEE SMC 2005, 11th Oct 2005

Outline
Simulation of the COST 239 network between 11 European cities
Motivation
– Optical fiber networks and OBS (Optical Burst Switching)
– A realistic simulation model
– Self-similar traffic
Results
– Burst drop rate (and throughput) vs. load
– Congestion in the network: link utilization and burst loss
– Transferring an extra stream of small bursts for 'free'
– Throughput and drop rate as a function of the number of channels
Conclusions and further work

The COST 239 network between 11 European cities
Nodes: 0 Copenhagen, 1 London, 2 Amsterdam, 3 Berlin, 4 Brussels, 5 Luxembourg, 6 Prague, 7 Paris, 8 Zurich, 9 Vienna, 10 Milano
[Figure: map of the 11 nodes and their links. Each link is labeled with the latency between routers in milliseconds, ranging from 1.5 to 9.]

Optical fiber networks and OBS (Optical Burst Switching)
[Figure: timeline showing a control packet, followed by an initial void of 200 µs, followed by the data burst of approx. 500 µs.]
Optical switching & conversion
– Each switch can simultaneously transmit data on n different wavelengths/channels
– An incoming data stream can be switched to any outgoing connection by moving tiny mirrors, and be converted to any free channel on that connection, without being converted to an electrical representation of the data (wavelength conversion)
– The setup of any switching and conversion has to be done in the electrical domain
Electrical conversion of control packets
– For each data packet (a burst), a control packet is sent into the network ahead of the burst to reserve its way through the network.
– A separate optical channel is reserved for such short control packets
– These control packets are converted to electrical form in every switch, and this delays the propagation of the control packet (so that it might be overtaken by the burst)
– An updated control packet is sent out (converted to optical) to the next switch for the burst.
A burst is dropped if:
– The control packet is overtaken by the burst
– No free channel is found out of the switch (fiber delay lines, FDLs, might be used)

Optical Burst Switching
[Figure: timeline showing a control packet, followed by the CPT (void) of 200 µs, followed by the data burst of approx. 500 µs.]
Optical burst switching
– Collects many (10-100) IP packets in one 'big' burst packet
– The initial size of the void between the control packet and the burst, the Control Packet lead Time (CPT), is typically 200 µs
– The control packet delay in a switch is typically 10 µs, i.e. the control packet is overtaken by the burst after 20 switches
– JET scheduling: the resources are scheduled just when needed
OBS is an alternative to
– Optical line switching: fast, but too low utilization
– Optical IP packet switching: too slow because of optical/electrical and electrical/optical conversion of data in all switches, and only moderate utilization because of the 'large' optical switching setup time

The simulation model and parameters
[Figure: the COST 239 network map, with link latencies in milliseconds.]
Written in J-sim
Routing by fixed routing tables (shortest path routing)
Each node is:
– A switch for through traffic
– An ingress (receiving) node for bursts
– An egress (sending) node of bursts
Each connection is bidirectional
The traffic generated is all-send-to-all, and self-similar (next slide)
The ingress load factor:
– The relation between the on and off periods of the IP-packet generating sources (varied: 0.1-0.5)
– Same load factor for all egress (sending) nodes in one simulation
Each fiber has
– n channels, each with 1 Gbit/sec

The traffic generator and self-similar traffic
IP packets are generated by N different sources (N = 5 to 35 times M), each with a Pareto distribution that gives self-similar traffic ('same' variance on large and small scales).
M different OBS buffers (M is the number of different destinations)
A buffer is sent into the OBS network when it is full or when a timer expires

Congestion in the network: bursts are lost at moderate ingress load factors. Why?
[Figure: burst drop rate (0 to 0.12) vs. ingress load (0.0 to 0.5).]

Congestion on link 5-6: Luxembourg - Prague
[Figure: offered load on link 5-6 as % of capacity (0 to 160) vs. ingress load (0.0 to 0.5).]

Burst drop rate and link utilization
[Figure: link utilization and burst loss on link 5-6 (0 to 1) vs. ingress load (0.00 to 0.50).]
Note: There is still unused capacity on the link when the drop rate increases to unacceptable levels

Horizon scheduling
[Figure: channel timeline showing a control packet, the SAT void of 200 µs, the data burst of approx. 500 µs, and the Horizon voids. No burst can be scheduled in the SAT void (too small).]
Any free (outgoing) channel has an infinite void (the Horizon)
If one burst is scheduled on a channel, no other burst can be scheduled on that channel before most of the burst has been transmitted (because 500 > 200)
The SAT void (≤ 200 µs) cannot be used for transmitting bursts
Any packet with sum length ≤ 200 µs scheduled in the SAT or Horizon voids cannot get in the way of scheduling and sending a burst
Small packet, sum length < 200 µs:
[Figure: small-packet timeline showing a control packet, SAT = 50 µs, then data < 150 µs.]

Transferring an extra stream of small bursts
In a congested network with a bottleneck such as link 5-6, one would usually introduce extra capacity in the net
However, we wanted to study a congested network
We introduced a single data stream of small packets (2000 bytes) going from London to Vienna over the 5-6 link (Luxembourg-Prague)
Four classes of priority:
1. Low: CPT = 50 µs
2. Low+FDL: same CPT as Low, plus use of Fiber Delay Lines
3. Same: CPT = 200 µs (same CPT as regular bursts)
4. QoS: CPT = 200 µs + 500 µs (the 500 µs being the transmission time for regular bursts)
1 and 2 will never hinder any regular burst, because their total length is less than the SAT void
3 might conflict with the scheduling of bursts
4 will always have priority over any ordinary burst (hence they might stop 'many' bursts)
1 and 2 might transmit extra data over a congested connection for 'free' (they cannot hinder a single regular burst)

Total traffic on link 5-6 when sending additional small packets, as a function of the ingress load
[Figure: traffic rate (base case = 1; 0.96 to 1.12) vs. ingress load (0.10 to 0.35), with curves for Low-FDL, Low, Same and QoS.]

The drop rate on link 5-6 for the small additional data packets
[Figure: packet drop rate (0.00 to 0.45) vs. ingress load (0.0 to 0.4), with curves for Low, Same, Low FDL and QoS.]

Varying the number of channels per connection: network throughput as a function of c, the number of channels, and the ingress load
[Figure: traffic throughput rate (0.80 to 1.00) vs. ingress load (0.00 to 0.60), with curves for c = 35, 20, 15, 10 and 5.]
Note: Each channel operates at 1 GHz and the generated traffic is proportional to c

Conclusions and further work
Our results confirm many other investigations in OBS
Demonstrated a new way of transferring an additional low priority data stream over a highly congested OBS switched network
Made a realistic simulator for any large OBS network
– Self-similar instead of a negative exponential traffic arrival pattern
– Mixed size bursts (generated by the timeout)
Further studies:
– The optimal burst size
– Instead of discarding bursts that cannot be scheduled further on in a switch, convert them to the electrical domain and store them for later transmission (might be better than retransmission from the egress node or from the traffic source)
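
The timing figures quoted on the Optical Burst Switching, Horizon scheduling and small-burst slides can be checked with a few lines of arithmetic. The sketch below is only an illustration, not the simulator's code: the function and constant names are hypothetical, and the rule "lead time plus transmission time must fit inside the 200 µs void" is one reading of the phrase "sum length ≤ 200 µs" on the slides. The numeric values (200 µs CPT, 10 µs per-switch delay, 2000-byte packets, 1 Gbit/s channels) are taken from the slides.

```python
# Illustrative timing arithmetic; all names here are hypothetical.
# Only the numeric values are taken from the slides.

REGULAR_CPT_US = 200.0    # control packet lead time (CPT) for regular bursts
SWITCH_DELAY_US = 10.0    # control packet delay in each switch
VOID_US = 200.0           # void in front of a regular burst
CHANNEL_GBIT_S = 1.0      # capacity of one channel
SMALL_PACKET_BYTES = 2000


def max_switches(cpt_us: float, delay_us: float) -> int:
    """Number of switches after which the lead time is used up."""
    return int(cpt_us // delay_us)


def transmission_time_us(size_bytes: int, gbit_per_s: float) -> float:
    """Time to put size_bytes on a channel, in microseconds.

    1 Gbit/s corresponds to 1000 bits per microsecond.
    """
    return size_bytes * 8 / (gbit_per_s * 1000.0)


def fits_in_void(cpt_us: float, data_us: float, void_us: float) -> bool:
    """Assumed rule: lead time plus data must fit inside the void."""
    return cpt_us + data_us <= void_us


small_us = transmission_time_us(SMALL_PACKET_BYTES, CHANNEL_GBIT_S)

print(max_switches(REGULAR_CPT_US, SWITCH_DELAY_US))  # 20
print(small_us)                                       # 16.0
print(fits_in_void(50.0, small_us, VOID_US))          # Low class: True
print(fits_in_void(200.0, small_us, VOID_US))         # Same class: False
```

Under these assumptions the Low class (CPT = 50 µs) always fits inside the void in front of a regular burst, while the Same class (CPT = 200 µs) does not, which matches the slides' statement that classes 1 and 2 cannot hinder a regular burst whereas class 3 might.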