WebSphere Business Integration V6.0.2 Performance Tuning

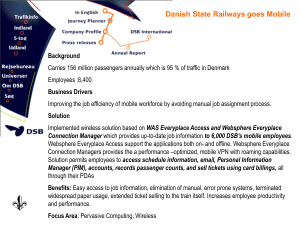
