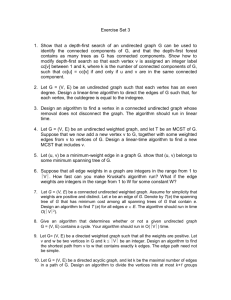

Basic Graph Definitions and Algorithms

Much of this is adapted from Mark Allen Weiss, Data Structures and Algorithm Analysis in C++, 3rd ed., Addison Wesley, 2006. Algorithm pseudocode is from Wikipedia and other sources.

A graph G = (V, E) consists of a (normally non-empty) set V of vertices (singular: vertex) and a (possibly empty) set E of edges, each of which is a (normally unordered) pair of vertices. Vertices are sometimes called nodes (as in trees, which are special cases of graphs). Edges may be ordered, in which case they are sometimes called arcs. A graph with ordered edges is said to be directed, and is called a digraph. Graphs with unordered edges are sometimes called undirected graphs, to differentiate them from digraphs. Duplicate edges usually are not allowed. An edge or arc from a vertex to itself is called a loop.

A sequence of edges from a vertex to one or more other vertices, without passing through any vertex twice, and back to the original vertex is called a cycle, and a graph with no cycles is said to be acyclic. If an edge exists between two vertices, we say the vertices are adjacent, and that the vertices are neighbors.

The size of a graph is the number of edges, |E|. The order of a graph is the number of vertices, |V|.

Edges may be weighted, and a graph with weighted edges is said to be a weighted graph (or weighted digraph). The weight of an edge is sometimes called its cost.

In an undirected graph, the degree of a vertex is the number of edges incident to the vertex. In a digraph, the indegree of a vertex v is the number of edges (u, v), and the outdegree of v is the number of edges (v, u). In a weighted graph, multiple edges (of different weights) from a vertex u to a vertex v are often allowed.

A path in a graph is a sequence of vertices v_1, v_2, v_3, ..., v_N such that (v_i, v_{i+1}) ∈ E for 1 ≤ i < N. The length of such a path is the number of edges in the path, N - 1.
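The definitions of order, size, indegree, outdegree, and path can be checked on a small example. The following is a minimal sketch, assuming a digraph stored as a Python set of vertices and a set of ordered pairs; the vertex names and the helper functions (indegree, outdegree, is_path, path_length) are illustrative only and do not come from the source text.

```python
# Hypothetical example digraph: V is the vertex set, E the set of ordered edges (u, v).
V = {"a", "b", "c", "d"}
E = {("a", "b"), ("a", "c"), ("b", "c"), ("c", "d")}

def indegree(v, edges):
    """Number of edges (u, v) that end at v."""
    return sum(1 for (_, head) in edges if head == v)

def outdegree(v, edges):
    """Number of edges (v, u) that start at v."""
    return sum(1 for (tail, _) in edges if tail == v)

def is_path(vertices, edges):
    """True if every consecutive pair (v_i, v_{i+1}) is an edge."""
    return all((u, v) in edges for u, v in zip(vertices, vertices[1:]))

def path_length(vertices):
    """Length of a path is its number of edges, N - 1."""
    return len(vertices) - 1

order = len(V)  # number of vertices, |V|
size = len(E)   # number of edges, |E|
```

Here the sequence a, b, c, d is a path of length 3, while a, d is not a path because (a, d) is not an edge.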
A simple path is a path in which all vertices are distinct, except that the first and last may be the same. A cycle in an undirected graph is a path of length at least 1 in which v_1 = v_N. This cycle is a simple cycle if the path is itself simple. A cycle in an undirected graph must have all edges distinct; e.g., a path u, v, u in an undirected graph should not be considered a cycle because the edge (u, v) and the edge (v, u) are the same edge. In a directed graph, these edges are distinct, so the path u, v, u is a cycle. A hop is the traversal of an edge in a path.

A directed graph is acyclic if it has no cycles, and a directed acyclic graph is sometimes referred to by its abbreviation DAG.

An undirected graph is connected if there is a path from every vertex to every other vertex. A directed graph with this property is called strongly connected. If a directed graph is not strongly connected, but the equivalent undirected graph (the same graph with edge directions ignored) would be connected, we say that the digraph is weakly connected. A complete graph is a graph in which there is an edge between every pair of distinct vertices.

The two most common methods of representing graphs are adjacency matrices and adjacency lists.

An adjacency matrix is a two-dimensional array whose row and column indices are the vertex numbers. If there is an edge from u to v, we set a[u][v] to true, otherwise false (or to 1 or 0). If the graph is weighted, we may set a[u][v] to the weight of the edge instead of true or 1. Since the space requirement for adjacency matrix representation is Θ(|V|^2), adjacency matrices are most often used when the graph is dense, i.e., |E| = Θ(|V|^2). Often, this is not the case. For example, in a map of a city laid out in a regular grid (think most of San Francisco), each intersection connects to about four streets, so if each two-way street counts as two directed edges, the number of edges (street connections between intersections) is about four times the number of vertices (intersections), i.e., |E| ≈ 4|V|. For large |V|, this is much less than |V|^2.
If you had 1000 intersections, then you would need an array of 1,000,000 elements to hold approximately 4000 edges. The vast majority of elements in the adjacency matrix would be 0. In this case, the graph is said to be sparse, and the matrix representation of it is a sparse matrix.

A better way to represent such a graph is with an adjacency list. Keep the vertices in a linked list or vector (or sometimes an array if the number of vertices is known in advance), and for each vertex, keep a list of its adjacent vertices attached to the vertex itself. The space requirement for this is O(|E| + |V|), which is linear in the size of the graph.

A greedy algorithm is an algorithm which solves a problem in stages by doing what appears to be the best thing at each stage. For example, to give change from $1 in U.S. currency, most people count out the change from largest value coin to smallest value coin: get the number of quarters needed, then dimes, then nickels, then pennies. This results in giving change in the fewest coins possible. Greedy algorithms don't always work optimally. For example, if we had a U.S. 12¢ coin, and we were returning 15¢ change, the greedy method would give four coins (one 12¢ coin and three pennies), not two coins (a dime and a nickel). Still, greedy algorithms are often pretty close to optimal. Many graph algorithms are greedy.

In an unweighted graph, the path length (or unweighted path length) of a path v_1, v_2, ..., v_N is the number of edges in the path, or N - 1. In a weighted graph, if the cost of an edge (v_i, v_j) is C_{i,j}, then the cost of a path v_1, v_2, ..., v_N is $\sum_{i=1}^{N-1} C_{i,i+1}$. This is referred to as the weighted path length. In a weighted graph, the shortest path from v_1 to v_N is a path with the minimum weighted path length from v_1 to v_N. Note that the shortest path may go through more vertices than a longer path. In a weighted graph, the edge weights determine the length of a path, not the number of hops.
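The adjacency list representation and the weighted path length can be sketched together. This is only an illustration under stated assumptions: the graph below is a made-up weighted digraph, stored as a Python dict that maps each vertex to a dict of its neighbors and edge costs, and the function names weighted_path_length and hops are hypothetical.

```python
# Hypothetical weighted digraph as an adjacency list:
# each vertex maps to {neighbor: cost of the edge to that neighbor}.
graph = {
    "A": {"B": 1, "D": 10},
    "B": {"C": 2},
    "C": {"D": 3},
    "D": {},
}

def weighted_path_length(graph, path):
    """Sum of the edge costs C_{i,i+1} over consecutive vertices of path."""
    return sum(graph[u][v] for u, v in zip(path, path[1:]))

def hops(path):
    """Unweighted path length: the number of edges, N - 1."""
    return len(path) - 1

direct = ["A", "D"]            # 1 hop, cost 10
detour = ["A", "B", "C", "D"]  # 3 hops, cost 1 + 2 + 3 = 6

# Storage is one entry per vertex plus one entry per edge: O(|V| + |E|).
stored_edges = sum(len(neighbors) for neighbors in graph.values())
```

The detour uses more hops than the direct edge but has the smaller weighted path length, which is the situation described in the last two sentences above.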
Graphs are used to represent data from a large variety of applications. For example, a graph could represent a system of airports, with the airports as vertices and the costs of airline tickets between the airports as weighted edges. Or the graph could represent a communications network, with the network nodes as vertices and weighted edges representing communications costs in either time or money. In both instances, finding the weighted shortest path from one vertex to another gives the least expensive way of travelling or transmitting data between them.

Finding the shortest path in a graph G from a vertex s to a vertex t involves using a greedy algorithm. Normally the shortest path is weighted, but an unweighted problem is easily converted to a weighted one by assigning every edge the weight 1.

In the unweighted case, the strategy for finding a shortest path is a breadth-first search. We process the graph starting at the start vertex and proceeding in layers: first all vertices closest to the start (reachable by one hop), then the vertices two hops away, then three hops, then four, etc., until all the vertices are processed. To avoid treating the start vertex as being two hops away (one hop out and one hop back), we assign it a "distance" from itself of zero.

Dijkstra's algorithm

Algorithm

Let the node at which we are starting be called the initial node. Let the distance of node Y be the distance from the initial node to Y. Dijkstra's algorithm will assign some initial distance values and will try to improve them step by step.

1. Assign to every node a distance value. Set it to zero for our initial node and to infinity for all other nodes.
2. Mark all nodes as unvisited. Set the initial node as current.
3. For the current node, consider all its unvisited neighbors and calculate their distance (from the initial node) through the current node. For example, if the current node (A) has a distance of 6, and the edge connecting it with another node (B) has weight 2, the distance to B through A will be 6 + 2 = 8. If this distance is less than the previously recorded distance (infinity in the beginning, zero for the initial node), overwrite the distance.
4. When we are done considering all neighbors of the current node, mark it as visited. A visited node will not be checked ever again; its distance recorded now is final and minimal (assuming no edge has a negative weight).
5. Set the unvisited node with the smallest distance (from the initial node) as the next "current node" and continue from step 3.

Description

Suppose you want to find the shortest path between two intersections on a map, a starting point and a destination. To accomplish this, you could highlight the streets (tracing the streets with a marker) in a certain order, until you have a route highlighted from the starting point to the destination. The order is conceptually simple: at each iteration, create a set of intersections consisting of every unmarked intersection that is directly connected to a marked intersection; this will be your set of considered intersections. From that set of considered intersections, find the intersection closest to the starting point (this is the "greedy" part, as described above), highlight it, mark the street leading to that intersection, and draw an arrow with the direction; then repeat. In each stage mark just one new intersection. When you get to the destination, follow the arrows backwards. There will be only one path back against the arrows, the shortest one.

Pseudocode

In the following algorithm, the code u := vertex in Q with smallest dist[] searches for the vertex u in the vertex set Q that has the least dist[u] value. That vertex is removed from the set Q and returned to the user. dist_between(u, v) calculates the length between the two neighbor nodes u and v. The variable alt on line 13 is the length of the path from the root node to the neighbor node v if it were to go through u. If this path is shorter than the current shortest path recorded for v, that current path is replaced with this alt path.
The previous array is populated with a pointer to the "next-hop" node: for each vertex v, previous[v] is the next node on the shortest route from v back to the source.

     1  function Dijkstra(Graph, source):
     2      for each vertex v in Graph:               // Initializations
     3          dist[v] := infinity                   // Unknown distance function from source to v
     4          previous[v] := undefined              // Previous node in optimal path from source
     5      dist[source] := 0                         // Distance from source to source
     6      Q := the set of all nodes in Graph        // All nodes in the graph are unoptimized, thus are in Q
     7      while Q is not empty:                     // The main loop
     8          u := vertex in Q with smallest dist[] // This is "extract minimum" below
     9          if dist[u] = infinity:
    10              break                             // All remaining vertices are inaccessible from source
    11          remove u from Q
    12          for each neighbor v of u:             // Where v has not yet been removed from Q
    13              alt := dist[u] + dist_between(u, v)
    14              if alt < dist[v]:                 // Relax (u,v,a); this is "decrease key" below
    15                  dist[v] := alt
    16                  previous[v] := u
    17      return dist[]

If we are only interested in a shortest path between vertices source and target, we can terminate the search at line 11 if u = target. Now we can read the shortest path from source to target by iteration:

    S := empty sequence
    u := target
    while previous[u] is defined:
        insert u at the beginning of S
        u := previous[u]

Now sequence S is the list of vertices constituting one of the shortest paths from source to target, or the empty sequence if no path exists. (The source itself is not inserted into S, because previous[source] is undefined.)

A more general problem would be to find all the shortest paths between source and target (there might be several different ones of the same length). Then instead of storing only a single node in each entry of previous[] we would store all nodes satisfying the relaxation condition. For example, if both r and source connect to target and both of them lie on different shortest paths through target (because the edge cost is the same in both cases), then we would add both r and source to previous[target].
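The pseudocode above can be turned into a short runnable sketch. The version below assumes the graph is a Python dict mapping each vertex to a dict of neighbors and non-negative edge weights (so graph[u][v] plays the role of dist_between(u, v)); the example graph and the function names dijkstra and read_path are made up for illustration. As a small departure from the path-reading pseudocode, read_path also places the source at the front of the result when a path exists.

```python
import math

def dijkstra(graph, source):
    """Follow the pseudocode: Q is a plain set, minimum found by linear search."""
    dist = {v: math.inf for v in graph}
    previous = {v: None for v in graph}      # None stands for "undefined"
    dist[source] = 0
    q = set(graph)
    while q:
        u = min(q, key=lambda v: dist[v])    # "extract minimum"
        if dist[u] == math.inf:
            break                            # the rest of Q is unreachable
        q.remove(u)
        for v, weight in graph[u].items():
            if v not in q:
                continue                     # v was already finalized
            alt = dist[u] + weight
            if alt < dist[v]:                # relax the edge (u, v)
                dist[v] = alt
                previous[v] = u
    return dist, previous

def read_path(previous, source, target):
    """Walk previous[] back from target; empty list if target is unreachable."""
    path = []
    u = target
    while previous[u] is not None:
        path.insert(0, u)
        u = previous[u]
    if u == source:
        path.insert(0, source)               # assumption: include the source too
    return path

# Hypothetical example graph; vertex "x" cannot be reached from "s".
graph = {
    "s": {"a": 7, "b": 2},
    "a": {"c": 1},
    "b": {"a": 3, "c": 8},
    "c": {},
    "x": {},
}
dist, previous = dijkstra(graph, "s")
route = read_path(previous, "s", "c")
```

In this example the cheapest route from s to c goes through b and a (cost 2 + 3 + 1 = 6) even though the direct edges s to a and b to c use fewer hops.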
When the algorithm completes, the previous[] data structure will actually describe a graph that is a subgraph of the original graph with some edges removed. Its key property will be that if the algorithm was run with some starting node, then every path from that node to any other node in the new graph will be a shortest path between those nodes in the original graph, and all paths of that length from the original graph will be present in the new graph. Then to actually find all these shortest paths between two given nodes we would use a path-finding algorithm on the new graph, such as depth-first search.

Running time

An upper bound on the running time of Dijkstra's algorithm on a graph with edges E and vertices V can be expressed as a function of |E| and |V| using Big-O notation.

For any implementation of set Q the running time is O(|E| · dk_Q + |V| · em_Q), where dk_Q and em_Q are the times needed to perform the decrease key and extract minimum operations in set Q, respectively.

The simplest implementation of Dijkstra's algorithm stores the vertices of set Q in an ordinary linked list or array, and extract minimum from Q is simply a linear search through all vertices in Q. In this case, the running time is O(|V|^2 + |E|) = O(|V|^2).

For sparse graphs, that is, graphs with far fewer than |V|^2 edges, Dijkstra's algorithm can be implemented more efficiently by storing the graph in the form of adjacency lists and using a binary heap, pairing heap, or Fibonacci heap as a priority queue to implement extracting the minimum efficiently. With a binary heap, the algorithm requires O((|E| + |V|) log |V|) time (which is dominated by O(|E| log |V|), assuming the graph is connected), and the Fibonacci heap improves this to O(|E| + |V| log |V|).

A spanning tree T in a graph G is a tree comprising all the vertices in G and some (or possibly all) of the edges in G. A spanning tree exists in G if and only if G is connected.
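The statement that a spanning tree exists exactly when the graph is connected can be illustrated with a small sketch. It assumes an undirected graph stored as a dict of neighbor lists (each edge listed in both directions) and uses a simple stack-based traversal; the function name spanning_tree and both example graphs are hypothetical.

```python
def spanning_tree(graph, root):
    """Return the edges of a spanning tree, or None if the graph is not connected."""
    visited = {root}
    tree_edges = []
    stack = [root]
    while stack:
        u = stack.pop()
        for v in graph[u]:
            if v not in visited:
                visited.add(v)
                tree_edges.append((u, v))  # the edge that first reaches v
                stack.append(v)
    if visited != set(graph):
        return None                        # some vertex was never reached
    return tree_edges

# Hypothetical undirected graphs, each edge listed from both endpoints.
connected = {1: [2, 3], 2: [1, 3], 3: [1, 2, 4], 4: [3]}
disconnected = {1: [2], 2: [1], 3: []}

tree = spanning_tree(connected, 1)
no_tree = spanning_tree(disconnected, 1)
```

For the connected example the result has |V| - 1 = 3 edges; for the disconnected one, vertex 3 is never reached, so no spanning tree is returned.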
A minimum spanning tree in a weighted graph is a spanning tree in which the sum of the weights of the edges in the spanning tree is the minimum possible for the graph. There could be more than one possible spanning tree, or minimum spanning tree, in the graph. The size of a minimum spanning tree (its number of edges) is always |V| - 1. Like all trees, spanning trees are acyclic. In an unweighted graph, a minimum spanning tree can be made by assigning all the edges the weight of 1.

Prim's algorithm

Pseudocode: min-heap

Initialization

Inputs: a graph, a function returning edge weights (weight-function), and an initial vertex.

Initially, all vertices are placed in the 'not yet seen' set, the initial vertex is set up to be the first one added to the tree, and all vertices are placed in a min-heap to allow for removal of the vertex with the minimum distance.

    for each vertex in graph
        set min_distance of vertex to ∞
        set parent of vertex to null
        set minimum_adjacency_list of vertex to empty list
        set is_in_Q of vertex to true
    set min_distance of initial vertex to zero
    add to minimum-heap Q all vertices in graph, keyed by min_distance

Algorithm

In terms of the usual description of the algorithm:

    nearest vertex is Q[0], now latest_addition
    fringe is v in Q where distance of v < ∞ after nearest vertex is removed
    not seen is v in Q where distance of v = ∞ after nearest vertex is removed

The while loop will fail when remove minimum returns null. The adjacency list is set up to allow a directed graph to be returned.
    // time complexity: V for the loop, log(V) for the remove function
    while latest_addition = remove minimum in Q
        set is_in_Q of latest_addition to false
        if parent of latest_addition is not null
            add latest_addition to (minimum_adjacency_list of (parent of latest_addition))
            add (parent of latest_addition) to (minimum_adjacency_list of latest_addition)

        // time complexity: E/V, the average number of adjacent vertices
        for each adjacent of latest_addition
            if (is_in_Q of adjacent) and (weight-function(latest_addition, adjacent) < min_distance of adjacent)
                set parent of adjacent to latest_addition
                set min_distance of adjacent to weight-function(latest_addition, adjacent)

                // time complexity: log(V), the height of the heap
                update adjacent in Q, order by min_distance

Proof of correctness

Let P be a connected, weighted graph. At every iteration of Prim's algorithm, an edge must be found that connects a vertex in a subgraph to a vertex outside the subgraph. Since P is connected, there will always be a path to every vertex. The output Y of Prim's algorithm is a tree, because each edge and vertex added to Y is connected to the tree built so far.

Let Y1 be a minimum spanning tree of P. If Y1 = Y then Y is a minimum spanning tree. Otherwise, let e be the first edge added during the construction of Y that is not in Y1, and V be the set of vertices connected by the edges added before e. Then one endpoint of e is in V and the other is not. Since Y1 is a spanning tree of P, there is a path in Y1 joining the two endpoints. As one travels along the path, one must encounter an edge f joining a vertex in V to one that is not in V. Now, at the iteration when e was added to Y, f could also have been added, and it would have been added instead of e if its weight were less than that of e. Since f was not added, we conclude that w(f) ≥ w(e). Let Y2 be the graph obtained by removing f from Y1 and adding e.
It is easy to show that Y2 is connected, has the same number of edges as Y1, and has a total edge weight no larger than that of Y1; therefore it is also a minimum spanning tree of P, and it contains e and all the edges added before it during the construction of Y. Repeat the steps above and we will eventually obtain a minimum spanning tree of P that is identical to Y. This shows Y is a minimum spanning tree.
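As a complement to the pseudocode and proof, here is a minimal runnable sketch of Prim's algorithm. It is not a line-by-line translation: Python's heapq module has no "update key" operation, so instead of updating a vertex in Q this version pushes a new heap entry for each candidate edge and skips entries whose vertex is already in the tree. The graph format (dict of neighbor-to-weight dicts, each undirected edge listed in both directions), the example graph, and the function name prim are assumptions made for illustration.

```python
import heapq

def prim(graph, start):
    """Return (total_weight, tree_edges) for a connected, weighted, undirected graph."""
    in_tree = set()
    tree_edges = []
    total = 0
    # Heap entries are (edge weight, parent, vertex); the start vertex has no parent.
    heap = [(0, None, start)]
    while heap:
        weight, parent, v = heapq.heappop(heap)
        if v in in_tree:
            continue                       # stale entry: v was added via a cheaper edge
        in_tree.add(v)
        if parent is not None:
            tree_edges.append((parent, v, weight))
            total += weight
        for adjacent, w in graph[v].items():
            if adjacent not in in_tree:
                heapq.heappush(heap, (w, v, adjacent))
    return total, tree_edges

# Hypothetical undirected graph; each edge appears under both endpoints.
graph = {
    "A": {"B": 1, "C": 4},
    "B": {"A": 1, "C": 2, "D": 5},
    "C": {"A": 4, "B": 2, "D": 3},
    "D": {"B": 5, "C": 3},
}
total, edges = prim(graph, "A")
```

For this graph the tree consists of the edges A-B, B-C, and C-D, with total weight 6 and |V| - 1 = 3 edges. Because stale entries stay in the heap until popped, the heap can hold up to one entry per edge direction rather than one per vertex.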