Cover Page 1

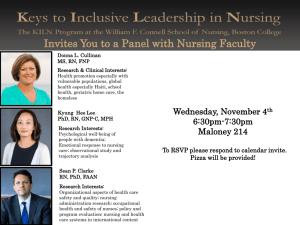
