Chapter 17: Analyzing and Boosting Performance

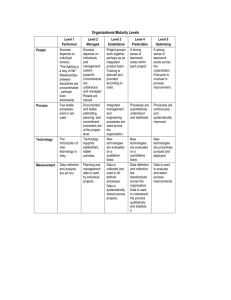


4620-1 ch17.f.qc 10/28/99 12:19 PM Page 541

In This Chapter
■ Learning why you should monitor, boost, and forecast Windows 2000 Server performance
■ Defining performance analysis
■ Learning about and distinguishing between quantitative and qualitative tools
■ Learning the conceptual steps in performance analysis
■ Learning about troubleshooting via performance analysis
■ Knowing the four most important areas to monitor in order to boost performance
■ Reasons for declines in performance

Analyzing and boosting performance is a very important part of a Windows 2000 Server professional’s job. You are responsible for getting the most from your implementation of Windows 2000 Server. Installing, managing, and using Windows 2000 Server is a big investment on your part in both time and money. By analyzing and boosting performance, you can increase the return on that investment. This chapter will not only define performance analysis from both quantitative and qualitative viewpoints, but will also set the foundation for the chapters that follow in Part VI, “Optimizing Windows 2000 Server.”

This chapter is for the MBA in all of us. While MBAs spend their days applying linear programming to business scenarios and mastering the inner workings of their Hewlett-Packard (HP) 12C calculators, Windows 2000 Server engineers can learn a lot from the basics of quantitative analysis used by MBAs. You can apply the quantitative or scientific approach to Windows 2000 Server performance analysis and add real value to your network and its operations. To do so, consider mastering System Performance Monitor, Network Monitor, and Task Manager. Why? Smart practitioners know that you get out of Windows 2000 Server what you put into it. If all you do is install it and answer the questions posed by the Windows 2000 Server setup dialog boxes, your signature will be on public display when others follow and look closely at your network.
Simple is as simple does. A network set up in a simple fashion will basically perform, but doom lurks. Once your network experiences significant growth, either in user count or in activity levels, system design and implementation issues often return to wreak havoc. Thus the need to study, master, and implement the performance-boosting secrets discussed in this and the next few chapters of this part of the book. These secrets include third-party products that add to, and help you exceed, the capabilities of Microsoft’s built-in performance analysis tools.

Performance Analysis

We wouldn’t embark on a sailing trip without a plan, a map, and a compass in our stash of necessities. We like to know where we are headed, how long it will take, and often, whether we can get there sooner. Managing Windows 2000 Server environments is no different. Is our Windows 2000 Server performance headed in the right direction? Going south on us? Remaining stable or veering sideways? These are the types of questions we ask ourselves in the middle of the night, workaholics that we are in this exciting and demanding field of Windows 2000 Server network administration and engineering. To answer these questions, we tinker, try again, and tinker more, hoping to boost Windows 2000 Server performance and predict where our environment is headed.

Chant the following mantra: It all starts with the data. While this is a popular refrain among database administrators (DBAs), it applies just as well when analyzing and boosting the performance of Windows 2000 Server-based networks. Data is at the center of our efforts to analyze Windows 2000 Server, so we place great importance on the type, quantity, and quality of the data we can obtain from Windows 2000 Server. Fortunately, the computer readily generates this data for us.
Thank God we don’t have to record it by hand like the door-to-door U.S. government census interviewers of days gone by. Data can be collected as a one-time snapshot of system health, or it can be collected systematically over time. As quantitative analysts, we seek out large, clean data sets that provide enough values for us to perform meaningful analysis and draw meaningful conclusions. Whichever data analysis tool you use to monitor and manage your Windows 2000 Server network, you should strive to collect data consistently, frequently, and routinely. We love large data sets as the foundation of our analysis; statistically, we refer to a large data set as a large sample size.

Built-in performance analysis tools

In Windows 2000 Server, we typically use six tools to collect and analyze our data: System Performance Monitor, Network Monitor, Task Manager, Windows 2000 System Information, Event Viewer, and Device Manager. System Performance Monitor enables us to perform sophisticated analysis via charts, logs, reports, and alerts over time (see Figure 17-1). System Performance Monitor is discussed at length in Chapter 18. Network Monitor is truly a gift in Windows 2000 Server, enabling basic network packet analysis without having to spend $5,000 or more on a hardware-based sniffer (see Figure 17-2). Network Monitor is discussed at length in Chapter 19. Task Manager (see Figure 17-3), Windows 2000 System Information (see Figure 17-4), and Event Viewer (see Figure 17-5) are discussed extensively in Chapter 20. You can also read more about System Performance Monitor, Network Monitor, and Task Manager in the “Are You Being ‘Outperformed?’” section of this chapter. Device Manager, a welcome addition to the Windows 2000 family, was discussed in Chapter 9 (see Figure 17-6).
Figure 17-1: Default view of System Performance Monitor showing Object: Processor, Counter: % Processor Time

Figure 17-2: Default view of Network Monitor (Capture View window)

Figure 17-3: Default view of Task Manager (Performance tab sheet)

Figure 17-4: Default view of Windows 2000 System Information (System Summary)

Figure 17-5: Default view of Event Viewer (System Log)

Figure 17-6: Default view of Device Manager

Each of these tools, except for Device Manager (already discussed in Chapter 9), will be discussed in the following chapters. It is critical to note that some tools, such as System Performance Monitor, work best when analyzing data over time to establish trends. Other tools, such as Network Monitor, typically provide a snapshot of system activity at a point in time. Keep this distinction in mind as you read through this and the next few chapters.

More quantitative tools

Additional quantitative tools to consider using, beyond those provided with Windows 2000 Server, include approaches borrowed from the MBA and quantitative analysis community: manually recording measurements, observing alert conditions in logs, conducting user surveys, and basic trend line analysis. Of these, keep in mind that user surveys directly involve your users and are, therefore, perhaps some of the most valuable tools at your disposal.

■ Manually record measurements and data points of interest while monitoring Windows 2000 Server.
■ Observe event log error conditions that trigger alerts (an approach widely used in managing SQL Server).
■ Survey users about system performance via e-mail or a paper-based survey.
These survey results, when ranked on a scale (say, 1 being low performance and 5 being high performance), can be charted and analyzed. It’s really fun to deliver the same survey to your users again several months later and compare the results to your original survey. Using feedback from your users is one of the best performance-monitoring approaches.
■ Carry out trend line analysis, frequency distribution, central tendency, regression, correlation analysis, probability distribution, seasonality, and indexing.

These approaches are discussed over the next several pages. Trend line analysis, while extremely powerful, is also the most difficult of these four analysis methods, so I discuss it at length. But don’t be frightened by this preamble. The trend line analysis that follows may be tried at home, and I didn’t hire professionals to complete the stunts.

Trend line analysis

Also known as time series analysis, this approach finds the trend line that best fits a plotted data set. In terms of managing Windows 2000 Server environments, that means using the chart view in System Performance Monitor to observe data points being charted via a line graph, and then placing a ruler on your screen to create the trend line. The edge of the ruler becomes the trend line, positioned so that it runs through the middle of the plotted points with roughly equal scatter above and below it. Not surprisingly, this is a simple and effective tool for forecasting system performance, and it is generally known as the freehand method. The mathematicians reading this book know that this handheld-ruler method is a gross oversimplification, and that a trend line is best calculated via the least-squares method.
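For readers who want to go beyond the ruler, the least-squares fit can be sketched in a few lines of Python. This is a minimal illustration, not a feature of System Performance Monitor: it assumes equally spaced samples (for example, weekly averages of Processor:% Processor Time that you have read off your logs), and the sample values and the helper names `least_squares_trend` and `forecast` are hypothetical.

```python
def least_squares_trend(values):
    """Fit y = a + b*t to equally spaced samples taken at t = 0, 1, 2, ...

    Returns (a, b): the intercept and the slope (change per sampling interval).
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples to fit a line")
    t_mean = (n - 1) / 2          # mean of 0, 1, ..., n-1
    y_mean = sum(values) / n
    # Least-squares slope = sum((t - t_mean)(y - y_mean)) / sum((t - t_mean)^2)
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(values))
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    slope = sxy / sxx
    intercept = y_mean - slope * t_mean   # the fitted line passes through the means
    return intercept, slope


def forecast(intercept, slope, t):
    """Read the fitted trend line at sample index t (may lie beyond the data)."""
    return intercept + slope * t


# Hypothetical weekly averages of Processor:% Processor Time (weeks 0 through 5)
weekly_cpu = [31.0, 33.5, 34.0, 36.5, 38.0, 39.5]
a, b = least_squares_trend(weekly_cpu)
print(f"trend: {b:+.2f} points per week")
print(f"forecast for week 10: {forecast(a, b, 10):.1f}%")
```

The slope is the trend per sampling interval, and reading the line past the last sample is the forecast. The further out you project, the less weight the forecast deserves.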
See the quantitative methods book of your choice for a more in-depth discussion.

Frequency distribution

Imagine you are a network analyst in a large organization deploying Windows 2000 servers. You want to know how much RAM is installed in the client machines. Table 17-1, based on data collected by Microsoft Systems Management Server, was created to show a frequency distribution.

Table 17-1 Frequency Distribution Example
RAM     Number of Clients (Frequency)
12MB    150
16MB    200
24MB    100
32MB    50

Simple enough. You have now created the frequency distribution to help plan your technology requirements. Clearly, most of the machines (350 of 500) have less than 24MB of RAM and may need a memory upgrade in the near future.

Central tendency: the mean, the mode, and the median

Assume you are in a large organization with WAN traffic management problems. The organization is growing rapidly. You are curious about the nature of the network traffic. Are just a few users creating most of the network traffic? Are all users placing a similar amount of traffic on the wire? Analyzing the mean, mode, and median will help you answer these questions.

The mean is simply the mathematical average, calculated as the sum of the values divided by the number of observations (ten apples divided by five schoolchildren = two apples per child on average). The mode is the most frequently occurring value in a data set (following our apple example, if four schoolchildren each took one apple and the fifth schoolchild took six apples, the mode would be one). The median is determined by placing a data set in order, from the largest number to the smallest, and then choosing the value that occurs in the middle of the set:

■ One schoolchild ate one apple
■ One schoolchild ate two apples
■ One schoolchild ate three apples
■ One schoolchild ate four apples

The median would be between two and three apples.
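The three measures for the apple examples can be checked with Python’s standard `statistics` module. This is a minimal sketch; the helper name `central_tendency` is hypothetical, and `statistics.multimode` requires Python 3.8 or later.

```python
import statistics


def central_tendency(values):
    """Return (mean, median, modes) for a list of numbers."""
    return (
        statistics.mean(values),
        statistics.median(values),      # averages the two middle values when the count is even
        statistics.multimode(values),   # every most-frequent value, as a list (Python 3.8+)
    )


# Mean and mode examples: four children took one apple each, the fifth took six
print(central_tendency([1, 1, 1, 1, 6]))
# Median example: the children ate 1, 2, 3, and 4 apples (even count, no single middle value)
print(central_tendency([1, 2, 3, 4]))
```

The same helper applied to per-user packet counts, such as those in Table 17-3, shows at a glance whether a few heavy users are pulling the mean far above the median.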
The actual median would be 2.5 (when there is no single middle number, you calculate the arithmetic mean of the two middle values).

How does this apply to Windows 2000 Server performance analysis? Suppose your network traffic pattern has the characteristics shown in Table 17-2 for 11 users.

Table 17-2 Sample Network Traffic for 11 Users
Central Tendency Measurement    Number of Data Packets
Mean                            1,500
Median                          200
Mode                            225

From this information, we can reliably state that one or more heavy users generate most of the network traffic, as measured by data packets on the wire. How? When your median and mode measurements are much smaller than your mean, you can infer that a few users (in this case) are supplying an inordinate number of data packets. In the preceding information, you can easily see that the mean (or average) is considerably larger than the median or mode. The median, being the midpoint of the data series when ordered from smallest to largest value, suggests that most values cluster at the low end while a few very large values pull the mean upward. The mode, in this case, confirms this observation: the most frequently occurring value (225) is much smaller than the average value (1,500). Finally, looking at the data set used to create the example (see Table 17-3) proves the argument that a couple of users (Dan and Ellen) are creating most of the network traffic: 15,000 of the 16,500 packets.

Table 17-3 User Network Traffic
User              Network Packets Sent
Adam              225
Betty             100
Carol             50
Dan               10,000
Ellen             5,000
Frank             250
Gary              200
Harry             225
Irene             175
Jackie            150
Kia               125
Total (11 users)  16,500

Regression

This quantitative analysis method, which seeks to define the relationship between a dependent variable and an independent variable, can be used to boost the performance of Windows 2000 Server. Assume you use Windows 2000 Server as an Internet server.
Suppose you are interested in seeing how Web traffic affects the processor utilization rate on the server. Perhaps you believe that Web activity (“hits”) on your site drives the processor utilization rate up. You can find out by charting the dependent variable Processor:% Processor Time (an object:counter in System Performance Monitor that is described in Chapter 18) against the independent variable Web Service:Files/sec (see Figure 17-7).

Figure 17-7: Dependent (Processor:% Processor Time) and independent (Web Service:Files/sec) variables

In this example, more hits on your Web site result in a higher processor utilization rate.

Correlation analysis

In the regression example just given, a positive correlation was discovered between Processor:% Processor Time and Web Service:Files/sec. A negative correlation between variables in Windows 2000 Server might exist when two object:counters seem to move in opposite directions when set side by side on a System Performance Monitor chart. At a basic level, the object:counters Memory:Available Bytes and Memory:Pages/sec would have a negative correlation. That is, the fewer available bytes of memory you have, the more paging activity will occur. These topics are discussed in greater detail in the System Performance Monitor chapter.

A negative correlation isn’t necessarily bad. Do not be lulled into the fallacy that a positive correlation is good and a negative correlation is bad. Correlations merely define a relationship, whether that relationship is positive or negative. Another way to think about positive and negative correlations is to view a positive correlation as cyclical and a negative correlation as counter-cyclical.
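To put a number on “move together” versus “move in opposite directions,” you can compute the Pearson correlation coefficient r for two counters sampled over the same intervals. The following is a minimal Python sketch under stated assumptions: the paired readings are hypothetical values you would copy from a System Performance Monitor log, and the helper name `pearson_r` is illustrative, not part of any Windows tool.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient r for two equal-length series.

    r is near +1 when the series rise and fall together, near -1 when one
    rises as the other falls, and near 0 when there is no linear relationship.
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two series of equal length with at least two samples")
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / (sx * sy)


# Hypothetical paired samples, one pair per logging interval
available_mb = [120, 96, 80, 64, 40, 24]   # Memory:Available Bytes, in MB
pages_per_sec = [2, 4, 7, 9, 15, 22]        # Memory:Pages/sec
files_per_sec = [5, 10, 15, 20, 25, 30]     # Web Service:Files/sec
cpu_percent = [12, 20, 27, 35, 41, 50]      # Processor:% Processor Time

print(f"Available Bytes vs. Pages/sec: r = {pearson_r(available_mb, pages_per_sec):.2f}")
print(f"Files/sec vs. % Processor Time: r = {pearson_r(files_per_sec, cpu_percent):.2f}")
```

With these made-up readings, the memory pair comes out negative and the Web pair comes out strongly positive, mirroring the two relationships described above. As the text cautions, the sign alone says nothing about whether the relationship is good or bad.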
Probability distribution

Do you work for a manager who worships the bell curve, always wondering where things fit in? Then use this quantitative analysis approach, not only to better understand the performance of your Windows 2000 Server system, but also to explain technology-related events to your manager. The cool thing about the normal curve is that you can easily predict how just over two-thirds (about 68 percent) of your end users group together: those falling within one standard deviation of the mean, often called the first standard deviation. A standard deviation measures variability. But let’s speak English. The first standard deviation is a very basic measurement and represents the behavior of 68 percent of your users on a network. We might say that 68 percent of the users are proficient in mapping drives to another server. Of that 68 percent, some are more proficient than others. A measurement taken to the second standard deviation accounts for 95.4 percent of the user population. Here we’ll consider the ability to log on to the network successfully as our “task” that we believe such a large number of users could complete. However, within that population of 95.4 percent, some may log on without ever having authentication problems, while others may have to enter their name and password multiple times to log on (maybe they are poor typists!). Finally, to the third standard deviation, you can account for the behavior of 99.7 percent of the user population. For this group, it might be safe to say that 99.7 percent of the users can turn on their computers. In the underlying trend I’ve just described, you can see that as the bell curve encapsulates more and more of the user population, the activities and behaviors demonstrated by the users become less and less arduous. That makes sense.
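The 68, 95.4, and 99.7 percent figures come straight from the normal distribution: the share of values lying within k standard deviations of the mean equals erf(k/√2). The following minimal Python sketch (the helper name `share_within` is hypothetical) reproduces them with the standard `math.erf` function.

```python
import math


def share_within(k):
    """Fraction of a normal distribution lying within k standard deviations of its mean."""
    # For a normal distribution, P(|X - mean| < k * sd) = erf(k / sqrt(2))
    return math.erf(k / math.sqrt(2))


for k in (1, 2, 3):
    print(f"within {k} standard deviation(s): {share_within(k) * 100:.1f}%")
```

It prints roughly 68.3, 95.4, and 99.7 percent. Real user populations are rarely exactly normal, so treat these shares as a model to compare your measurements against, not as a measurement themselves.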
To cover the computing behavior of 99.7 percent of your users, you’re speaking about some pretty simple tasks that nearly everyone can accomplish.

The normal curve provides a different way of thinking about network performance analysis. What if you believed and measured that 68 percent of your users use three or more applications, but 99.7 percent of your users employ at least one application? If you could easily identify the 68 percent group (that is, the users within the first standard deviation), might you try to place that group in its own separate collision domain? Such is the thought and logic behind switching in network management. This quaint mumbo-jumbo really does apply.

Seasonality

Do Windows 2000 servers really have seasons? Maybe so! Doesn’t it make sense to remain sensitive to peak system usage when such usage occurs at specific times each year? Take Clark Nuber, the Seattle-based accounting firm that owns the consulting practice where I’m employed. Our network clearly experiences its heaviest loads during tax season (January to April). I can document the increased load using the different Windows 2000 Server performance tools discussed in this chapter, but more important, I can easily predict the increased load on Clark Nuber’s network each tax season. Needless to say, we “Clark Nuberites” have learned (sometimes the hard way) not to upset the network with upgrades or enhancements during tax season. On more than one occasion, the network couldn’t handle the increased load and crashed!

Create an index

Once you’ve worked extensively with Windows 2000 Server and collected large data sets, you can create meaningful measurement indexes. Here’s how. Assume you’ve tracked several sites with System Performance Monitor for several months, periodically capturing data to a log file. At this point, you would have a sufficiently large data set.
Now suppose you want a fair measure of a single user’s impact on paging activity on a typical Windows 2000 server at your sites. To determine this impact, you would create an index. Suppose you took the average Pages/sec (found under the Memory object) in System Performance Monitor and divided it by the average number of users on the system. Note that the average Pages/sec should incorporate readings from different logon periods at different sites so that you can indeed create a generic index. If the average Pages/sec value were 15.45 and you had 100 users on average, then the index calculation would be 15.45 / 100 = 0.1545. This index value of 0.1545 enables you to predict, on average, how much paging activity each user adds; a server expected to support 250 users, for example, would see roughly 250 × 0.1545 ≈ 38.6 pages/sec. In effect, an index enables you to predict the load on your system, something that might be very useful when sizing a new system for an upgrade.

Qualitative tools too!

Performance analysis is not only a quantitative exercise; it is also a qualitative endeavor. We can map and chart Windows 2000 Server performance until the cows come home, but many of us also rely on, if not favor, our intuition and other qualitative analysis approaches. Individuals equipped with strong qualitative analysis skills may not even need to know the finer points of System Performance Monitor and other Windows 2000 Server performance analysis tools. These people simply “know” when something isn’t right. Then they set out to troubleshoot the problem and fix it. This is how many CEOs run their organizations. While these leaders might not have a solid grasp of the technologies their firms use, they do know when something is out of place or not right.
Loosely defined, qualitative approaches to boost Windows 2000 Server performance include the following:

■ Experience working with Windows 2000 Server
■ Luck
■ Intuition
■ Luck
■ Good judgment
■ Luck
■ Good advice from peers
■ Luck
■ A seventh sense
■ Luck
■ Decision making under uncertainty
■ Luck
■ ESP
■ And finally... LUCK!

Those of us with long Windows 2000 Server work experience rely on our qualitative judgments on a day-to-day basis. We use quantitative approaches, such as System Performance Monitor logging, periodically or during intensive troubleshooting, but probably not in our day-to-day world. Those with strong qualitative skills often reap the rewards that accrue to the privileged in this line of work, such as higher pay and more work. Why? Because these individuals simply work more efficiently and better. Think about that the next time you team with an industry peer to troubleshoot a Windows 2000 Server problem and find yourself left behind analytically as you marvel at your companion’s superior skills.

Fear not, however, for the rest of us can be equally successful by employing Windows 2000 Server’s built-in performance analysis tools. Stated another way, when you’re short on qualitative tools, you should emphasize the quantitative tools. And of course, even our Windows 2000 Server companions with ESP can still benefit from the fundamentals of quantitative analysis. It takes both the quantitative and qualitative approaches to successfully analyze and boost the performance of your Windows 2000 Server over the long term! In other words, it takes a combination of the quantitative tools (System Performance Monitor, Network Monitor, and Task Manager) plus the qualitative approaches you have at your disposal (experience, intuition, and a seventh sense). Both the quantitative and qualitative schools should receive attention, if not equal weight.
And don’t you forget it!

Data = information

The central activity in performance analysis is capturing and analyzing Windows 2000 Server data. In effect, we turn data into information. The information is used to correct Windows 2000 Server deficiencies, eliminate system bottlenecks, proactively prevent system problems before they occur, troubleshoot problems once they do occur, and plan for system upgrades and enhancements.

Are You Being “Outperformed?”

After you have arrived on the scene as the great net god, whether as consultant or full-time employee, and fixed the obvious problems, your talents often reach a fork in the road. The first fork is that of mediocrity in network management. Many in the network engineering field are content to coast once a system is up and running. That’s truly what I’d call satisfying behavior: working only hard enough to satisfy management, bosses, clients, and end users. These technology peers are great readers of industry trade journals (on company time!). They also have shoes that are hardly worn because their feet are perched on their desktops while they read those trade journals.

The other fork network professionals take is to exceed everyone’s expectations. In the world of Windows 2000 Server, this is accomplished by mastering tools such as System Performance Monitor, Network Monitor, and Task Manager. That is when and where you can really master the management of your Windows 2000 Server networked environment. Mastery involves cultivating the ability to identify and mitigate bottlenecks, preventing poor system performance by planning for system additions, and more planning, planning, planning.
System Performance Monitor

Mastering System Performance Monitor not only enhances your professional standing, but more important, it enables you to provide your end users with a more efficient and stable network for accomplishing their work. And that is how you and your network are ultimately evaluated: by how well your end users do their jobs using computers attached to your Windows 2000 Server network. By employing the suggestions that follow, you can proactively manage your Windows 2000 Server networks and provide solutions before problems arise. In fact, it’s been said that preventing problems is the best definition of a superior systems engineer. You don’t necessarily have to provide the latest and greatest bells and whistles on your network. You do have to provide an efficient, reliable, and secure Windows 2000 Server network computing environment that users know they can trust.

Network Monitor

Mastering Network Monitor provides benefits different from those of System Performance Monitor. Network Monitor provides a snapshot view of network activity in the form of packet analysis. When working with Microsoft technical support at the senior systems engineer level (read “paid incident level”), it is not uncommon for a Microsoft support engineer to have you install Network Monitor and perform a capture. This capture file is then e-mailed to the engineer for analysis. In fact, that is exactly how I learned packet analysis. E-mailing the capture file and then discussing its contents with a Microsoft support engineer taught me packet analysis fundamentals that many published texts lack. One notable exception is Microsoft Official Curriculum course #689, “Windows NT Server 4.0 Enterprise Technologies,” which has an excellent Network Monitor section.
Because Microsoft periodically updates its course offerings, I suspect the successors to course #689 will also teach this important topic. If you have routers in your Windows 2000 Server network mix, you will be learning and using Network Monitor. And you will inevitably be e-mailing those packet captures to Microsoft for analysis. Count on it!

Task Manager

Task Manager is my buddy. With a deft right-click on the taskbar, I can quickly assess the memory and CPU conditions of my Windows 2000 Server. Task Manager can be thought of as System Performance Monitor “light.” Task Manager lives for the moment and doesn’t really offer any long-term analysis capabilities. But more on that later. First, some thoughts on the conceptual framework of performance analysis.

Conceptual Steps in Performance Analysis

A few basic steps are undertaken to analyze and boost the performance of your Windows 2000 Server:

1. Develop the model to use. In System Performance Monitor, this means picking the correct object:counters for your model, which is critical. For Network Monitor, this step might refer to the duration of your packet capture and the point on the network from which you capture packets.

2. Gather data for input into the model. This is a collection phase that involves acquiring the data. For System Performance Monitor, you log the data to a file for a reasonable amount of time. More important, this step is where we’re most concerned about the first half of GIGO (garbage in, garbage out). Poor data accumulation results in garbage in, certainly a poor foundation upon which to build the rest of your analysis. In fact, this step is analogous to my recent home-buying experience on Bainbridge Island (near Seattle). As I was writing this book, my family decided to go house-shopping. After identifying a house that met our needs, we retained a construction engineer to assess the house’s fitness.
Unfortunately, he reported the house was unacceptable because it was built on a wood foundation, the rule being that a house’s foundation affects everything from that point forward, including resale value. With respect to Windows 2000 Server, blow this step and suffer for the remainder of your analysis period.

3. Analyze the results. Now the fun begins. If Steps 1 and 2 went well, you are now ready for Step 3: analyzing the results. Success at this stage will truly enable you to boost performance and optimize your Windows 2000 Server implementation. If you struggle here, see the next section on troubleshooting.

4. Gather feedback. Are we missing the boat with our analysis? Did Steps 1, 2, and 3 lead us to optimize the system in such a way that performance was improved, not hampered? Your ability to gather and interpret feedback will make or break your ability to become a superstar system engineer.

Troubleshooting via Performance Analysis

Everything discussed so far is meaningless if the knowledge transfer between us doesn’t leave these pages. It is essential that there be a real-world outcome to the intense performance analysis discussion you and I have embarked on. Otherwise, this discussion is nothing more than a pleasant academic exercise. The outcome we’re both seeking is to apply the readily available performance analysis tools and tricks to improve your network’s performance. And that obviously includes troubleshooting. The performance analysis tools included with Windows 2000 Server are software-based and do a better job of diagnosing logical (software) problems than physical ones. Physical problems, such as a bad cable run, are better diagnosed using a handheld cable tester.

There is no magic elixir for troubleshooting. Troubleshooting ability, by most accounts, is primarily a function of on-the-job experience, including long weekends and late nights at work.
As an MCSE and MCT instructor, I’ve seen countless students struggle with the required Networking Essentials exam when their résumés are short and their tenures as Windows 2000 Server administrators are measured in months, not years. Students with significant industry experience enjoy an easier ride when taking the Networking Essentials exam, and not surprisingly, they have more sharply honed troubleshooting skills.

The performance analysis tools provided with Windows 2000 Server are valuable not only for helping you improve the performance of your network, but also for troubleshooting problems more efficiently and effectively. Be advised, however, that even the best set of tools in inexperienced or incompetent hands will usually produce an unfavorable outcome. Troubleshooting is a function not only of your Windows 2000 Server architectural experience, but also of your ability to navigate the Windows 2000 Server Registry comfortably. That’s so you can observe and capitalize on driver dependencies and start values, track the Windows 2000 Server boot process to the point of failure, and understand stop screens. It also helps if you’ve worked with Microsoft support using the debugger utilities. But more on that in Chapter 25.

Print and review the entire Windows 2000 Server Registry as soon as you install Windows 2000 Server. Learn the location of important information (for example, HKEY_LOCAL_MACHINE is much more important than HKEY_CLASSES_ROOT). By studying and learning the Registry early, you will know where to go to investigate Registry values in an emergency. Don’t forget to place this Registry printout in a notebook and update it periodically (quarterly, if you make significant changes or install lots of applications).
I highly recommend that you print to file and edit the information in a word processing application such as Microsoft Word. You will shorten the printout considerably and make it more readable. Of course, you can always keep the file as your only form of storage and skip the printer altogether. Whether you print to file or to the printer, the Registry information is extremely valuable.

Misdiagnosing a problem is as serious in the world of Windows 2000 Server as it is in the world of medicine. Although System Performance Monitor, Network Monitor, and Task Manager will not guarantee a correct diagnosis, these tools are legitimate ways to rule out false readings. Furthermore, some third-party performance analysis tools I’ll discuss in the next few chapters can supplement Windows 2000 Server’s built-in tools and dramatically improve your troubleshooting efforts.

The Four Big Areas to Monitor

Quick, what are the four resource areas you should monitor in order to boost performance in Windows 2000 Server? They are memory, processor, disk subsystem, and network subsystem.

Memory

Perhaps the simplest secret conveyed in this entire book is that adding memory will truly solve many problems in your networked environment. Analyzing memory performance is about looking beyond that easy answer. First, you probably have economic and technical constraints that prevent you from adding an unlimited amount of memory to your server. Second, simply buying your way out of a problem by purchasing and installing more memory isn’t fundamentally sound network engineering. Understanding the reasons for adding memory is what’s important.

Simply stated, we look at two forms of memory as part of our analysis: RAM and cache memory. RAM is, as we all know, volatile primary storage.
Cache memory, which also resides in RAM, is where Windows 2000 Server places the files, applications, drivers, and similar items currently being accessed by users, the operating system, and so forth. Chapter 22 discusses memory in more detail with respect to the specific memory object:counters used in System Performance Monitor.

Processor

In my experience analyzing the processor, it usually isn’t the cause of everyone’s grief. Many suffer from processor envy, which is no doubt fueled by advertisements creating demand for the latest and greatest Intel processor. As a result, network engineers and administrators on the front line are often greeted with free advice from users on upgrading the processor. However, in most small- and medium-sized organizations, processor utilization rates are well within acceptable limits. In large networked environments, a strong case can be made for faster, more powerful processors and even for implementing multiple processors. These larger enterprises will be interested in learning more about Windows 2000 Server’s multiprocessing capabilities, which use the symmetric multiprocessing (SMP) model.

Disk subsystem

Another tired solution that’s the bane of network engineers and administrators is the “just buy a faster hard disk” approach. Easier said than done. Again, economic considerations may prevent you from simply throwing money at your problems. A more intelligent approach is to analyze your disk subsystem in detail to determine exactly where the bottleneck resides. Issues to consider when analyzing the disk subsystem include the following:

■ What is your disk controller type (ranging from legacy IDE controllers to more modern Fast SCSI-2 and PCI controllers)? Disk controller technology changes rapidly, and new innovations in system buses are introduced frequently.
If you do not have a strong hardware orientation, make sure you read the hardware ads in popular technology trade journals and occasionally take your technician/hardware guru buddy to lunch.
■ Do your controllers have on-board processors (typically known as “bus master” controllers)?
■ Do your controllers cache activity directly on the controller card, rather than relying on RAM or internal cache memory on the computer to store limited amounts of data?
■ Do disk-bound applications, with their high levels of read and write requests, suggest you need to consider the fastest disk subsystem available?
■ Current disk device drivers: Are you running the latest disk subsystem drivers on your system? Although this duty is often overlooked, current drivers can go a long way toward boosting disk subsystem performance, and they are typically available for the low price of downloading them from the vendor’s Internet site.

Hardware-based RAID solutions offer significantly better performance than RAID implemented in software (the software-based RAID capabilities found in Disk Management). That’s because hardware-based RAID performs its parity calculations on the controller, independently of the host processor and operating system.

Sometimes you just have to reboot! Here’s one secret you won’t find in any Windows 2000 Server user manual. For reasons I can’t fully explain, Windows 2000 Server sometimes just freaks out and the hard disks churn excessively. When this happens, you don’t even get enough processor time to move your mouse freely. The solution? Just restart the server; the condition will often disappear upon reboot. Truth be told, this “secret” is one of the best consulting freebies I offer my clients: I often tell them to reboot and call me in the morning. It’s usually just what the doctor ordered.
I don’t know whether this applies to Microsoft products more than to other vendors’ products, but rebooting works wonders! I often say that rebooting Windows 2000 Server will solve 90 percent of your problems.

Be sure to delay rebooting your Windows 2000 Server until after work hours if possible. Users often take advantage of a reboot to call it a day and leave early, causing unexpected traffic jams in the parking lot!

Network subsystem

The network subsystem consists of internal and external network components such as the network adapter type, number of network adapter cards, cabling media, routers and switches, Windows 2000 Server services, and the types of applications used (SQL Server, Exchange, and other Microsoft BackOffice applications). And don’t forget end users. I consider end users to be a network component because their usage can affect the performance of the network.

How you configure Active Directory will also affect the performance of your network. A complex and unwieldy Active Directory structure can hinder rather than help your network. See Chapters 5 and 16 for more information on Active Directory.

In general, network bottlenecks are more difficult to detect and resolve than problems found in the three subsystems just discussed (memory, processor, and disk subsystem). In fact, all of the tools discussed in this and the next several chapters are typically used to resolve network bottlenecks. Physical tools, such as cable testers and time domain reflectometers (TDRs), are also readily employed to remedy network subsystem ailments. Detecting quasi-logical/virtual and quasi-physical problems on your network may present one of your greatest challenges as a Windows 2000 Server professional. At a small site, I once fell victim to some tomfoolery introduced on the network by a 3Com switch.
The device, used primarily as a media converter between a 100Mbps backbone run to the network adapter on the Windows 2000 Server and 10Mbps runs to the workstations, decided to both reconfigure itself and downright break one evening when Microsoft Proxy Server 2.0 was introduced. Several hours of sleuthing later, we determined that the 100Mbps downlink port had truly gone under; that is, it had lost its configuration. The solution? We quickly installed a cheap 10Mbps Ethernet concentrator (hub) to get everything running again. The hours spent fussing over the switch clearly wiped out all of the advantages associated with the 100Mbps server backbone. But that’s another topic.

Use 32-bit network adapters. Older 8-bit network adapters transfer up to 400 kilobytes per second (KBps). Newer (and now standard) 32-bit network adapters transfer up to 1.2 megabytes per second (MBps). If the network adapter card is too slow, it cannot effectively transfer information from the computer to the network and vice versa.

Consider installing multiple network adapter cards to boost throughput. A single network adapter card can be a bottleneck in the network subsystem by virtue of its primary role: taking data in 32-bit parallel form and converting it to serial form for placement on the wire (see Figure 17-8). Multiple network cards will boost network subsystem performance.

Figure 17-8: A network adapter card performing data transfer (data from the computer, data to the network)

Bind only one protocol type to each network card if possible. This enables you to perform some load balancing between network adapter cards. For example, if you have a second network cable segment for backing up the servers in your server farm to a backup server, consider binding the fast and efficient NetBEUI protocol to the network adapter cards on this segment. Because NetBEUI is not routable, this works only if no routing is involved.
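To put the 8-bit and 32-bit adapter rates quoted earlier in this section into perspective, the following Python sketch computes the best-case time to move a file at each peak rate. It is a back-of-the-envelope illustration under stated assumptions, not a measurement: it assumes the adapter’s peak rate is the only limit (ignoring protocol overhead, disk speed, and network contention), uses the binary convention of 1,024 bytes per kilobyte, and uses a hypothetical 100MB file.

```python
# Best-case transfer-time estimate using the peak adapter rates quoted in the text.
# Assumption: the adapter's peak rate is the only bottleneck. Real transfers are
# slower because of protocol overhead, disk I/O, and network contention.

KB = 1024          # bytes per kilobyte (binary convention, an assumption)
MB = 1024 * KB     # bytes per megabyte

# Peak rates quoted in the text, converted to bytes per second.
ADAPTER_RATES = {
    "8-bit adapter (400 KBps)": 400 * KB,
    "32-bit adapter (1.2 MBps)": 1.2 * MB,
}


def transfer_seconds(size_bytes: float, rate_bytes_per_sec: float) -> float:
    """Return the minimum time, in seconds, to move size_bytes at the given rate."""
    if rate_bytes_per_sec <= 0:
        raise ValueError("rate must be positive")
    return size_bytes / rate_bytes_per_sec


if __name__ == "__main__":
    file_size = 100 * MB  # hypothetical 100MB file, chosen only for illustration
    for name, rate in ADAPTER_RATES.items():
        print(f"{name}: {transfer_seconds(file_size, rate):.0f} seconds")
```

Under these assumptions, the 100MB file needs 256 seconds on the 8-bit adapter and roughly 83 seconds on the 32-bit adapter, about a threefold difference before any other bottleneck comes into play.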
Binding multiple protocols to each network adapter can degrade performance on your network, so reducing excess protocols will reduce network traffic. Some types of network traffic, such as connection requests, are sent over all bound protocols at the same time. Now that’s a traffic jam! Try to reduce the number of protocols and networking services used on your Windows 2000 Server. Small is beautiful because a smaller networking subsystem footprint means less overhead.

Use network adapters from the same manufacturer, if possible. Different manufacturers implement drivers against the lower layers of the OSI model differently. Using the same type of card from the same manufacturer results in a consistent implementation of the network subsystem component.

Networking services in Windows 2000 Server may be installed from the Add/Remove Programs applet in Control Panel. Simply click the Add/Remove Windows Components button in the Add/Remove Programs applet and complete the Windows Components Wizard, as seen in Figure 17-9, selecting the network services you want to install.

Figure 17-9: Networking Services in Windows Components Wizard

Table 17-4 lists and describes the network-related services that may be installed with Windows 2000 Server via the Networking Services dialog box.

Table 17-4: Windows 2000 Server Networking Services

Service Name | Description
COM Internet Services Proxy | Enables the Distributed Component Object Model (DCOM) to travel over HTTP via Internet Information Services (IIS).
Domain Name System (DNS) | Answers query and update requests for Domain Name System (DNS) names.
Dynamic Host Configuration Protocol (DHCP) | Dynamically assigns a temporary IP address to a network host when the host connects to the network.
Internet Authentication Service | Verifies authentication requests received via the RADIUS protocol.
QoS Admission Control Service | Lets you specify the quality of the network connection for each subnet; in other words, this is where you manage network bandwidth for QoS-compliant applications.
Simple TCP/IP Services | Supports simple network protocols, including Character Generator, Daytime, Discard, Echo, and Quote of the Day.
Site Server LDAP Services | Scans TCP/IP stacks and updates directories with current user information.
Windows Internet Name Service (WINS) | Dynamic name registration and resolution service that maps NetBIOS computer names to IP addresses. This service is offered primarily for backward compatibility with applications that need to register and resolve NetBIOS-type names.

Other networking services may also be deployed via the following selections in the Windows Components Wizard:

Other Network File and Print Services: Installs File Services for Macintosh, Print Services for Macintosh, and Print Services for Unix.
Certificate Services: Installs a certification authority (CA) to issue certificates for use with public key security applications. Note that this kind of certification relates to security, not to MCSE-style certification.
Internet Information Services (IIS): Installs IIS with its Web and FTP support, plus FrontPage, transactions, Active Server Pages (ASP), and database connection support.
Management and Monitoring: Installs Connection Management Tools Components (for example, Phone Book Service), Directory Service Migration Tool, Network Monitor Tools, and Simple Network Management Protocol (SNMP).
Message Queuing Services: Provides another form of reliable network communication, delivering messages between applications.
Microsoft Indexing Service: Microsoft Index Server, with its robust full-text searching of files.
Terminal Services and Terminal Services Licensing: Installs Terminal Server, a multisession remote host solution similar to WinFrame or pcAnywhere.

The bottom line on the network subsystem? You want to know where you stand today in terms of network performance (via System Performance Monitor using the Network Segment object and Network Monitor using its statistics pane), and to forecast accurately where you will be tomorrow.

Why Performance Declines

Several reasons exist for performance declines in Windows 2000 Server. Likely suspects include memory leaks, unresolved resource conflicts, physical wear and tear on the system, and system modifications such as installing poorly behaved applications and running poorly configured applications.

The funny thing about operating system patch kits is that problems Microsoft previously denied, such as memory leaks originating from executive services (like drivers and DLLs), are not only acknowledged but also fixed. Memory leaks, which can be detected by taking specific memory measurements over time, are typically worked around by simply rebooting the server periodically. That’s an old trick for those of us who managed NetWare servers in the early days of NetWare 2.x and 3.x, when a monthly reboot was essential to recover discontiguous (fragmented) memory.

Unresolved resource conflicts might include the dance of the fighting SCSI cards. I recently had an experience with a new workstation from a well-known hardware manufacturer whose on-board SCSI controller was fighting with an Adaptec SCSI card.
Several modifications later, the conflict appeared to be resolved (by turning off the SCSI BIOS on the Adaptec card), but I could swear the boot time remained unacceptably long, suggesting some lingering, difficult-to-detect resource conflict (and yes, likely causes such as IRQ settings had been checked and resolved).

An example of a poorly configured application might be SQL Server with too much RAM allocated to it, causing a memory shortage for Windows 2000 Server. That would likely cause Windows 2000 Server to page excessively, lowering overall system performance. Such a situation hurts not only Windows 2000 Server but also SQL Server, the very application you were trying to help with the original memory optimization. I discussed RAM issues in more detail in Chapter 10.

Fragmentation is another source of declining performance in Windows 2000 Server. All operating systems and secondary storage media are subject to fragmentation, in which a single file is stored across several noncontiguous areas of the hard disk. That adds to read and write times and to user frustration levels. Third-party products such as Executive Software’s Diskeeper provide defragmentation services that optimize the secondary storage media and thus boost performance. I discussed fragmentation in more detail in Chapter 10.

Consider running the error-checking utility, launched with the Check Now button on the Tools tab of a drive’s Properties sheet. Figure 17-10 shows the Check Disk dialog box that should appear. Error checking can automatically fix file system errors and can scan for and attempt recovery of bad sectors.

Figure 17-10: Check Disk dialog box

If you have SCSI drives, sector sparing (known as Hot Fix in NetWare) enables your system to essentially heal itself, kinda like my old VW van. That van had a way of healing itself from ailments if I just let it sit for a month or two.
Sector sparing works much faster than my mystic VW van’s healing magic: it maps out bad blocks on the secondary storage media and prevents further writes to that bad space.

When all else fails, you can improve performance by truly manually defragmenting your hard disk using a technique I employed during my early Macintosh days. Simply back up your data to tape (be sure to verify the fitness of your backup by performing a test restore), reformat your hard disk, and restore your data, which is written back to the empty disk largely unfragmented. No doubt a drastic measure, but one that enables you to start fresh!

Additional ways to improve performance after you have suffered declines include the following:
■ Keeping the Recycle Bin empty.
■ Deleting those pesky temporary files that many applications write to your secondary storage yet don’t erase.
■ Using NTFS for partitions over 400MB in size and FAT for partitions under 400MB. However, be advised that this is a white paper recommendation. Many Windows 2000 Server professionals frown upon the use of FAT partitions because NTFS file- and folder-level security isn’t available on them. Other Windows 2000 Server professionals like to install the operating system on a FAT partition and data and applications on an NTFS partition (see Chapter 3 for more discussion).

Lying with Performance Analysis

A must-read for MBA students is The Honest Truth about Lying with Statistics by Cooper B. Holmes, a primer on how statistical analysis can be manipulated to meet your needs. To make a long story short, we can apply some of the same principles contained in Holmes’ book to Windows 2000 Server performance analysis. For example, changing the vertical scale of data presented in System Performance Monitor can radically emphasize or deemphasize performance information, depending on your slant.
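To see why the vertical scale matters so much, the following Python sketch computes what fraction of a chart’s height the same peak-to-valley swing occupies under two different axis ranges. The processor samples and axis ranges are hypothetical values chosen for illustration; the function name apparent_swing is likewise illustrative and not part of any Windows tool.

```python
from typing import Sequence


def apparent_swing(samples: Sequence[float], axis_min: float, axis_max: float) -> float:
    """Fraction of the chart's vertical range covered by the samples' peak-to-valley swing."""
    if axis_max <= axis_min:
        raise ValueError("axis_max must be greater than axis_min")
    return (max(samples) - min(samples)) / (axis_max - axis_min)


if __name__ == "__main__":
    # Hypothetical % Processor Time samples, for illustration only.
    cpu = [22.0, 25.0, 21.0, 27.0, 24.0, 23.0]

    honest = apparent_swing(cpu, 0, 100)    # full 0-100 percent scale
    dramatic = apparent_swing(cpu, 20, 28)  # axis clipped tightly around the data
    print(f"0-100 scale: swing fills {honest:.0%} of the chart")
    print(f"20-28 scale: swing fills {dramatic:.0%} of the chart")
```

The same 6-point swing fills 6 percent of the chart on a 0 to 100 scale but 75 percent when the axis is clipped to 20 to 28, which is the kind of dramatic effect an exaggerated chart relies on.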
If you’re seeking a generous budget allotment to enhance your Windows 2000 Server network, perhaps scaling the processor utilization or network utilization counters in System Performance Monitor to show dramatic peaks and valleys will “sell” your business decision makers on your argument (see Figure 17-11).

Figure 17-11: An exaggerated view of processor utilization

Performance Benchmarks

Several products enable you to establish performance benchmarks when comparing several servers running Windows 2000 Server, different applications, or different services and protocols. These tools change frequently, but perhaps your best source for such benchmarking applications is www.zdnet.com (the Ziff-Davis site). This site offers Socket Test Bench (STB) for testing your WinSock-based communications, along with several other benchmarking applications, including ServerBench and Winstone.

ServerBench is a popular client/server benchmarking application that runs on many popular operating systems, including Windows 2000 Server, Novell NetWare, OS/2 Warp Server, and SCO UNIX (see Figure 17-12). ServerBench exhaustively tests the processor, disk, and network subsystems by placing different tests and load levels on the server being tested. The bottom line? Performance is measured in transactions per second for each of the measured subsystems. These transactions reflect the activity between client and server and allow for meaningful comparisons between network operating systems and different makes of computers. Programs such as ServerBench enable you to better evaluate the performance of your Windows 2000 Servers individually and against other network operating systems you might have at your site.
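Because ServerBench reports its results in transactions per second, comparing runs comes down to simple arithmetic. The following Python sketch shows that metric and one way to compare a later run against an earlier baseline and flag a decline. The transaction counts, the 600-second duration, the 10 percent tolerance, and the function names are all hypothetical illustrations; they are not ServerBench output or a ServerBench feature.

```python
def transactions_per_second(transactions: int, elapsed_seconds: float) -> float:
    """Throughput metric: completed transactions divided by elapsed time."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return transactions / elapsed_seconds


def percent_change(baseline_tps: float, current_tps: float) -> float:
    """Signed change relative to the baseline; a negative value means a decline."""
    if baseline_tps <= 0:
        raise ValueError("baseline_tps must be positive")
    return (current_tps - baseline_tps) / baseline_tps * 100.0


def is_decline(baseline_tps: float, current_tps: float, tolerance_pct: float = 10.0) -> bool:
    """True if throughput fell by more than tolerance_pct percent (hypothetical threshold)."""
    return percent_change(baseline_tps, current_tps) < -tolerance_pct


if __name__ == "__main__":
    baseline = transactions_per_second(54_000, 600)  # hypothetical earlier run
    current = transactions_per_second(45_000, 600)   # hypothetical later run
    print(f"baseline {baseline:.1f} TPS, current {current:.1f} TPS, "
          f"change {percent_change(baseline, current):+.1f}%")
    print("decline flagged:", is_decline(baseline, current))
```

With these made-up numbers, throughput drops from 90 to 75 transactions per second, a decline of about 16.7 percent, which exceeds the illustrative 10 percent tolerance. Using a tolerance rather than flagging any drop at all avoids chasing normal run-to-run variation.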
Figure 17-12: ServerBench 4.0

Be sure to perform the same ServerBench tests periodically so that you can identify any bothersome declines in system performance. Also note that searching the Internet with popular search engines such as AltaVista will help you identify performance benchmarking applications you can use on your Windows 2000 Server network.

It’s all about positive outcomes. By employing performance analysis methods and approaches in the management of Windows 2000 Server, you can see trends, observe usage patterns, detect bottlenecks, and plan for the future. You can create meaningful management reports that not only keep the appropriate decision makers informed but also identify needed equipment acquisitions and facilitate the IT budgeting process. That’s a key point! Getting budget approval is the lifeblood of any Windows 2000 Server manager. Don’t forget it!

Now, onward to System Performance Monitor!

Summary

This chapter introduced performance analysis. Specifically, it presented quantitative and qualitative methods to analyze and boost Windows 2000 Server performance. This understanding of computer network performance issues provides both the foundation for the next few chapters and the basis for your efficient and effective use of Windows 2000 Server in your organization. The following points were covered:
■ Appreciating why you would monitor and forecast Windows 2000 Server performance
■ Understanding and being able to define performance analysis
■ Being able to distinguish between quantitative and qualitative tools
■ Listing performance analysis steps
■ The four most important Windows 2000 Server areas to monitor
■ Understanding why performance declines in Windows 2000 Server