Performance Model Directed Data Sieving

for High Performance I/O

DISCL group

Yin Lu

Outline

§ Introduction

§ Performance Model Directed Data Sieving

§ Evaluation

§ Conclusion & Future work

Introduction

§ Highly data intensive I/O for large-scale scientific computing.

SciDAC climate studies visualization at ORNL

SciDAC astrophysics simulation

visualization at ORNL

Introduction

§ Data requirements of representative INCITE applications run at Argonne National Laboratory.

INCITE: Innovative and Novel Computational Impact on Theory and Experiment Program

| Project | On-Line Data | Off-Line Data |
| --- | --- | --- |
| FLASH: Buoyancy-Driven Turbulent Nuclear Burning | 75 TB | 300 TB |
| Reactor Core Hydrodynamics | 2 TB | 5 TB |
| Computational Nuclear Structure | 4 TB | 40 TB |
| Computational Protein Structure | 1 TB | 2 TB |
| Performance Evaluation and Analysis | 1 TB | 1 TB |
| Kinetics and Thermodynamics of Metal and Complex Hydride Nanoparticles | 5 TB | 100 TB |
| Climate Science | 10 TB | 345 TB |
| Parkinson's Disease | 2.5 TB | 50 TB |
| Plasma Microturbulence | 2 TB | 10 TB |
| Lattice QCD | 1 TB | 44 TB |
| Thermal Striping in Sodium Cooled Reactors | 4 TB | 8 TB |
| Gating Mechanisms of Membrane Proteins | 10 TB | 10 TB |

Introduction

§ Poor performance in dealing with a large number of small and noncontiguous data requests.

[Figure: compute nodes connected to a metadata server and three storage servers]

Introduction

§ Structured data leads naturally to noncontiguous I/O

§ Noncontiguous I/O has three forms

• Noncontiguous in memory, noncontiguous in file, or noncontiguous in both

[Figure: a large array distributed among 16 processes (P0 to P15); each square represents a subarray in the memory of a single process. A second panel shows the access pattern in the file.]
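To see why a block-distributed array leads to noncontiguous file access, the sketch below lists the byte extents one process would touch. It assumes a square array stored row-major in the file and a square process grid; the function name `subarray_extents` and its parameters are illustrative and do not come from the slides.

```python
def subarray_extents(rank, grid, n, elem_size=8):
    """Return (offset, length) byte extents of one process's block.

    Assumes an n x n array stored row-major in the file and split into
    grid x grid equal blocks, with ranks numbered row by row.
    """
    block = n // grid                    # rows (and columns) per block
    prow, pcol = divmod(rank, grid)      # block coordinates of this rank
    extents = []
    for r in range(prow * block, (prow + 1) * block):
        offset = (r * n + pcol * block) * elem_size
        extents.append((offset, block * elem_size))
    return extents


# 16 processes in a 4 x 4 grid over an 8 x 8 array of 8-byte elements
extents_p0 = subarray_extents(rank=0, grid=4, n=8)
extents_p5 = subarray_extents(rank=5, grid=4, n=8)
```

Each process ends up with one short extent per row of its block, separated by the bytes that belong to its neighbours.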

Introduction

§ ROMIO addresses noncontiguous I/O effectively on parallel file systems.

• The most popular MPI-IO implementation.

• MPI - a standardized and portable message-passing system used to program parallel computers.

• MPI-IO - a standard interface for parallel I/O.

• Layered implementation supports many storage types

– Local file systems (e.g. XFS)

– Parallel file systems (e.g. PVFS2)

– NFS, Remote I/O (RFS)

– UFS implementation works for most other file systems (e.g. GPFS and Lustre)

• Includes data sieving and two-phase optimizations.

[Figure: ROMIO layers, from top to bottom: MPI-IO Interface, Common Functionality, ADIO Interface, and the file system drivers PVFS, XFS, UFS, NFS]

Introduction

§ Data sieving combines small and noncontiguous I/O requests into a large and contiguous request to reduce the effect of the high I/O latency caused by noncontiguous access patterns.

§ Data sieving write operations
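The idea can be sketched on an in-memory file. This is a minimal illustration, not ROMIO's code: the helper names `sieved_read` and `sieved_write` are hypothetical, requests are assumed not to overlap, and each written piece is assumed to match its request length. A real implementation must also lock the region against concurrent writers during the read-modify-write, which is omitted here.

```python
import io


def _extent(requests):
    """First byte and one-past-last byte covered by the requests."""
    start = min(off for off, _ in requests)
    end = max(off + length for off, length in requests)
    return start, end


def sieved_read(f, requests):
    """Serve noncontiguous (offset, length) requests with one contiguous read."""
    start, end = _extent(requests)
    f.seek(start)
    buf = f.read(end - start)            # one large read, holes included
    return [buf[off - start: off - start + length] for off, length in requests]


def sieved_write(f, requests, pieces):
    """Read-modify-write: holes are read first so they are written back unchanged."""
    start, end = _extent(requests)
    f.seek(start)
    buf = bytearray(f.read(end - start))         # read the whole extent
    buf.extend(bytes(end - start - len(buf)))    # pad if the extent passes end of file
    for (off, length), data in zip(requests, pieces):
        buf[off - start: off - start + length] = data[:length]
    f.seek(start)
    f.write(buf)                                 # one large write


f = io.BytesIO(b"abcdefghijklmnopqrstuvwxyz")
parts = sieved_read(f, [(2, 3), (10, 2), (20, 4)])
sieved_write(f, [(2, 3), (10, 2)], [b"XYZ", b"12"])
result = f.getvalue()
```

The write path costs one extra read of the whole extent, which is why large holes make sieved writes expensive.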

Introduction

§ Benefits highly depend on specific access patterns

• Always combines all the requests to form a large and contiguous one.

• Lacks a dynamic decision based on different access patterns.

• Non-requested portions (holes) can be too large for data sieving to be beneficial.

Introduction

§ Potential problem of extensive memory requirement

• A single contiguous chunk of data, from the first to the last byte requested by the user, is read into a temporary buffer.

• Although total memory capacity in HPC systems gradually increases, the available memory capacity per core is decreasing

– Especially as HPC systems are projected to scale to a million cores or beyond
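A quick way to quantify the memory pressure is to compare the size of the sieving buffer with the bytes actually requested. The helper below is an illustrative sketch (its name and the sample requests are assumptions) and follows the single-chunk description above, with non-overlapping requests.

```python
def sieve_buffer_stats(requests):
    """Return (buffer_bytes, useful_bytes) for a list of (offset, length) requests."""
    start = min(off for off, _ in requests)
    end = max(off + length for off, length in requests)
    useful = sum(length for _, length in requests)
    return end - start, useful


# Two 4 KiB requests that lie 1 GiB apart in the file
buffer_bytes, useful_bytes = sieve_buffer_stats([(0, 4096), (1 << 30, 4096)])
```

Here the buffer spans roughly 1 GiB to deliver 8 KiB of useful data.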

Performance Model Directed Data Sieving

§ Our work

• Develop a performance model of the parallel I/O system.

• A dynamic decision component determines when to perform data sieving, on the fly, depending on access patterns.

• A request grouping component determines how data sieving is performed, based on the performance model and specific access patterns.

[Figure: PMD Data Sieving (Performance Model, Dynamic Decision, Requests Grouping, Data Access) placed in the Common Functionality layer, between the MPI-IO Interface and the ADIO Interface with its file system drivers PVFS, XFS, UFS, NFS]

Performance Model Directed Data Sieving

Basic model

§ For reading a particular block of data, the total time required is

• TRtotal (total time for reading a block) = start-up time + time for system I/O call + (request size / bandwidth for read)

§ Similarly for writing a particular block of data
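As a worked illustration of the basic model, the sketch below compares two separate reads against one sieved read that also covers the hole between them. All numeric values (64 KiB requests, 5 ms start-up time, 0.1 ms system call time, 120 MB/s bandwidth) are hypothetical and chosen only to show the trade-off.

```python
def read_time(size, startup, syscall, bandwidth):
    """Basic model: start-up time + system I/O call time + size / bandwidth."""
    return startup + syscall + size / bandwidth


def compare(hole, req=64 * 1024, startup=0.005, syscall=0.0001, bandwidth=120e6):
    """Return (separate, sieved) times in seconds for two requests around a hole."""
    separate = 2 * read_time(req, startup, syscall, bandwidth)
    sieved = read_time(2 * req + hole, startup, syscall, bandwidth)
    return separate, sieved


small = compare(hole=256 * 1024)        # small hole: one sieved read is cheaper
large = compare(hole=4 * 1024 * 1024)   # large hole: separate reads are cheaper
```

With these numbers the sieved read wins for the 256 KiB hole and loses for the 4 MiB hole, because the time spent transferring the hole outgrows the start-up cost it saves.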

Performance Model Directed Data Sieving

Extended model

§ The client nodes and storage nodes are separate from each other, and every data access involves network transmission.

§ Data is striped across all the storage nodes in a round-robin fashion.

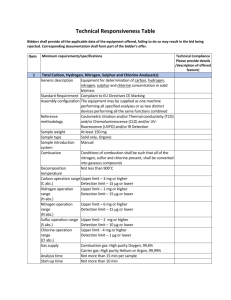

Performance Model Directed Data Sieving

Extended model

Table 1. Parameters and Descriptions

| Parameter | Description |
| --- | --- |
| $p$ | Number of I/O client processes |
| $n$ | Number of storage nodes (file servers) |
| $t_e$ | Time of establishing network connection for single node |
| $t_t$ | Network transmission time of one unit of data |
| $c_{ud}$ | Time reading/writing one unit of data |
| $l_{qdep}$ | The latency for outstanding I/Os |
| $size_{rd}$ | Read data size of one I/O request |

Table 2. Formulas for Deriving I/O Performance

| Quantity | Formula |
| --- | --- |
| The total time required for establishing network connection | $t_e \cdot p$ |
| The total time spent on the network transmission | $t_t \cdot size_{rd} / n$ or $t_t \cdot size_{wr} / n$ |
| The total start-up time (s) for I/O operations | $p \cdot (\text{seek time} + \text{system I/O call})$ |
| The total time spent on the actual data read/write ($T_{rw}$) | $size_{rd} \cdot c_{ud} / n$ or $size_{wr} \cdot c_{ud} / n$ |

$$T_{total} = T_{network} + T_{storage} + l_{qdep}$$

$$T_{total} = t_e \cdot p + \frac{t_t \cdot size_{rd/wr}}{n} + p \cdot (\text{seek time} + \text{system I/O call}) + \frac{size_{rd/wr} \cdot c_{ud}}{n} + l_{qdep}$$
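The extended model translates directly into a small function. The sketch below is one possible transcription, with sizes in MB and times in seconds; the function and argument names are illustrative, and the seek and system call times in the example are hypothetical values.

```python
def total_io_time(p, n, te, tt, cud, lqdep, size, seek_time, syscall_time):
    """Estimate total I/O time (seconds) from the extended model.

    size is the read or write size of the request, in the same data unit
    that tt and cud are expressed in (MB in this example).
    """
    t_connect = te * p                        # establishing network connections
    t_transmit = tt * size / n                # network transmission
    t_startup = p * (seek_time + syscall_time)  # start-up of I/O operations
    t_rw = size * cud / n                     # actual data read/write
    return t_connect + t_transmit + t_startup + t_rw + lqdep


# 4 client processes, 2 storage nodes, a 12 MB request
estimate = total_io_time(p=4, n=2, te=0.0003, tt=1 / 120, cud=1 / 120,
                         lqdep=0.0, size=12, seek_time=0.0001,
                         syscall_time=0.0001)
```

Because both the transmission and the read/write terms are divided by n, adding storage nodes shrinks the size-dependent part of the estimate but leaves the per-process start-up cost unchanged.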

Performance Model Directed Data Sieving

Components Design

§ Dynamic Decision Component

• Input: hole size (sizeh), read seek latency, time for system I/O call, read bandwidth, the number of storage nodes (n), the number of I/O client processes (p), the time for establishing a network connection for a single node (te), the network transmission time of one unit of data (tt), and the next I/O access size (sizerd).

• Output: YES or NO. If YES, the data sieving technique is adopted. If NO, the requests are handled as independent I/O requests.

• Data sieving is chosen when the start-up cost of issuing the next request separately (TStart) exceeds the time needed to read the hole (THread).

Algorithm 1: Dynamic decision

{
    THread = sizeh / (bandwidthread * n);
    TStart = (te * p) + (tt * sizerd) / n + (seek latencyread + time for system I/O call) * p;
    If (TStart > THread)
    {
        Return (YES);
    }
    Else
    {
        Return (NO);
    }
}
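The dynamic decision pseudocode can be written as a short function. This is a sketch under stated assumptions: sizes are in MB, times in seconds, the function name `should_sieve` is illustrative, and the tie case (equal times) is treated as NO. The bandwidth, seek latency, and system call time in the example are hypothetical.

```python
def should_sieve(size_h, bandwidth_read, n, p, te, tt, size_rd,
                 seek_latency, syscall_time):
    """Return True (YES) when reading the hole is cheaper than a new request."""
    t_hole_read = size_h / (bandwidth_read * n)   # time to read the hole
    t_start = (te * p) + (tt * size_rd) / n + (seek_latency + syscall_time) * p
    return t_start > t_hole_read


common = dict(bandwidth_read=120, n=4, p=1, te=0.0003, tt=1 / 120,
              size_rd=1, seek_latency=0.0001, syscall_time=0.0001)
small_hole = should_sieve(size_h=0.5, **common)   # 0.5 MB hole
large_hole = should_sieve(size_h=4, **common)     # 4 MB hole
```

With these values the start-up cost is about 2.6 ms, so the 0.5 MB hole (about 1.0 ms to read) is sieved and the 4 MB hole (about 8.3 ms) is not.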

Performance Model Directed Data Sieving

Components Design

§ Request Grouping Component

• Input: list of the offsets and list of the lengths of all I/O requests.

• Output: set of groups containing all I/O requests. Data sieving is applied to the I/O requests within each group.

Algorithm 2: Request grouping

{
    Start from the lowest offset;
    While (the end of the request with the largest offset has not been reached)
    {
        a data request is encountered;
        decision = call Algorithm 1;
        If (decision = NO)
        {
            If (the encountered data request is not in any group)
                then issue it as an independent I/O request;
            Else if (the encountered data request is in a group)
                then close that group;
        }
        Else if (decision = YES)
        {
            group it with the next consecutive I/O request;
        }
    }
}
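A compact way to express the grouping pass is shown below. It is a sketch, not the authors' implementation: the decision step is passed in as a callable `decide(hole_size, next_size)` standing in for Algorithm 1, a group with a single request represents an independent I/O request, and the threshold used in the example is a hypothetical placeholder for the model-based decision.

```python
def group_requests(offsets, lengths, decide):
    """Split requests into groups; data sieving is applied within each group.

    decide(hole_size, next_size) returns True when the next request should
    be merged with the current group.
    """
    reqs = sorted(zip(offsets, lengths))      # start from the lowest offset
    if not reqs:
        return []
    groups = []
    current = [reqs[0]]
    for prev, nxt in zip(reqs, reqs[1:]):
        hole = nxt[0] - (prev[0] + prev[1])   # gap between consecutive requests
        if decide(hole, nxt[1]):
            current.append(nxt)               # YES: group with the next request
        else:
            groups.append(current)            # NO: close the current group
            current = [nxt]
    groups.append(current)
    return groups


# Placeholder decision: merge when the hole is at most 100 bytes
groups = group_requests([0, 50, 1000, 5000], [10, 10, 20, 20],
                        lambda hole, size: hole <= 100)
```

In this example the first two requests form one group, while the last two are each left as independent requests because the holes before them are too large.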

Evaluation

§ Experimental Environment

• One Sun Fire X4240 head node with dual 2.7 GHz quad-core Opteron processors and 8 GB memory. 64 Sun Fire X2200 compute nodes with dual 2.3 GHz quad-core Opteron processors and 8 GB memory, connected with Gigabit Ethernet.

• Each node is equipped with one solid state drive (OCZ Technology OCZSSDPX-1RVDX0100 REVO X2 PCIE SSD, 100GB MLC).

• Ubuntu 4.3.3-5 system with kernel 2.6.28.10, PVFS 2.8.1 file system, and MPICH2-1.0.5p3 library manage the storage system and runtime environment.

• The actual values of the parameters used in the performance model were obtained through measurement on the experimental platform.

– te: 0.0003 sec

– tt: 1/120 sec per MB

– cud: 1/120 sec per MB

Evaluation

§ Three synthetic I/O benchmark scenarios derived from real application kernels

• All requests and the holes among them have different sizes

• Sparse noncontiguous I/O requests, with large holes among the requests

• Dense noncontiguous I/O requests, with small holes among the requests

Evaluation

§ Experimental Results on Single Node

[Figures: execution time of three strategies; memory requirement of three strategies; speedup ratio of two strategies]

Evaluation

§ Experimental Results on Multiple Nodes

[Figures: execution time for access scenarios 1, 2, and 3 with a fixed number of storage nodes; execution time for access scenarios 1, 2, and 3 with a fixed number of I/O client processes]

Conclusion

§ Data sieving remains a critical approach for improving the performance of small and noncontiguous accesses in data-intensive applications.

§ The existing data sieving strategy is static and suffers from a large memory requirement.

§ The proposed performance model directed (PMD) data sieving approach is essentially a heuristic data sieving approach directed by estimates from a performance model.

§ Experiments have been performed on a cluster to evaluate the benefit of the PMD approach

• PMD performs better than both the direct method and the current data sieving approach in terms of execution time.

• PMD also reduces the memory requirement considerably compared with conventional data sieving.

Future work

§ A more rigorous study of the performance model can be done by including more parameters

§ Integrate PMD data sieving with hybrid storage media

Questions?
