Evaluation of middleware for distributed objects on handheld devices Tom Kristensen

Evaluation of middleware for distributed objects on handheld devices

Tom Kristensen (1, 2), Lars Preben S. Arnesen (1), Eirik Valen (1), and Thomas Plagemann (1)

(1) Department of Informatics, University of Oslo, Norway
{tomkri, larsar, erk, plageman}@ifi.uio.no
http://www.ifi.uio.no/dmms/

(2) Thales Communications AS, Oslo, Norway
http://www.thales.no/

Abstract. Use of object-oriented middleware will ease the development of distributed applications in pervasive and mobile computing environments. However, handheld devices have limited resources and wireless networks have limited capacity. In this paper, we evaluate whether PDAs and laptops are powerful enough to run commodity CORBA implementations. We have performed and analysed thorough measurements of different configurations of hardware, network and software. We found that vanilla PDAs run CORBA implementations quite efficiently. Therefore, use of object-oriented middleware is feasible in wireless networking with today’s PDAs and handheld devices. However, tailoring the CORBA implementations for limited resources yields better performance. This indicates the need for specialised implementations for handheld devices, or for ORBs that can be configured and tailored to the environments in which they are deployed.

1

Introduction and motivation

Mobile communication and computing devices, like laptops and PDAs with WLAN interface cards or cell phones with PDA functionality, have become very popular in recent years, and there is no sign that this trend will change in the near future. Obviously, these devices are and will be used to run distributed applications. However, the high level of heterogeneity, in terms of devices ranging from high-performance PCs to resource-poor cell phones and in terms of networks ranging from wired gigabit networks to wireless low-bitrate links, is a challenge for application developers. Masking heterogeneity of platforms, location, development language, etc. is the classical task of middleware. Object-oriented middleware, like CORBA, is successfully used to ease application development in heterogeneous environments. Offering the same abstractions and similar interfaces on all these platforms will ease and speed up application development. We regard CORBA and CORBA-like technologies as promising candidates for building next-generation object-oriented middleware for handheld devices, mobile computing, wireless and ad hoc networks, as well as for the traditional environments where CORBA is widely used today.

Therefore, it is natural to study the appropriateness of object-oriented middleware also for the development of distributed applications running on PDAs and wireless networks. Some myths exist regarding the use of object-oriented middleware in such environments: (i) middleware is heavy and adds a lot of overhead in both processing time and resource consumption, and (ii) handheld devices are too weak to run middleware.

The motivation for the work described in this paper is to understand whether these myths are true and to analyse in detail the performance of CORBA implementations on vanilla PDAs using commodity wireless network technology.

In order to draw a roadmap for future development of middleware for handheld devices and, in general, ubiquitous computing, the state of existing middleware implementations will serve as a baseline. To the best of our knowledge, no recent benchmark measurements and analyses of middleware on handheld devices have been performed. Therefore, we have to explore the state of the art concerning the performance of available solutions and implementations of today’s middleware.

Prominent examples of next-generation middleware for handheld devices are UICCorba[1][2] and BASE[3]. However, these approaches target tiny, resource-scarce devices such as typical PalmOS-based PDAs. Also, MICO is available on the Palm platform[4]. For wireless environments, the Object Management Group (OMG) has worked out the Wireless CORBA specification[5], which is implemented by the MICO wireless extensions (MIWCO)[6]. CORBA and object-oriented middleware in general are used for mobile systems, ad hoc networks and embedded systems in numerous projects and prototypes. However, our mission is to benchmark and analyse standard CORBA implementations on newer, quite powerful PDAs and laptops.

The rest of the paper is organized as follows. Section 2 describes the test set-up and gives an overview of the benchmark tool used. Section 3 contains the analysis of the benchmark tests, divided into subsections with emphasis on different CORBA implementations, hardware and network technologies, as well as system resource usage. The paper and its results are summarized in Section 4.

2

Test configuration

Our goal is to investigate whether the CORBA implementations are suited for handheld devices. We have to measure the resource and time consumption of the CORBA applications in order to find out how much overhead CORBA adds. Both simple method invocations with no transfer of parameter data and methods transferring a large amount of parameter data have to be measured. A CORBA implementation also has to support a huge number of objects without increasing the response time to unacceptable values.

We investigate the behaviour of the commercial Orbix/E[7] and the open-source MICO[8] CORBA implementations. Orbix/E is targeted at embedded, resource-scarce devices and implements only a subset of the CORBA specification. MICO is a full-fledged CORBA implementation that does not emphasize any particular type of platform. MICO is a prominent representative of generic CORBA implementations, and it runs on the platforms used in this paper. Orbix/E is an example of an ORB tailored for embedded devices, and is therefore interesting to compare with MICO. Also, Orbix/E’s source code is available and runs on the platforms of interest in this paper.

Bluetooth

Bluetooth

IPAQ1

IPAQ2

PC2

Logger

802.11b

(managed)

PC1

Internet

Router

Switch

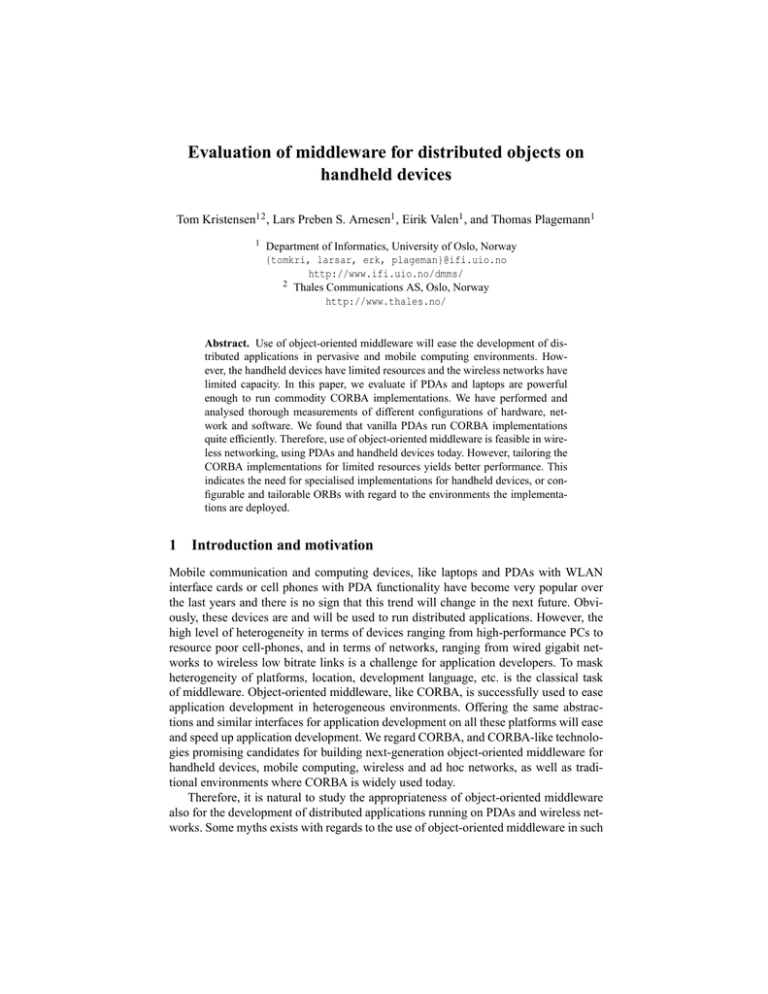

Fig. 1. Setup for the test

The CORBA implementations are tested on different platforms using various network technologies[9]. Comparing data from the measurements enables us to analyse how changes in test parameters, such as type of hardware and network technology, influence the performance of the implementations. We also analyse how the performance varies between the CORBA implementations given the same test parameters.

The measurements are conducted with different combinations of client and server platforms, running on devices with the same or different architecture. In addition, measurements are done with client and server on the same machine. This is the list of test configurations:

– Client on iPAQ, server on PC
– Client on iPAQ, server on another iPAQ
– Client on PC, server on iPAQ
– Client and server on same iPAQ or PC

Figure 1 illustrates the test setup. The HP iPAQ H3870 has a 206 MHz Intel SA-1110 StrongARM processor with 64 MB RAM and 32 MB ROM. The iPAQs communicate over Bluetooth and Wi-Fi (IEEE 802.11b) wireless LAN links. PC1 is a 350 MHz Intel Pentium II with 512 MB RAM. PC1 is equipped with a Bluetooth USB adapter and connected to the Wi-Fi router over Ethernet, enabling communication with the iPAQs using Bluetooth and Wi-Fi respectively. PC2 is a 500 MHz Intel Pentium III with 384 MB RAM. PC2 communicates with the other computers solely over Wi-Fi. Additionally, the PC named Logger is available via a 100 Mbit Ethernet switch. Logger is used for saving the measurement data to disk due to restricted disk space on the iPAQs.

Open CORBA Benchmarking (OCB)[10] is used to perform the measurements and generate suitable data for the analysis. OCB provides an overview of the most significant parameters regarding ORB performance. OCB is a flexible tool that already supports several CORBA implementations, including Orbix/E and MICO used in this paper. OCB also allows users to publish and browse others’ benchmarking results on the web, enabling comparison with data from other test configurations.

OCB is designed according to OMG’s guidelines for CORBA benchmarking[11], which advocate that benchmarks be open, well understood and easy to measure. We focus on these OCB test categories:

– Invocation - measures response times for method invocations without parameters. The response time for the request-reply is measured on the client side. This test is labeled invocation.
– Marshalling - throughput test performed by sending invocations with parameters of increasing size, in order to investigate the behaviour of the ORB. These tests are referred to as sequence in and sequence out.
– Dispatcher - scalability test measuring response times for an increasing number of objects on the server side. The test is labeled instances.
– System performance - several small independent tests measuring CPU, memory, thread and socket performance (without using CORBA functionality).
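The client-side measurement principle behind the invocation category can be sketched as follows. This is only an illustration of timing a request-reply from the caller's side and is not OCB code; the names `time_invocations`, `summarize` and `empty_operation` are hypothetical, and `empty_operation` merely stands in for a parameterless CORBA stub call.

```python
import statistics
import time


def time_invocations(call, repetitions=1000):
    """Return per-call response times in microseconds, measured on the caller side."""
    samples = []
    for _ in range(repetitions):
        start = time.perf_counter()
        call()  # stands in for a parameterless remote method invocation
        samples.append((time.perf_counter() - start) * 1e6)
    return samples


def summarize(samples):
    """Return (average, median), the two figures reported in the invocation tables."""
    return statistics.mean(samples), statistics.median(samples)


def empty_operation():
    # Hypothetical stand-in; a real test would invoke an operation through the ORB.
    return None


if __name__ == "__main__":
    avg, med = summarize(time_invocations(empty_operation))
    print(f"average {avg:.1f} us, median {med:.1f} us")
```

Reporting the median next to the average is useful because a few slow invocations can pull the average well above the typical response time.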

3

Analysis

This section describes the analysis of the data from the OCB measurements with the test configurations presented in Section 2. For the method invocation test, all raw data from the measurements are available. Data from the marshalling and dispatcher measurements are limited to five sets of values, where each set consists of a minimum, a maximum and an average value. Therefore, the analysis is based on the average of the average values from these five sets.
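The aggregation used for the marshalling and dispatcher data can be written down in a few lines. The sketch below assumes each result set is a (minimum, maximum, average) tuple; the function name and the example values are hypothetical and are not taken from the measurements.

```python
from statistics import mean


def mean_of_averages(result_sets):
    """Collapse repeated (minimum, maximum, average) sets into one value."""
    if not result_sets:
        raise ValueError("at least one result set is required")
    # Only the average of each set enters the analysis; minimum and maximum are ignored.
    return mean(avg for _minimum, _maximum, avg in result_sets)


# Hypothetical values in microseconds, for illustration only.
example_sets = [
    (230, 310, 250),
    (228, 305, 246),
    (231, 298, 244),
    (229, 320, 248),
    (232, 301, 242),
]

if __name__ == "__main__":
    print(mean_of_averages(example_sets))
```

Note that an average of averages equals the overall average only if every set contains the same number of samples, which we assume to be the case here.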

During the marshalling and dispatcher tests, data were gathered for different data sizes and for different numbers of objects, respectively. The analysis is based on values for the maximum and minimum data size and number of objects. For the marshalling tests the minimum data size is 0 KB and the maximum 50 KB; for the dispatcher tests the minimum number of objects is 1 and the maximum 20 000.

The measurement analysis is partitioned into four parts. First, the differences between the two CORBA implementations are described. Second, the influence of the hardware platforms is analysed by comparing the benchmark results on the iPAQ and the workstations. In the third part, two different network technologies are used, namely Wi-Fi and Bluetooth. Measurements over these wireless networks are compared with measurements running locally on one machine, that is, without using the network. Finally, the system resource usage is analysed.

The most important measurement results are summarized in tables. We are fully aware that such a presentation does not make the paper easier to read, but it guarantees that all relevant numbers can be presented in this paper. For more details we kindly refer the reader to [9].

3.1

CORBA implementations

In order to explore the differences between the CORBA implementations without the influence of factors such as network conditions or hardware configurations, the different OCB measurements are performed locally on an iPAQ and on the two workstations.

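| ORB | Machine | Average | Median |
|---|---|---|---|
| MICO | iPAQ | 6 237 µs | 4 361 µs |
| MICO | PC1 | 732 µs | 659 µs |
| MICO | PC2 | 355 µs | 325 µs |
| Orbix/E | iPAQ | 1 627 µs | 1 206 µs |
| Orbix/E | PC1 | 237 µs | 211 µs |
| Orbix/E | PC2 | 107 µs | 90 µs |

Table 1. Invocation measurement for MICO and Orbix/E (response time)

| Test | Machine | ORB | 0 KB | 50 KB | Increase | Increase (%) |
|---|---|---|---|---|---|---|
| In | PC1 | Orbix/E | 243 µs | 1 262 µs | 1 019 µs | 419% |
| In | PC1 | MICO | 789 µs | 2 785 µs | 1 996 µs | 253% |
| In | PC2 | Orbix/E | 107 µs | 794 µs | 687 µs | 642% |
| In | PC2 | MICO | 391 µs | 1 986 µs | 1 595 µs | 408% |
| Out | PC1 | Orbix/E | 270 µs | 1 604 µs | 1 334 µs | 494% |
| Out | PC1 | MICO | 795 µs | 4 312 µs | 3 517 µs | 442% |
| Out | PC2 | Orbix/E | 119 µs | 1 090 µs | 971 µs | 816% |
| Out | PC2 | MICO | 402 µs | 3 007 µs | 2 605 µs | 648% |

Table 2. Measurements from sequence in and out for Orbix/E and MICO (response time)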

Invocation. Table 1 presents the response times for MICO and Orbix/E running locally on the different machines. As shown, MICO’s response times are clearly higher than Orbix/E’s. In all cases MICO spends over three times as long performing the invocation as Orbix/E.

From Table 1 we see that the main distribution is between 209 and 213 µs for Orbix/E and between 648 and 663 µs for MICO.

Marshalling. In order to study how the response times react to an increasing amount of parameter and return data, we have calculated the relative increase in response times for invocations with and without associated data. This is based on local measurements to isolate the benchmark from delays in the network. Table 2 presents the measurements for parameter sizes of 0 and 50 KB for both sequence in and sequence out. The marshalling test follows the trend from the invocation test; Orbix/E clearly performs better than MICO. On both test machines Orbix/E used about one-third of the time MICO used for invocations without data transfer. When transferring 50 KB, the implementation of the marshalling/demarshalling routines has a huge impact. As the measurements show, Orbix/E uses 40-45% of the time MICO uses to complete the invocation.

Orbix/E also behaves better than MICO with regard to response times in the sequence out test. The response times for both CORBA implementations are slightly higher than for sequence in. The differences between response times for Orbix/E and MICO for sequence out are however smaller. Table 2 also shows that the increase in response times is bigger for sequence out than for sequence in for both implementations. With empty method invocations (0 KB) the response times for sequence in are 90% of the response times for sequence out using Orbix/E and 97-99% using MICO. When transferring 50 KB in the invocations the values for sequence in are about 75% and 66% of the sequence out values for Orbix/E and MICO respectively.

MICO is distinctly slower than Orbix/E both when transferring parameter data and when transferring return data. In addition, MICO scales poorly compared to Orbix/E for both sequence tests, especially sequence out. This indicates that MICO’s IDL compiler generates less efficient code than Orbix/E’s compiler.
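The absolute and relative increase reported for the sequence tests follow directly from the 0 KB and 50 KB response times. As a small worked example, the sketch below recomputes the sequence in rows of Table 2; the function name `increase` is our own choice for illustration.

```python
def increase(t_empty_us, t_loaded_us):
    """Return (absolute increase in microseconds, relative increase in percent)."""
    absolute = t_loaded_us - t_empty_us
    relative = 100.0 * absolute / t_empty_us
    return absolute, relative


# Local sequence in measurements from Table 2: (0 KB, 50 KB) in microseconds.
rows = {
    ("PC1", "Orbix/E"): (243, 1262),
    ("PC1", "MICO"): (789, 2785),
    ("PC2", "Orbix/E"): (107, 794),
    ("PC2", "MICO"): (391, 1986),
}

if __name__ == "__main__":
    for (machine, orb), (t0, t50) in rows.items():
        absolute, relative = increase(t0, t50)
        print(f"{machine} {orb}: +{absolute} us ({relative:.0f}%)")
```

Because the relative increase is normalised by the 0 KB response time, an ORB with a very low empty-invocation time can show a large percentage even when its absolute increase is the smaller one.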

Dispatcher. In order to explore differences in response times for a varying number of objects on the server side, we perform local measurements on a workstation and an iPAQ for both CORBA implementations. Orbix/E on the iPAQ could not handle more than 10 000 objects; therefore we used this as the maximum number of objects in this particular test.

| Machine | ORB | 1 obj | 10 000 obj | Increase | Increase (%) |
|---|---|---|---|---|---|
| PC1 | Orbix/E | 246 µs | 252 µs | 6 µs | 2% |
| PC1 | MICO | 739 µs | 817 µs | 78 µs | 11% |
| iPAQ | Orbix/E | 1 685 µs | 1 961 µs | 276 µs | 16% |
| iPAQ | MICO | 6 343 µs | 9 507 µs | 3 164 µs | 50% |

Table 3. Measurements from instances (response time)

| Machine | Average | Median |
|---|---|---|
| PC1 | 236 µs | 212 µs |
| PC2 | 107 µs | 90 µs |
| iPAQ | 1 627 µs | 1 206 µs |

Table 4. Response times from invocation for Orbix/E

Table 3 presents the results of the measurements, with calculations of the absolute and relative increase in response times as the number of objects increases from 1 to 10 000. As we see, MICO has higher response times than Orbix/E for both 1 and 10 000 objects, on the iPAQ as well as the workstation. Also, the increase in response times is higher for MICO, meaning that Orbix/E scales better with an increasing number of objects. This indicates that Orbix/E’s dispatcher functionality is implemented more efficiently than MICO’s.

Summary. All benchmarks show that MICO has longer response times than Orbix/E. The invocation tests for Orbix/E result in response times that are one-third of the corresponding values for MICO. Orbix/E also scales better both for increasing parameter and return data size and for an increasing number of objects on the server side. All sequence tests, for both ORBs, show that response times for returning data are higher than for sending parameter data. We have not been able to identify whether this is due to the benchmark tool or to the CORBA architecture and implementation itself.

| ORB | Machines | Average | Median |
|---|---|---|---|
| MICO | iPAQ - PC1 | 4 979 µs | 4 242 µs |
| MICO | PC1 - iPAQ | 5 870 µs | 4 981 µs |
| MICO | iPAQ - iPAQ2 | 8 914 µs | 6 845 µs |
| Orbix/E | iPAQ - PC1 | 3 734 µs | 3 180 µs |
| Orbix/E | PC1 - iPAQ | 3 676 µs | 3 132 µs |
| Orbix/E | iPAQ - iPAQ2 | 5 698 µs | 4 265 µs |

Table 5. Invocation measurement for MICO and Orbix/E (response time)

| Test | Machine | 0 KB | 50 KB | Increase | Increase (%) |
|---|---|---|---|---|---|
| In | PC1 - iPAQ | 243 µs | 1 262 µs | 1 019 µs | 419% |
| In | iPAQ - PC1 | 107 µs | 794 µs | 687 µs | 642% |
| In | iPAQ - iPAQ2 | 391 µs | 1 986 µs | 1 595 µs | 408% |
| Out | PC1 - iPAQ | 270 µs | 1 604 µs | 1 334 µs | 494% |
| Out | iPAQ - PC1 | 795 µs | 4 312 µs | 3 517 µs | 442% |
| Out | iPAQ - iPAQ2 | 119 µs | 1 090 µs | 971 µs | 816% |

Table 6. sequence in and sequence out for MICO

3.2

Hardware

In this section, we present measurements performed using different hardware configurations and compare the results with measurements done locally. We have used Wi-Fi as the network technology connecting the machines, as this is the technology we had available with the lowest latency and highest throughput.

Invocation. The response time distributions from the invocation test are presented in Table 4. As can be seen, there is a distinct difference between PC1 and PC2. The values for PC2 are about half of the response times for PC1. The iPAQ is, as expected, much slower than the two other machines. PC2 spends 6-8% of the response time measured for the iPAQ. PC2 has over 7 000 occurrences of 90 µs. The distribution of results for PC1 is not that tight, although it is more focused than the iPAQ’s. This indicates that how tight or focused the distribution is depends on how powerful the machine is.

The measurements above are done locally on each machine. Additionally, invocations between machines are measured in order to explore how different machine configurations influence the results. Refer to Figure 1 for an overview of the configurations.

The combination with the worst response times was iPAQ-iPAQ2, as shown in Table 5. This configuration has the least total processing power, so the result was as expected. Using Orbix/E, the combinations iPAQ-PC1 and PC1-iPAQ resulted in about the same response times and distribution of measurements. For MICO, the combination iPAQ-PC1 resulted in response times about 20% lower than the values for the combination PC1-iPAQ. This indicates that the server needs more processing power than the client; therefore the best combination is to use the most powerful machine, with the most resources, on the server side.

Marshalling. As shown in Section 3.1, returning data (sequence out) consumes more time and scales worse than sending parameter data (sequence in). This tendency is the same for all machines, and for both 0 KB and 50 KB data size.

The smallest increase in response times comes from the combinations where the PC performs the sending or returning of data. That is, the best combination for the sequence in test is using the PC as client, and for the sequence out test using the PC as server. Table 6 presents measurements of sequence in and sequence out with 0 and 50 KB of data. As we see, operations that involve sending data are more time consuming than operations that receive data. For TCP, receiving data is the heaviest operation, which indicates that the reason for the ORB behaviour lies above the TCP implementation, e.g. in the middleware itself. The measurements summarized in Table 6 were performed using Wi-Fi, and the relative differences between the sequence tests are smaller than in the tests performed locally on the different machines. An explanation for this is that the Wi-Fi network introduces delays that hide some of the differences in processing time.

Dispatcher. For the instances test we present measurements for both Wi-Fi and Bluetooth using different combinations of hardware. The reason for presenting Bluetooth as well is that we experienced a different behaviour for Bluetooth compared to Wi-Fi.

Table 7 summarizes the measurements, with 1 - 20 000 objects for MICO and 1 - 10 000 objects for Orbix/E. When running locally on PC1 and iPAQ, response times are clearly lower for PC1. Additionally, we see that both the absolute and the relative increase in response times from 1 to 20 000 objects (10 000 for Orbix/E) are less steep on PC1 than on the iPAQ.

The hardware configuration that scales best is PC1 - iPAQ. Our results indicate better scalability when using the most powerful machine on the client side. Our assumption beforehand was that a more powerful machine on the server side would have a positive effect on the dispatching performance. However, we have found weaknesses in OCB’s routines that may be the cause of the described findings.

| Network | Machines | MICO 1 obj | MICO 20 000 obj | MICO increase | MICO increase (%) | Orbix/E 1 obj | Orbix/E 10 000 obj | Orbix/E increase | Orbix/E increase (%) |
|---|---|---|---|---|---|---|---|---|---|
| Local | PC1 | 739 µs | 839 µs | 100 µs | 14% | 246 µs | 252 µs | 6 µs | 2% |
| Local | iPAQ | 6 343 µs | 10 831 µs | 4 488 µs | 71% | 1 685 µs | 1 961 µs | 276 µs | 16% |
| Wi-Fi | PC1 - iPAQ | 5 834 µs | 6 862 µs | 1 028 µs | 17% | 3 675 µs | 3 778 µs | 103 µs | 3% |
| Wi-Fi | iPAQ - PC1 | 5 036 µs | 8 473 µs | 3 437 µs | 68% | 3 756 µs | 4 147 µs | 391 µs | 10% |
| Wi-Fi | iPAQ - iPAQ2 | 8 919 µs | 13 713 µs | 4 794 µs | 54% | 5 781 µs | 6 279 µs | 498 µs | 9% |
| BT | PC1 - iPAQ | 59 764 µs | 60 251 µs | 487 µs | 1% | 55 500 µs | 55 529 µs | 29 µs | <1% |
| BT | iPAQ - PC1 | 63 588 µs | 77 626 µs | 14 068 µs | 22% | 58 994 µs | 60 904 µs | 1 910 µs | 3% |
| BT | iPAQ - iPAQ2 | 79 150 µs | 90 489 µs | 11 339 µs | 14% | 75 896 µs | 77 238 µs | 1 342 µs | 2% |

Table 7. Measurements from instances for MICO and Orbix/E (response time)

| Network technology | Theoretical | iPAQ-PC1 | iPAQ-iPAQ2 |
|---|---|---|---|
| Wi-Fi | 11 000 Kbps | 3 600 Kbps | 2 500 Kbps |
| Bluetooth | 110-340 Kbps | 80 Kbps | 75 Kbps |
| Bluetooth comp | | 2 800 Kbps | 2 200 Kbps |

Table 8. Theoretical bandwidth and measured throughput

When running the benchmark over Wi-Fi, the combination iPAQ - PC1 scales better than the combination with two iPAQs. Using Bluetooth gives the opposite result; the combination with two iPAQs scales better. The significance of the scalability is reflected in the relative increase in response time. Even though iPAQ - PC1 scales better than iPAQ - iPAQ2 over Wi-Fi, the increase in response times for iPAQ - PC1 represents a greater share of the total response time.

3.3

Network

We have already seen in the previous section that the network influences the performance of the ORBs. In this section, we analyse in detail how MICO and Orbix/E behave when used over different network technologies and how different hardware configurations react to changes in network technology.

Throughput and delay. Before performing the measurements and analysis of the ORBs, it is useful to know the performance at the network level, both locally and between machines. We use the ttcp tool to measure throughput and delay. ttcp is configured to send randomly generated data for 5 minutes, and this test is repeated several times for each configuration.

In Table 8, the measured throughput for the combinations iPAQ - PC1 and iPAQ - iPAQ2 is listed for the network types of interest. As we can see, the theoretical bandwidth is much higher than the measured values. This is not surprising, as the theoretical bandwidth does not account for checksums, headers or other control data. The measured performance is for pure payload on the application level.
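To put the gap between theoretical bandwidth and measured payload throughput into numbers, the Wi-Fi values from Table 8 can be expressed as a utilisation percentage. This is a simple worked calculation; the function name `utilisation` is introduced here for illustration only.

```python
def utilisation(measured_kbps, theoretical_kbps):
    """Measured payload throughput as a percentage of the theoretical bandwidth."""
    return 100.0 * measured_kbps / theoretical_kbps


WIFI_THEORETICAL_KBPS = 11000  # IEEE 802.11b, from Table 8

if __name__ == "__main__":
    print(f"iPAQ - PC1:   {utilisation(3600, WIFI_THEORETICAL_KBPS):.1f}%")
    print(f"iPAQ - iPAQ2: {utilisation(2500, WIFI_THEORETICAL_KBPS):.1f}%")
```

With the values from Table 8, roughly a third of the nominal Wi-Fi bandwidth is available as payload between an iPAQ and PC1, and less than a quarter between two iPAQs.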

| Machine | Throughput | RTT |
|---|---|---|
| iPAQ | 127 000 Kbps | 0.20 ms |
| PC1 | 540 000 Kbps | 0.03 ms |
| PC2 | 1 320 000 Kbps | 0.04 ms |

Table 9. Measured local throughput and RTT

| Network tech. | PC1-iPAQ | iPAQ-PC1 | iPAQ-iPAQ |
|---|---|---|---|
| Wi-Fi | 2.7 ms | 2.7 ms | 3.8 ms |
| Bluetooth | 64 ms | 73 ms | 88 ms |
| Bluetooth comp | 49 ms | 57 ms | 88 ms |

Table 10. Measured RTT with different network technologies between machines

In order to relate response times from OCB to response times below the ORB layer, simple measurements with ping are done. The results can be found in Tables 9 and 10.

Invocation. The behaviour of the two ORBs is measured with different network types. As we can see from Table 11, the numbers for Bluetooth are an order of magnitude higher than the numbers for local and Wi-Fi measurements. The transport protocol in use, namely TCP, is probably the cause of this, because it uses three segments to establish and four segments to release a connection. Therefore, the latency in the network has a huge impact on the response times in this scenario.

For all measurements the difference between MICO’s and Orbix/E’s performance is clear. Notice that the response times for Orbix/E over Wi-Fi are lower than the response times measured locally with MICO. Differences in average response times between Orbix/E and MICO vary distinctly between local measurements and measurements over the network; differences when using the network are an order of magnitude higher. However, this difference constitutes a smaller part of the total response time the slower the network is. Differences between the ORBs thus get smaller when using slower networks.

| ORB | Network | Average | Median |
|---|---|---|---|
| MICO | Local | 6 237 µs | 4 361 µs |
| MICO | Wi-Fi | 8 914 µs | 6 845 µs |
| MICO | Bluetooth | 80 550 µs | 79 476 µs |
| Orbix/E | Local | 1 627 µs | 1 206 µs |
| Orbix/E | Wi-Fi | 5 698 µs | 4 265 µs |
| Orbix/E | Bluetooth | 76 050 µs | 74 970 µs |

Table 11. Response times for MICO and Orbix/E

| ORB | Network | 1 obj | 10 000 obj | Increase | Increase (%) |
|---|---|---|---|---|---|
| MICO | Local | 6 343 µs | 10 831 µs | 4 488 µs | 71% |
| MICO | Wi-Fi | 8 919 µs | 13 713 µs | 4 794 µs | 54% |
| MICO | Bluetooth | 79 134 µs | 90 750 µs | 11 616 µs | 15% |
| Orbix/E | Local | 1 685 µs | 1 961 µs | 276 µs | 16% |
| Orbix/E | Wi-Fi | 5 781 µs | 6 455 µs | 674 µs | 12% |
| Orbix/E | Bluetooth | 75 972 µs | 78 419 µs | 2 447 µs | 3% |

Table 12. Measurements from instances between iPAQs (response time)
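How the gap between the two ORBs shrinks as a share of the total response time on slower networks can be illustrated with the average values from Table 11. The sketch below is a plain recalculation of those numbers; the function name `orb_gap` is hypothetical and the share is expressed relative to MICO's response time, which is our own choice of reference.

```python
# Average invocation response times from Table 11, in microseconds.
averages = {
    "Local": {"MICO": 6237, "Orbix/E": 1627},
    "Wi-Fi": {"MICO": 8914, "Orbix/E": 5698},
    "Bluetooth": {"MICO": 80550, "Orbix/E": 76050},
}


def orb_gap(times):
    """Return (absolute gap in microseconds, gap as percent of MICO's response time)."""
    absolute = times["MICO"] - times["Orbix/E"]
    share = 100.0 * absolute / times["MICO"]
    return absolute, share


if __name__ == "__main__":
    for network, times in averages.items():
        absolute, share = orb_gap(times)
        print(f"{network}: gap {absolute} us, {share:.0f}% of MICO's response time")
```

For these averages the gap falls from roughly three quarters of MICO's response time locally to a few percent over Bluetooth, since the network latency is paid equally by both ORBs.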

Marshalling. To explore how different network technologies influence throughput, we have measured the ORBs locally on an iPAQ and between two iPAQs using Wi-Fi and Bluetooth. Table 13 summarizes the results for measurements with 0 and 50 KB data size.

The empty method invocations (0 KB data size) over Wi-Fi have quite low response times compared to local measurements. Switching to Bluetooth yields response times about ten times higher than for Wi-Fi. Again, the setup and teardown of TCP connections have a huge influence on this. For 50 KB data size the relative differences are not that big. Response times for Wi-Fi now constitute about 70% of the response times for Bluetooth using MICO. For Orbix/E the difference is somewhat bigger. Response times for local measurements with 50 KB data are far below the measurements over the network; the numbers are just 5 - 10% of the network response times.

| ORB | Test | Network | 0 KB | 50 KB | Increase | Increase (%) |
|---|---|---|---|---|---|---|
| MICO | In | Local | 6 650 µs | 21 522 µs | 14 872 µs | 224% |
| MICO | In | Wi-Fi | 9 494 µs | 185 571 µs | 176 077 µs | 1 855% |
| MICO | In | Bluetooth | 81 011 µs | 354 318 µs | 273 307 µs | 338% |
| MICO | Out | Local | 6 827 µs | 34 070 µs | 27 243 µs | 399% |
| MICO | Out | Wi-Fi | 9 454 µs | 198 528 µs | 189 074 µs | 2 000% |
| MICO | Out | Bluetooth | 82 389 µs | 368 277 µs | 285 888 µs | 347% |
| Orbix/E | In | Local | 1 648 µs | 6 917 µs | 5 269 µs | 320% |
| Orbix/E | In | Wi-Fi | 5 688 µs | 170 995 µs | 165 307 µs | 2 906% |
| Orbix/E | In | Bluetooth | 76 018 µs | 358 839 µs | 282 821 µs | 372% |
| Orbix/E | Out | Local | 1 872 µs | 9 551 µs | 7 679 µs | 410% |
| Orbix/E | Out | Wi-Fi | 5 870 µs | 171 819 µs | 165 949 µs | 2 827% |
| Orbix/E | Out | Bluetooth | 76 218 µs | 369 670 µs | 293 452 µs | 385% |

Table 13. Measurements from sequence in and out for Orbix/E and MICO on iPAQ

The difference between the sequence in and out tests is clear for local measurements with 50 KB data. Using Wi-Fi the difference is smaller; for Orbix/E the response times for the two are almost identical. For measurements over Bluetooth the relative difference between sequence in and out is very small for both ORBs. Thus, delay in the wireless networks masks differences between the two sequence tests.

Dispatcher. How scalability is affected by the different network technologies is summarized in Table 12. Measurements are performed for Orbix/E and MICO using Wi-Fi and Bluetooth, with local measurements added for comparison.

The increase in response times is larger for Bluetooth than for Wi-Fi, as seen from Table 12. The increase for local measurements is lower than when using a network; this is valid for both ORBs. This indicates that the ORBs scale more poorly when slower networks are used. However, the average response time increases considerably with slower networks. Therefore, analysing the relative numbers for scalability in Table 12 shows that the increase in response times has less influence for slower networks. The difference in scalability between the CORBA implementations blurs when using slow networks.

3.4

System resource usage

In this section, we analyse the utilization of system resources on the test machines during the measurements. The CPU load for local measurements, where both client and server run on the same machine, is very high. In this case, the client and server applications consumed all available processing time, which influences the performance measured in this configuration. During measurements over the network the CPU load was clearly lower, especially with Bluetooth, where much time is spent in I/O wait state caused by latency in the network.

The network resource usage is quite similar for both ORBs, as both use standard IIOP for data encapsulation and exchange and OCB sends an equal amount of data over both ORBs.

During dispatcher measurements MICO generated far more page faults than Orbix/E. We presume that MICO’s poor performance compared to Orbix/E is connected with this. Another related effect showed up during initial testing of the two ORBs. MICO could handle up to 37 000 objects, while Orbix/E could barely handle 20 000 objects. However, Orbix/E is targeted at embedded platforms, and therefore resource consumption is kept to a minimum. MICO uses more memory; on a machine with limited memory this leads to a high number of page faults and degraded performance.
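The paper does not state which tool was used to count page faults. As one possible way to observe them, the sketch below reads the page-fault counters of the current process through Python's standard `resource` module, which is available on Unix-like systems only. The workload `allocate_objects` is a hypothetical stand-in for a server creating many objects and is not related to either ORB.

```python
import resource


def page_fault_counts():
    """Return (minor, major) page faults of the current process so far."""
    usage = resource.getrusage(resource.RUSAGE_SELF)
    return usage.ru_minflt, usage.ru_majflt


def faults_during(workload):
    """Run workload() and return the (minor, major) page faults it caused."""
    minor_before, major_before = page_fault_counts()
    workload()
    minor_after, major_after = page_fault_counts()
    return minor_after - minor_before, major_after - major_before


def allocate_objects(count=20000):
    # Hypothetical stand-in for object creation on the server side.
    return [bytearray(256) for _ in range(count)]


if __name__ == "__main__":
    minor, major = faults_during(allocate_objects)
    print(f"minor faults: {minor}, major faults: {major}")
```

Major faults are the ones that require disk access and would be the likely source of degraded performance on a device with little memory; minor faults are resolved without I/O.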

4

Conclusions

In this paper, we have presented our experimental studies on the performance of CORBA implementations on commodity PDAs and laptops. The measurements are performed using Open CORBA Benchmarking (OCB). The different tests are (i) invocation, measuring response times for empty method calls, (ii) sequence in and out, testing performance for invocations with different parameter data sizes, (iii) instances, indicating scalability by measuring response times for an increasing number of objects, and finally (iv) system performance, consisting of several tests benchmarking the system performance without CORBA functionality. During the analysis of the measurement data, three different viewpoints are used. First, we dealt with differences between the two CORBA implementations Orbix/E and MICO. Second, the influence of various hardware was analysed, and finally the impact of using two different wireless network technologies was looked into. In addition, the system resource consumption was analysed.

It turned out that Orbix/E performed significantly better than MICO in terms of lower response times for method invocations, higher throughput and better scaling behaviour regarding the number of objects. MICO generated a high number of page faults during the dispatcher test, which may have influenced the performance. Tuning MICO’s memory consumption would probably improve the performance.

Measurements using the different hardware scenarios showed that configurations with a powerful machine at one connection end-point performed much better than configurations with iPAQs at both ends. Another result was that the CORBA implementations used more time sending data than receiving the same amount of data. Also, transferring return data is more demanding than transferring parameter data. Therefore, applications returning a large amount of data should, if possible, place the most powerful machine as server.

Network analysis indicates that both Wi-Fi and Bluetooth restrict the performance of CORBA applications due to slower data transmission and higher response times for invocations. Also, the relative differences between the CORBA implementations become smaller as a result of slower networks.

The work described in this paper proves the feasibility of using CORBA as middleware on handheld devices. The performance is promising and the added overhead from CORBA is not a major concern. Newer handheld devices are powerful computing devices, enabling development of distributed systems where, for instance, cellular phones and PDAs perform an increasing amount of the data processing. PDAs are no longer just devices used to shuffle information back and forth between server machines and the handheld devices. However, several factors have a strong impact on how relatively resource-poor devices behave in wireless environments. Using these factors as input for optimizations will probably produce good results. Orbix/E is developed with resource-poor devices in mind, which, as shown, results in better performance in such environments. The promising results make it interesting to explore the use of CORBA and to develop next-generation CORBA-like middleware in scenarios involving handheld or embedded devices in domains such as mobile communications, sensor networks and ad hoc networking.

Acknowledgements

We would like to acknowledge the DITS and GT Programmes of the Norwegian Research Council (NFR) for financial support and UNINETT for providing PDAs and the

wireless equipment used in this work.

References

1. Román, M., Kon, F., Campbell, R.H.: Reflective middleware: From your desk to your hand. Distributed Systems online 2 (2001)

2. Román, M., Singhai, A., Carvalho, D., Hess, C., Campbell, R.: Integrating PDAs into distributed systems: 2K and PalmORB. In Gellersen, H.W., ed.: Proceedings of the First International Symposium on Handheld and Ubiquitous Computing (HUC 99). Number LNCS 1707 in Lecture Notes in Computer Science, Karlsruhe, Germany, Springer Verlag (1999)

3. Becker, C., Schiele, G., Gubbels, H.: BASE - a micro-kernel-based middleware for pervasive computing. In: Proceedings of IEEE International Conference on Pervasive Computing and Communications, Dallas-Fort Worth, Texas, USA (2003)

4. Puder, A.: MICO for the Palm Pilot. (1999) http://www.mico.org/pilot/.

5. Force, T.D.T.: Telecom wireless CORBA specification. Final Adopted Specification OMG Document dtc/2001-06-02, Object Management Group, Framingham, MA, USA (2001)

6. Kangasharju, J.: MIWCO - Wireless CORBA extensions for MICO. University of Helsinki. (2002)

7. IONA Technologies: Orbix/E for C, C++ and Java. (2002)

8. Puder, A., Römer, K.: MICO: An Open Source CORBA Implementation. Morgan Kaufmann (2000)

9. Arnesen, L.P.S., Valen, E.: Evaluation of CORBA implementations on handheld devices in wireless networks. Master thesis, Department of Informatics, University of Oslo, Norway (2002) In Norwegian.

10. Tůma, P., Buble, A.: Open CORBA benchmarking. In: Proceedings of the International Symposium on Performance Evaluation of Computer and Telecommunication Systems (SPECTS 2001), Orlando, Florida, USA (2001)

11. (ed.), P.K.: White paper on benchmarking version 1.0. White Paper OMG Document bench/99-12-01, Object Management Group, Benchmark PSIG, Framingham, MA, USA (1999)