The Island Problem Revisited

advertisement

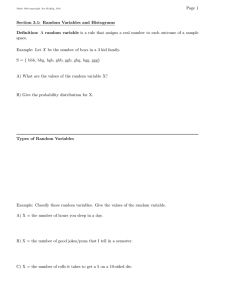

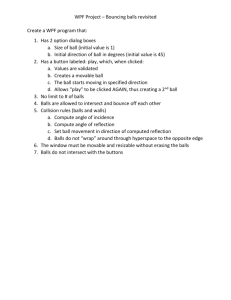

The Island Problem Revisited Halvor Mehlum1 Department of Economics, University of Oslo, Norway E-mail: halvor.mehlum@econ.uio.no March 10, 2009 1 While carrying out this research, the author has been associated with the ESOP centre at the Department of Economics, University of Oslo. ESOP is supported by The Research Council of Norway. The author is grateful to the referees and the editors for stimulating comments and suggestions. The author also wishes to thank David Balding, Philip Dawid, Jo Thori Lind, Haakon Riekeles, Tore Schweder, and to participants at the Joseph Bell Workshop in Edinburgh. Abstract I revisit the so called Island Problem of forensic statistics. The problem is how to properly update the probability of guilt when a suspect is found that has the same characteristics as a culprit. In particular, how should the search protocol be accounted for? I present the established results of the literature and extend them by considering the selection effect resulting from a protocol where only cases where there is a suspect reach the court. I find that the updated probability of guilt is shifted when properly accounting for the selection effect. Which way the shift goes depends on the exact distribution of all potential characteristics in the population. The shift is only marginal in numerical examples that have any resemblance to real world forensic cases. The Island Problem illustrates the general point that the exact protocol by which data are generated is an essential part of the information set that should be used when analyzing non-experimental data. KEY WORDS: Bayesian method, Forensic statistics, Collins case. 1 INTRODUCTION In 1968, a couple in California with certain characteristics committed a robbery. The Collins couple, who had the same characteristics as the robbers, were arrested and put on trial. In court, the prosecutor claimed that these particular characteristics would appear in a randomly chosen couple with the probability 1/12,000,000 and that the couple thus had to be guilty. The suspected couple was convicted, but The California Supreme Court later overturned the conviction (The Supreme Court of California 1969). The Collins’s case, or a stylized version of it called the “Island Problem” (Eggleston 1983), has a central place in forensic statistics literature. This problem can be formulated as a “balls in an urn” problem: An urn contains a known number of balls with different colors. Then a ball is drawn. Its color, which happens to be red, is observed. The ball then goes back to the urn. Now, a search for a red ball is carried out and a red ball is indeed found. What is the probability that the first and the second red balls are in fact the same ball? That is, what is the probability that the suspected ball really is the guilty ball? In the literature, one central theme is how to properly extract information from the circumstances of the case and the search protocol used when finding the suspect. Central contributions start with Fairley and Mosteller (1974), Yellin (1979), and Eggleston (1983) and continue with Lindley (1987), Dawid (1994), Balding and Donnelly (1995), and 1 Dawid and Mortera (1996). These authors show how changes in the assumption regarding the search procedure change the results. In addition to being a central starting point for thinking about forensic statistics, the Island Problem is a good example of how the immediate intuition about probabilities may be wrong. The variations of the Island Problem provide several illustrations of how to appropriately take account of the often subtle information in non-experimental situations. For all students of statistics the Island Problem can serve as a stimulating and challenging puzzle. In particular it can be used in lectures relating to statistics and society, where the students are invited to contemplate the statisticians role as an expert scientist trying to extract information from non-experimental situations. In this note I introduce a possible issue relating to selection in the Island Problem. My question is as follows: given that the search procedure does not always produce a suspect, and assuming that for each case with a successful search there are several cases with unsuccessful searches, what is then the appropriate analysis of the problem? In contrast to the other authors, I include in the analysis the fact that only a selected subset of criminal cases reaches the court. As in the other contributions, I consider a highly stylized and abstract version of the case. I will explain this argument using the helpful ‘balls in an urn’ analogy. I will first present two central solutions from the literature. I will then show how the analysis 2 should be modified when taking into account the fact that we are faced with a selected case. 2 URN MODELS Consider an urn containing N balls. The N balls in the urn may be a sample from an underlying population with M different colors. Now, a robbery takes place: one ball is drawn at random from the urn. All balls are equally likely to be drawn. The color of the ball is observed as being red, and the ball is put back in. Let this part of the evidence be denoted F1 = ‘first ball drawn at random is red’. The question now is: How should F1 affect our belief regarding the number of red balls in the urn? When the ball we draw at random is red, we will adjust our belief about the likely number of red balls in the urn. The only knowledge we have before picking a red ball is that the number of red balls, X, is distributed bin (N, p) with N and p known. Given the evidence F1 the distribution of X may be updated using Bayes’ formula: P (F1 |X = n) = P (X = n|F1 ) = P (X = n) P (F1 ) 3 N n pn (1 − p)(N −n) n/N p (1) By re-arranging, P (X = n|F1 ) = N −1 n−1 pn−1 (1 − p)(N −1)−(n−1) (2) which is the unconditional probability of there being n − 1 red balls among the N − 1 not observed balls. Now, a search for a red ball is conducted. Building on Balding and Donnelly (1995) and Dawid and Mortera (1996), I will first discuss two possible search procedures: search until success and random search. 2.1 Search until Success Yellin (1979) was the first to consider a procedure consisting of a search through the urn until a red ball, the suspect, is found. If no record is kept of the balls screened, no additional evidence is gained in the process of finding the second red ball. This search protocol always produces a suspect and the question of guilt G is the question of whether the second ball is identical to the first ball. The larger the number of red balls, the lower is the probability of guilt. For a given number of red balls, X = n, the probability of guilt is 1/n. As X is unknown, the probability of guilt is P (G|F1 ) = E X −1 |F1 4 Using formula (2), the probability of guilt is N X 1 − (1 − p)N 1 1 N − 1 n−1 (N −n) p (1 − p) = = P (G|F1 ) = n n−1 Np E (X|X ≥ 1) n=1 Hence, the probability of guilt P (G|F1 ) has a simple solution which happens to be identical to the reciprocal of E (X|X ≥ 1). This solution is different from the solution following from the California Supreme Court’s erroneous argument. The California Supreme Court’s interpretation of the evidence can be formulated as F0 =‘there is at least one red ball’. In that case the probability of guilt is P (G|F0 ) = E (X −1 |X ≥ 1). Given this particular relationship between the expressions for P (G|F1 ) and P (G|F0 ) it follows from Jensen’s inequality that P (G|F1 ) < P (G|F0 ). Hence compared to Yellin (1979) the California Supreme Court overstated the probability of guilt. 2.2 Random Search By design, the above search always produces a suspect and provides no additional information about the distribution of X. Another alternative, as explored by Dawid (1994), is the random search where only one ball is picked at random. If this ball is not red the question of guilt is straight away answered negatively. If, however, the randomly selected ball is indeed red, the additional evidence is F2 = ‘second ball drawn at random is red’, and the distribution of X should be 5 updated accordingly. Using Bayes’s formula we get: P (X = n|F1 ∩ F2 ) = P (X = n|F1 ) P (F2 |X = n ∩ F1 ) P (F2 |F1 ) (3) When conditioning on the number of red balls X = n, the events F1 = ‘first ball drawn at random is red’ and F2 = ‘second ball drawn at random is red’ are independent (the draws are done with replacement); it therefore follows that the numerator can be written as P (F2 |X = n ∩ F1 ) = P (F2 |X = n) = n N The denominator in (3) can be written as P (F2 |F1 ) = N N X n 1 X E (X|F1 ) P (X = n|F1 ) = nP (X = n|F1 ) = , N N N n=1 n=1 where it follows from (2) that E (X|F1 ) = 1 + (N − 1) p. Therefore (3) can be written as P (X = n|F1 ∩ F2 ) = n P (X = n|F1 ) E (X|F1 ) (4) The probability of guilt is now N X n 1 1 P (G|F1 ∩ F2 ) = P (X = n|F1 ) = n E (X|F1 ) E (X|F1 ) n=1 6 (5) As X −1 is a convex transformation for X ≥ 1, it follows from Jensen’s inequality that 1 < E X −1 |F1 E (X|F1 ) From above we know that P (G|F1 ) = E (X −1 |F1 ) hence P (G|F1 ∩ F2 ) < P (G|F1 ) . The probability of guilt of a suspect is thus lower in the random search than in search until success. The intuition is simple: when two balls drawn at random happen to be red it increases the likelihood of a large number of red balls more than if only one ball drawn at random is red. This updating assumes that we are faced with one experiment, where two balls drawn with replacement happen to be red. Thus only a fraction of first draws (robberies) will lead to a case. With the random search protocol there will therefore be a subtle selection effect of cases. It is the analysis of this selection effect that is my own contribution. 2.3 Random Search with Selection Effect I will in the following show how the analysis changes when taking account of the selection effect. As before, consider an urn containing N balls drawn from an underlying population of M colors. The urn is characterized by the joint frequency 7 of balls by color Xi (i = 1..M ). Now, one of a never ending series of draws takes place, one ball is drawn and put back in. Then a second ball is drawn. If the balls are not the same color, the case is closed and the next potential case occurs. Assume that after an unknown number of draws (i.e. potential cases), drawn from the same urn, there is a case where the first and the second ball are of the same color, red. When this case arrives the question is, as before: Are the balls identical? Let the evidence be denoted F3 = ‘The first time that both balls are of the same color they are red’. Let Xi , i ∈ [1, M ], denote the number of balls of color i, where i = 1 is the color red and where PM i=1 Xi = N , then Bayes’s formula gives P (X1 = n|F3 ) = P (X1 = n) P (F3 |X1 = n) P (F3 ) (6) The denominator P (F3 ) is the unconditional probability of the first instance of two consecutive draws of balls of the same color involves two red balls. In an urn where X1 ...XM denotes the number of balls of each of the M colors (and where X1 is the number of red balls) the probability of drawing two balls of color i is (Xi /N )2 . Given X1 ...XM the probability of the first instance of two consecutive draws of balls of the same color involving two red balls is thus 2 (X1 /N ) / M X 2 2 (Xi /N ) = X1 / i=1 M X i=1 8 Xi 2 (7) The expectation of (7) over all combinations of X1 ...XM determines the denominator in (6), P (F3 ) .Hence P (F3 ) = E X1 2 / M X ! Xi 2 i=1 The numerator in (6) P (F3 |X1 = n) follows when taking the expectation of (7) conditioning on the number of red beads X1 = n P (F3 |X1 = n) = E X1 2 / M X ! Xi 2 |X1 = n i=1 =E n2 / M X ! Xi 2 |X1 = n i=1 It therefore follows that (6) can be written as P 2 X |X = n E n2 / M i 1 i=1 , P (X1 = n|F3 ) = P (X1 = n) P M 2 2 E X1 / i=1 Xi (8) This expression can generally not be simplified further. In order to get an idea on how updating based on F3 compares to updating based on F1 ∩ F2 I will first look at some numerical illustrations and then at some approximations for large N . 2.4 Numerical Illustrations Assume that the underlying distribution amounts to a multinomial distribution where pi is the probability of color i. I will consider two problems: 9 1. The Tiny island, where N = 5 and P (red ball) = p1 = 1/6 2. The Large island, where N = 100 and P (red ball) = p1 = 0.004 The parameters of the Large island corresponds exactly to Eggleston’s (1983) Island Problem. In each of these problems the marginal distribution for red balls is fixed by bin(N, p = p1 ). For each of these problems I look at three different sets of assumptions regarding the probabilities of colors other than red. i) Red and green, where M = 2 and p2 = 1 − p1 ii) Red and palette, where M is enormous and p2 = · · · = pM = (1 − p1 ) / (M − 1) ≈ 0 iii) All equal, where p1 = p2 = · · · = pM = 1/M (M = 6 in the Tiny island and M = 250 in the Large island) The calculations of the results for i) and ii) are straightforward. The calculation for iii) is more demanding. By construction the combination Tiny island and iii) is identical to a throw of five dice. In gambling it is known under the name ”poker dice”, a variant of yatzee. Poker dice is analyzed in the book in gambling by Epstein (1967 p154) and the essential probabilities can be taken from there. For the combination Large island and iii) some CPU time is needed and the complete calculations involve integrating over all 190 mill. partitions of the number 100. I 10 approximate by integrating over the 600,000 partitions with the highest probability. These account for 1 − 10−6 of the probability mass. The results for the probability of guilt are summarized in Table 1. The row at the bottom includes, as a reference, the result of the random search P (G|F1 ∩ F2 ) from (5). Table 1: Probability of guilt, P (G|F3 ) in the iterated case and in the reference. Tiny island Large island i) Red and green 0.574 0.712 ii) Red and palette 0.674 0.722 iii) All equal 0.643 0.719 Random search, P (G|F1 ∩ F2 ) 0.600 0.716 reference The results show that the probability of guilt P (G|F3 ) = E (X −1 |F3 ) indeed varies with the distributional assumption regarding the distribution of colors other than red in the underlying population. The probability of guilt may both be above and below the reference case of random search P (G|F1 ∩ F2 ). In order to understand the logic behind the result for a particular parameter configuration one needs to consider all the possible combinations of colors that the configuration can generate. The essential insight is gained by looking at the distribution for the updated distribution of X1 . For the Tiny island the updated distribution P (X1 = n|F3 ) is given in Figure 1. The lower density is the prior 11 Figure 1: Tiny island, updating the distribution. P 0.5 . .... . . . .... . . ... ... ................ .. . . ........... ........ ... ...... ................. ....... ... ............. ... .... .. ............. . . ........... . . . . . . . . . . . ... .. . . .. ............... . . ... ... ... . . . ..... . . . ... ... . . . . ..... . .. ... ..... .. . . . . . . . ... ... ... . . ....... ........ . ... .... ..... ... . ... . ... .... . . . ... ..... . ........... . . ... . .. .. .. . ...... . . ... . ... ... .. ... ...... . ... . ... . ..... .. ... .. ... ... . . . ..... ... . .. .. ... ..... ... . ... . ..... ... . ... ... . ..... ... .. ... . ... ..... ... . .. .. ..... ... . ... .... ..... ... . ... . ..... ... .. ... ...... ... ..... ... . ... .... ..... ... . ...... ..... ... .. ... . ...... ... . ........ ...... ... . . .... ...... ..... . . ....... ...... . ....... . . ...... ....... . . ......... ...... ...... ....... . .. ...... ....... . . ....... ...... . . ........ . . ...... ...... ....... . .... ...... ........ . .... ...... ........ . ...... ...... ........ . ........ ....... ........ ....... ... ...................... ........ .. ...... ........ . ...................... ... ........ ......................................................................... ................... ......................................................................... ............ ......... ii) 0.4 0.3 iii) (reference) i) 0.2 (prior) 0.1 1 2 3 4 n 5 P (X1 = n), which is common for all the parameter configurations. Consider the updated density in configuration i) (Red and green). This density is adjusted most down for X1 = 1 while it is adjusted most up for X1 = 3. These adjustments follows from the Bayesian updating given by (6) P (X1 = n|F3 ) = P (X1 = n) P (F3 |X1 = n) P (F3 ) (6) The likelihood of F3 = ‘The first time that both balls are of the same color they are red’ in the numerator is low when there is one red (X1 = 1) and four green balls, while the likelihood is much higher when there is three red balls (X1 = 3) and two green. The updated density for ii) (Red and palette) deviates less from the prior. The reason is that the likelihood of F3 is quite high even when X1 = 1 when all other 12 colors are unique. The updated distribution i) is the one that moves most to the right, giving more probability mass to the large values of X1 . The probability of guilt P (G|F3 ) = E (X −1 |F3 ) is therefore the lowest in case i) . The updated distribution in case ii) is the one that moves least to the right, giving the least probability mass to large number of X1 . Therefore the probability of guilt in case i) is the lowest while the probability of guilt in case ii) is the highest. 2.5 Known Number of Iterations In the above analysis it was assumed that, the number of iterations until a case was found, with a second ball of the same color as the first ball, was unknown. In principle the information regarding the number of iterations could be available. Let R denote the number of iterations until a case was found. As before let the first case involve two red balls. Given a combination of colors X A (where the number of balls of color i is XiA , i ∈ [1, M ] and P XiA = N ), the likelihood of experiencing F4 = [R − 1 potential cases and then a red case] is P F4 |X A = 1− M X !R−1 XiA /N 2 X1A /N 2 (9) i=1 Using Bayes’s formula this likelihood can be used to update the distribution over all possible X A s in a given context. The updated probability distribution over 13 X A s can in turn be marginalized to achieve an updated probability distribution over red balls X1 , which in turn can be used to calculate the probability of guilt. I have done this exercise for all of the three Tiny islands. The updated probability of guilt is plotted in Figure 2 . Three distinct features are apparent. First, when Figure 2: Probability of guilt and the number of iterations. P 1.0 ................................................ ...................................... . . . . . . . . . . . . . ........................... ii) Red and palette................................................................. . . . . . . . . . . . . . . . . . . . . 0.8 0.6 . ... ..... ............. .... ............ .... ........... .......... .... . . . . . . . . . . . . .. . ......... .... ......... .... ........ .... ........ .... ........ . . . . . . . . . . .. ... ....... ... ....... ...... . . . . . ...... ....... . . . . . . . . .... . ..... . . ...... . . ...... ..... . ......... .. ..... .. ...... . ....... ....... ....... ....... ....... ....... ....... ....... ....... ....... ....... ....... ....... ....... ....... ....... ....... ....... ....... ....... ....... ....... ....... ....... ....... ....... iii) All equal i) Red and green 0.4 0.2 R 10 20 30 40 the number of iterations is exactly equal to one, the probability of guilt is in all cases equal to 0.600. This is no coincidence. Looking at (9) it is clear that the distributions of other colors than red do not matter when R = 1. Hence, N and p1 are the only facts that matter and in all three cases N = 5 and p1 = 1/6. As seen above, 0.600 is also the probability of guilt in the reference case of random search. The reference is an experiment where: ”we are faced with one experiment, where two balls drawn with replacement by chance happen to be red.” Another 14 way of putting it is that the number of iterations before two red balls are drawn happen to be R = 1. This illustrates again the difference between the reference experiment and the experiment where it is assumed that the case is a selected case after a number of iterations. Second, both for ”Red and palette” and ”All equal” the probability of guilt increases and approaches unity as R increases. The reason for this asymptotic behavior is that in both configurations the Bayesian updating, when seeing a long range of iterations, gives large probability to X A s that are such that there is one out of each color. Such X A s are possible both in ”Red and palette” and ”All equal”. One out of each color implies that there is only one red ball, hence sure guilt. Third, the probability of guilt with ”Red and green” declines and settles at a level of around 0.45. As there are only two colors in this configuration, having just one of each color is not possible. As R increases the likelihood of only two alternatives stand out: a) X1 = 2 and X2 = 3 or b) X1 = 3 and X2 = 2. Hence, in this configuration the probability of guilt settles at a level between 1/3 and 1/2. 3 DISCUSSION The analysis of the Island Problem illustrates the general point that the interpretation of statistical data must take into account exactly how the data were collected. 15 What is the protocol and where does it end? In the solution to the Island Problem, Yellin (1979) only considered the part of the evidence relating to the fact that the guilty happened to have certain characteristics. Later Dawid(1994) brought into the picture the fact that the search had produced a suspect with the same characteristics. Both Yellin and Dawid start from the premise that the case is given. My argument is that the case may itself be selected in a stochastic process. Only cases where there is a suspect with identical characteristics as the culprit are brought before the court. The discussion started from the Collins Case, a classic case in forensic statistics. In addition to illustrating core issues of forensic statistics, like ”the prosecutors fallacy”, the Collins Case also illustrates some important principles in Bayesian learning and Bayesian reasoning. The present discussion is not meant as a substantial contribution to forensic statistics, but rather as an elaboration on a fascinating stylized case of Bayesian reasoning. In fact, the salience of the selection effect would only be marginal in stylized forensic problems of realistic size. In Eggleston’s (1983) Island Problem, for example, as captured by Large island in Table 1 above, N is only 100. Already at that modest population size the difference between the different parameter configurations is very small. If N increases further the selection effect would not matter at all. To put it loosely, when the number of balls is large and when there is a 16 large number of colors, each with small probabilities, then P Xi 2 can be treated as a constant independent of X1 for small X1 . Then, it follows that (8), for small n, can be simplified as follows P (X1 = n|F3 ) ≈ P (X1 = n) n2 = P (X1 = n|F1 ∩ F2 ) . E (X12 ) (10) The last equality follows as P (X1 = n|F1 ∩ F2 ) = P (X1 = n) P ((F1 ∩ F2 ) |X1 = n) n2 /N 2 = P (X1 = n) P (F1 ∩ F2 ) E (X12 /N 2 ) The approximation (10) is accurate when red is quite rare, and when there is a large number of other features in the population. That the feature red is rare is an implicit condition for the problem to be relevant in the context of evidence in a court case. References Balding; D. J., and Donnelly, P. (1995), “Inference in Forensic Identification,” Journal of the Royal Statistical Society. Series A (Statistics in Society), 158 (1), 21-53. Dawid, A. P. (1994), “The Island Problem: Coherent Use of Identification Evi- 17 dence,” Aspects of Uncertainty. A Tribute to D. V. Lindley, Wiley, New York, 159–170. Dawid, A. P., and. Mortera, J. (1996), “Coherent Analysis of Forensic Identification Evidence,” Journal of the Royal Statistical Society. Series B (Methodological), 58 (2), 425-443. Eggleston, R. (1983), Evidence, proof and probability 2nd ed. , London: Weidenfeld and Nicolson. Epstein, R. A. (1967), The Theory of Gambling and Statistical Logic, Academic Press, New York. Fairley, W. B., and Mosteller, F. (1974) “A Conversation about Collins,” The University of Chicago Law Review, 41(2). pp. 242-253. Lindley, D.V., (1987) “The probability approach to the treatment of uncertainty in artificial intelligence and expert systems,” Statistical Science, 2, 17-24. The Supreme Court of California (1969) “People v. Collins,” reprinted in Fairley, W. B. and Mosteller, F. (eds.) (1977) Statistics and Public Policy, Reading, Mass , 1977. pp. 355-368. Yellin, J. (1979) “Review of Evidence, Proof and Probability (by R. Eggleston)” Journal of Economic Literature, 17, 583-584. 18