MAT4701

advertisement

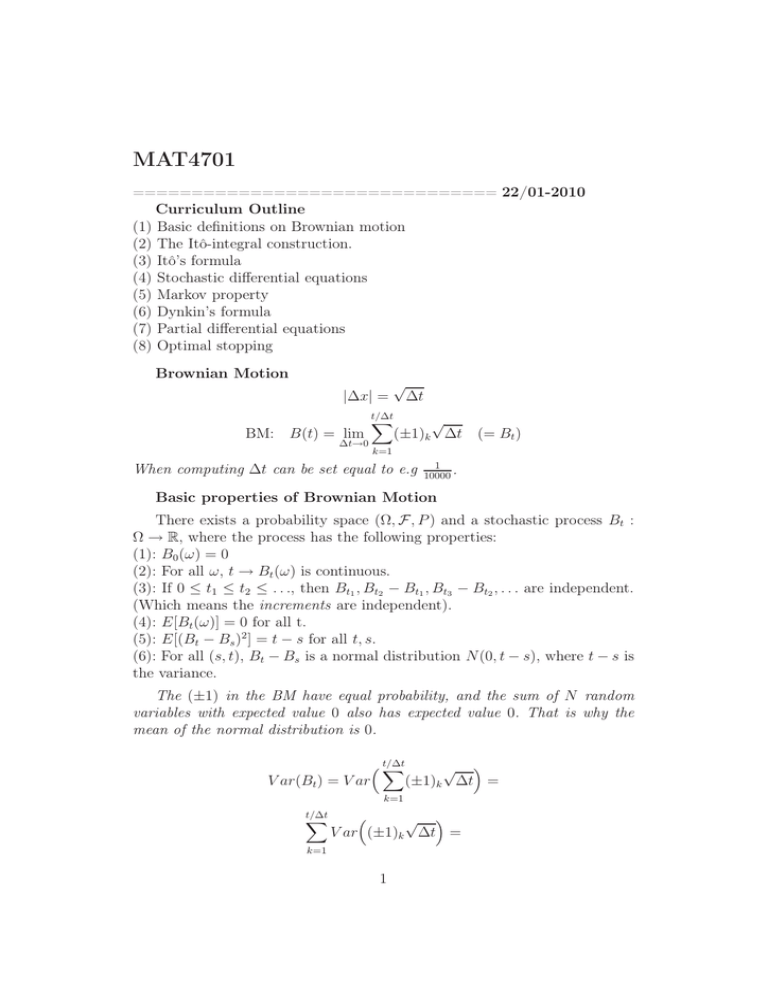

MAT4701

=============================== 22/01-2010

Curriculum Outline

(1) Basic definitions on Brownian motion

(2) The Itô-integral construction.

(3) Itô’s formula

(4) Stochastic differential equations

(5) Markov property

(6) Dynkin’s formula

(7) Partial differential equations

(8) Optimal stopping

Brownian Motion

BM: $$B(t) = \lim_{\Delta t \to 0} \sum_{k=1}^{t/\Delta t} (\pm 1)_k \sqrt{\Delta t} \quad (= B_t), \qquad |\Delta x| = \sqrt{\Delta t}.$$

When computing, $\Delta t$ can be set equal to e.g. $1/10000$.

Basic properties of Brownian Motion

There exists a probability space $(\Omega, \mathcal{F}, P)$ and a stochastic process $B_t : \Omega \to \mathbb{R}$ with the following properties:

(1): $B_0(\omega) = 0$.

(2): For all $\omega$, $t \mapsto B_t(\omega)$ is continuous.

(3): If $0 \le t_1 \le t_2 \le \dots$, then $B_{t_1}, B_{t_2} - B_{t_1}, B_{t_3} - B_{t_2}, \dots$ are independent (which means the increments are independent).

(4): $E[B_t(\omega)] = 0$ for all $t$.

(5): $E[(B_t - B_s)^2] = t - s$ for all $t \ge s$.

(6): For all $s \le t$, $B_t - B_s$ is normally distributed, $N(0, t - s)$, where $t - s$ is the variance.

The signs $(\pm 1)_k$ in the BM are equally likely, and a sum of random variables with expected value 0 also has expected value 0. That is why the mean of the normal distribution is 0.

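$$\operatorname{Var}(B_t) = \operatorname{Var}\Big(\sum_{k=1}^{t/\Delta t} (\pm 1)_k \sqrt{\Delta t}\Big) = \sum_{k=1}^{t/\Delta t} \operatorname{Var}\big((\pm 1)_k \sqrt{\Delta t}\big) = \sum_{k=1}^{t/\Delta t} \operatorname{Var}\big((\pm 1)_k\big)\big(\sqrt{\Delta t}\big)^2 = \sum_{k=1}^{t/\Delta t} (1)(\Delta t) = \frac{t}{\Delta t} \cdot \Delta t = t,$$
where the second equality uses that the steps are independent.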

This is basically a verification of property (5), where s = 0.

We know that the limit is normally distributed due to the central limit theorem.
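A minimal simulation sketch of the random-walk construction and of the variance computation, assuming NumPy. `random_walk_path` builds one path from $t/\Delta t$ steps of size $\pm\sqrt{\Delta t}$; `random_walk_endpoint` samples the walk at time $t$ many times, using that the number of $+1$ steps is Binomial$(n, 1/2)$, which is the same as summing the signs. The function names, the seed and the parameter values are illustrative assumptions.

```python
import numpy as np

def random_walk_path(t, dt, rng):
    """One approximate Brownian path on [0, t]: partial sums of (+/-1) * sqrt(dt)."""
    n_steps = int(round(t / dt))
    steps = rng.choice([-1.0, 1.0], size=n_steps) * np.sqrt(dt)
    return np.concatenate([[0.0], np.cumsum(steps)])   # B_0 = 0

def random_walk_endpoint(t, dt, n_paths, rng):
    """Samples of the walk at time t. With K = number of +1 steps ~ Binomial(n, 1/2),
    the sum of the n signs is 2K - n, so the individual steps need not be stored."""
    n_steps = int(round(t / dt))
    k = rng.binomial(n_steps, 0.5, size=n_paths)
    return (2 * k - n_steps) * np.sqrt(dt)

rng = np.random.default_rng(0)
path = random_walk_path(t=1.0, dt=1e-4, rng=rng)        # dt = 1/10000 as in the notes
samples = random_walk_endpoint(t=2.0, dt=1e-4, n_paths=100_000, rng=rng)
print(samples.mean(), samples.var())                    # approximately 0 and t = 2
```

The sample variance should sit close to $t$, matching property (5) with $s = 0$.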

Stochastic integration

Riemann integration uses the same basic idea that we want to use in stochastic integration:
$$\int_a^b f(x)\,dx \approx \sum_i f(x_i^*)\,\Delta x_i.$$

For a stochastic integral, let $X_t(\omega)$ be a stochastic process and $B_t(\omega)$ a Brownian motion:
$$\int_a^b X_t(\omega)\,dB_t(\omega) \approx \sum_i X_{t_i^*}(\omega)\big(B_{t_{i+1}}(\omega) - B_{t_i}(\omega)\big).$$

We want the limit to exist as $\max_i |t_{i+1} - t_i| \to 0$. But this turns out to be “impossible” (except in some very specific cases).

Example.

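Let $0 = t_0 \le t_1 \le t_2 \le \dots \le t_N = T$. We consider the sum
$$\sum_i B_{t_i^*}\big(B_{t_{i+1}} - B_{t_i}\big).$$

• Case 1. $t_i^* = t_i$ (leftmost point).
$$E\Big[\sum_i B_{t_i}\big(B_{t_{i+1}} - B_{t_i}\big)\Big] = \sum_i E\big[B_{t_i}\big(B_{t_{i+1}} - B_{t_i}\big)\big].$$
Since $B_{t_i}$ and $B_{t_{i+1}} - B_{t_i}$ are independent (independent increments),
$$\sum_i E\big[B_{t_i}\big]\,E\big[B_{t_{i+1}} - B_{t_i}\big] = 0.$$

• Case 2. $t_i^* = t_{i+1}$ (rightmost point). We want to compute
$$E\Big[\sum_i B_{t_{i+1}}\big(B_{t_{i+1}} - B_{t_i}\big)\Big].$$
In this case $B_{t_{i+1}}$ and $B_{t_{i+1}} - B_{t_i}$ are not independent. However, we can use a little trick and add and subtract $B_{t_i}$:
$$E\Big[\sum_i \big(B_{t_{i+1}} - B_{t_i} + B_{t_i}\big)\big(B_{t_{i+1}} - B_{t_i}\big)\Big] = E\Big[\sum_i \big(B_{t_{i+1}} - B_{t_i}\big)^2\Big] + E\Big[\sum_i B_{t_i}\big(B_{t_{i+1}} - B_{t_i}\big)\Big].$$
We observe that the second term on the right-hand side is zero, as we showed in Case 1. Hence
$$E\Big[\sum_i \big(B_{t_{i+1}} - B_{t_i}\big)^2\Big] = \sum_i E\big[\big(B_{t_{i+1}} - B_{t_i}\big)^2\big] = \sum_i \Delta t_i = T.$$

If the limit of $\sum_i B_{t_i^*}\big(B_{t_{i+1}} - B_{t_i}\big)$ as $\max_i |t_{i+1} - t_i| \to 0$ existed independently of the choice of $t_i^*$, then the limit would have to have expected value 0 and $T$ (and everything in between), which is impossible.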
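The two cases can be checked by simulation. The sketch below (assuming NumPy; the function name, seed and sizes are illustrative) draws independent increments $\Delta B_i \sim N(0, \Delta t)$ on a uniform partition, forms both endpoint sums for each path and averages over paths: the left-point average should be near 0 and the right-point average near $T$.

```python
import numpy as np

def endpoint_sums(T, n_steps, n_paths, rng):
    """Left-point and right-point sums sum_i B_{t*_i} (B_{t_{i+1}} - B_{t_i}), one per path."""
    dt = T / n_steps
    dB = rng.normal(0.0, np.sqrt(dt), size=(n_paths, n_steps))      # independent increments
    B = np.concatenate([np.zeros((n_paths, 1)), np.cumsum(dB, axis=1)], axis=1)
    left = np.sum(B[:, :-1] * dB, axis=1)     # t*_i = t_i      (Case 1)
    right = np.sum(B[:, 1:] * dB, axis=1)     # t*_i = t_{i+1}  (Case 2)
    return left, right

rng = np.random.default_rng(1)
left, right = endpoint_sums(T=1.0, n_steps=200, n_paths=10_000, rng=rng)
print(left.mean(), right.mean())              # approximately 0 and T = 1
```

For each path, `right - left` equals $\sum_i (\Delta B_i)^2$, which is exactly the extra term from the add-and-subtract trick in Case 2.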

Solution

Basic idea: fix the approximation point.

(1) $t_i^* = t_i$ (Itô integral).

(2) $t_i^* = \frac{t_i + t_{i+1}}{2}$ (Stratonovich integral).
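A pathwise sketch of the difference between the two choices, assuming NumPy and the standard closed forms $\int_0^T B\,dB = \frac{1}{2}(B_T^2 - T)$ (Itô) and $\int_0^T B \circ dB = \frac{1}{2}B_T^2$ (Stratonovich), which are not derived in this section. The path is simulated on a grid twice as fine as the partition so that the midpoints $\frac{t_i + t_{i+1}}{2}$ are available; grid size and seed are illustrative.

```python
import numpy as np

rng = np.random.default_rng(2)
T, n = 1.0, 200_000                        # n intervals in the partition
dt_fine = T / (2 * n)                      # the fine grid also contains every midpoint
dB_fine = rng.normal(0.0, np.sqrt(dt_fine), size=2 * n)
B_fine = np.concatenate([[0.0], np.cumsum(dB_fine)])

B_left = B_fine[0:-1:2]                    # B at t_i
B_mid = B_fine[1::2]                       # B at (t_i + t_{i+1}) / 2
B_right = B_fine[2::2]                     # B at t_{i+1}
dB = B_right - B_left

ito = np.sum(B_left * dB)                  # t*_i = t_i
strat = np.sum(B_mid * dB)                 # t*_i = midpoint
B_T = B_fine[-1]
print(ito, (B_T**2 - T) / 2)               # Itô sum is close to (B_T^2 - T) / 2
print(strat, B_T**2 / 2)                   # midpoint sum is close to B_T^2 / 2
```

On the same path the two sums differ by roughly $T/2$, so the choice of approximation point changes the integral itself, not just its discretisation error.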

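Sigma-algebras: $\mathcal{F}$ is a σ-algebra on $\Omega$ if

($\Sigma_1$) $\emptyset \in \mathcal{F}$,

($\Sigma_2$) $F \in \mathcal{F} \Rightarrow F^c \in \mathcal{F}$,

($\Sigma_3$) $F_1, F_2, \dots \in \mathcal{F} \Rightarrow \bigcup_{i=1}^{\infty} F_i \in \mathcal{F}$.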

σ-algebras generated by sets

C - a collection/family of sets.

σ(C) = the smallest σ-algebra containing all the sets in C.

One says σ(C) is the σ-algebra generated by C.
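On a finite $\Omega$, $\sigma(\mathcal{C})$ can be computed by brute force: start with the sets in $\mathcal{C}$ together with $\emptyset$ and $\Omega$, and keep adding complements and pairwise unions until nothing new appears (intersections then come for free by De Morgan). The Python sketch below is illustrative only; `generate_sigma_algebra` is a hypothetical helper, tried on the collection from Exercise 2.18 a).

```python
from itertools import combinations

def generate_sigma_algebra(omega, collection):
    """Smallest sigma-algebra on the finite set `omega` containing every set in `collection`.
    On a finite set, closure under complements and pairwise unions is enough."""
    omega = frozenset(omega)
    sets = {frozenset(), omega} | {frozenset(c) for c in collection}
    while True:
        new = {omega - a for a in sets} | {a | b for a, b in combinations(sets, 2)}
        if new <= sets:                     # nothing new: the family is closed
            return sets
        sets |= new

# Exercise 2.18 a): Omega = {1, ..., 5}, U = {{1, 2, 3}, {3, 4, 5}}
H_U = generate_sigma_algebra(range(1, 6), [{1, 2, 3}, {3, 4, 5}])
print(sorted(sorted(s) for s in H_U))
```

Only sets that are forced by the closure rules are ever added, which is why the result is the smallest such σ-algebra.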

Random variables.

X : Ω → R.

$\sigma(X)$ = the smallest σ-algebra containing all sets of the form $F = X^{-1}(A)$, where $A$ is any Borel set.

σ−algebras generated by a stochastic process

$X_t : \Omega \to \mathbb{R}$.

$\sigma(X_s, s \le t)$ = the smallest σ-algebra containing all sets of the form
$$\{\omega \mid X_{t_1} \in A_1, X_{t_2} \in A_2, \dots, X_{t_n} \in A_n\},$$
where $t_1, t_2, \dots, t_n \le t$ and $A_1, \dots, A_n$ are arbitrary Borel sets.

Important: $\mathcal{F}_t = \sigma(B_s, s \le t)$, the σ-algebra generated by the Brownian motion. A stochastic process $X_t$ is called $\mathcal{F}_t$-adapted iff $X_t$ is $\mathcal{F}_t$-measurable for all $t$.

What does it “mean” that $X_t$ is $\mathcal{F}_t$-adapted?

Result: If $X_t$ is $\mathcal{F}_t$-measurable, then we can find a sequence $f_t^n$ such that
$$X_t = \lim_{n \to \infty} f_t^n \quad \text{a.e.},$$
where
$$f_t^n = \sum_{i=1}^{N_n} g_1^{(i)}\big(B_{t_1^n}\big)\, g_2^{(i)}\big(B_{t_2^n}\big) \cdots g_m^{(i)}\big(B_{t_m^n}\big),$$
the $g$'s are Borel functions and all times are $\le t$.

Hence, if $\omega$ is known (i.e. which sample path we are on), we can in principle find the value of $X_t$ from the values $B_s(\omega)$, $0 \le s \le t$. Conclusion: $\mathcal{F}_t$ contains all “information” up to time $t$.

For instance,
$$X_t = B_t \sin\big(B_{t/2}\big) + B_{t/3}^2$$
is $\mathcal{F}_t$-measurable, since all the times involved are at most $t$. On the other hand,
$$X_t = B_{2t}$$
is not $\mathcal{F}_t$-measurable, since it contains information from the “future” ($2t > t$).

Itô-integral. Construction.

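$$\int_S^T f(t, \omega)\,dB_t(\omega)$$

We choose $S, T$. Normally $S = 0$.

$\mathcal{V} = \mathcal{V}[S, T]$ is the class of functions $f(t, \omega) : [S, T] \times \Omega \to \mathbb{R}$ such that:

(i) $f(t, \omega)$ is $\mathcal{B} \times \mathcal{F}$-measurable,

(ii) $f(t, \omega)$ is $\mathcal{F}_t$-adapted,

(iii) $E\Big[\int_S^T f(t, \omega)^2\,dt\Big] < \infty$ (Lebesgue integral).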

Elementary functions

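$\phi \in \mathcal{V}$ is elementary iff
$$\phi(t, \omega) = \sum_{j=1}^{N-1} e_j(\omega)\, I_{[t_j, t_{j+1})}(t),$$
where $I$ is the indicator function and $S = t_1 \le t_2 \le \dots \le t_N = T$.

Note: $e_j(\omega)$ must be $\mathcal{F}_{t_j}$-measurable. The Itô integral of an elementary function is defined as
$$\int_S^T \phi(t, \omega)\,dB_t(\omega) = \sum_{j=1}^{N-1} e_j(\omega)\big(B_{t_{j+1}} - B_{t_j}\big).$$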

Itô-isometry.

Holds for any object in $\mathcal{V}$. We will prove it for an elementary function:
$$E\Big[\Big(\int_S^T \phi(t, \omega)\,dB_t(\omega)\Big)^2\Big] = E\Big[\int_S^T \phi^2(t, \omega)\,dt\Big].$$
The left side involves a stochastic integral, whereas the right side is an ordinary Lebesgue integral.

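Proof: Substituting the definition of the Itô integral,
$$E\Big[\Big(\int_S^T \phi(t, \omega)\,dB_t(\omega)\Big)^2\Big] = E\Big[\Big(\sum_j e_j(\omega)\big(B_{t_{j+1}} - B_{t_j}\big)\Big)^2\Big] = E\Big[\sum_{i,j} e_i(\omega)e_j(\omega)\big(B_{t_{i+1}} - B_{t_i}\big)\big(B_{t_{j+1}} - B_{t_j}\big)\Big].$$
From here on, we consider three separate cases.

• Case 1. $i < j$. Then:

$e_i(\omega)$ is $\mathcal{F}_{t_j}$-measurable,

$e_j(\omega)$ is $\mathcal{F}_{t_j}$-measurable,

$B_{t_{i+1}} - B_{t_i}$ is $\mathcal{F}_{t_j}$-measurable,

since $\mathcal{F}_{t_i} \subset \mathcal{F}_{t_j}$ and $t_{i+1} \le t_j$ when $i < j$. Then $B_{t_{j+1}} - B_{t_j}$ is independent of the rest of the term:
$$E\big[e_j(\omega)e_i(\omega)\big(B_{t_{i+1}} - B_{t_i}\big)\big(B_{t_{j+1}} - B_{t_j}\big)\big] = E\big[e_j(\omega)e_i(\omega)\big(B_{t_{i+1}} - B_{t_i}\big)\big]\,E\big[B_{t_{j+1}} - B_{t_j}\big] = 0.$$

• Case 2. $i > j$. Then $B_{t_{i+1}} - B_{t_i}$ is independent of the rest, and the term vanishes in the same way.

• Case 3. $i = j$. Only these diagonal terms remain, and $e_i(\omega)^2$ (which is $\mathcal{F}_{t_i}$-measurable) is independent of $B_{t_{i+1}} - B_{t_i}$:
$$E\Big[\Big(\int_S^T \phi(t, \omega)\,dB_t(\omega)\Big)^2\Big] = E\Big[\sum_{i} e_i(\omega)^2\big(B_{t_{i+1}} - B_{t_i}\big)^2\Big] = \sum_{i} E\big[e_i(\omega)^2\big]\,E\big[\big(B_{t_{i+1}} - B_{t_i}\big)^2\big] = \sum_{i} E\big[e_i(\omega)^2\big]\,\Delta t_i = E\Big[\sum_{i} e_i(\omega)^2\,\Delta t_i\Big] = E\Big[\int_S^T \phi^2(t, \omega)\,dt\Big].$$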

Q.E.D
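A Monte Carlo sketch of the isometry for one elementary function, assuming NumPy. As an illustrative choice (not from the notes) we take $e_j(\omega) = \sin(B_{t_j}(\omega))$, which is $\mathcal{F}_{t_j}$-measurable, on a uniform partition of $[0, 1]$; both sides of the isometry are estimated from the same simulated paths.

```python
import numpy as np

rng = np.random.default_rng(3)
t = np.linspace(0.0, 1.0, 11)                   # partition S = 0 < ... < T = 1
dt = np.diff(t)
n_paths = 200_000
dB = rng.normal(0.0, np.sqrt(dt), size=(n_paths, len(dt)))
B = np.concatenate([np.zeros((n_paths, 1)), np.cumsum(dB, axis=1)], axis=1)

e = np.sin(B[:, :-1])                           # e_j = sin(B_{t_j}) is F_{t_j}-measurable
ito_integral = np.sum(e * dB, axis=1)           # sum_j e_j (B_{t_{j+1}} - B_{t_j})

lhs = np.mean(ito_integral**2)                  # estimate of E[(int phi dB)^2]
rhs = np.mean(np.sum(e**2 * dt, axis=1))        # estimate of E[int phi^2 dt]
print(lhs, rhs, np.mean(ito_integral))          # lhs close to rhs; mean close to 0
```

If `e` were evaluated at the right endpoints instead (`B[:, 1:]`), the measurability condition would fail and the two estimates would no longer agree.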

Lemma

If $f \in \mathcal{V}$, then there exists a sequence of elementary functions $\phi_n$, $n = 1, 2, \dots$, such that
$$E\Big[\int_S^T (f - \phi_n)^2\,dt\Big] \to 0 \quad \text{as } n \to \infty.$$

Usage of the lemma:

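Definition of the Itô integral for $f \in \mathcal{V}$: find a sequence $\phi_n$ as above and put
$$\int_S^T f\,dB_t = \lim_{n \to \infty} \int_S^T \phi_n\,dB_t,$$
where the limit is taken in $L^2(P)$. On the right we are integrating elementary functions, i.e. each integral is a sum.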

Questions:

(1) Why is this well defined?

(2) Why does the limit exist in the first place?

Answers:

(2) Let
$$\psi_n = \int_S^T \phi_n\,dB_t.$$
We consider
$$E\big[(\psi_n - \psi_m)^2\big] = E\Big[\Big(\int_S^T \phi_n\,dB_t - \int_S^T \phi_m\,dB_t\Big)^2\Big].$$
The difference between elementary functions is a new elementary function, and the integral of elementary functions is linear, so this equals
$$E\Big[\Big(\int_S^T (\phi_n - \phi_m)\,dB_t\Big)^2\Big].$$
Applying the Itô isometry,
$$= E\Big[\int_S^T (\phi_n - \phi_m)^2\,dt\Big] = E\Big[\int_S^T (\phi_n - f + f - \phi_m)^2\,dt\Big] \le E\Big[\int_S^T 4(\phi_n - f)^2 + 4(f - \phi_m)^2\,dt\Big] \to 0,$$
where we used $(x + y)^2 \le 4x^2 + 4y^2$. From the lemma we know this tends to 0 as $m, n \to \infty$.

This proves that $\psi_n$ is a Cauchy sequence in $L^2(P)$. From measure theory we know that all $L^p$ spaces are complete. Since $L^2$ is complete, the Cauchy sequence converges. Therefore the limit exists.

Answers:

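(1) Why is it well defined? Let $\phi_n \to f$ and $\Phi_n \to f$ be two approximating sequences as in the lemma. By the Itô isometry,
$$E\Big[\Big(\int_S^T \phi_n\,dB_t - \int_S^T \Phi_n\,dB_t\Big)^2\Big] = E\Big[\Big(\int_S^T (\phi_n - \Phi_n)\,dB_t\Big)^2\Big] = E\Big[\int_S^T (\phi_n - \Phi_n)^2\,dt\Big] = E\Big[\int_S^T (\phi_n - f + f - \Phi_n)^2\,dt\Big].$$
Again, since $(x + y)^2 \le 4x^2 + 4y^2 = 4(x^2 + y^2)$,
$$\le 4\Big(E\Big[\int_S^T (\phi_n - f)^2\,dt\Big] + E\Big[\int_S^T (f - \Phi_n)^2\,dt\Big]\Big) \to 0.$$
This means the two sequences of integrals must converge to the same object.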

Proof of Lemma.

• Step 1:

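Assume that $g \in \mathcal{V}$ is bounded and continuous. Put
$$\phi_n(t, \omega) = \sum_j g(t_j, \omega)\, I_{[t_j, t_{j+1})}(t),$$
where the partition gets finer as $n \to \infty$. Since $g$ is continuous for each $\omega$, $\int_S^T (g - \phi_n)^2\,dt \to 0$ pointwise in $\omega$ as $n \to \infty$. Moreover,
$$\int_S^T (g - \phi_n)^2\,dt \le \int_S^T (2C)^2\,dt \le \tilde{C},$$
where $|g| \le C$ since $g$ is bounded, and $\tilde{C}$ is some constant. So, by the bounded convergence theorem, we know that
$$E\Big[\int_S^T (g - \phi_n)^2\,dt\Big] \to 0.$$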
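Step 1 can be illustrated numerically, assuming NumPy, with the illustrative choice $g(t, \omega) = \sin(B_t(\omega))$, which is bounded, continuous and adapted. The sketch freezes $g$ at the left endpoint $t_j$ of each of $n$ equal subintervals and estimates $E\big[\int_S^T (g - \phi_n)^2\,dt\big]$ on a fine grid; grid and sample sizes are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(4)
S, T, fine, n_paths = 0.0, 1.0, 4096, 500          # fine grid for the dt-integral
dt = (T - S) / fine
dB = rng.normal(0.0, np.sqrt(dt), size=(n_paths, fine))
B = np.concatenate([np.zeros((n_paths, 1)), np.cumsum(dB, axis=1)], axis=1)[:, :-1]
g = np.sin(B)                                       # g(t, omega) on the fine grid

def l2_error(n):
    """Estimate of E[int_S^T (g - phi_n)^2 dt] with n equal subintervals."""
    block = fine // n                               # fine points in each [t_j, t_{j+1})
    phi = np.repeat(g[:, ::block], block, axis=1)   # phi_n = g(t_j, omega) on [t_j, t_{j+1})
    return np.mean(np.sum((g - phi) ** 2, axis=1) * dt)

errors = [l2_error(n) for n in (4, 16, 64, 256)]
print(errors)                                       # decreases towards 0
```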

S

• Step 2:

What if the function is not continuous? Assume $h \in \mathcal{V}$ is bounded, but not necessarily continuous. Then we can find $g_n \in \mathcal{V}$, bounded and continuous, such that
$$E\Big[\int_S^T (h - g_n)^2\,dt\Big] \to 0 \quad \text{as } n \to \infty.$$

Proof: Let $\psi \ge 0$ be a nonnegative function such that

(i) $\psi(x) = 0$ for $x \le -1$ and $x \ge 0$,

(ii) $\psi$ is continuous,

(iii) $\int_{-\infty}^{\infty} \psi(x)\,dx = 1$ (a probability density).

Put $\psi_n(x) = n\psi(nx)$, $n = 1, 2, \dots$ (an approximate identity), and consider
$$g_n(t, \omega) = \int_0^t \psi_n(s - t)\,h(s, \omega)\,ds. \qquad (*)$$
Then $g_n$ is continuous (trivial: an integral of this form depends continuously on $t$), bounded (because $\psi_n$ integrates to 1 and $h$ is bounded) and adapted (which is much more difficult to prove). Since $\psi_n(s - t) = 0$ unless $-1/n < s - t < 0$, only values $h(s, \omega)$ with $s$ slightly before $t$ enter the integral, so no “future” information is used.
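A deterministic sketch of Step 2 for one fixed $\omega$, assuming NumPy. The triangular density `psi` on $[-1, 0]$ and the jump function `h` are illustrative choices satisfying (i)-(iii) and boundedness; the integral $(*)$ is approximated by a left Riemann sum.

```python
import numpy as np

def psi(x):
    """Continuous triangular density on [-1, 0] (peak 2 at x = -0.5), integrating to 1."""
    return np.where((x > -1.0) & (x < 0.0), 4.0 * (0.5 - np.abs(x + 0.5)), 0.0)

T, m = 1.0, 4000
s = np.linspace(0.0, T, m + 1)
ds = s[1] - s[0]
h = np.where(s < 0.5, 1.0, -1.0)             # bounded, jumps at t = 0.5 (one fixed omega)

def g_n(n):
    """g_n(t) = int_0^t psi_n(s - t) h(s) ds with psi_n(x) = n psi(n x)."""
    out = np.empty_like(s)
    for k, t in enumerate(s):
        out[k] = np.sum(n * psi(n * (s[:k] - t)) * h[:k]) * ds   # only s < t contributes
    return out

errors = [np.sum((h - g_n(n)) ** 2) * ds for n in (5, 20, 80)]
print(errors)                                # the L^2 distance shrinks as n grows
```

Each $g_n$ is continuous even though $h$ jumps, and the error is concentrated in windows of width about $1/n$ near $t = 0$ and near the jump.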


================================ 29/01-2010

Exercise 2.3

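If $\{\mathcal{H}_i\}_{i \in I}$ is a family of σ-algebras, prove that $\mathcal{H} = \bigcap_{i \in I} \mathcal{H}_i$ is a σ-algebra.

a) We check that the three properties of a σ-algebra are met.
$$\forall i \in I:\ \emptyset \in \mathcal{H}_i \;\Rightarrow\; \emptyset \in \bigcap_{i \in I} \mathcal{H}_i \qquad (\Sigma_1)$$
$$F \in \bigcap_{i \in I} \mathcal{H}_i \;\Rightarrow\; \forall i \in I,\ F \in \mathcal{H}_i \;\Rightarrow\; \forall i \in I,\ F^c \in \mathcal{H}_i \;\Rightarrow\; F^c \in \bigcap_{i \in I} \mathcal{H}_i \qquad (\Sigma_2)$$
$$F_k \in \bigcap_{i \in I} \mathcal{H}_i,\ k = 1, 2, \dots \;\Rightarrow\; \forall i \in I,\ F_k \in \mathcal{H}_i,\ k = 1, 2, \dots \;\Rightarrow\; \forall i \in I,\ \bigcup_{k=1}^{\infty} F_k \in \mathcal{H}_i \;\Rightarrow\; \bigcup_{k=1}^{\infty} F_k \in \bigcap_{i \in I} \mathcal{H}_i \qquad (\Sigma_3)$$
We see the three properties are fulfilled. Thus $\mathcal{H} = \bigcap_{i \in I} \mathcal{H}_i$ is a σ-algebra.

b) We show by counterexample that a union of σ-algebras in general isn't a σ-algebra. Let $\Omega = \{1, 2, 3\}$ and
$$\mathcal{F}_1 = \{\emptyset, \{1\}, \{2, 3\}, \{1, 2, 3\}\}, \qquad \mathcal{F}_2 = \{\emptyset, \{2\}, \{1, 3\}, \{1, 2, 3\}\},$$
which are both σ-algebras. We examine the union:
$$\mathcal{F}_1 \cup \mathcal{F}_2 = \{\emptyset, \{1\}, \{2\}, \{1, 3\}, \{2, 3\}, \{1, 2, 3\}\}.$$
We have $\{1\} \in \mathcal{F}_1 \cup \mathcal{F}_2$ and $\{2\} \in \mathcal{F}_1 \cup \mathcal{F}_2$, but $\{1, 2\} \notin \mathcal{F}_1 \cup \mathcal{F}_2$; that is, it isn't closed under unions, so $\mathcal{G} = \bigcup_{i \in I} \mathcal{F}_i$ is not necessarily a σ-algebra.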
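The counterexample can be checked mechanically. This is a sketch with a hypothetical helper `is_sigma_algebra` that tests $(\Sigma_1)$-$(\Sigma_3)$ for a family of subsets of a finite set, where countable unions reduce to finite (and hence pairwise) unions.

```python
from itertools import combinations

def is_sigma_algebra(omega, family):
    """Check (Sigma_1)-(Sigma_3) for a family of subsets of the finite set omega."""
    omega = frozenset(omega)
    fam = {frozenset(a) for a in family}
    has_empty = frozenset() in fam                               # (Sigma_1)
    closed_compl = all(omega - a in fam for a in fam)            # (Sigma_2)
    closed_union = all(a | b in fam for a, b in combinations(fam, 2))  # (Sigma_3), finite case
    return has_empty and closed_compl and closed_union

omega = {1, 2, 3}
F1 = [set(), {1}, {2, 3}, {1, 2, 3}]
F2 = [set(), {2}, {1, 3}, {1, 2, 3}]
print(is_sigma_algebra(omega, F1), is_sigma_algebra(omega, F2))  # True True
print(is_sigma_algebra(omega, F1 + F2))                          # False: {1} | {2} is missing
```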

Exercise 2.6

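Let $A_1, A_2, \dots$ be sets in $\mathcal{F}$ such that $\sum_{k=1}^{\infty} P(A_k) < \infty$. Show that
$$P\Big(\bigcap_{m=1}^{\infty} \bigcup_{k=m}^{\infty} A_k\Big) = 0.$$

We define
$$B_m = \bigcup_{k=m}^{\infty} A_k.$$
We see that $B_m$ gets smaller as $m$ increases, since
$$B_1 = \bigcup_{k=1}^{\infty} A_k \supseteq B_2 = \bigcup_{k=2}^{\infty} A_k \supseteq \dots,$$
so $B_m \searrow \bigcap_{m=1}^{\infty} B_m$. By property 4 of measures,
$$\lim_{m \to \infty} P(B_m) = P\Big(\bigcap_{m=1}^{\infty} B_m\Big).$$

Below we repeat the properties of measures.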

Measures

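Let $\mu$ be a measure on $(\Omega, \mathcal{F})$. Then

(1) If $A_i$, $1 \le i \le n$, are disjoint and $n$ is finite,
$$\mu\Big(\bigcup_{i=1}^{n} A_i\Big) = \sum_{i=1}^{n} \mu(A_i).$$

(2) $\forall A, B \in \mathcal{F}$: $A \subset B \Rightarrow \mu(A) \le \mu(B)$.

(3) If $B_n$ is increasing in $\mathcal{F}$ and converges to $B \in \mathcal{F}$, then $\mu(B) = \lim_{n \to \infty} \mu(B_n)$. In other words:
$$B_n \nearrow B \Rightarrow \mu(B_n) \to \mu(B).$$

(4) Similarly, if $\mu(C_1) < +\infty$:
$$C_n \searrow C \Rightarrow \mu(C_n) \searrow \mu(C), \quad n \to \infty.$$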

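So again, by property 4 of measures (here $P(B_1) \le 1 < \infty$), and by countable subadditivity, we know that
$$P\Big(\bigcap_{m=1}^{\infty} \bigcup_{k=m}^{\infty} A_k\Big) = P\Big(\bigcap_{m=1}^{\infty} B_m\Big) = \lim_{m \to \infty} P(B_m) = \lim_{m \to \infty} P\Big(\bigcup_{k=m}^{\infty} A_k\Big) \le \lim_{m \to \infty} \sum_{k=m}^{\infty} P(A_k),$$
where the limit is the lim sup (which always exists). Now, since $\sum_{k=1}^{\infty} P(A_k) < \infty$, we have $\lim_{m \to \infty} \sum_{k=m}^{\infty} P(A_k) = 0$, since this is the remainder (tail) of a convergent series. Result proven.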

Exercise 2.7

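a) $G_1, G_2, \dots, G_n$ are disjoint subsets of $\Omega$ such that
$$\Omega = \bigcup_{i=1}^{n} G_i.$$
We want to prove that the family $\mathcal{G}$ consisting of $\emptyset$ and all unions of some (or all) of the sets $G_i$ is a σ-algebra on $\Omega$. We begin by verifying the three requirements for σ-algebras.

$(\Sigma_1)$ $\emptyset \in \mathcal{G}$ by construction.

$(\Sigma_2)$ If $F = \bigcup_{i \in J} G_i$ for some index set $J \subseteq \{1, \dots, n\}$, then
$$F^c = \Big(\bigcup_{i \in J} G_i\Big)^c = \bigcap_{i \in J} G_i^c = \bigcup_{i \notin J} G_i \in \mathcal{G},$$
since the $G_i$ are disjoint and cover $\Omega$.

$(\Sigma_3)$ $F_1, F_2, \dots \in \mathcal{G} \Rightarrow F_1 \cup F_2 \cup \dots \in \mathcal{G}$, which is satisfied by the nature of this exercise: a union of unions of $G_i$'s is again a union of $G_i$'s (and there are only finitely many $G_i$).

b) Prove that any finite σ-algebra is of the type described in a).

Let $\mathcal{F}$ be a finite σ-algebra of subsets of $\Omega$. For each $x \in \Omega$ put
$$F_x = \bigcap_{F \in \mathcal{F},\ x \in F} F.$$
Since $\mathcal{F}$ is finite, this is a finite intersection, so $F_x \in \mathcal{F}$, and $F_x$ is the smallest set in $\mathcal{F}$ which contains $x$: if $A \in \mathcal{F}$ and $x \in A$, then $F_x \subseteq A$ (since $F_x$ is the intersection).

For given $x, y \in \Omega$, either $F_x \cap F_y = \emptyset$ or $F_x = F_y$. To see this, we consider where $x$ lies.

1) $x \in F_x \cap F_y$. Then $F_x \cap F_y$ is a set in $\mathcal{F}$ containing $x$, and $F_x \cap F_y \subseteq F_x$. Since $F_x$ is the smallest such set, $F_x \cap F_y = F_x$, i.e. $F_x \subseteq F_y$.

2) $x \in F_x \setminus F_y$. Then $F_x \setminus F_y$ is a set in $\mathcal{F}$ containing $x$, so by minimality $F_x \setminus F_y = F_x$, i.e. $F_x \cap F_y = \emptyset$.

Hence either $F_x \subseteq F_y$ or $F_x \cap F_y = \emptyset$. By symmetry, either $F_y \subseteq F_x$ or $F_x \cap F_y = \emptyset$. So if $F_x \cap F_y \ne \emptyset$, then $F_x = F_y$.

Since $\mathcal{F}$ is finite, there are only finitely many distinct sets $F_x$; choose $x_1, \dots, x_m \in \Omega$ such that $F_{x_1}, \dots, F_{x_m}$ are these distinct sets. They form a partition of $\Omega$. Then any $F \in \mathcal{F}$ is the disjoint union of those $F_{x_i}$'s with $x_i \in F$ (since $x \in F \Rightarrow F_x \subseteq F$). Then we have the situation in a).

c) Let $X : \Omega \to \mathbb{R}$ be $\mathcal{F}$-measurable. For all $c \in \mathbb{R}$,
$$X^{-1}(\{c\}) = \{\omega \in \Omega \mid X(\omega) = c\} \in \mathcal{F}$$
(by definition of the measurability of $X$). Therefore each such set is a finite union of sets $F_{x_i}$ (as in b)), i.e. $X$ has a constant value on each $F_{x_i}$.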
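The construction of the sets $F_x$ in b) is easy to carry out on a finite example. The sketch below uses a hypothetical helper `atoms` and, as an illustrative input, the σ-algebra $\mathcal{H}_{\mathcal{U}}$ from Exercise 2.18 a); it should recover a partition of $\Omega$ such that every set in the σ-algebra is a union of its blocks.

```python
def atoms(omega, sigma_algebra):
    """Distinct sets F_x = intersection of all sets in the finite sigma-algebra containing x."""
    fam = [frozenset(a) for a in sigma_algebra]
    result = set()
    for x in omega:
        F_x = frozenset(omega)
        for A in fam:
            if x in A:
                F_x &= A                   # intersect with every set that contains x
        result.add(F_x)
    return result

omega = {1, 2, 3, 4, 5}
H_U = [set(), {1, 2, 3}, {3, 4, 5}, {4, 5}, {1, 2}, {1, 2, 4, 5}, {3}, {1, 2, 3, 4, 5}]
parts = atoms(omega, H_U)
print(sorted(sorted(p) for p in parts))   # [[1, 2], [3], [4, 5]]
```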

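Exercise 2.10

$X_t$ is stationary if $X_t \sim X_{t+h}$ for $h > 0$ (i.e. if they have the same distribution). For the increments of Brownian motion,
$$\forall t > 0:\quad B_{t+h} - B_t \sim N(0, h) \quad (h \text{ is the variance}),$$
so $\{B_{t+h} - B_t\}_{h \ge 0}$ has the same distribution for all $t$, and hence $B_t$ is stationary in increments.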

Exercise 2.14

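Let $K \subset \mathbb{R}^n$ have zero $n$-dimensional Lebesgue measure. We shall prove that the expected total length of time that $B_t$ spends in $K$ is zero. This expected time is given by ($E^x$ means that the Brownian motion begins in $x$, i.e. $B_0 = x$):
$$E^x\Big[\int_0^{\infty} I_{\{B_t \in K\}}\,dt\Big] = \int_0^{\infty} E^x\big[I_{\{B_t \in K\}}\big]\,dt = \int_0^{\infty} P^x(B_t \in K)\,dt = \int_0^{\infty} (2\pi t)^{-n/2} \int_K e^{-\frac{|x - y|^2}{2t}}\,dy\,dt = 0,$$
where the first equality is Fubini's theorem, and the inner integral vanishes since $K$ has Lebesgue measure 0.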

Exercise 2.18

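a) Let $\Omega = \{1, 2, 3, 4, 5\}$ and $\mathcal{U} = \{\{1, 2, 3\}, \{3, 4, 5\}\}$. Find the σ-algebra generated by $\mathcal{U}$, that is, the smallest σ-algebra on $\Omega$ containing $\mathcal{U}$.
$$\mathcal{H}_{\mathcal{U}} = \{\emptyset, \{1, 2, 3\}, \{3, 4, 5\}, \{4, 5\}, \{1, 2\}, \{1, 2, 4, 5\}, \{3\}, \{1, 2, 3, 4, 5\}\}.$$

b) Let $X : \Omega \to \mathbb{R}$ be given by
$$X(1) = X(2) = 0, \quad X(3) = 10, \quad X(4) = X(5) = 1.$$
$X$ is measurable with respect to $\mathcal{H}_{\mathcal{U}}$ if $X^{-1}(B) \in \mathcal{H}_{\mathcal{U}}$ for all Borel sets $B \subseteq \mathbb{R}$. We have
$$X^{-1}(B) = \begin{cases} \emptyset & \text{if } 0 \notin B,\ 10 \notin B,\ 1 \notin B \\ \{1, 2\} & \text{if } 0 \in B,\ 10 \notin B,\ 1 \notin B \\ \{3\} & \text{if } 10 \in B,\ 0 \notin B,\ 1 \notin B \\ \{4, 5\} & \text{if } 1 \in B,\ 0 \notin B,\ 10 \notin B \\ \{1, 2, 3\} & \text{if } 0 \in B,\ 10 \in B,\ 1 \notin B \\ \ \vdots & \text{etc.} \end{cases}$$
Every possible preimage is a union of $\{1, 2\}$, $\{3\}$ and $\{4, 5\}$, so it lies in $\mathcal{H}_{\mathcal{U}}$, and $X$ is $\mathcal{H}_{\mathcal{U}}$-measurable.

c) We define $Y : \Omega \to \mathbb{R}$ by
$$Y(1) = 0, \qquad Y(2) = Y(3) = Y(4) = Y(5) = 1,$$
and want to find the σ-algebra generated by $Y$ (which must be the smallest σ-algebra with respect to which $Y$ is measurable):
$$\mathcal{H}_Y = \{\emptyset, \{1\}, \{2, 3, 4, 5\}, \{1, 2, 3, 4, 5\}\}.$$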

Exercise 2.19

Let $(\Omega, \mathcal{F}, \mu)$ be a probability space and $p \in [1, \infty]$. A sequence of functions $\{f_n\}_{n=1}^{\infty}$, where $f_n \in L^p(\mu)$ (the Lebesgue space), is called a Cauchy sequence if
$$\|f_n - f_m\|_p \to 0 \quad \text{as } m, n \to \infty.$$
The sequence is convergent if there exists an $f \in L^p(\mu)$ such that $f_n \to f$ in $L^p(\mu)$. We are going to prove that every convergent sequence is a Cauchy sequence.

When a sequence is convergent, there exists $f \in L^p(\mu)$ such that $\|f_n - f\|_p \to 0$ as $n \to \infty$. By the triangle inequality,
$$\|f_n - f_m\|_p = \|f_n - f + f - f_m\|_p \le \|f_n - f\|_p + \|f_m - f\|_p \xrightarrow[n, m \to \infty]{} 0,$$
which means that $f_n$ is a Cauchy sequence.

Additional exercises 1 - Brownian Motion

Properties of Brownian Motion

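1) If $t \ge s$, $B_s$ and $B_t - B_s$ are independent.

2) $E[B_t^2] = t$, $\forall t$.

3) $B_t \sim N(0, t)$.

4) $X \sim N(0, \sigma^2) \Rightarrow E[f(X)] = \frac{1}{\sqrt{2\pi\sigma^2}} \int_{\mathbb{R}} f(x)\, e^{-\frac{x^2}{2\sigma^2}}\,dx$.

Exercise 1

Let $t \ge s$ and compute
$$E\big[B_s^2 B_t - B_s^3\big].$$
Hint: $B_t = B_t - B_s + B_s$.
$$E\big[B_s^2 B_t - B_s^3\big] = E\big[B_s^2 B_t\big] - E\big[B_s^3\big] = E\big[B_s^2(B_t - B_s + B_s)\big] - E\big[B_s^3\big] = E\big[B_s^2(B_t - B_s)\big] + E\big[B_s^3\big] - E\big[B_s^3\big]$$
$$= E\big[B_s^2(B_t - B_s)\big] = E\big[B_s^2\big]\,E\big[B_t - B_s\big] = s \cdot 0 = 0.$$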

Exercise 2

Let $t \ge s$. Compute
$$E[B_t B_s].$$
Hint: $B_t = B_t - B_s + B_s$.
$$E[B_t B_s] = E\big[(B_t - B_s + B_s)B_s\big] = E\big[B_s(B_t - B_s) + B_s^2\big] = E\big[B_s(B_t - B_s)\big] + E\big[B_s^2\big] = E[B_s]\,E[B_t - B_s] + s = 0 \cdot 0 + s = s.$$

Exercise 3

Show that, for $t \ge s$,
$$E\big[(B_t - B_s)^2\big] = t - s.$$
Hint: See property 2 for Brownian motion.
$$E\big[(B_t - B_s)^2\big] = E\big[B_t^2 - B_tB_s - B_tB_s + B_s^2\big] = E\big[B_t^2\big] - 2E\big[B_tB_s\big] + E\big[B_s^2\big] = t - 2s + s = t - s.$$

Exercise 4

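Show that
$$E\big[B_r^4\big] = 3r^2.$$
Hint: Use integration by parts to prove that
$$E\big[B_r^4\big] = 3r\,E\big[B_r^2\big].$$
We think of $f(B_r) = (B_r)^4$, so we can use property 4) for Brownian motion with $f(x) = x^4$:
$$E\big[B_r^4\big] = \frac{1}{\sqrt{2\pi r}} \int_{\mathbb{R}} x^4 e^{-\frac{x^2}{2r}}\,dx \quad (= I).$$
We use integration by parts:
$$\int u v' = uv - \int u' v.$$
We have
$$u = x^3 \Rightarrow u' = 3x^2, \qquad v' = x e^{-\frac{x^2}{2r}} \Rightarrow v = -r e^{-\frac{x^2}{2r}}.$$
We get
$$I = \frac{1}{\sqrt{2\pi r}}\Big[-r x^3 e^{-\frac{x^2}{2r}}\Big]_{\mathbb{R}} + \frac{1}{\sqrt{2\pi r}} \int_{\mathbb{R}} 3x^2\, r e^{-\frac{x^2}{2r}}\,dx = \frac{1}{\sqrt{2\pi r}}\Big[-r x^3 e^{-\frac{x^2}{2r}}\Big]_{\mathbb{R}} + 3r \int_{\mathbb{R}} \frac{1}{\sqrt{2\pi r}}\, x^2 e^{-\frac{x^2}{2r}}\,dx.$$
By property 4) of Brownian motion (with $f(x) = x^2$), we note that
$$\frac{1}{\sqrt{2\pi r}} \int_{\mathbb{R}} x^2 e^{-\frac{x^2}{2r}}\,dx = E\big[B_r^2\big].$$
For the $uv$ part, we first note that
$$-r x^3 e^{-\frac{x^2}{2r}} = \frac{-r x^3}{e^{\frac{x^2}{2r}}},$$
which gives
$$\lim_{x \to \infty} \frac{-r x^3}{e^{\frac{x^2}{2r}}} \overset{\text{L'Hôp}}{=} \lim_{x \to \infty} \frac{-3r x^2}{\frac{x}{r} e^{\frac{x^2}{2r}}} = \lim_{x \to \infty} \frac{-3r^2 x}{e^{\frac{x^2}{2r}}} \overset{\text{L'Hôp}}{=} \lim_{x \to \infty} \frac{-3r^3}{x\, e^{\frac{x^2}{2r}}} = 0,$$
and it is similar when $x \to -\infty$. Thus the integral is
$$E\big[B_r^4\big] = I = 0 + 3r\,E\big[B_r^2\big] = 3r\,E\big[B_r^2\big].$$
We can now simply apply property 2 of Brownian motion, and we have shown that
$$E\big[B_r^4\big] = 3r^2.$$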
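As a numerical cross-check of $E[B_r^4] = 3r\,E[B_r^2] = 3r^2$, the Gaussian integral from property 4) can be evaluated with the midpoint rule. This plain-Python sketch truncates the real line at $\pm 12\sqrt{r}$, an assumption justified by the negligible Gaussian tail; `gaussian_moment` is a hypothetical helper.

```python
import math

def gaussian_moment(f, r, half_width=12.0, n=100_000):
    """E[f(B_r)] with B_r ~ N(0, r), via property 4) and the midpoint rule on [-L, L],
    L = half_width * sqrt(r); the Gaussian tail outside [-L, L] is negligible."""
    L = half_width * math.sqrt(r)
    h = 2 * L / n
    total = 0.0
    for i in range(n):
        x = -L + (i + 0.5) * h
        total += f(x) * math.exp(-x * x / (2 * r))
    return total * h / math.sqrt(2 * math.pi * r)

for r in (0.5, 1.0, 2.0):
    m4 = gaussian_moment(lambda x: x**4, r)
    m2 = gaussian_moment(lambda x: x**2, r)
    print(r, m4, 3 * r * m2, 3 * r**2)   # E[B_r^4], 3r E[B_r^2] and 3r^2 agree
```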

Exercise 5

Assume $t \ge s$. Compute
$$E\big[B_s^4 B_t^2 - 2B_tB_s^5 + B_s^6\big].$$
We use that $B_t = B_t - B_s + B_s$:
$$E\big[B_s^4 B_t^2 - 2B_tB_s^5 + B_s^6\big] = E\big[B_s^4(B_t - B_s + B_s)^2\big] - E\big[2B_tB_s^5\big] + E\big[B_s^6\big].$$
Some intermediate calculations:
$$(B_t - B_s + B_s)^2 = (B_t - B_s)^2 + 2B_s(B_t - B_s) + B_s^2 = (B_t - B_s)^2 + 2B_sB_t - 2B_s^2 + B_s^2 = (B_t - B_s)^2 + 2B_sB_t - B_s^2.$$
This in turn shows us that
$$B_s^4(B_t - B_s + B_s)^2 = B_s^4\big((B_t - B_s)^2 + 2B_tB_s - B_s^2\big) = B_s^4(B_t - B_s)^2 + 2B_tB_s^5 - B_s^6.$$
Returning to the exercise:
$$E\big[B_s^4(B_t - B_s + B_s)^2\big] - E\big[2B_tB_s^5\big] + E\big[B_s^6\big] = E\big[B_s^4(B_t - B_s)^2\big] + 2E\big[B_tB_s^5\big] - E\big[B_s^6\big] - 2E\big[B_tB_s^5\big] + E\big[B_s^6\big]$$
$$= E\big[B_s^4(B_t - B_s)^2\big] = E\big[B_s^4\big]\,E\big[(B_t - B_s)^2\big] = 3s^2(t - s),$$
where we used independent increments, and the last step follows from Exercises 3 and 4.
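A Monte Carlo sanity check of Exercises 1-5, assuming NumPy: sample $B_s \sim N(0, s)$, build $B_t$ by adding an independent $N(0, t - s)$ increment, and compare sample means with the exact answers. The choice $s = 1$, $t = 2$, the seed and the sample size are illustrative; expect agreement only up to Monte Carlo error.

```python
import numpy as np

rng = np.random.default_rng(5)
s, t, n = 1.0, 2.0, 2_000_000
B_s = rng.normal(0.0, np.sqrt(s), size=n)
B_t = B_s + rng.normal(0.0, np.sqrt(t - s), size=n)   # independent increment B_t - B_s

checks = [  # (description, Monte Carlo estimate, exact value from the exercises)
    ("Ex. 1: E[B_s^2 B_t - B_s^3]", np.mean(B_s**2 * B_t - B_s**3), 0.0),
    ("Ex. 2: E[B_t B_s]", np.mean(B_t * B_s), s),
    ("Ex. 3: E[(B_t - B_s)^2]", np.mean((B_t - B_s) ** 2), t - s),
    ("Ex. 4: E[B_s^4]", np.mean(B_s**4), 3 * s**2),
    ("Ex. 5", np.mean(B_s**4 * B_t**2 - 2 * B_t * B_s**5 + B_s**6), 3 * s**2 * (t - s)),
]
for name, estimate, exact in checks:
    print(f"{name:30s} {estimate:8.3f} {exact:8.3f}")
```

The Exercise 5 estimate is the noisiest, because $B_s^4(B_t - B_s)^2$ has heavy tails.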

=============================== 05/02-2010

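Step 1: $g \in \mathcal{V}$ is bounded and continuous.
$$\phi_n(t, \omega) = \sum_j g(t_j, \omega)\, I_{[t_j, t_{j+1})}(t) \;\Longrightarrow\; E\Big[\int_S^T (g - \phi_n)^2\,dt\Big] \to 0.$$

Step 2: $h \in \mathcal{V}$ is bounded.
$$g_n(t, \omega) = \int_0^t \psi_n(s - t)\,h(s, \omega)\,ds \;\Longrightarrow\; E\Big[\int_S^T (h - g_n)^2\,dt\Big] \to 0.$$

Step 3: $f \in \mathcal{V}$.
$$f_n(t, \omega) = \begin{cases} -n & \text{if } f(t, \omega) < -n \\ f(t, \omega) & \text{if } -n \le f(t, \omega) \le n \\ n & \text{if } f(t, \omega) > n \end{cases} \;\Longrightarrow\; E\Big[\int_S^T (f - f_n)^2\,dt\Big] \to 0.$$

It is clear that $f_n(t, \omega) \to f(t, \omega)$ pointwise. A trivial observation is that $(f - f_n)^2 \le 4f^2$, which is integrable since $f \in \mathcal{V}$, so by the dominated convergence theorem we get convergence.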

Lemma

If $f \in \mathcal{V}[S, T]$, then we can find a sequence of elementary functions $\phi_n$ such that
$$E\Big[\int_S^T (f - \phi_n)^2\,dt\Big] \to 0. \qquad (*)$$

Proof: Begin with Step 3, then use Step 2 and finally Step 1.

Definition of the Itô-integral (Repetition)

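$$\int_S^T f(s, \omega)\,dB_s(\omega) = \lim_{n \to \infty} \int_S^T \phi_n(s, \omega)\,dB_s(\omega) \quad (\text{limit in } L^2),$$
where the $\phi_n$ are elementary (step) functions with random coefficients $e_j(\omega)$:
$$\int_S^T \phi_n(s, \omega)\,dB_s(\omega) = \sum_j e_j(\omega)\big(B_{t_{j+1}} - B_{t_j}\big).$$
We proved last time that the limit always exists (since $L^2$ is complete, and every Cauchy sequence converges), and is independent of the choice of the approximating sequence $\phi_n$.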

Properties of the Itô-integral

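$$E\Big[\Big(\int_S^T f(t, \omega)\,dB_t\Big)^2\Big]$$
By definition:
$$= \lim_{n \to \infty} E\Big[\Big(\int_S^T \phi_n(t, \omega)\,dB_t(\omega)\Big)^2\Big].$$
Elementary functions satisfy the Itô isometry, so
$$= \lim_{n \to \infty} E\Big[\int_S^T \phi_n^2(t, \omega)\,dt\Big],$$
and the Brownian motion has disappeared. But as we saw in $(*)$, $\phi_n \to f$ in $L^2(dt \times dP)$, so the $L^2$ norms converge and
$$= E\Big[\int_S^T f^2(t, \omega)\,dt\Big].$$
To summarize:
$$E\Big[\Big(\int_S^T f(t, \omega)\,dB_t\Big)^2\Big] = E\Big[\int_S^T f^2(t, \omega)\,dt\Big].$$
Note that the Itô isometry is valid for all functions in $\mathcal{V}$, not just elementary ones!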

Further properties

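Mostly straightforward to verify from the definition.

(1) $\displaystyle\int_S^T f\,dB_t = \int_S^U f\,dB_t + \int_U^T f\,dB_t \quad (S < U < T)$

(2) $\displaystyle\int_S^T (cf + g)\,dB_t = c\int_S^T f\,dB_t + \int_S^T g\,dB_t$ ($c$ constant)

(3) $\displaystyle E\Big[\int_S^T f\,dB_t\Big] = 0$, since for elementary functions
$$E\Big[\int_S^T \phi_n(t, \omega)\,dB_t\Big] = E\Big[\sum_j e_j\big(B_{t_{j+1}} - B_{t_j}\big)\Big] = \sum_j E\big[e_j(\omega)\big]\,E\big[B_{t_{j+1}} - B_{t_j}\big] = \sum_j E\big[e_j(\omega)\big] \cdot 0 = 0.$$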

(4) $\displaystyle\int_S^T f\,dB_t$ is $\mathcal{F}_T$-measurable, since no time in the expression for the integral exceeds $T$.

Martingale properties

A filtration is a family of σ-algebras
$$\mathcal{M} = \{\mathcal{M}_t\}_{t \ge 0}$$
(i.e. we have a σ-algebra for every $t$) such that:

(i) $\mathcal{M}_t \subset \mathcal{F}$, where $\mathcal{F}$ is the biggest σ-algebra on $\Omega$.

(ii) If $s < t$, then $\mathcal{M}_s \subseteq \mathcal{M}_t$ (as time increases we get a bigger σ-algebra).

A martingale $M_t$ is a stochastic process with the properties:

M1) $M_t$ is $\mathcal{M}_t$-measurable.

M2) $E\big[|M_t|\big] < \infty$ for all $t$.

M3) $E\big[M_s \mid \mathcal{M}_t\big] = M_t$ when $s \ge t$.

The first two properties of martingales are usually easy to check. The third property is the one most used in calculations.

Example

$B_t$ is an $\mathcal{F}_t$-martingale. We check the properties.

M1) $B_t$ is $\mathcal{F}_t$-measurable. This follows by the definition.

M2) We use the Hölder inequality:
$$E\big[|B_t|\big] \le \big(E\big[|B_t|^2\big]\big)^{1/2} = t^{1/2} < \infty.$$

M3) For $s \ge t$,
$$E\big[B_s \mid \mathcal{F}_t\big] = E\big[(B_s - B_t + B_t) \mid \mathcal{F}_t\big].$$
The conditional expected value is linear, so
$$= E\big[B_s - B_t \mid \mathcal{F}_t\big] + E\big[B_t \mid \mathcal{F}_t\big].$$
Now, since $B_s - B_t$ is independent of $\mathcal{F}_t$ and $B_t$ is $\mathcal{F}_t$-measurable, we get
$$= E\big[B_s - B_t\big] + B_t = 0 + B_t = B_t.$$

Doob’s martingale inequality

$$P\Big(\sup_{0 \le t \le T} |M_t| \ge \lambda\Big) \le \frac{1}{\lambda^p}\,E\big[|M_T|^p\big],$$
where $p \ge 1$, $T \ge 0$ and $\lambda > 0$. This inequality holds for all martingales $M_t$ with $t \mapsto M_t(\omega)$ continuous (which we accept without proof). We use it to get uniform convergence. (Note that the inequality says something about all $M_t$ with $t \le T$.)
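A Monte Carlo illustration of Doob's inequality for the continuous martingale $M_t = B_t$ with $p = 2$, assuming NumPy. The supremum is taken over a discrete grid, which can only underestimate the true supremum, so this is an illustration rather than a proof; $T$, $\lambda$ and the sample sizes are illustrative.

```python
import numpy as np

rng = np.random.default_rng(6)
T, n_steps, n_paths, lam = 1.0, 500, 10_000, 1.5
dB = rng.normal(0.0, np.sqrt(T / n_steps), size=(n_paths, n_steps))
B = np.cumsum(dB, axis=1)                          # M_t = B_t on the grid (B_0 = 0)

lhs = np.mean(np.max(np.abs(B), axis=1) >= lam)    # estimate of P(sup |B_t| >= lambda)
rhs = np.mean(B[:, -1] ** 2) / lam**2              # estimate of E[|B_T|^2] / lambda^2 (p = 2)
print(lhs, rhs)                                    # lhs stays below rhs
```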

Theorem

Let $f \in \mathcal{V}[0, T]$. Then there exists a $t$-continuous version of $\int_0^t f(s, \omega)\,dB_s$, for $t \in [0, T]$.

What do we mean by version?

$X_t$ and $Y_t$ are versions of each other if
$$P\big(\{\omega \mid X_t(\omega) = Y_t(\omega)\}\big) = 1 \quad \forall t$$
(they can be different on a set of measure 0, and this set can be different for each $t$).

Proof of theorem:

This is a difficult proof. The idea is to consider
$$I_n(t) = \int_0^t \sum_{j=1}^{N_n} e_j^n(\omega)\, I_{[t_j^n, t_{j+1}^n)}(s)\,dB_s. \qquad (**)$$
We choose this since
$$\int_0^t f(s, \omega)\,dB_s \approx \int_0^t \phi_n(s, \omega)\,dB_s,$$
which in turn is defined by $(**)$. We want to prove that the sequence $(**)$ has a uniformly convergent subsequence. If the $f_n$ are all continuous functions and $f_n \to f$ uniformly, then $f$ is continuous (a fact from real analysis).

Lemma

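For each $n$, $t \mapsto I_n(t)$ is an $\mathcal{F}_t$-martingale. (Property M1: it is $\mathcal{F}_t$-measurable, since all times in the expression are less than or equal to $t$. Property M2: $E|I_n(t)| < \infty$, by the Itô isometry.)

Property M3 needs some verification. We want to show that, for $s > t$,
$$E\big[I_n(s) \mid \mathcal{F}_t\big] = I_n(t).$$
We write $I_n(s)$ as two integrals:
$$E\big[I_n(s) \mid \mathcal{F}_t\big] = E\Big[\int_0^t \phi_n(u)\,dB_u + \int_t^s \phi_n(u)\,dB_u \,\Big|\, \mathcal{F}_t\Big].$$
Since $\int_0^t \phi_n(u)\,dB_u$ is $\mathcal{F}_t$-measurable, its conditional expectation equals itself. Hence, by linearity of the expected value,
$$= I_n(t) + E\Big[\int_t^s \phi_n(u)\,dB_u \,\Big|\, \mathcal{F}_t\Big]. \qquad (L1)$$
Now we have a slight problem, since $\int_t^s \phi_n(u)\,dB_u$ is not necessarily independent of $\mathcal{F}_t$ (the coefficients $e_j^{(n)}$ may depend on the past). However, we can rewrite it (we may assume that $t$ and $s$ are points of the partition):
$$E\Big[\int_t^s \phi_n(u)\,dB_u \,\Big|\, \mathcal{F}_t\Big] = E\Big[\sum_{t \le t_j^{(n)} < s} e_j^{(n)}\big(B_{t_{j+1}^{(n)}} - B_{t_j^{(n)}}\big) \,\Big|\, \mathcal{F}_t\Big]. \qquad ({*}{*}{*})$$
In this form, we see that $B_{t_{j+1}^{(n)}} - B_{t_j^{(n)}}$ is independent of $\mathcal{F}_{t_j^{(n)}}$. We will use two facts from conditional expectations:
$$\mathcal{A} \subseteq \mathcal{B} \Rightarrow E\big[E[X \mid \mathcal{B}] \mid \mathcal{A}\big] = E[X \mid \mathcal{A}],$$
and, if $X$ is $\mathcal{A}$-measurable,
$$E[XY \mid \mathcal{A}] = X\,E[Y \mid \mathcal{A}].$$
Returning to $({*}{*}{*})$, we use these properties (with $\mathcal{F}_t \subseteq \mathcal{F}_{t_j^{(n)}}$, and $e_j^{(n)}$ being $\mathcal{F}_{t_j^{(n)}}$-measurable) and get
$$\sum_{t \le t_j^{(n)} < s} E\Big[e_j^{(n)}\,E\big[B_{t_{j+1}^{(n)}} - B_{t_j^{(n)}} \mid \mathcal{F}_{t_j^{(n)}}\big] \,\Big|\, \mathcal{F}_t\Big].$$
Now $B_{t_{j+1}^{(n)}} - B_{t_j^{(n)}}$ is independent of $\mathcal{F}_{t_j^{(n)}}$, so we can rewrite
$$E\big[B_{t_{j+1}^{(n)}} - B_{t_j^{(n)}} \mid \mathcal{F}_{t_j^{(n)}}\big] = E\big[B_{t_{j+1}^{(n)}} - B_{t_j^{(n)}}\big] = 0.$$
Since this is equal to zero, every term in the sum is multiplied by zero. Returning to (L1), we get
$$E\big[I_n(s) \mid \mathcal{F}_t\big] = I_n(t) + E\Big[\int_t^s \phi_n(u)\,dB_u \,\Big|\, \mathcal{F}_t\Big] = I_n(t) + 0 = I_n(t).$$
Thus we have shown that $E\big[I_n(s) \mid \mathcal{F}_t\big] = I_n(t)$.

We have proved that all
$$I_n(t) = \int_0^t \phi_n(s, \omega)\,dB_s(\omega)$$
(which are $t$-continuous) are $\mathcal{F}_t$-martingales. For such $I$'s, $I_n - I_m$ are also $\mathcal{F}_t$-martingales (and so is any linear combination of martingales).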

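We use Doob's martingale inequality with $p = 2$, $\lambda = \epsilon$ and get
$$P\Big(\sup_{0 \le t \le T} |I_n(t, \omega) - I_m(t, \omega)| \ge \epsilon\Big) \le \frac{1}{\epsilon^2}\,E\big[|I_n(T) - I_m(T)|^2\big].$$
We have $\phi_n \to f \in \mathcal{V}$. By construction of the Itô integral, $I_n(T)$ is a Cauchy sequence in $L^2(P)$, so
$$\frac{1}{\epsilon^2}\,E\big[|I_n(T) - I_m(T)|^2\big] \to 0 \quad \text{as } m, n \to \infty.$$
For simplicity we assume $P\big(\sup_{0 \le t \le T} |I_n - I_m| = \epsilon\big) = 0$. Then we can find a subsequence $n_1 < n_2 < \dots$: for each $k$ we set $\epsilon = 2^{-k}$ and choose $n_{k+1} > n_k$ so large that
$$P\Big(\sup_{0 \le t \le T} |I_{n_{k+1}}(t) - I_{n_k}(t)| > 2^{-k}\Big) \le 2^{-k}.$$
We set
$$A_k = \Big\{\omega \,\Big|\, \sup_{0 \le t \le T} |I_{n_{k+1}}(t) - I_{n_k}(t)| > 2^{-k}\Big\}.$$
Summing over all $k$,
$$\sum_{k=1}^{\infty} P(A_k) \le \sum_{k=1}^{\infty} 2^{-k} = 1 < \infty.$$
We use the Borel-Cantelli lemma (Exercise 2.6): if $\sum_{k=1}^{\infty} P(A_k) < \infty$, then
$$P\Big(\bigcap_{m=1}^{\infty} \bigcup_{k=m}^{\infty} A_k\Big) = 0,$$
where
$$\bigcap_{m=1}^{\infty} \bigcup_{k=m}^{\infty} A_k = \{\omega \mid \omega \text{ is an element of infinitely many } A_k\text{'s}\}.$$
Then almost all $\omega$ lie in $\big(\bigcap_{m=1}^{\infty} \bigcup_{k=m}^{\infty} A_k\big)^c$; in other words, with probability 1 we are not inside that set.

If $\omega \in \big(\bigcap_{m=1}^{\infty} \bigcup_{k=m}^{\infty} A_k\big)^c$, there exists a number $k_1(\omega)$ such that if $k \ge k_1(\omega)$, then $\omega \notin A_k$. But this means that
$$\sup_{0 \le t \le T} |I_{n_{k+1}}(t) - I_{n_k}(t)| \le 2^{-k}$$
for all $k \ge k_1(\omega)$, and the bound does not depend on $t$. That is, we have uniform convergence: $I_{n_k}(t) \to \int_0^t f(s, \omega)\,dB_s$ uniformly, so $\int_0^t f(s, \omega)\,dB_s$ is $t$-continuous except for a set of $\omega$'s with measure 0. On that set we can simply define the integral of $f$ to be 0. Theorem proven!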

We usually assume we are working with the continuous version.

Corollary

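The mapping $t \mapsto \int_0^t f(s, \omega)\,dB_s$ is an $\mathcal{F}_t$-martingale (because the $I_n(t)$ are martingales and $I_n(t) \to \int_0^t f(s, \omega)\,dB_s$ in $L^2$).

Proof:

We want to show that, for $s \le t$,
$$E\Big[\Big(E\Big[\int_0^t f\,dB \,\Big|\, \mathcal{F}_s\Big] - \int_0^s f\,dB\Big)^2\Big] = 0.$$
If this is true, $\int_0^s f\,dB$ is a martingale. We begin with the expression and work our way through. We add and subtract $E\big[\int_0^t \phi_n\,dB \mid \mathcal{F}_s\big]$, use the linearity of expected values, and use the lemma in the form $E\big[\int_0^t \phi_n\,dB \mid \mathcal{F}_s\big] = \int_0^s \phi_n\,dB$:
$$E\Big[\Big(E\Big[\int_0^t f\,dB - \int_0^t \phi_n\,dB \,\Big|\, \mathcal{F}_s\Big] + E\Big[\int_0^t \phi_n\,dB \,\Big|\, \mathcal{F}_s\Big] - \int_0^s f\,dB\Big)^2\Big] = E\Big[\Big(E\Big[\int_0^t (f - \phi_n)\,dB \,\Big|\, \mathcal{F}_s\Big] + \int_0^s (\phi_n - f)\,dB\Big)^2\Big]$$
$$\le 4\Big(E\Big[\Big(E\Big[\int_0^t (f - \phi_n)\,dB \,\Big|\, \mathcal{F}_s\Big]\Big)^2\Big] + E\Big[\Big(\int_0^s (\phi_n - f)\,dB\Big)^2\Big]\Big).$$
By Jensen's inequality for conditional expectations, $\big(E[X \mid \mathcal{F}_s]\big)^2 \le E[X^2 \mid \mathcal{F}_s]$, so
$$\le 4\Big(E\Big[\Big(\int_0^t (f - \phi_n)\,dB\Big)^2\Big] + E\Big[\Big(\int_0^s (\phi_n - f)\,dB\Big)^2\Big]\Big),$$
which tends to 0 as $n \to \infty$ by the Itô isometry, since $\phi_n \to f$ by construction. Corollary proven.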

Extensions of the Itô-integral

First. We have assumed that $f \in \mathcal{V}$ (measurable, adapted etc.). $\mathcal{F}_t$-adaptedness can be relaxed. Idea: assume that $B_t$ is $\mathcal{H}_t$-measurable and an $\mathcal{H}_t$-martingale. Then it is possible to construct $\int_0^t f\,dB$ when $f$ is $\mathcal{H}_t$-adapted (where $\mathcal{H}_t$ is “big”).

Why is this important?

Assume $B_1$ and $B_2$ are two independent Brownian motions, and let
$$\mathcal{H}_t = \sigma\big(B_1(s), s \le t;\ B_2(s), s \le t\big),$$
i.e. the filtration generated by both Brownian motions. We wish to calculate
$$\int B_1\,dB_2 \quad \text{or} \quad \int B_2\,dB_1,$$
and the first extension covers this.

Second. We have assumed
$$E\Big[\int_0^t f^2(s, \omega)\,ds\Big] < \infty.$$
This assumption can be relaxed to the case where
$$P\Big(\int_0^t f^2(s, \omega)\,ds < \infty\Big) = 1.$$

The Itô/Stratonovich Integrals

Itô integral:
$$\int f\,dB \;\Rightarrow\; \sum_j f(t_j)\big(B_{t_{j+1}} - B_{t_j}\big).$$
Stratonovich integral (denoted with a $\circ$):
$$\int f \circ dB \;\Rightarrow\; \sum_j f\Big(\frac{t_j + t_{j+1}}{2}\Big)\big(B_{t_{j+1}} - B_{t_j}\big).$$
Assume that $B^{(n)}(t, \omega)$ are continuously differentiable and $B^{(n)}(t, \omega) \to B(t, \omega)$ uniformly.

For each $\omega$ we can solve a DE (differential equation), i.e. find $X_t$ such that
$$\frac{dX_t}{dt} = b(t, X_t) + \sigma(t, X_t)\,\frac{dB_t^{(n)}}{dt}$$
($X_t$ depends on $n$; we omit this so we can skip the indices).

If we have found an $X_t$, we must have (integrating both sides)
$$X_t = X_0 + \int_0^t b(s, X_s)\,ds + \int_0^t \sigma(s, X_s)\,\frac{dB_s^{(n)}}{ds}\,ds = X_0 + \int_0^t b(s, X_s)\,ds + \int_0^t \sigma(s, X_s)\,dB_s^{(n)}.$$
We try to pass to the limit, i.e. we let $n \to \infty$ (this is possible to prove). Since $B^{(n)}$ is differentiable and $B^{(n)}(t, \omega) \to B(t, \omega)$ as $n \to \infty$:
$$X_t = X_0 + \int_0^t b(s, X_s)\,ds + \int_0^t \sigma(s, X_s) \circ dB_s,$$
where we note that we get the Stratonovich integral in the limit. This seems to indicate that the Stratonovich integral is the “natural choice”. However, the limit also gives us
$$X_t = X_0 + \int_0^t b(s, X_s)\,ds + \int_0^t \frac{1}{2}\frac{\partial \sigma}{\partial x}(s, X_s)\,\sigma(s, X_s)\,ds + \int_0^t \sigma(s, X_s)\,dB_s,$$
and this time we have the Itô integral. This means that
$$\int_0^t \frac{1}{2}\frac{\partial \sigma}{\partial x}(s, X_s)\,\sigma(s, X_s)\,ds + \int_0^t \sigma(s, X_s)\,dB_s = \int_0^t \sigma(s, X_s) \circ dB_s.$$
Suppose
$$X_t = X_0 + \int_0^t b(s, X_s)\,ds + \int_0^t \sigma(s, X_s) \circ dB_s$$
and that this is a “good model” for a natural phenomenon (like stock prices). If we put
$$\tilde{b}(t, X_t) = b(t, X_t) + \frac{1}{2}\frac{\partial \sigma}{\partial x}(t, X_t)\,\sigma(t, X_t),$$
then the Itô version
$$X_t = X_0 + \int_0^t \tilde{b}(s, X_s)\,ds + \int_0^t \sigma(s, X_s)\,dB_s$$
is an equally “good model”.

Whatever you can do with the Stratonovich integral you can do with Itô and vice versa. Itô is occasionally easier to use, and is generally preferred over the Stratonovich integral.
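A sketch of the Itô-Stratonovich correction, assuming NumPy and the illustrative choice $b = 0$, $\sigma(t, x) = \sigma x$. The Stratonovich equation $dX_t = \sigma X_t \circ dB_t$ is solved by $X_t = X_0 e^{\sigma B_t}$ (ordinary calculus, as for differentiable $B^{(n)}$), while the Itô version uses $\tilde b(t, x) = \frac{1}{2}\sigma^2 x$. The Euler-Maruyama scheme used here is a standard discretisation of Itô equations, not something introduced in these notes; all parameter values are illustrative.

```python
import numpy as np

rng = np.random.default_rng(7)
sig, X0, T, n = 0.5, 1.0, 1.0, 100_000
dt = T / n
dB = rng.normal(0.0, np.sqrt(dt), size=n)

# Stratonovich model dX = sig * X o dB: solved by X_T = X0 * exp(sig * B_T).
exact = X0 * np.exp(sig * dB.sum())

# Itô version with corrected drift b~(x) = 0.5 * sig**2 * x, Euler-Maruyama (left-point) steps.
x_ito = X0
for db in dB:
    x_ito += 0.5 * sig**2 * x_ito * dt + sig * x_ito * db

# Same scheme without the correction: approximates X0 * exp(sig * B_T - 0.5 * sig**2 * T) instead.
x_naive = X0
for db in dB:
    x_naive += sig * x_naive * db

print(exact, x_ito, x_naive)
```

Dropping $\frac{1}{2}\frac{\partial \sigma}{\partial x}\sigma$ does not give a slightly worse approximation of the same model; it gives a different process, off here by the factor $e^{-\sigma^2 T/2}$.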

=============================== 12/02-2010

Exercise 3.1

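Using the definition of the Itô integral, we want to prove that
$$\int_0^t s\,dB_s = tB_t - \int_0^t B_s\,ds.$$
We have the partition
$$0 = t_0 < \dots < t_j < t_{j+1} < \dots < t_n = t$$
and
$$\Delta B_j = B_{t_{j+1}} - B_{t_j}, \qquad \Delta t_j = t_{j+1} - t_j.$$
This means (the sum telescopes, and $t_0 B_{t_0} = 0$)
$$tB_t = \sum_{j=0}^{n-1} \Delta(t_j B_{t_j}) = \sum_{j=0}^{n-1} \big(t_{j+1} B_{t_{j+1}} - t_j B_{t_j}\big).$$
We note that
$$\Delta(t_j B_{t_j}) = t_{j+1}B_{t_{j+1}} - t_jB_{t_j} = t_{j+1}B_{t_{j+1}} - t_jB_{t_{j+1}} + t_jB_{t_{j+1}} - t_jB_{t_j} = B_{t_{j+1}}(t_{j+1} - t_j) + t_j\big(B_{t_{j+1}} - B_{t_j}\big) = B_{t_{j+1}}\,\Delta t_j + t_j\,\Delta B_j.$$
That is,
$$tB_t = \sum_{j=0}^{n-1} t_j\,\Delta B_j + \sum_{j=0}^{n-1} B_{t_{j+1}}\,\Delta t_j,$$
and, when we let $\Delta t_j \to 0$, we get
$$tB_t = \int_0^t s\,dB_s + \int_0^t B_s\,ds \;\Longrightarrow\; \int_0^t s\,dB_s = tB_t - \int_0^t B_s\,ds.$$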
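The telescoping identity behind Exercise 3.1 holds exactly for every partition and every path, before any limit is taken. A short check, assuming NumPy and an illustrative uniform partition:

```python
import numpy as np

rng = np.random.default_rng(8)
t_end, n = 1.0, 10_000
t = np.linspace(0.0, t_end, n + 1)          # partition 0 = t_0 < ... < t_n = t_end
dt = np.diff(t)                             # Delta t_j
dB = rng.normal(0.0, np.sqrt(dt))           # Delta B_j ~ N(0, Delta t_j), independent
B = np.concatenate([[0.0], np.cumsum(dB)])  # B_{t_0}, ..., B_{t_n}

ito_sum = np.sum(t[:-1] * np.diff(B))       # sum_j t_j Delta B_j (left point, Itô)
riemann_sum = np.sum(B[1:] * dt)            # sum_j B_{t_{j+1}} Delta t_j
print(t_end * B[-1], ito_sum + riemann_sum) # equal up to floating-point rounding
```

Only the limits of the two sums need probability theory; the first converges to the Itô integral $\int_0^t s\,dB_s$ and the second to the ordinary integral $\int_0^t B_s\,ds$.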

Exercise 3.3

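If $X_t : \Omega \to \mathbb{R}$ is a stochastic process, we let $\mathcal{H}_t = \mathcal{H}_t^{(X)}$ denote the σ-algebra generated by $\{X_s;\ s \le t\}$, i.e. $\{\mathcal{H}_t\}_{t \ge 0}$ is the filtration of the process $\{X_t\}_{t \ge 0}$.

(a) Verify: if $X_t$ is a martingale w.r.t. some filtration $\{\mathcal{N}_t\}_{t \ge 0}$, then $X_t$ is also a martingale w.r.t. its own filtration.

An important observation here is that the filtration $\mathcal{H}_t$ generated by the process is the smallest filtration to which $X_t$ is adapted. Since $X_t$ is $\mathcal{N}_t$-adapted (property M1), $\mathcal{H}_t \subseteq \mathcal{N}_t$. Using this, we know that for $s > t$,
$$E\big[X_s \mid \mathcal{H}_t\big] = E\big[E[X_s \mid \mathcal{N}_t] \mid \mathcal{H}_t\big] = E\big[X_t \mid \mathcal{H}_t\big] = X_t.$$
The first two properties of martingales are apparent, since $X_t$ already is a martingale.

————————————

An important property of conditional expectation:
$$E\big[E[X \mid \mathcal{F}]\big] = E[X].$$
Proof: For all $B \in \mathcal{F}$,
$$\int_B E[X(\omega) \mid \mathcal{F}]\,dP(\omega) = \int_B X(\omega)\,dP(\omega). \qquad (\star)$$
For $B = \Omega$:
$$E\big[E[X(\omega) \mid \mathcal{F}]\big] = E[X].$$

————————————

(b) Show that if $X_t$ is a martingale w.r.t. $\mathcal{H}_t$, then
$$E[X_t] = E[X_0] \quad \forall t \ge 0.$$
Since $X_t$ is a martingale, we know that $E[X_t \mid \mathcal{H}_0] = X_0$. So,
$$E[X_0] = E\big[E[X_t \mid \mathcal{H}_0]\big] \overset{(\star)}{=} E[X_t].$$

(c) The property in (b) is automatic if the process is a martingale. The converse is not necessarily true. This is apparent if we consider the process $X_t = B_t^3$. Then $E[B_t^3] = 0 = E[B_0^3]$, but $X_t$ is not a martingale. We verify this by checking property M3 for martingales. Assume $s > t$:
$$E\big[B_s^3 \mid \mathcal{F}_t\big] = E\big[(B_s - B_t)^3 + 3B_s^2B_t - 3B_t^2B_s + B_t^3 \mid \mathcal{F}_t\big].$$
We can factor out the $B_t$'s, since they are $\mathcal{F}_t$-measurable. We also have $E[(B_s - B_t)^3 \mid \mathcal{F}_t] = E[(B_s - B_t)^3] = 0$, since the increment is independent of $\mathcal{F}_t$ and a centred normal variable has vanishing odd moments (this is verified separately below, after 3.18):
$$= 3B_t\,E\big[B_s^2 \mid \mathcal{F}_t\big] - 3B_t^2\,E\big[B_s \mid \mathcal{F}_t\big] + B_t^3.$$
Using that $B_s^2 = (B_s - B_t + B_t)^2 = (B_s - B_t)^2 + 2B_sB_t - B_t^2$:
$$= 3B_t\,E\big[(B_s - B_t)^2 \mid \mathcal{F}_t\big] + 3B_t\,E\big[2B_sB_t - B_t^2 \mid \mathcal{F}_t\big] - 3B_t^2\,E\big[B_s \mid \mathcal{F}_t\big] + B_t^3$$
$$= 3B_t(s - t) + 6B_t^3 - 3B_t^3 - 3B_t^3 + B_t^3 = 3B_t(s - t) + B_t^3 \ne B_t^3.$$
This means
$$E[X_s \mid \mathcal{F}_t] \ne X_t$$
for this process, and therefore it cannot be a martingale.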

Exercise 3.4

We are going to determine if these processes are martingales.

(i) Xt = Bt + 4t.

The first property of martingales is often trivial, so when you check if

something is a martingale you usually begin with property 2. In this case,

even property 2 is quite obvious, so we proceed to the third property. ∀s < t:

E[Bt + 4t | Fs] = 4t + E[Bt | Fs] = 4t + Bs 6= 4s + Bs

(M3 )

The property is not met, and therefore this process is not a martingale.

(ii) Xt = Bt2

E[Xt ] = E[Bt2 ] = t 6= 0 = E[B02 ] = E[X0 ]

From 3.3b) we know that any martingale must have the same expected value

for all t. This is not the case for this process, so this is not a martingale.

Rt

(iii) Xt = t2 Bt − 2 0 sBs ds.

Property M2 is fulfilled, and we consider M3 .

Z

2

t

E Xt | Fs = E t Bt | Fs − 2E

uBu du | Fs =

0

2

t Bs − 2E

Z

s

0

uBu du | Fs − 2E

29

Z

s

t

uBu du | Fs =

By splitting the integral, the first part is now Fs -measurable. We also use

property (⋆⋆) for conditional expectations on the integral from s to t.

Z s

Z t

2

t Bs − 2

uBu du − 2

uE Bu | Fs du =

0

2

t Bs − 2

2

2

t Bs − 2

Z

t Bs − 2

s

0

Z

s

s

uBu du − 2Bs

0

Z

0

s

Z

t

udu =

s

1

uBu du − 2Bs (t2 − s2 )

2

2

2

2

uBu du − t Bs + s Bs = s Bs − 2

Property M3 is met, and Xt is a martingale.

Z

s

uBu du

0

(iv) $X_t = B_1(t)B_2(t)$, where $(B_1, B_2)$ is a 2-dimensional Brownian motion. Assume $s < t$, and try to verify the property

$$E\big[B_1(t)B_2(t) \mid \mathcal{F}_s\big] = B_1(s)B_2(s) \qquad (M_3)$$

Some intermediate calculations, using a little trick:

$$\big(B_1(t) - B_1(s)\big)\big(B_2(t) - B_2(s)\big) = B_1(t)B_2(t) - B_1(t)B_2(s) - B_1(s)B_2(t) + B_1(s)B_2(s)$$

We rearrange a little:

$$= B_1(t)B_2(t) - B_1(s)B_2(t) + B_1(s)B_2(s) - B_1(t)B_2(s)$$

We add and subtract $B_1(s)B_2(s)$:

$$= B_1(t)B_2(t) - B_1(s)B_2(t) + B_1(s)B_2(s) - B_1(t)B_2(s) + B_1(s)B_2(s) - B_1(s)B_2(s)$$

Factoring out $B_1(s)$ and $B_2(s)$ gives

$$\big(B_1(t) - B_1(s)\big)\big(B_2(t) - B_2(s)\big) = B_1(t)B_2(t) - B_1(s)\big(B_2(t) - B_2(s)\big) - B_2(s)\big(B_1(t) - B_1(s)\big) - B_1(s)B_2(s),$$

which we rewrite in the equivalent form

$$B_1(t)B_2(t) = \big(B_1(t) - B_1(s)\big)\big(B_2(t) - B_2(s)\big) + B_1(s)\big(B_2(t) - B_2(s)\big) + B_2(s)\big(B_1(t) - B_1(s)\big) + B_1(s)B_2(s).$$

Returning to the exercise, for $s < t$ we can now write $E[B_1(t)B_2(t) \mid \mathcal{F}_s]$ as

$$E\big[(B_1(t) - B_1(s))(B_2(t) - B_2(s)) \mid \mathcal{F}_s\big] + \underbrace{E\big[B_1(s)(B_2(t) - B_2(s)) \mid \mathcal{F}_s\big]}_{=0} + \underbrace{E\big[B_2(s)(B_1(t) - B_1(s)) \mid \mathcal{F}_s\big]}_{=0} + E\big[B_1(s)B_2(s) \mid \mathcal{F}_s\big].$$

The two middle terms vanish because $B_1(s)$ and $B_2(s)$ are $\mathcal{F}_s$-measurable and can be factored out, while the increments $B_i(t) - B_i(s)$ are independent of $\mathcal{F}_s$ with mean 0. Since $B_1(s)B_2(s)$ is $\mathcal{F}_s$-measurable, we are left with

$$E\big[(B_1(t) - B_1(s))(B_2(t) - B_2(s)) \mid \mathcal{F}_s\big] + B_1(s)B_2(s).$$

The increments are independent of $\mathcal{F}_s$, and $B_1$ and $B_2$ are independent of each other, so the expectation factors:

$$E\big[B_1(t) - B_1(s)\big]\,E\big[B_2(t) - B_2(s)\big] + B_1(s)B_2(s) = B_1(s)B_2(s)$$

Thus we have shown this is a martingale.
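A similar Monte Carlo sketch (our own; the helper `conditional_product_mean`, the fixed values $B_1(s) = a$, $B_2(s) = b$, the times and the sample size are hypothetical choices) draws the two independent increments and averages $B_1(t)B_2(t)$. By the computation above, the average should be close to $ab$.

```python
import math
import random

def conditional_product_mean(a, b, s, t, n_samples=200_000, seed=2):
    """Monte Carlo estimate of E[B1(t) B2(t) | B1(s) = a, B2(s) = b] for s < t."""
    rng = random.Random(seed)
    sd = math.sqrt(t - s)
    total = 0.0
    for _ in range(n_samples):
        # independent increments of the two independent Brownian motions
        b1_t = a + sd * rng.gauss(0.0, 1.0)
        b2_t = b + sd * rng.gauss(0.0, 1.0)
        total += b1_t * b2_t
    return total / n_samples

a, b, s, t = 0.7, -1.2, 0.5, 2.0
estimate = conditional_product_mean(a, b, s, t)
print(f"Monte Carlo: {estimate:.3f}, B1(s)B2(s): {a * b:.3f}")
```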

Exercise 3.5

Let $M_t = B_t^2 - t$. Prove that this is a martingale w.r.t. the filtration $\mathcal{F}_t$.

Properties M1 and M2 are obvious. We check M3 for $t > s$:

$$E[M_t \mid \mathcal{F}_s] = E[B_t^2 - t \mid \mathcal{F}_s] = E[B_t^2 \mid \mathcal{F}_s] - t$$

We use $B_t = B_t - B_s + B_s$, which gives $B_t^2 = (B_t - B_s)^2 - B_s^2 + 2B_tB_s$:

$$= E\big[(B_t - B_s)^2 - B_s^2 + 2B_tB_s \mid \mathcal{F}_s\big] - t = E[(B_t - B_s)^2] - E[B_s^2 \mid \mathcal{F}_s] + 2E[B_tB_s \mid \mathcal{F}_s] - t$$
$$= (t - s) - B_s^2 + 2B_s^2 - t = B_s^2 - s = M_s$$

All the properties of a martingale are met, so $M_t$ is a martingale.
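As a quick sanity check of Exercise 3.5 (a sketch under our own choices of `s`, `t`, the conditioning values and the seed; `conditional_mean_M` is a hypothetical helper), we can estimate $E[B_t^2 - t \mid B_s = b]$ for a few values of $b$ and compare with $M_s = b^2 - s$.

```python
import math
import random

def conditional_mean_M(b, s, t, n_samples=100_000, seed=3):
    """Monte Carlo estimate of E[B_t^2 - t | B_s = b] for s < t."""
    rng = random.Random(seed)
    sd = math.sqrt(t - s)  # the increment B_t - B_s ~ N(0, t - s)
    total = 0.0
    for _ in range(n_samples):
        b_t = b + sd * rng.gauss(0.0, 1.0)
        total += b_t ** 2 - t
    return total / n_samples

s, t = 1.0, 3.0
results = {b: conditional_mean_M(b, s, t) for b in (-1.0, 0.0, 2.0)}
for b, est in results.items():
    print(f"B_s = {b:+.1f}: Monte Carlo {est:.3f}, M_s = {b ** 2 - s:.3f}")
```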

Exercise 3.18

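Prove that

$$M_t = \exp\Big\{\sigma B_t - \frac{1}{2}\sigma^2t\Big\}$$

is an $\mathcal{F}_t$-martingale.

Properties M1 and M2 are simple, and we verify M3 for $t > s$:

$$E\Big[e^{\sigma B_t - \frac{1}{2}\sigma^2t} \,\Big|\, \mathcal{F}_s\Big]$$

We add and subtract $\sigma B_s$ in the exponent:

$$= E\Big[e^{\sigma B_t - \sigma B_s + \sigma B_s - \frac{1}{2}\sigma^2t} \,\Big|\, \mathcal{F}_s\Big] = E\Big[e^{\sigma(B_t - B_s)}\,e^{\sigma B_s - \frac{1}{2}\sigma^2t} \,\Big|\, \mathcal{F}_s\Big]$$

The second factor is $\mathcal{F}_s$-measurable and the increment $B_t - B_s$ is independent of $\mathcal{F}_s$, so by the properties of conditional expectation:

$$= e^{\sigma B_s - \frac{1}{2}\sigma^2t}\,E\big[e^{\sigma(B_t - B_s)}\big]$$

Since $B_t - B_s \sim N(0, t - s)$, its moment generating function (Laplace transform) gives $E[e^{\sigma(B_t - B_s)}] = e^{\frac{1}{2}\sigma^2(t - s)}$, so

$$= e^{\sigma B_s - \frac{1}{2}\sigma^2t}\,e^{\frac{1}{2}\sigma^2(t - s)} = e^{\sigma B_s - \frac{1}{2}\sigma^2s} = M_s$$

All the properties are met, and $M_t$ is a martingale.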
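The only probabilistic input in the last step is the moment generating function of a normal variable, $E[e^{\sigma W}] = e^{\sigma^2v/2}$ for $W \sim N(0, v)$. A short Monte Carlo sketch (our own; `sigma`, `dt` $= t - s$, the sample size and the helper name `mgf_estimate` are arbitrary) compares the sample mean with this formula.

```python
import math
import random

def mgf_estimate(sigma, dt, n_samples=200_000, seed=4):
    """Monte Carlo estimate of E[exp(sigma * (B_t - B_s))] with t - s = dt."""
    rng = random.Random(seed)
    sd = math.sqrt(dt)
    return sum(math.exp(sigma * sd * rng.gauss(0.0, 1.0))
               for _ in range(n_samples)) / n_samples

sigma, dt = 0.8, 1.5
estimate = mgf_estimate(sigma, dt)
exact = math.exp(0.5 * sigma ** 2 * dt)  # e^{sigma^2 (t - s) / 2}
print(f"Monte Carlo: {estimate:.4f}, exp(sigma^2 (t-s)/2): {exact:.4f}")
```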

Verification for 3.3c): $E[(B_t - B_s)^3] = 0$ (here $t > s$).

$$E[(B_t - B_s)^3] = E\big[B_t^3 - 3B_t^2B_s + 3B_tB_s^2 - B_s^3\big] = E[B_t^3] - 3E[B_t^2B_s] + 3E[B_tB_s^2] - E[B_s^3]$$

Here the expectations of the first and last terms are zero (justified below), and for the middle terms we use $B_t = (B_t - B_s) + B_s$ together with the independence of $B_t - B_s$ and $B_s$:

$$E[B_t^2B_s] = E[(B_t - B_s)^2]\,E[B_s] + 2E[B_t - B_s]\,E[B_s^2] + E[B_s^3] = 0,$$
$$E[B_tB_s^2] = E[B_t - B_s]\,E[B_s^2] + E[B_s^3] = 0.$$

Hence $E[(B_t - B_s)^3] = 0$.

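In the course of this verification, we used $E[B_t^3] = 0$. This requires some justification. If we regard $E[B_t^3]$ as $E[f(X)]$ for $f(x) = x^3$, we can use property 4 of Brownian motion (on page 14):

$$E[B_t^3] = \int_{\mathbb{R}} x^3\,\frac{e^{-\frac{x^2}{2t}}}{\sqrt{2\pi t}}\,dx$$

We integrate by parts, with

$$u = x^2 \implies u' = 2x, \qquad v' = \frac{xe^{-\frac{x^2}{2t}}}{\sqrt{2\pi t}} \implies v = -t\,\frac{e^{-\frac{x^2}{2t}}}{\sqrt{2\pi t}}.$$

We use these in the integral $I = \int uv' = [uv] - \int u'v$ and get:

$$I = \Big[-\frac{tx^2e^{-\frac{x^2}{2t}}}{\sqrt{2\pi t}}\Big]_{\mathbb{R}} + \frac{2t}{\sqrt{2\pi t}}\int_{\mathbb{R}} xe^{-\frac{x^2}{2t}}\,dx$$

The first part tends to zero, so we are left with:

$$I = 0 + \Big[-\frac{2t^2e^{-\frac{x^2}{2t}}}{\sqrt{2\pi t}}\Big]_{\mathbb{R}} = 0$$

which also vanishes, because the exponential function with a negative square in the exponent tends to zero for both positive and negative $x$. Therefore $E[B_t^3] = 0$. (This also follows directly from symmetry: $x^3e^{-x^2/2t}$ is an odd, integrable function, so its integral over $\mathbb{R}$ is zero.)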
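The Gaussian integral can also be evaluated numerically. The sketch below (our own; the truncation at 12 standard deviations, the grid size and the helper name `gaussian_moment` are ad hoc choices) applies the trapezoidal rule on a grid that is symmetric about 0, so the odd moments cancel. The same routine reproduces $E[B_t^2] = t$ and $E[B_t^4] = 3t^2$, which are used in the Itô isometry exercises.

```python
import math

def gaussian_moment(k, t, half_width=12.0, n=20_000):
    """Approximate E[B_t^k] = ∫ x^k exp(-x^2/(2t)) / sqrt(2πt) dx by the trapezoidal rule."""
    a = -half_width * math.sqrt(t)  # truncate the real line far out in the tails
    h = 2 * half_width * math.sqrt(t) / n
    total = 0.0
    for i in range(n + 1):
        x = a + i * h               # grid symmetric about 0 (n is even)
        weight = 0.5 if i in (0, n) else 1.0
        total += weight * x ** k * math.exp(-x * x / (2 * t))
    return total * h / math.sqrt(2 * math.pi * t)

t = 2.0
for k in (1, 2, 3, 4):
    print(k, round(gaussian_moment(k, t), 6))
```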

Exercise 3.8

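Let $Y$ be a real valued random variable on $(\Omega, \mathcal{F}, P)$ such that $E[|Y|] < \infty$. We define

$$M_t = E[Y \mid \mathcal{F}_t]; \quad t \geq 0. \qquad (3.8.1)$$

a) We want to show that $M_t$ is an $\mathcal{F}_t$-martingale. By the definition of conditional expectation, $M_t$ is $\mathcal{F}_t$-measurable, and $E[|M_t|] \leq E[|Y|] < \infty$, so M1 and M2 hold. We show that the third property holds for $s \leq t$:

$$E[M_t \mid \mathcal{F}_s] \overset{(3.8.1)}{=} E\big[E[Y \mid \mathcal{F}_t] \mid \mathcal{F}_s\big]$$

The filtration is increasing, i.e. $\mathcal{F}_s \subseteq \mathcal{F}_t$, so by the tower property

$$E\big[E[Y \mid \mathcal{F}_t] \mid \mathcal{F}_s\big] = E[Y \mid \mathcal{F}_s] = M_s$$

and we are done: $M_t$ is a martingale.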

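(b) Conversely, let $M_t$; $t \geq 0$ be a real valued $\mathcal{F}_t$-martingale such that

$$\sup_{t \geq 0} E[|M_t|^p] < \infty \quad \text{for some } p > 1.$$

Using Corollary 7, we want to show that there exists $Y \in L^1(P)$ such that $M_t = E[Y \mid \mathcal{F}_t]$.

Corollary 7 states that there exists $M \in L^1(P)$ such that $M_s \to M$ a.e. $(P)$ as $s \to \infty$ and

$$\int |M_s - M|\,dP \to 0 \quad \text{as } s \to \infty.$$

We show that $Y = M$ works, i.e. that

$$E\big[\lim_{s\to\infty} M_s \mid \mathcal{F}_t\big] = \lim_{s\to\infty} E[M_s \mid \mathcal{F}_t] = M_t,$$

where the last equality holds because $E[M_s \mid \mathcal{F}_t] = M_t$ for all $s \geq t$. We may move the limit outside the conditional expectation because $M_s \to M$ in $L^1(P)$. To see this, consider the simplified version for some $F \in \mathcal{F}_t$:

$$E\big[I_F \lim_{s\to\infty} M_s\big] = \lim_{s\to\infty} E[I_FM_s],$$

which holds since $|E[I_FM_s] - E[I_FM]| \leq \int |M_s - M|\,dP \to 0$. The definition of conditional expectation is that, for $F \in \mathcal{F}_t$,

$$\int_F E[X \mid \mathcal{F}_t]\,dP = \int_F X\,dP.$$

Returning to the exercise, for every $F \in \mathcal{F}_t$:

$$\int_F E\big[\lim_{s\to\infty} M_s \mid \mathcal{F}_t\big]\,dP = \int_F \lim_{s\to\infty} M_s\,dP = \lim_{s\to\infty}\int_F M_s\,dP = \lim_{s\to\infty}\int_F E[M_s \mid \mathcal{F}_t]\,dP = \int_F M_t\,dP.$$

Since both $E[M \mid \mathcal{F}_t]$ and $M_t$ are $\mathcal{F}_t$-measurable, this gives $E[M \mid \mathcal{F}_t] = M_t$ a.s., so $Y = M$ satisfies $M_t = E[Y \mid \mathcal{F}_t]$.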

Additional exercises 2 - Itô Isometry

Exercise 1

Calculate

$$E\Big[\Big(\int_0^t (1 + B_s)\,dB_s\Big)^2\Big].$$

Hint: Apply the Itô isometry:

$$E\Big[\Big(\int_S^T f(t, \omega)\,dB_t(\omega)\Big)^2\Big] = \int_S^T E\big[f^2(t, \omega)\big]\,dt$$

We recognise the integrand as $f(s, \omega) = 1 + B_s$. Squaring this, we get:

$$f^2(s, \omega) = (1 + B_s)(1 + B_s) = 1 + 2B_s + B_s^2 \implies E[f^2] = E[1] + 2E[B_s] + E[B_s^2] = 1 + 0 + s = 1 + s$$
$$\implies \int_0^t (1 + s)\,ds = \Big[s + \frac{1}{2}s^2\Big]_0^t = t + \frac{1}{2}t^2$$
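The Itô isometry can be illustrated by simulation. In the sketch below (our own; the uniform grid, `n_steps`, `n_paths`, the seed and the helper name `ito_sum_second_moment` are arbitrary) the stochastic integral is replaced by its left-point sum $\sum_j f(B_{t_j})\Delta B_j$. For such sums the isometry holds exactly in expectation, $E[(\sum_j f(B_{t_j})\Delta B_j)^2] = \sum_j E[f(B_{t_j})^2]\Delta t_j$, because the cross terms vanish by independence of the increments; the remaining errors are Monte Carlo noise and the Riemann-sum error of $\int_0^t (1+s)\,ds$.

```python
import math
import random

def ito_sum_second_moment(integrand, t=1.0, n_steps=50, n_paths=40_000, seed=5):
    """Monte Carlo estimate of E[(sum_j f(B_{t_j}) ΔB_j)^2], a left-point (Itô)
    approximation of E[(∫_0^t f(B_s) dB_s)^2] on a uniform grid."""
    rng = random.Random(seed)
    sd = math.sqrt(t / n_steps)
    total = 0.0
    for _ in range(n_paths):
        b, ito_sum = 0.0, 0.0
        for _ in range(n_steps):
            db = sd * rng.gauss(0.0, 1.0)
            ito_sum += integrand(b) * db  # integrand evaluated at the LEFT endpoint
            b += db
        total += ito_sum ** 2
    return total / n_paths

t = 1.0
estimate = ito_sum_second_moment(lambda x: 1.0 + x, t)
print(f"Monte Carlo: {estimate:.3f}, t + t^2/2 = {t + t * t / 2:.3f}")
```

The estimate should be close to $t + t^2/2 = 1.5$; the grid version itself has expectation $1.49$ for these parameters.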

Exercise 2

Calculate

$$E\Big[\Big(\int_0^t B_s^2\,dB_s\Big)^2\Big].$$

We apply the Itô isometry on $f(s, \omega) = B_s^2$:

$$f^2(s, \omega) = B_s^4 \implies E[f^2] = E[B_s^4] = 3s^2$$
$$\int_0^t E[f^2]\,ds = \int_0^t 3s^2\,ds = \Big[s^3\Big]_0^t = t^3$$

Exercise 3

Calculate

$$E\Big[\Big(\int_0^t \sin(B_s)\,dB_s\Big)^2 + \Big(\int_0^t \cos(B_s)\,dB_s\Big)^2\Big].$$

We can split the expected value over the two terms, and apply the Itô isometry to each function:

$$f = \sin(B_s) \implies f^2 = \sin^2(B_s), \qquad g = \cos(B_s) \implies g^2 = \cos^2(B_s)$$
$$\int_0^t E\big[\sin^2(B_s)\big]\,ds + \int_0^t E\big[\cos^2(B_s)\big]\,ds = \int_0^t E\big[\sin^2(B_s) + \cos^2(B_s)\big]\,ds$$

Using $\cos^2(\alpha) + \sin^2(\alpha) = 1$:

$$\int_0^t E[1]\,ds = \int_0^t 1\,ds = \Big[s\Big]_0^t = t$$
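The same left-point discretisation illustrates Exercise 3 (our own sketch with $t = 2$; grid, sample size, seed and the helper name `sin_cos_check` are arbitrary). Here the grid version has expectation exactly $\sum_j \Delta t_j = t$, since $\sin^2 + \cos^2 = 1$ holds pointwise, so only Monte Carlo noise remains.

```python
import math
import random

def sin_cos_check(t=2.0, n_steps=50, n_paths=20_000, seed=6):
    """Monte Carlo estimate of E[(∫ sin B dB)^2 + (∫ cos B dB)^2] via left-point sums."""
    rng = random.Random(seed)
    sd = math.sqrt(t / n_steps)
    total = 0.0
    for _ in range(n_paths):
        b, sin_sum, cos_sum = 0.0, 0.0, 0.0
        for _ in range(n_steps):
            db = sd * rng.gauss(0.0, 1.0)
            sin_sum += math.sin(b) * db  # left-endpoint evaluation
            cos_sum += math.cos(b) * db
            b += db
        total += sin_sum ** 2 + cos_sum ** 2
    return total / n_paths

estimate = sin_cos_check()
print(f"Monte Carlo: {estimate:.3f}, t = 2")
```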

Exercise 4

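Calculate

$$E\Big[\int_0^t 1\,dB_s \cdot \int_0^t B_s^2\,dB_s\Big].$$

Hint: $xy = \frac{1}{4}\big[(x + y)^2 - (x - y)^2\big]$.

Using the hint, we set $X = \int_0^t 1\,dB_s$ and $Y = \int_0^t B_s^2\,dB_s$:

$$E[XY] = E\Big[\frac{1}{4}\big((X + Y)^2 - (X - Y)^2\big)\Big] = \frac{1}{4}E\big[(X + Y)^2\big] - \frac{1}{4}E\big[(X - Y)^2\big]$$

Substituting back, we now have:

$$E[XY] = \frac{1}{4}E\Big[\Big(\int_0^t 1\,dB_s + \int_0^t B_s^2\,dB_s\Big)^2\Big] - \frac{1}{4}E\Big[\Big(\int_0^t 1\,dB_s - \int_0^t B_s^2\,dB_s\Big)^2\Big]$$
$$= \frac{1}{4}E\Big[\Big(\int_0^t (1 + B_s^2)\,dB_s\Big)^2\Big] - \frac{1}{4}E\Big[\Big(\int_0^t (1 - B_s^2)\,dB_s\Big)^2\Big]$$

We apply the Itô isometry:

$$\frac{1}{4}\int_0^t E\big[(1 + B_s^2)^2\big]\,ds - \frac{1}{4}\int_0^t E\big[(1 - B_s^2)^2\big]\,ds \qquad (4.I)$$

We square the functions:

$$(1 + B_s^2)^2 = 1 + 2B_s^2 + B_s^4, \qquad (1 - B_s^2)^2 = 1 - 2B_s^2 + B_s^4$$

Distribute and use the expectation:

$$E[1 + 2B_s^2 + B_s^4] = 1 + 2s + 3s^2, \qquad E[1 - 2B_s^2 + B_s^4] = 1 - 2s + 3s^2$$

Returning to the integral (4.I), we have:

$$\frac{1}{4}\int_0^t (1 + 2s + 3s^2)\,ds - \frac{1}{4}\int_0^t (1 - 2s + 3s^2)\,ds = \frac{1}{4}\int_0^t (1 + 2s + 3s^2 - 1 + 2s - 3s^2)\,ds$$
$$= \frac{1}{4}\int_0^t 4s\,ds = \int_0^t s\,ds = \Big[\frac{1}{2}s^2\Big]_0^t = \frac{1}{2}t^2$$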
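A simulation sketch for Exercise 4 (our own; grid, sample size, seed and the helper name `cross_moment` are arbitrary). Note that $\int_0^t 1\,dB_s = B_t$ exactly, so only the second integral needs a left-point sum. On the grid the expectation equals the Riemann sum $\sum_j t_j\Delta t_j$ (here $0.49$), which tends to $t^2/2$.

```python
import math
import random

def cross_moment(t=1.0, n_steps=50, n_paths=20_000, seed=7):
    """Monte Carlo estimate of E[∫_0^t 1 dB · ∫_0^t B^2 dB] with left-point sums."""
    rng = random.Random(seed)
    sd = math.sqrt(t / n_steps)
    total = 0.0
    for _ in range(n_paths):
        b, ito_sum = 0.0, 0.0
        for _ in range(n_steps):
            db = sd * rng.gauss(0.0, 1.0)
            ito_sum += b * b * db  # B_{t_j}^2 ΔB_j
            b += db
        total += b * ito_sum       # ∫_0^t 1 dB_s = B_t exactly
    return total / n_paths

estimate = cross_moment()
print(f"Monte Carlo: {estimate:.3f}, t^2/2 = 0.5")
```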

Exercise 5

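Prove that

$$E\Big[\int_S^T f(t, \omega)\,dB_t \cdot \int_S^T g(t, \omega)\,dB_t\Big] = \int_S^T E\big[f(t, \omega)g(t, \omega)\big]\,dt.$$

Applying the hint for Exercise 4, with $X = \int_S^T f(t, \omega)\,dB_t$ and $Y = \int_S^T g(t, \omega)\,dB_t$, we get:

$$E[XY] = \frac{1}{4}E\big[(X + Y)^2 - (X - Y)^2\big] = \frac{1}{4}E\Big[\Big(\int_S^T f(t, \omega)\,dB_t + \int_S^T g(t, \omega)\,dB_t\Big)^2 - \Big(\int_S^T f(t, \omega)\,dB_t - \int_S^T g(t, \omega)\,dB_t\Big)^2\Big]$$
$$= \frac{1}{4}E\Big[\Big(\int_S^T \big(f(t, \omega) + g(t, \omega)\big)\,dB_t\Big)^2 - \Big(\int_S^T \big(f(t, \omega) - g(t, \omega)\big)\,dB_t\Big)^2\Big]$$

By the Itô isometry:

$$= \frac{1}{4}\int_S^T E\big[(f(t, \omega) + g(t, \omega))^2\big]\,dt - \frac{1}{4}\int_S^T E\big[(f(t, \omega) - g(t, \omega))^2\big]\,dt = \int_S^T \frac{1}{4}E\big[(f(t, \omega) + g(t, \omega))^2 - (f(t, \omega) - g(t, \omega))^2\big]\,dt$$

We can now use the hint the other way, and arrive at the desired result:

$$= \int_S^T E\big[f(t, \omega)g(t, \omega)\big]\,dt$$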

=============================== 19/02-2010

Itô's Formula. Consider the integral

$$\int_0^t B_s\,dB_s,$$

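where we make the approximation

$$\varphi_n(s, \omega) = \sum_j B_{t_j}I_{(t_j, t_{j+1})}(s).$$

Clearly $\varphi_n \in \mathcal{V}$. We must prove that the difference is negligible:

$$E\Big[\int_0^t (B_s - \varphi_n(s))^2\,ds\Big] = E\Big[\sum_j\int_{t_j}^{t_{j+1}} (B_s - B_{t_j})^2\,ds\Big] \overset{\text{Fubini}}{=} \sum_j\int_{t_j}^{t_{j+1}} E\big[(B_s - B_{t_j})^2\big]\,ds$$
$$= \sum_j\int_{t_j}^{t_{j+1}} (s - t_j)\,ds = \sum_j \frac{1}{2}(\Delta t_j)^2 \leq \frac{1}{2}\max_j(\Delta t_j)\sum_j \Delta t_j = \frac{1}{2}\max_j(\Delta t_j)\,t \to 0$$

The functions are arbitrarily close to each other.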

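Lemma 1. For $\Delta B_j = B_{t_{j+1}} - B_{t_j}$, we know that

$$\sum_j (\Delta B_j)^2 \to t \quad \text{in } L^2.$$

Lemma 2.

$$A(B - A) = \frac{1}{2}\big(B^2 - A^2 - (B - A)^2\big)$$

Returning to the integral:

$$\int_0^t B_s\,dB_s = \lim_{n\to\infty}\int_0^t \varphi_n\,dB_s = \lim_{n\to\infty}\sum_j B_{t_j}\big(B_{t_{j+1}} - B_{t_j}\big)$$

We now have the same form as in Lemma 2, with $A = B_{t_j}$ and $B = B_{t_{j+1}}$. We get:

$$\lim_{n\to\infty}\sum_j \frac{1}{2}\Big(B_{t_{j+1}}^2 - B_{t_j}^2 - (\Delta B_j)^2\Big)$$

where we see that $\sum_j \big(B_{t_{j+1}}^2 - B_{t_j}^2\big)$ is a telescoping sum. Since $B_0 = 0$, we use Lemma 1 and get:

$$\lim_{n\to\infty}\Big[\frac{1}{2}(B_t^2 - 0^2) - \frac{1}{2}\sum_j (\Delta B_j)^2\Big] = \frac{1}{2}B_t^2 - \frac{1}{2}t$$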
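Lemmas 1 and 2 can be seen on a single simulated path. The sketch below (our own; one path on a uniform grid, arbitrary seed, hypothetical helper `ito_sum_BdB`) computes the left-point sum $\sum_j B_{t_j}\Delta B_j$. Summing Lemma 2 over the grid gives the exact identity $\sum_j B_{t_j}\Delta B_j = \frac{1}{2}B_t^2 - \frac{1}{2}\sum_j(\Delta B_j)^2$, and Lemma 1 says the last sum is close to $t$ on a fine grid.

```python
import math
import random

def ito_sum_BdB(t=1.0, n_steps=100_000, seed=8):
    """Simulate one Brownian path on a uniform grid and return
    (left-point sum of B dB, B_t, realised quadratic variation)."""
    rng = random.Random(seed)
    sd = math.sqrt(t / n_steps)
    b, ito_sum, quad_var = 0.0, 0.0, 0.0
    for _ in range(n_steps):
        db = sd * rng.gauss(0.0, 1.0)
        ito_sum += b * db    # B_{t_j} (B_{t_{j+1}} - B_{t_j})
        quad_var += db * db  # (ΔB_j)^2
        b += db
    return ito_sum, b, quad_var

t = 1.0
ito_sum, b_t, quad_var = ito_sum_BdB(t)
print(f"sum B dB = {ito_sum:.4f}, (B_t^2 - t)/2 = {(b_t ** 2 - t) / 2:.4f}, QV = {quad_var:.4f}")
```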

We need a more efficient machinery to calculate Itô integrals. We are going to introduce the Itô formula. First some notation.

$$X_t = X_0 + \int_0^t u(s, \omega)\,ds + \int_0^t v(s, \omega)\,dB_s$$

This is called an Itô process, or a stochastic integral. The shorthand notation is

$$dX_t = u\,dt + v\,dB_t.$$

Since we don't have any differentiation theory for functions of Brownian motion, this is a slightly dubious equation, but by it we really mean the full integral expression for $X_t$ above.

Itô's Formula

Let $g(t, x) \in C^2([0, T] \times \mathbb{R})$, and let $X_t$ be an Itô process. Then $Y_t = g(t, X_t)$ is again an Itô process, and

$$dY_t = \frac{\partial g}{\partial t}(t, X_t)\,dt + \frac{\partial g}{\partial x}(t, X_t)\,dX_t + \frac{1}{2}\frac{\partial^2 g}{\partial x^2}(t, X_t)(dX_t)^2. \qquad (I.1)$$

As earlier, $dX_t = u\,dt + v\,dB_t$, but we also have to compute $(dX_t)^2$. For this we have a set of formal rules we must use:

$$dt \cdot dt = dt \cdot dB_t = dB_t \cdot dt = 0, \qquad dB_t \cdot dB_t = dt.$$

This last little fact is central to much of the Itô calculus! Multiplying out the parentheses, the $(dX_t)^2$-term becomes an ordinary Lebesgue ($dt$) integral:

$$(dX_t)^2 = (u\,dt + v\,dB_t)^2 = u^2(dt)^2 + 2uv\,dt\,dB_t + v^2(dB_t)^2 = 0 + 0 + v^2\,dt = v^2\,dt$$
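The formal rules can be made plausible (not proved) numerically. This sketch (our own, with arbitrary grid sizes, seed and helper name `increment_products`) sums the products of increments along one path: $\sum(\Delta t)^2 = t^2/n$ and $\sum|\Delta t\,\Delta B|$ shrink as the grid is refined, while $\sum(\Delta B)^2$ stays close to $t$, mirroring $dt\cdot dt = dt\cdot dB_t = 0$ and $dB_t\cdot dB_t = dt$.

```python
import math
import random

def increment_products(t=1.0, n_steps=1000, seed=9):
    """For one Brownian path on a uniform grid, return the sums
    Σ(Δt)^2, Σ|Δt ΔB| and Σ(ΔB)^2 that mimic dt·dt, dt·dB and dB·dB."""
    rng = random.Random(seed)
    dt = t / n_steps
    sd = math.sqrt(dt)
    dt_dt = dt_db = db_db = 0.0
    for _ in range(n_steps):
        db = sd * rng.gauss(0.0, 1.0)
        dt_dt += dt * dt
        dt_db += abs(dt * db)
        db_db += db * db
    return dt_dt, dt_db, db_db

for n in (10, 100, 10_000):
    print(n, [round(x, 4) for x in increment_products(n_steps=n)])
```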

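Proof:

We assume that $g$, $\frac{\partial g}{\partial x}$, $\frac{\partial g}{\partial t}$, $\frac{\partial^2 g}{\partial x^2}$, $\frac{\partial^2 g}{\partial x\partial t}$ and $\frac{\partial^2 g}{\partial t^2}$ are bounded functions; the proof can later be extended to the unbounded case. We also assume that $u$ and $v$ are elementary functions; this too can be extended to general $u$ and $v$ by approximation.

Writing $g(t, X_t)$ as a telescoping sum over a partition $0 = t_0 < t_1 < \dots < t_N = t$, we get:

$$g(t, X_t) = g(0, X_0) + \sum_j \big(g(t_{j+1}, X_{t_{j+1}}) - g(t_j, X_{t_j})\big)$$

We write each increment as a second-order Taylor expansion, with the partial derivatives evaluated at $(t_j, X_{t_j})$:

$$= g(0, X_0) + \sum_j \frac{\partial g}{\partial t}\Delta t_j + \sum_j \frac{\partial g}{\partial x}\Delta X_j + \frac{1}{2}\sum_j \frac{\partial^2 g}{\partial t^2}(\Delta t_j)^2 + \sum_j \frac{\partial^2 g}{\partial t\partial x}(\Delta t_j)(\Delta X_j) + \frac{1}{2}\sum_j \frac{\partial^2 g}{\partial x^2}(\Delta X_j)^2 + \sum_j R_j$$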

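Now we examine each of the six terms in the expression individually and see what happens.

$$\sum_j \frac{\partial g}{\partial t}(t_j, X_{t_j})\Delta t_j \to \int_0^t \frac{\partial g}{\partial t}(s, X_s)\,ds \qquad (1)$$

We recognised this as a Riemann sum, which converges to the integral since the integrand is bounded and continuous.

$$\sum_j \frac{\partial g}{\partial x}\Delta X_j = \sum_j \frac{\partial g}{\partial x}\big(u_j\Delta t_j + v_j\Delta B_j\big) \to \int_0^t \frac{\partial g}{\partial x}(s, X_s)u(s)\,ds + \int_0^t \frac{\partial g}{\partial x}(s, X_s)v(s)\,dB_s \qquad (2)$$

We did the same here: the $\Delta t_j$-part is a Riemann sum, while the $\Delta B_j$-part converges (in $L^2$) to the Itô integral, since $\frac{\partial g}{\partial x}(t_j, X_{t_j})v_j$ is an elementary approximation of the integrand.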

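$$\frac{1}{2}\sum_j \frac{\partial^2 g}{\partial t^2}(\Delta t_j)^2 \leq C\sum_j (\Delta t_j)^2 \to 0 \qquad (3)$$

Since $\frac{\partial^2 g}{\partial t^2}$ is bounded, there is some constant $C$ with $\frac{1}{2}\big|\frac{\partial^2 g}{\partial t^2}\big| \leq C$, and $\sum_j (\Delta t_j)^2 \leq \max_j(\Delta t_j)\,t \to 0$.

$$\Big|\sum_j \frac{\partial^2 g}{\partial t\partial x}(\Delta t_j)(\Delta X_j)\Big| \leq \sum_j \Big|\frac{\partial^2 g}{\partial t\partial x}\Big||\Delta t_j||\Delta X_j| \qquad (4)$$

To obtain an upper bound, we use absolute values everywhere. Since $\frac{\partial^2 g}{\partial t\partial x}$ is bounded (and we take the elementary functions $u$ and $v$ to be bounded as well), we can again use constants:

$$C\sum_j |\Delta t_j|\big(|u_j||\Delta t_j| + |v_j||\Delta B_j|\big) \leq \tilde{C}\sum_j \big(|\Delta t_j|^2 + |\Delta t_j||\Delta B_j|\big)$$

In this expression we have $|\Delta t_j|^2$, which, as in term (3), tends to 0. We can continue with just the last part of the expression.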

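For the last part we show that $\sum_j |\Delta t_j||\Delta B_j| \to 0$ in $L^2$, so we consider

$$E\Big[\Big(\sum_j |\Delta t_j||\Delta B_j|\Big)^2\Big]$$

We use the double-sum argument:

$$= E\Big[\sum_{i,j}|\Delta t_i||\Delta B_i||\Delta t_j||\Delta B_j|\Big]$$

We can move the deterministic factors $|\Delta t_i|$ outside the expectation and use independence of the increments for $i \neq j$:

$$= \sum_{i,j}|\Delta t_i||\Delta t_j|\,E\big[|\Delta B_i||\Delta B_j|\big] = \sum_{i \neq j}|\Delta t_i||\Delta t_j|\,E\big[|\Delta B_i|\big]\,E\big[|\Delta B_j|\big] + \sum_i |\Delta t_i||\Delta t_i|\,E\big[|\Delta B_i|^2\big]$$

Now we use $E[|\Delta B_i|^2] = \Delta t_i$ and the Hölder inequality $E[|f|] \leq E[f^2]^{1/2}$, which gives $E[|\Delta B_i|] \leq \sqrt{\Delta t_i}$. This yields

$$\leq \sum_{i \neq j}|\Delta t_i||\Delta t_j|\sqrt{|\Delta t_i|}\sqrt{|\Delta t_j|} + \sum_i |\Delta t_i|^2|\Delta t_i|.$$

The diagonal terms $|\Delta t_i|^3 = \big(|\Delta t_i|\sqrt{\Delta t_i}\big)^2$ have the same form as the off-diagonal ones, so we can merge the double sum back and get

$$= \Big(\sum_i |\Delta t_i|\sqrt{\Delta t_i}\Big)^2 \leq \Big(\max_i\sqrt{\Delta t_i}\;t\Big)^2 \to 0.$$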

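We now consider the fifth term, which is quite important:

$$\frac{1}{2}\sum_j \frac{\partial^2 g}{\partial x^2}(\Delta X_j)^2 = \frac{1}{2}\sum_j \frac{\partial^2 g}{\partial x^2}\big(u_j\Delta t_j + v_j\Delta B_j\big)^2 = \frac{1}{2}\sum_j \frac{\partial^2 g}{\partial x^2}\Big(u_j^2(\Delta t_j)^2 + 2u_jv_j\Delta t_j\Delta B_j + v_j^2(\Delta B_j)^2\Big) \qquad (5)$$

Here the sums with $u_j^2(\Delta t_j)^2$ and $2u_jv_j\Delta t_j\Delta B_j$ tend to 0 (as in terms (3) and (4)), so all we have to consider is the last part:

$$\sum_j \frac{\partial^2 g}{\partial x^2}v_j^2(\Delta B_j)^2$$

$(\Delta B_j)^2$ is very closely related to $\Delta t_j$ in $L^2$. We consider the difference

$$E\Big[\Big(\sum_j \frac{\partial^2 g}{\partial x^2}v_j^2(\Delta B_j)^2 - \sum_j \frac{\partial^2 g}{\partial x^2}v_j^2\Delta t_j\Big)^2\Big] = E\Big[\Big(\sum_j \frac{\partial^2 g}{\partial x^2}v_j^2\big((\Delta B_j)^2 - \Delta t_j\big)\Big)^2\Big] \qquad (F1)$$

We use the double-sum argument:

$$= E\Big[\sum_{i,j}\frac{\partial^2 g}{\partial x^2}(t_i, X_{t_i})\frac{\partial^2 g}{\partial x^2}(t_j, X_{t_j})v_i^2v_j^2\big((\Delta B_i)^2 - \Delta t_i\big)\big((\Delta B_j)^2 - \Delta t_j\big)\Big]$$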

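We consider the case $i < j$. Then everything is $\mathcal{F}_{t_j}$-measurable except $(\Delta B_j)^2 - \Delta t_j$, which is independent of the rest. Since also $E[(\Delta B_j)^2 - \Delta t_j] = 0$, these terms have expectation 0. We get the exact same result for $j < i$. Thus we only have to worry about the case $i = j$. Since $\frac{\partial^2 g}{\partial x^2}$ and $v$ are bounded:

$$E\Big[\sum_i\Big(\frac{\partial^2 g}{\partial x^2}\Big)^2v_i^4\big((\Delta B_i)^2 - \Delta t_i\big)^2\Big] \leq C\,E\Big[\sum_i\big((\Delta B_i)^2 - \Delta t_i\big)^2\Big].$$

The differences $(\Delta B_i)^2 - \Delta t_i$ are small in $L^2$: since $E[(\Delta B_i)^4] = 3(\Delta t_i)^2$, we have $E\big[((\Delta B_i)^2 - \Delta t_i)^2\big] = 3(\Delta t_i)^2 - 2(\Delta t_i)^2 + (\Delta t_i)^2 = 2(\Delta t_i)^2$, so

$$C\,E\Big[\sum_i\big((\Delta B_i)^2 - \Delta t_i\big)^2\Big] = 2C\sum_i (\Delta t_i)^2 \to 0.$$

The difference between the sum with $(\Delta B_j)^2$ and the sum with $\Delta t_j$ is negligible, which is connected to the rule $(dB_t)^2 = dt$. The fifth term in our original expression is arbitrarily close to the same term with $\Delta t_j$, and this term converges to a Riemann integral. In summary, the fifth term can be written as a Riemann integral: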

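$$\frac{1}{2}\sum_j \frac{\partial^2 g}{\partial x^2}v_j^2(\Delta B_j)^2 \to \frac{1}{2}\int_0^t \frac{\partial^2 g}{\partial x^2}(s, X_s)v^2(s, \omega)\,ds$$

The last term is the remainder, $R_j = o\big(|\Delta t_j|^2 + |\Delta X_j|^2\big)$, and the sum of these terms tends to zero.

In conclusion, this means that

$$Y_t = g(t, X_t) = g(0, X_0) + \int_0^t \frac{\partial g}{\partial t}(s, X_s)\,ds + \int_0^t \frac{\partial g}{\partial x}(s, X_s)u(s)\,ds + \int_0^t \frac{\partial g}{\partial x}(s, X_s)v(s)\,dB_s + \frac{1}{2}\int_0^t \frac{\partial^2 g}{\partial x^2}(s, X_s)v^2(s, \omega)\,ds$$

Using $(dX_s)^2 = v^2\,ds$ and $dX_s = u\,ds + v\,dB_s$, we get our conclusion, and we have proved the Itô formula:

$$Y_t = g(0, X_0) + \int_0^t \frac{\partial g}{\partial t}(s, X_s)\,ds + \int_0^t \frac{\partial g}{\partial x}(s, X_s)\,dX_s + \frac{1}{2}\int_0^t \frac{\partial^2 g}{\partial x^2}(s, X_s)(dX_s)^2 \qquad \text{Q.E.D.}$$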

We consider a few examples involving the formula. We begin by integrating the one we started with, just to see how much easier it is with the formula.

Example

Calculate

$$\int_0^t B_s\,dB_s.$$

We use the Itô process $X_t = B_t$, or in other words:

$$dX_t = 0\,dt + 1\,dB_t.$$

We have to choose a twice differentiable function $g$. Evaluating the integral as if it were an ordinary integral, $\int x\,dx = \frac{1}{2}x^2$, suggests a reasonable choice, so we set $g(t, x) = \frac{1}{2}x^2$.

Now we apply Itô's formula, whose general form is:

$$dg(t, X_t) = \frac{\partial g}{\partial t}\,dt + \frac{\partial g}{\partial x}\,dX_t + \frac{1}{2}\frac{\partial^2 g}{\partial x^2}(dX_t)^2.$$

We calculate each of these parts. With $g(t, x) = \frac{1}{2}x^2$:

$$\frac{\partial g}{\partial t}(t, x) = 0 \implies \frac{\partial g}{\partial t}(t, X_t)\,dt = 0, \qquad \frac{\partial g}{\partial x}(t, x) = x.$$

Substituting $X_t$ for $x$, which is $X_t = B_t$ in this case:

$$\implies \frac{\partial g}{\partial x}(t, X_t)\,dX_t = B_t\,dB_t$$

Finally,

$$\frac{\partial^2 g}{\partial x^2}(t, x) = 1.$$

With $X_t = B_t$, $(dX_t)^2 = (dB_t)^2 = dt$ by the formal rules of Itô calculus. So

$$\frac{1}{2}\frac{\partial^2 g}{\partial x^2}(dX_t)^2 = \frac{1}{2}(1)\,dt = \frac{1}{2}\,dt.$$

Substituting our results above into Itô's formula, and recalling that $g(t, X_t) = \frac{1}{2}B_t^2$, we have

$$d\Big(\frac{1}{2}B_t^2\Big) = 0 + B_t\,dB_t + \frac{1}{2}\,dt = B_t\,dB_t + \frac{1}{2}\,dt$$

Since $Y_t = g(t, X_t)$ is an Itô process, we now have the short form

$$dY_t = u\,dt + v\,dB_t,$$

and expanding this to the full form results in

$$Y_t = Y_0 + \int_0^t u\,ds + \int_0^t v\,dB_s.$$

In this example, this means that the short version

$$d\Big(\frac{1}{2}B_t^2\Big) = B_t\,dB_t + \frac{1}{2}\,dt$$

in expanded form is

$$\frac{1}{2}B_t^2 = \frac{1}{2}B_0^2 + \int_0^t \frac{1}{2}\,ds + \int_0^t B_s\,dB_s$$

or, equivalently, using that $B_0 = 0$,

$$\int_0^t B_s\,dB_s = \frac{1}{2}B_t^2 - \frac{1}{2}t,$$

which is the solution to the problem.

Example 2

We want to compute

$$\int_0^t s\,dB_s.$$

This is somewhat different since we now have a deterministic function $s$ in the integral. As is usually the case, we set $X_t = B_t$, and we make the not so obvious choice $g(t, x) = tx$. We calculate the needed partial derivatives:

$$g(t, x) = tx \implies \frac{\partial g}{\partial t} = x, \qquad \frac{\partial g}{\partial x} = t, \qquad \frac{\partial^2 g}{\partial x^2} = 0$$

Using Itô's formula, we get

$$d\big(g(t, X_t)\big) = \frac{\partial g}{\partial t}\,dt + \frac{\partial g}{\partial x}\,dX_t + \frac{1}{2}\frac{\partial^2 g}{\partial x^2}(dX_t)^2$$
$$d(tB_t) = B_t\,dt + t\,dB_t + 0$$

We recognise this as the shorthand version of an Itô process with $u\,dt = B_t\,dt$ and $v\,dB_t = t\,dB_t$. Since $g(0, B_0) = 0 \cdot B_0 = 0$, expanding gives

$$tB_t = 0 + \int_0^t B_s\,ds + \int_0^t s\,dB_s \implies \int_0^t s\,dB_s = tB_t - \int_0^t B_s\,ds$$

and we have solved the integral. This can also be seen as a version of integration by parts. We also see that we have reduced a stochastic integral to a product and a Lebesgue integral, which is easier to compute.
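On a grid, the result of Example 2 holds exactly: it is summation by parts (Abel summation). With $t_0 = 0$, $\sum_j t_j(B_{t_{j+1}} - B_{t_j}) = t_NB_{t_N} - \sum_j B_{t_{j+1}}(t_{j+1} - t_j)$, where the last sum is a right-endpoint Riemann sum for $\int_0^t B_s\,ds$. The sketch below (our own; one simulated path, arbitrary grid and seed, hypothetical helper `check_s_dB`) checks the identity numerically; the two printed numbers should agree up to rounding.

```python
import math
import random

def check_s_dB(t=1.0, n_steps=1000, seed=10):
    """On one simulated path, compare the left-point Itô sum for ∫ s dB_s
    with t*B_t minus a right-endpoint Riemann sum for ∫ B_s ds."""
    rng = random.Random(seed)
    dt = t / n_steps
    sd = math.sqrt(dt)
    b, ito_sum, riemann_sum = 0.0, 0.0, 0.0
    for j in range(n_steps):
        s_j = j * dt                # left endpoint t_j
        db = sd * rng.gauss(0.0, 1.0)
        ito_sum += s_j * db         # t_j (B_{t_{j+1}} - B_{t_j})
        b += db
        riemann_sum += b * dt       # B_{t_{j+1}} Δt_j
    return ito_sum, t * b - riemann_sum

lhs, rhs = check_s_dB()
print(lhs, rhs)
```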

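Multi-dimensional Itô-formula

This is the case when we have more than one Brownian motion. For $m \in \mathbb{N}$, we have the $m$-dimensional vector

$$B_t = \big(B_1(t), B_2(t), \dots, B_m(t)\big).$$

For $n$ stochastic processes we have:

$$dX_1 = u_1\,dt + v_{11}\,dB_1 + v_{12}\,dB_2 + \dots + v_{1m}\,dB_m$$
$$dX_2 = u_2\,dt + v_{21}\,dB_1 + v_{22}\,dB_2 + \dots + v_{2m}\,dB_m$$
$$\vdots$$
$$dX_n = u_n\,dt + v_{n1}\,dB_1 + v_{n2}\,dB_2 + \dots + v_{nm}\,dB_m$$

which we can simply write as

$$dX_t = u\,dt + v\,dB_t$$

where

$$X_t = \begin{pmatrix} X_1 \\ \vdots \\ X_n \end{pmatrix}, \quad u = \begin{pmatrix} u_1 \\ \vdots \\ u_n \end{pmatrix}, \quad v = \begin{pmatrix} v_{11} & \cdots & v_{1m} \\ \vdots & \ddots & \vdots \\ v_{n1} & \cdots & v_{nm} \end{pmatrix}, \quad dB_t = \begin{pmatrix} dB_1 \\ \vdots \\ dB_m \end{pmatrix},$$

and where we define $g(t, x) = \big(g_1(t, x), \dots, g_p(t, x)\big)$ for $t \in [0, \infty)$ and $x \in \mathbb{R}^n$, with each $g_k$ twice continuously differentiable.

Moving on to the multi-dimensional Itô formula, $Y_t = g(t, X_t)$ satisfies, for each component $k$,

$$dY_k = \frac{\partial g_k}{\partial t}(t, X_t)\,dt + \sum_{i=1}^n \frac{\partial g_k}{\partial x_i}(t, X_t)\,dX_i + \frac{1}{2}\sum_{i,j=1}^n \frac{\partial^2 g_k}{\partial x_i\partial x_j}(t, X_t)\,(dX_i)(dX_j).$$

This is not so different from the one-dimensional case, except that we have sums. In this case we also have some formal rules, which are easily transferred from the one-dimensional case:

$$dB_i\,dB_j = \begin{cases} dt & \text{if } i = j \\ 0 & \text{if } i \neq j \end{cases}, \qquad dt\,dB_i = dB_i\,dt = dt\,dt = 0.$$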

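Example

We will calculate the first row for a 2-dimensional stochastic process. Let $X_t = \big(B_1(t), B_2(t)\big)$ and

$$Y_t = \begin{pmatrix} tB_1(t)^3B_2(t)^2 \\ e^tB_2(t) \end{pmatrix}, \qquad g(t, x_1, x_2) = \begin{pmatrix} tx_1^3x_2^2 \\ e^tx_2 \end{pmatrix}.$$

The rows of $Y$ and $g$ correspond to $Y_1$, $Y_2$ and $g_1$, $g_2$. We will calculate $dY_1$:

$$dY_1 = \frac{\partial g_1}{\partial t}\,dt + \frac{\partial g_1}{\partial x_1}\,dX_1 + \frac{\partial g_1}{\partial x_2}\,dX_2 + \frac{1}{2}\frac{\partial^2 g_1}{\partial x_1^2}(dX_1)^2 + \frac{1}{2}\frac{\partial^2 g_1}{\partial x_2\partial x_1}(dX_1)(dX_2) + \frac{1}{2}\frac{\partial^2 g_1}{\partial x_1\partial x_2}(dX_1)(dX_2) + \frac{1}{2}\frac{\partial^2 g_1}{\partial x_2^2}(dX_2)^2$$

We observe that $X_1 = B_1(t)$ and $X_2 = B_2(t)$, and by the formal rules stated above $dB_1\,dB_2 = dB_2\,dB_1 = 0$, so two of the terms in the expression above become 0. We are left with:

$$dY_1 = \frac{\partial g_1}{\partial t}\,dt + \frac{\partial g_1}{\partial x_1}\,dX_1 + \frac{\partial g_1}{\partial x_2}\,dX_2 + \frac{1}{2}\frac{\partial^2 g_1}{\partial x_1^2}(dX_1)^2 + \frac{1}{2}\frac{\partial^2 g_1}{\partial x_2^2}(dX_2)^2$$

where we can use that $(dX_i)^2 = (dB_i)^2 = dt$, which reduces the problem to:

$$dY_1 = \frac{\partial g_1}{\partial t}\,dt + \frac{\partial g_1}{\partial x_1}\,dX_1 + \frac{\partial g_1}{\partial x_2}\,dX_2 + \frac{1}{2}\frac{\partial^2 g_1}{\partial x_1^2}\,dt + \frac{1}{2}\frac{\partial^2 g_1}{\partial x_2^2}\,dt$$

Now we evaluate the derivatives. Here $g_1(t, X) = g_1(t, B_1, B_2) = tB_1(t)^3B_2(t)^2$, and

$$\frac{\partial g_1}{\partial t}(t, B_1, B_2) = B_1^3B_2^2, \quad \frac{\partial g_1}{\partial x_1}(t, B_1, B_2) = 3tB_1^2B_2^2, \quad \frac{\partial^2 g_1}{\partial x_1^2}(t, B_1, B_2) = 6tB_1B_2^2,$$
$$\frac{\partial g_1}{\partial x_2}(t, B_1, B_2) = 2tB_1^3B_2, \quad \frac{\partial^2 g_1}{\partial x_2^2}(t, B_1, B_2) = 2tB_1^3.$$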

We substitute these in the expression, and get:

$$dY_1 = B_1^3B_2^2\,dt + 3tB_1^2B_2^2\,dB_1 + 2tB_1^3B_2\,dB_2 + \frac{1}{2}\cdot 6tB_1B_2^2\,dt + \frac{1}{2}\cdot 2tB_1^3\,dt$$
$$= B_1^3B_2^2\,dt + 3tB_1^2B_2^2\,dB_1 + 2tB_1^3B_2\,dB_2 + 3tB_1B_2^2\,dt + tB_1^3\,dt$$
$$= \big(B_1^3B_2^2 + 3tB_1B_2^2 + tB_1^3\big)\,dt + 3tB_1^2B_2^2\,dB_1 + 2tB_1^3B_2\,dB_2$$

This is the first row in the solution $Y_t$.
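Because this computation is mechanical, a finite-difference check of the partial derivatives is a cheap way to catch slips. The sketch below is our own: the evaluation point, the step sizes `h` and the helper names are arbitrary. It compares the $dt$-coefficient $\frac{\partial g_1}{\partial t} + \frac{1}{2}\big(\frac{\partial^2 g_1}{\partial x_1^2} + \frac{\partial^2 g_1}{\partial x_2^2}\big)$ and the two $dB$-coefficients of $dY_1$ against central differences of $g_1$.

```python
def g1(t, x1, x2):
    return t * x1 ** 3 * x2 ** 2

def drift_finite_difference(t, x1, x2, h=1e-4):
    """Approximate ∂g1/∂t + ½(∂²g1/∂x1² + ∂²g1/∂x2²) with central differences."""
    d_t = (g1(t + h, x1, x2) - g1(t - h, x1, x2)) / (2 * h)
    d_x1x1 = (g1(t, x1 + h, x2) - 2 * g1(t, x1, x2) + g1(t, x1 - h, x2)) / h ** 2
    d_x2x2 = (g1(t, x1, x2 + h) - 2 * g1(t, x1, x2) + g1(t, x1, x2 - h)) / h ** 2
    return d_t + 0.5 * (d_x1x1 + d_x2x2)

def drift_formula(t, x1, x2):
    """The dt-coefficient of dY_1 derived above."""
    return x1 ** 3 * x2 ** 2 + 3 * t * x1 * x2 ** 2 + t * x1 ** 3

def diffusion_finite_difference(t, x1, x2, h=1e-5):
    """Central differences for ∂g1/∂x1 and ∂g1/∂x2 (the dB_1 and dB_2 coefficients)."""
    d_x1 = (g1(t, x1 + h, x2) - g1(t, x1 - h, x2)) / (2 * h)
    d_x2 = (g1(t, x1, x2 + h) - g1(t, x1, x2 - h)) / (2 * h)
    return d_x1, d_x2

def diffusion_formula(t, x1, x2):
    return 3 * t * x1 ** 2 * x2 ** 2, 2 * t * x1 ** 3 * x2

point = (0.7, 1.3, -0.4)  # (t, B_1, B_2)
print(drift_finite_difference(*point), drift_formula(*point))
print(diffusion_finite_difference(*point), diffusion_formula(*point))
```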

Martingale Representation

First some preparation:

Doob-Dynkin Lemma.

Let $X$ and $Y$ be two random variables on $\Omega$, taking values in $\mathbb{R}^n$. Assume that $Y$ is $\sigma(X)$-measurable, where $\sigma(X)$ is the $\sigma$-algebra generated by $X$.

Then there exists a (Borel measurable) function $f$ such that $Y = f(X)$; this is the only way $Y$ can be measurable with respect to that $\sigma$-algebra.

The Fourier Inversion Theorem.

Given a function $\varphi$, we get a new function by performing the Fourier transform:

$$\hat{\varphi}(x) \overset{\text{def}}{=} \Big(\frac{1}{\sqrt{2\pi}}\Big)^n\int_{\mathbb{R}^n} \varphi(y)e^{-ixy}\,dy$$

The theorem says we get the original function back when we perform an inverse Fourier transform on the new function. That is,

$$\varphi(x) = \Big(\frac{1}{\sqrt{2\pi}}\Big)^n\int_{\mathbb{R}^n} \hat{\varphi}(y)e^{ixy}\,dy$$

if $\varphi$ and $\hat{\varphi}$ are both in $L^1(\mathbb{R}^n)$. (If the same sign $e^{-ixy}$ is used in both transforms, one recovers $\varphi(-x)$ instead; for our applications this does not matter.)

Lemma

If $g \in L^2(\mathcal{F}_T)$, where $\mathcal{F}_T$ is the $\sigma$-algebra generated by Brownian motion up to time $T$, then $g$ can be approximated (in $L^2$) by random variables of the form

$$\varphi(B_{t_1}, B_{t_2}, \dots, B_{t_n}), \qquad t_1, \dots, t_n \in [0, T],$$

where $\varphi \in C_0^\infty(\mathbb{R}^n)$ (infinitely differentiable with compact support).

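Lemma (difficult)

Consider random variables of the form

$$\exp\Big[\int_0^T h(t)\,dB_t - \frac{1}{2}\int_0^T h^2(t)\,dt\Big]$$

where $h \in L^2[0, T]$ is a deterministic function with no dependency on $\omega$. The linear span of such random variables is dense in $L^2(\Omega, \mathcal{F}_T)$.

Proof:

Assume that $g \in L^2(\Omega, \mathcal{F}_T)$ satisfies

$$\int_\Omega g(\omega)\exp\Big[\int_0^T h(t)\,dB_t - \frac{1}{2}\int_0^T h^2(t)\,dt\Big]\,dP(\omega) = 0$$

for all $h \in L^2[0, T]$. Since $\int_0^T h^2(t)\,dt$ is constant w.r.t. $dP(\omega)$, we can think of this as $e^{a - b} = e^a/e^b$, i.e. factor the constant out and remove it. We get

$$\int_\Omega g(\omega)\exp\Big[\int_0^T h(t)\,dB_t\Big]\,dP(\omega) = 0.$$

Now we put

$$h(t) = \lambda_1I_{(0, t_1)}(t) + \lambda_2I_{(0, t_2)}(t) + \dots + \lambda_nI_{(0, t_n)}(t),$$

which obviously is in $L^2$ since it is bounded. Since

$$\int_0^T \lambda I_{(0, a)}(t)\,dB_t = \lambda B_a,$$

we now have

$$\int_\Omega g(\omega)\exp\big[\lambda_1B_{t_1} + \lambda_2B_{t_2} + \dots + \lambda_nB_{t_n}\big]\,dP(\omega) = 0$$

for all $\lambda = (\lambda_1, \dots, \lambda_n) \in \mathbb{R}^n$. We now define a function on $\mathbb{C}^n$:

$$G(z) = \int_\Omega g(\omega)\exp\big[z_1B_{t_1} + \dots + z_nB_{t_n}\big]\,dP(\omega),$$

which is an analytic (entire) function. Since $G(z)$ is zero on the real subspace $\mathbb{R}^n$, it must be zero everywhere, i.e. $G \equiv 0$. This is clear if we look at the series expansion (in one variable): all derivatives at 0 can be computed along the real axis, where the function vanishes, so

$$f(z) = \sum_n a_nz^n, \qquad a_n = \frac{f^{(n)}(0)}{n!} = 0 \implies f(z) = 0.$$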

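Now we let $\varphi \in C_0^\infty(\mathbb{R}^n)$ be arbitrary and consider

$$\int_\Omega \varphi(B_{t_1}, B_{t_2}, \dots, B_{t_n})\,g(\omega)\,dP(\omega).$$

We do the Fourier inversion, using $(x_1, \dots, x_n) = (B_{t_1}, \dots, B_{t_n})$ in the Fourier inversion theorem:

$$= \int_\Omega \Big(\frac{1}{\sqrt{2\pi}}\Big)^n\int_{\mathbb{R}^n} \hat{\varphi}(y)e^{iy_1B_{t_1} + iy_2B_{t_2} + \dots + iy_nB_{t_n}}\,dy\;g(\omega)\,dP(\omega)$$

We can now apply Fubini's theorem and interchange the integral signs:

$$= \Big(\frac{1}{\sqrt{2\pi}}\Big)^n\int_{\mathbb{R}^n} \hat{\varphi}(y)\underbrace{\Big(\int_\Omega e^{iy_1B_{t_1} + iy_2B_{t_2} + \dots + iy_nB_{t_n}}g(\omega)\,dP(\omega)\Big)}_{G(z) \text{ with } z_i = iy_i}\,dy$$

Since $G(z)$ is 0 everywhere, so is this entire expression!

Conclusion: If

$$\int_\Omega g(\omega)\exp\Big\{\int_0^T h(t)\,dB_t - \frac{1}{2}\int_0^T h^2(t)\,dt\Big\}\,dP(\omega) = 0$$

for all $h \in L^2[0, T]$, then for any $\varphi \in C_0^\infty(\mathbb{R}^n)$:

$$\int_\Omega \varphi(B_{t_1}, \dots, B_{t_n})\,g(\omega)\,dP = 0 \implies \int_\Omega g^2(\omega)\,dP = 0,$$

since, by the previous lemma, $g$ can be approximated in $L^2$ by functions of the form $\varphi(B_{t_1}, \dots, B_{t_n})$. Since $g \in L^2(\mathcal{F}_T)$, this gives $g = 0$ in $L^2$.

Now $L^2$ is a Hilbert space (a complete inner product space), and the inner product in $L^2$ is $\int gf\,dP$. We have just shown that a $g$ which is orthogonal to every function $\exp(\int h\,dB - \frac{1}{2}\int h^2\,dt)$, and hence to their linear span, must be $g = 0$. Since the only element orthogonal to the span is 0, the closure of the span is all of $L^2(\mathcal{F}_T)$, i.e. the span is dense.

To sum up, any function $g \in L^2(\mathcal{F}_T)$ can be approximated by linear combinations of $\exp\big(\int_0^T h\,dB_t - \frac{1}{2}\int_0^T h^2\,dt\big)$.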

=============================== 26/02-2010

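Problem 6

For the partition $0 = t_0 \leq t_1 \leq \dots \leq t_N = T$, with $\Delta t_i = t_i - t_{i-1}$ and $\Delta B_i = B_{t_i} - B_{t_{i-1}}$, prove that

$$E\Big[\Big(T - \sum_{i=1}^N (\Delta B_i)^2\Big)^2\Big] = 2\sum_{i=1}^N (\Delta t_i)^2.$$

Multiplying out the parentheses we get

$$E[T^2] - 2T\,E\Big[\sum_{i=1}^N (\Delta B_i)^2\Big] + E\Big[\Big(\sum_{i=1}^N (\Delta B_i)^2\Big)^2\Big]$$

We use the hint $\big(\sum_{i=1}^N a_i\big)^2 = \sum_{i,j=1}^N a_ia_j$:

$$T^2 - 2T\sum_{i=1}^N \Delta t_i + E\Big[\sum_{i,j=1}^N (\Delta B_i)^2(\Delta B_j)^2\Big]$$

We note that $\sum_i \Delta t_i$ is a telescoping series which results in $t_N - t_0 = T - 0 = T$. We get $T^2 - 2T^2 = -T^2$:

$$-T^2 + E\Big[\sum_{i,j=1}^N (\Delta B_i)^2(\Delta B_j)^2\Big]$$

We can rewrite the double sum using a little summation manipulation, splitting it into the diagonal terms and (twice) the terms with $i < j$:

$$-T^2 + \sum_{i=1}^N E\big[(\Delta B_i)^4\big] + 2\sum_{i<j}E\big[(\Delta B_i)^2(\Delta B_j)^2\big]$$

Using the moments of Brownian motion, $E[(\Delta B_i)^4] = 3(\Delta t_i)^2$, and independence of the increments:

$$-T^2 + 3\sum_{i=1}^N (\Delta t_i)^2 + 2\sum_{i<j}E\big[(\Delta B_i)^2\big]E\big[(\Delta B_j)^2\big] = -T^2 + 3\sum_{i=1}^N (\Delta t_i)^2 + 2\sum_{i<j}\Delta t_i\Delta t_j$$
$$= -T^2 + 2\sum_{i=1}^N (\Delta t_i)^2 + \Big(\sum_{i=1}^N (\Delta t_i)^2 + 2\sum_{i<j}\Delta t_i\Delta t_j\Big)$$

Using the same summation manipulation as above, just the other way:

$$-T^2 + 2\sum_{i=1}^N (\Delta t_i)^2 + \sum_{i,j=1}^N \Delta t_i\Delta t_j = -T^2 + 2\sum_{i=1}^N (\Delta t_i)^2 + \Big(\sum_{i=1}^N \Delta t_i\Big)^2$$

The last sum is again a telescoping series, and sums to $T$; then we square it:

$$-T^2 + 2\sum_{i=1}^N (\Delta t_i)^2 + T^2 = 2\sum_{i=1}^N (\Delta t_i)^2 \qquad \text{Q.E.D.}$$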
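A Monte Carlo sketch of Problem 6 (our own; the uneven partition, sample size, seed and helper name `qv_error_second_moment` are arbitrary) compares the sample mean of $(T - \sum_i(\Delta B_i)^2)^2$ with $2\sum_i(\Delta t_i)^2$, which equals $2.36$ for the partition used here.

```python
import math
import random

def qv_error_second_moment(times, n_paths=50_000, seed=11):
    """Monte Carlo estimate of E[(T - Σ(ΔB_i)^2)^2] for the partition `times`
    (a list starting at 0 and ending at T)."""
    rng = random.Random(seed)
    T = times[-1]
    steps = [b - a for a, b in zip(times, times[1:])]
    total = 0.0
    for _ in range(n_paths):
        # ΔB_i ~ N(0, Δt_i), independent across intervals
        qv = sum((math.sqrt(dt) * rng.gauss(0.0, 1.0)) ** 2 for dt in steps)
        total += (T - qv) ** 2
    return total / n_paths

times = [0.0, 0.1, 0.5, 0.6, 1.4, 2.0]  # a deliberately uneven partition
estimate = qv_error_second_moment(times)
exact = 2 * sum((b - a) ** 2 for a, b in zip(times, times[1:]))
print(f"Monte Carlo: {estimate:.4f}, 2 Σ(Δt_i)^2: {exact:.4f}")
```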

Exercise 4.1

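Given $Y_t$, we want to write it in the form $dY_t = u\,dt + v\,dB_t$.

a) For $Y_t = B_t^2 = g(t, B_t)$ we see that $g(t, x) = x^2$. Differentiating:

$$\frac{\partial g}{\partial t} = 0, \qquad \frac{\partial g}{\partial x} = 2x, \qquad \frac{\partial^2 g}{\partial x^2} = 2$$

By Itô's formula:

$$dY_t = d(B_t^2) = 0\,dt + 2B_t\,dB_t + \frac{1}{2}\cdot 2(dB_t)^2 = 1\,dt + 2B_t\,dB_t$$

b) $Y_t = 2 + t + e^{B_t}$, where we see $g(t, x) = 2 + t + e^x$:

$$\frac{\partial g}{\partial t} = 1, \qquad \frac{\partial g}{\partial x} = e^x, \qquad \frac{\partial^2 g}{\partial x^2} = e^x$$

By Itô's formula:

$$dY_t = 1\,dt + e^{B_t}\,dB_t + \frac{1}{2}e^{B_t}(dB_t)^2 = \Big(1 + \frac{1}{2}e^{B_t}\Big)dt + e^{B_t}\,dB_t$$

c) $Y_t = B_1^2(t) + B_2^2(t)$. This time $g(t, x, y) = x^2 + y^2$:

$$\frac{\partial g}{\partial t} = 0, \quad \frac{\partial g}{\partial x} = 2x, \quad \frac{\partial g}{\partial y} = 2y, \quad \frac{\partial^2 g}{\partial x^2} = 2, \quad \frac{\partial^2 g}{\partial y^2} = 2, \quad \frac{\partial^2 g}{\partial y\partial x} = 0$$

By the multi-dimensional Itô formula:

$$dY_t = 2\,dt + 2B_1(t)\,dB_1(t) + 2B_2(t)\,dB_2(t)$$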

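d) $Y_t = (t_0 + t, B_t)$:

$$dY_t = \begin{pmatrix} dt \\ dB_t \end{pmatrix} = \begin{pmatrix} 1 \\ 0 \end{pmatrix}dt + \begin{pmatrix} 0 \\ 1 \end{pmatrix}dB_t$$

e) $Y_t = \big(B_1(t) + B_2(t) + B_3(t),\; B_2^2(t) - B_1(t)B_3(t)\big)$. Using $d(B_1B_3) = B_3\,dB_1 + B_1\,dB_3 + dB_1\,dB_3$:

$$dY_1(t) = dB_1(t) + dB_2(t) + dB_3(t)$$
$$dY_2(t) = 2B_2(t)\,dB_2(t) + dt - B_3(t)\,dB_1(t) - B_1(t)\,dB_3(t) - dB_1(t)\,dB_3(t)$$

and since $dB_1(t)\,dB_3(t) = 0$:

$$dY_t = \begin{pmatrix} dY_1 \\ dY_2 \end{pmatrix} = \begin{pmatrix} 0 \\ 1 \end{pmatrix}dt + \begin{pmatrix} 1 & 1 & 1 \\ -B_3 & 2B_2 & -B_1 \end{pmatrix}\begin{pmatrix} dB_1 \\ dB_2 \\ dB_3 \end{pmatrix}$$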

Exercise 4.2

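We set $Y_t = \frac{1}{3}B_t^3$, which implies $g(t, x) = \frac{1}{3}x^3$:

$$\frac{\partial g}{\partial t} = 0, \qquad \frac{\partial g}{\partial x} = x^2, \qquad \frac{\partial^2 g}{\partial x^2} = 2x$$

By Itô's formula:

$$d\Big(\frac{1}{3}B_t^3\Big) = B_t^2\,dB_t + \frac{1}{2}\cdot 2B_t\,dt = B_t^2\,dB_t + B_t\,dt$$
$$\frac{1}{3}B_t^3 = \frac{1}{3}B_0^3 + \int_0^t B_s^2\,dB_s + \int_0^t B_s\,ds$$
$$\int_0^t B_s^2\,dB_s = \frac{1}{3}B_t^3 - \int_0^t B_s\,ds$$

Exercise 4.3

We want to prove that $d(X_tY_t) = X_t\,dY_t + Y_t\,dX_t + dX_t \cdot dY_t$. In this case $g(t, x, y) = xy$. We calculate the derivatives:

$$\frac{\partial g}{\partial t} = 0, \quad \frac{\partial g}{\partial x} = y, \quad \frac{\partial g}{\partial y} = x, \quad \frac{\partial^2 g}{\partial x^2} = 0, \quad \frac{\partial^2 g}{\partial y^2} = 0, \quad \frac{\partial^2 g}{\partial y\partial x} = 1$$

so that

$$\frac{\partial g}{\partial x}(t, X_t, Y_t) = Y_t, \qquad \frac{\partial g}{\partial y}(t, X_t, Y_t) = X_t, \qquad \frac{\partial^2 g}{\partial y\partial x}(t, X_t, Y_t) = 1.$$

Applying the multi-dimensional Itô formula:

$$d(X_tY_t) = Y_t\,dX_t + X_t\,dY_t + \frac{1}{2}\big(dX_t\,dY_t + dY_t\,dX_t\big) = Y_t\,dX_t + X_t\,dY_t + dX_t\,dY_t$$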

We can deduce the integration by parts formula:

$$X_tY_t = X_0Y_0 + \int_0^t Y_s\,dX_s + \int_0^t X_s\,dY_s + \int_0^t dX_s\,dY_s$$
$$\int_0^t X_s\,dY_s = X_tY_t - X_0Y_0 - \int_0^t Y_s\,dX_s - \int_0^t dX_s\,dY_s$$

Exercise 4.5

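For a Brownian motion $B_t \in \mathbb{R}$ with $B_0 = 0$ we define $\beta_k(t) = E[B_t^k]$ for $k = 0, 1, 2, \dots$ and $t \geq 0$. Using Itô's formula we want to prove that

$$\beta_k(t) = \frac{1}{2}k(k - 1)\int_0^t \beta_{k-2}(s)\,ds; \qquad k \geq 2.$$

$Y_t = B_t^k$ implies $f(t, x) = x^k$:

$$\frac{\partial f}{\partial t} = 0, \qquad \frac{\partial f}{\partial x} = kx^{k-1}, \qquad \frac{\partial^2 f}{\partial x^2} = k(k - 1)x^{k-2}$$

By Itô's formula:

$$dY_t = d(B_t^k) = 0 + kB_t^{k-1}\,dB_t + \frac{1}{2}k(k - 1)B_t^{k-2}(dB_t)^2 = \frac{k(k - 1)}{2}B_t^{k-2}\,dt + kB_t^{k-1}\,dB_t$$

Since $Y_0 = B_0^k = 0$, the integral form is

$$B_t^k = \frac{k(k - 1)}{2}\int_0^t B_s^{k-2}\,ds + k\int_0^t B_s^{k-1}\,dB_s$$

Recalling that the expectation of an Itô integral is 0:

$$\beta_k(t) = E[B_t^k] = E\Big[\frac{k(k - 1)}{2}\int_0^t B_s^{k-2}\,ds + k\int_0^t B_s^{k-1}\,dB_s\Big] = \frac{k(k - 1)}{2}\int_0^t E[B_s^{k-2}]\,ds + 0 = \frac{k(k - 1)}{2}\int_0^t \beta_{k-2}(s)\,ds \qquad \text{Q.E.D.}$$

a) We want to show that $E[B_t^4] = 3t^2$ and compute $E[B_t^6]$.

$E[B_t^4]$: Let $Y_t = B_t^4 \Rightarrow f(t, x) = x^4$:

$$\frac{\partial f}{\partial t} = 0, \qquad \frac{\partial f}{\partial x} = 4x^3, \qquad \frac{\partial^2 f}{\partial x^2} = 12x^2$$
$$dY_t = 4B_t^3\,dB_t + \frac{1}{2}\cdot 12B_t^2\,dt \implies B_t^4 = 4\int_0^t B_s^3\,dB_s + 6\int_0^t B_s^2\,ds$$

Now, we take the expectation:

$$E[B_t^4] = 6\int_0^t E[B_s^2]\,ds = 6\int_0^t s\,ds = \Big[\frac{6}{2}s^2\Big]_0^t = 3t^2$$

For $E[B_t^6]$ we use $Y_t = B_t^6$ and the implied function $f(t, x) = x^6$:

$$\frac{\partial f}{\partial t} = 0, \qquad \frac{\partial f}{\partial x} = 6x^5, \qquad \frac{\partial^2 f}{\partial x^2} = 30x^4$$
$$dY_t = 6B_t^5\,dB_t + \frac{1}{2}\cdot 30B_t^4\,dt \implies B_t^6 = 6\int_0^t B_s^5\,dB_s + 15\int_0^t B_s^4\,ds$$

Since the expectation of a stochastic integral is 0, and using the first part:

$$E[B_t^6] = 15\int_0^t E[B_s^4]\,ds = 45\int_0^t s^2\,ds = \Big[\frac{45}{3}s^3\Big]_0^t = 15t^3$$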
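The recursion can be iterated mechanically. Since $\beta_0 = 1$ and $\beta_1 = 0$, all odd moments vanish and every even moment has the form $\beta_k(t) = c_kt^{k/2}$; integrating $c_{k-2}s^m$ gives $c_{k-2}t^{m+1}/(m+1)$. The sketch below (our own; `brownian_moment_coefficients` is a hypothetical helper) does this with exact fractions and recovers $E[B_t^4] = 3t^2$ and $E[B_t^6] = 15t^3$; the coefficients agree with the known values $(k-1)!! = 1 \cdot 3 \cdots (k-1)$.

```python
from fractions import Fraction

def brownian_moment_coefficients(k_max):
    """Return {k: c_k} with E[B_t^k] = c_k * t^(k/2) for even k (odd moments are 0),
    computed from β_k(t) = k(k-1)/2 ∫_0^t β_{k-2}(s) ds starting from β_0 = 1."""
    coeffs = {0: Fraction(1)}  # β_0(t) = E[1] = 1 = 1 * t^0
    for k in range(2, k_max + 1, 2):
        m = (k - 2) // 2       # β_{k-2}(s) = c_{k-2} * s^m
        # ∫_0^t c * s^m ds = c * t^(m+1) / (m+1)
        coeffs[k] = Fraction(k * (k - 1), 2) * coeffs[k - 2] / (m + 1)
    return coeffs

for k, c in brownian_moment_coefficients(8).items():
    print(f"E[B_t^{k}] = {c} t^{k // 2}")
```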