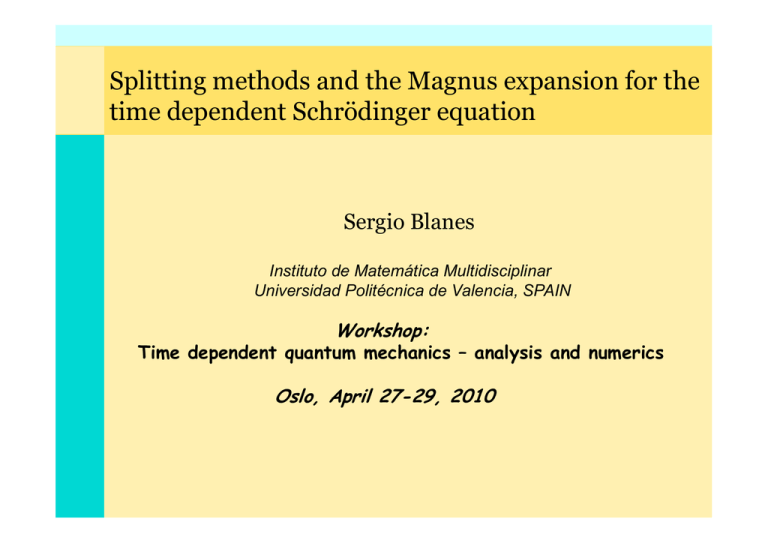


Splitting methods and the Magnus expansion for the time dependent Schrödinger equation

Sergio Blanes
Instituto de Matemática Multidisciplinar, Universidad Politécnica de Valencia, SPAIN

Workshop: Time dependent quantum mechanics – analysis and numerics
Oslo, April 27-29, 2010

The Schrödinger equation

The system can interact with other systems or external fields and can have a more involved structure, but the Hamiltonian has to be a linear Hermitian operator.

After spectral decomposition, spatial discretization, etc., we have in general to solve a linear ODE in complex variables,

$$ i\,\frac{d}{dt}c(t) = H\,c(t), \qquad c(0) = c_0, $$

where $c = (c_1,\ldots,c_N)^T \in \mathbb{C}^N$ and $H \in \mathbb{C}^{N\times N}$ is a Hermitian matrix. If $H$ is constant, the formal solution is given by

$$ c(t) = e^{-itH}\,c(0). $$

The solution corresponds to a unitary transformation. If $H$ is of large dimension, it is important to have an efficient procedure to compute or approximate the exponential multiplying a vector.

If $H$ is time-dependent the problem can be more involved. We may be interested in writing the solution as an exponential,

$$ c(t) = e^{\Omega(t)}\,c(0), $$

with $\Omega(t)$ a skew-Hermitian operator. There is the problem, however, of the existence and convergence of such an operator.

Depending on the problem and the techniques used, the structure of the matrix $H(t)$ can differ considerably, and then different numerical techniques have to be used.

We know the system has several qualitative properties (preservation of the energy, momentum, norm, etc.), and it is desirable that the approximation methods share these qualitative properties.

In this talk we briefly consider: splitting methods and Magnus integrators.

Splitting Methods

Consider the autonomous time dependent SE. It is separable into its kinetic and potential parts. The solution of the discretised equation is given by

$$ c(t) = e^{-it(T+V)}\,c(0), $$

where $c = (c_1,\ldots,c_N)^T \in \mathbb{C}^N$ and $H = T + V \in \mathbb{C}^{N\times N}$ is a Hermitian matrix.
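As a small numerical illustration of the formal solution for constant $H$, the following sketch evaluates $e^{-itH}c_0$ through an eigendecomposition and checks that the norm is preserved. The function name `propagate_constant_h` and the random test matrix are assumptions made here for illustration; full diagonalization is only sensible for small $N$, which is precisely why the talk turns to splitting and polynomial methods for large problems.

```python
import numpy as np

def propagate_constant_h(H, c0, t):
    """Return exp(-i t H) c0 for a constant Hermitian matrix H."""
    # Hermitian H = U diag(E) U^*, hence exp(-i t H) = U diag(exp(-i t E)) U^*.
    E, U = np.linalg.eigh(H)
    return U @ (np.exp(-1j * t * E) * (U.conj().T @ c0))

# Hypothetical test problem: a random Hermitian matrix and a normalised vector.
rng = np.random.default_rng(0)
N = 6
B = rng.standard_normal((N, N)) + 1j * rng.standard_normal((N, N))
H = (B + B.conj().T) / 2
c0 = rng.standard_normal(N) + 1j * rng.standard_normal(N)
c0 /= np.linalg.norm(c0)

c1 = propagate_constant_h(H, c0, 0.7)
norm_after = np.linalg.norm(c1)  # unitarity: stays equal to 1 up to round-off
```

The same routine also satisfies the group property: propagating for 0.3 and then for 0.4 gives the same vector as a single step of 0.7.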
Fourier methods are frequently used: with $\mathcal{F}$ the fast Fourier transform (FFT), the kinetic part is diagonal in the transformed variables, $T = \mathcal{F}^{-1} D_T\, \mathcal{F}$.

Consider the Strang splitting (or leap-frog) second order method, which approximates $e^{-ih(T+V)}$ by a symmetric product of exponentials of $T$ and $V$. Since $V$ is diagonal, its exponential acts componentwise. Notice that the exponentials are computed only once and are stored at the beginning. Similarly, for the kinetic part we have

$$ e^{-ihT} = \mathcal{F}^{-1}\, e^{-ihD_T}\, \mathcal{F}. $$

This splitting was proposed in: Feit, Fleck, and Steiger, J. Comput. Phys., 47 (1982), 412.

Higher Orders

The well known fourth-order composition scheme

$$ S^{[4]}_h = S^{[2]}_{\alpha h}\, S^{[2]}_{\beta h}\, S^{[2]}_{\alpha h}, \qquad \alpha = \frac{1}{2 - 2^{1/3}}, \quad \beta = 1 - 2\alpha, $$

or in general

$$ S^{[2n+2]}_h = S^{[2n]}_{\alpha h}\, S^{[2n]}_{\beta h}\, S^{[2n]}_{\alpha h}, \qquad \alpha = \frac{1}{2 - 2^{1/(2n+1)}}, \quad \beta = 1 - 2\alpha. $$

- Creutz-Gocksch (89)
- Suzuki (90)
- Yoshida (90)

Excellent trick!! In practice, they show low performance!!

Basic References

E. Hairer, C. Lubich, and G. Wanner, Geometric Numerical Integration. Structure-Preserving Algorithms for Ordinary Differential Equations, Springer-Verlag, (2006).

R.I. McLachlan and R. Quispel, Splitting methods, Acta Numerica, 11 (2002), 341-434.

S. Blanes, F. Casas, and A. Murua, Splitting and composition methods in the numerical integration of differential equations, Boletín SEMA, 45 (2008), 89-145. (arXiv:0812.0377v1).

Polynomial Approximations: Taylor, Chebyshev and Splitting

Let us consider again the linear time dependent SE

$$ i\,\frac{d}{dt}c(t) = H\,c(t), $$

with $H$ real and symmetric. This problem can be reformulated using real variables. Consider $c = q + ip$; then we obtain the Hamiltonian system

$$ q' = H p, \qquad p' = -H q, $$

or, in short, $z' = M z$ with $z = (q,p)^T$ and

$$ M = \begin{pmatrix} 0 & H \\ -H & 0 \end{pmatrix}. $$

Formal solution:

$$ z(t) = e^{tM} z(0) = \begin{pmatrix} \cos(tH) & \sin(tH) \\ -\sin(tH) & \cos(tH) \end{pmatrix} z(0). $$

The Taylor Method

An m-stage Taylor method for solving the linear equation can be written as

$$ c_{n+1} = P_m(-ihH)\, c_n, \qquad P_m(y) = \sum_{k=0}^{m} \frac{y^k}{k!}, $$

where $P_m$ is the truncated Taylor expansion of the exponential. It is a polynomial function of degree $m$ which approximates the exact solution up to order $m$, and we can advance each step by using Horner's algorithm. This algorithm can be trivially rewritten in terms of the real vectors $q$, $p$, and it only requires storing two extra vectors.
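The Horner evaluation in real variables can be sketched as follows. This is a minimal illustration, assuming the real formulation $q' = Hp$, $p' = -Hq$; the function name `taylor_step` and the small tridiagonal test matrix are hypothetical choices made here, not taken from the talk.

```python
import numpy as np

def taylor_step(H, q, p, h, m):
    """One step of the degree-m Taylor method for q' = H p, p' = -H q.

    Horner form of P_m(y) c with y = -i h H and c = q + i p:
        w <- c + (y / k) w   for k = m, m-1, ..., 1, starting from w = c.
    In real variables, multiplying by y maps (wq, wp) to (h H wp, -h H wq).
    Only the two extra vectors wq, wp are kept besides q and p.
    """
    wq, wp = q.copy(), p.copy()
    for k in range(m, 0, -1):
        # Both right-hand sides use the old wq, wp (simultaneous update).
        wq, wp = q + (h / k) * (H @ wp), p - (h / k) * (H @ wq)
    return wq, wp

# Hypothetical test problem: a small real symmetric tridiagonal matrix.
N = 8
H = 2 * np.eye(N) - np.eye(N, k=1) - np.eye(N, k=-1)
q0 = np.ones(N) / np.sqrt(N)
p0 = np.zeros(N)
q1, p1 = taylor_step(H, q0, p0, h=0.1, m=10)
```

Each stage costs two products with $H$ on real vectors, which matches one product with $H$ on a complex vector.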
The Taylor Method

The matrix that propagates the numerical solution can be written as

$$ \begin{pmatrix} T_1(hH) & T_2(hH) \\ -T_2(hH) & T_1(hH) \end{pmatrix}, $$

where the entries $T_1(y)$ and $T_2(y)$ are the Taylor series expansions of $\cos(y)$ and $\sin(y)$ up to order $m$, i.e.

$$ T_1(y) = \sum_{k=0}^{\lfloor m/2 \rfloor} \frac{(-1)^k y^{2k}}{(2k)!}, \qquad T_2(y) = \sum_{k=0}^{\lfloor (m-1)/2 \rfloor} \frac{(-1)^k y^{2k+1}}{(2k+1)!}. $$

Notice that it is not a symplectic transformation! The eigenvalues are given by $T_1(y) \pm i\,T_2(y)$. The scheme is stable if

$$ T_1(y)^2 + T_2(y)^2 \le 1. $$

For practical purposes, however, we require […].

The Chebyshev Method

The Chebyshev method approximates the action of the exponential on the initial conditions by a near-optimal polynomial: a truncated series in the Chebyshev polynomials of the scaled and shifted Hamiltonian, with coefficients given by Bessel functions. Here, $T_k(x)$ is the $k$th Chebyshev polynomial generated from the recursion

$$ T_{k+1}(x) = 2x\,T_k(x) - T_{k-1}(x), $$

with $T_0(x) = 1$, $T_1(x) = x$. $J_k(w)$ are the Bessel functions of the first kind, which provide a superlinear convergence for $m > w$ or, in other words, when […].

The Clenshaw algorithm allows one to compute the action of the polynomial by storing only two vectors, and it can also be easily rewritten in terms of the real vectors $q$, $p$. As in the Taylor case, it is not a symplectic transformation.

The Symplectic Splitting Method

We have built splitting methods for the harmonic oscillator!!!

Exact solution (orthogonal and symplectic):

$$ O(y) = \begin{pmatrix} \cos y & \sin y \\ -\sin y & \cos y \end{pmatrix}. $$

We consider the composition $K(y)$ of the exactly computable flows of the two parts of $M$. Its entries are polynomials of degree twice as high as in the previous cases for the same number of stages.

The algorithm is a generalisation of Horner's algorithm or the Clenshaw algorithm. It only requires storing one additional real vector of dimension $N$.

The methods preserve symplecticity by construction: $\det K(y) = 1$.

Stability: the method is stable if $|\mathrm{Tr}\, K| < 2$.

Theorem: Any composition method is conjugate to an orthogonal method, and unitarity is preserved up to conjugacy.

There is a recursive procedure to get the coefficients of the splitting methods from the coefficients of the matrix $K$.
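The role of the matrix $K(y)$ can be illustrated on the scalar harmonic oscillator, where $y$ stands for the step size times an eigenvalue of $H$. The sketch below assumes the splitting of $M$ into its upper and lower triangular parts and uses the leap-frog coefficients purely for illustration; they are not the optimized coefficients discussed in the talk, and the name `stability_matrix` is hypothetical.

```python
import numpy as np

def stability_matrix(a, b, y):
    """2x2 matrix K(y) of a splitting method applied to q' = y p, p' = -y q.

    Each stage applies q <- q + a_i y p (upper triangular factor), then
    p <- p - b_i y q (lower triangular factor). Both factors have unit
    determinant, so det K = 1 by construction.
    """
    K = np.eye(2)
    for ai, bi in zip(a, b):
        upper = np.array([[1.0, ai * y], [0.0, 1.0]])
        lower = np.array([[1.0, 0.0], [-bi * y, 1.0]])
        K = lower @ upper @ K
    return K

# Leap-frog coefficients (illustrative assumption): half, full, half.
a = (0.5, 0.5)
b = (1.0, 0.0)

K = stability_matrix(a, b, 1.0)
det_K = np.linalg.det(K)                                    # symplectic in 2D
stable_at_1 = abs(np.trace(K)) < 2.0                        # inside the stability interval
stable_at_2p5 = abs(np.trace(stability_matrix(a, b, 2.5))) < 2.0  # outside it
```

For these coefficients the trace works out to $2 - y^2$, so the stability interval is $0 < y < 2$; longer compositions enlarge it at the cost of more stages.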
We can build different matrices with:

- Large stability domain
- Accurate approximation to the solution in the whole interval (like Chebyshev)
- Methods with different orders of accuracy and a very large number of stages.

| Taylor order | tol = inf | tol = 10^-8 | tol = 10^-4 |
|---|---|---|---|
| 10 | 0 | 0 | 0 |
| 15 | 0.111249 | 0.111249 | 0.111249 |
| 20 | 0.164515 | 0.164515 | 0.164515 |
| 25 | 0 | 0.065246 | 0.065246 |
| 30 | 0 | 0.108088 | 0.108088 |
| 35 | 0.0461259 | 0.138361 | 0.322661 |
| 40 | 0.0804521 | 0.160884 | 0.321618 |
| 45 | 0 | 0.178294 | 0.320804 |
| 50 | 0 | 0.192154 | 0.32015 |

| Chebyshev order | tol = inf | tol = 10^-8 | tol = 10^-4 |
|---|---|---|---|
| 20 | 0.00362818 | 0.321723 | 0.643217 |
| 25 | 0.00233368 | 0.384289 | 0.64029 |
| 30 | 0.00162599 | 0.425648 | 0.638324 |
| 35 | 0.00119743 | 0.45502 | 0.636912 |
| 40 | 0.000918402 | 0.476957 | 0.63585 |
| 45 | 0.000726646 | 0.635021 | 0.635021 |
| 50 | 0.000589228 | 0.634356 | 0.634356 |
| 55 | 0 | 0.633812 | 0.633812 |
| 60 | 0 | 0.633357 | 0.633357 |

Splitting methods

Error bounds

Schrödinger equation with a Pöschl-Teller potential

[Figure: Pöschl-Teller potential, Taylor: 10-40. LOG(ERROR) versus LOG(FFT CALLS).]

[Figure: Pöschl-Teller potential, Split: 4-12. LOG(ERROR) versus LOG(FFT CALLS).]

[Figure: Pöschl-Teller potential, Chebyshev: 20-100. LOG(ERROR) versus LOG(FFT CALLS).]

[Figure: Pöschl-Teller potential, New Split. LOG(ERROR) versus LOG(FFT CALLS).]

References

S. Blanes, F. Casas, and A. Murua, Work in Progress. Group webpage: http://www.gicas.uji.es/

S. Blanes, F. Casas and A. Murua, On the linear stability of splitting methods, Found. Comp. Math., 8 (2008), pp. 357-393.

S. Blanes, F. Casas and A. Murua, Symplectic splitting operator methods for the time-dependent Schrödinger equation, J. Chem. Phys. 124 (2006) 234105.
The Magnus series expansion

Let us now consider the time-dependent problem

$$ i\,\frac{d}{dt}c(t) = H(t)\,c(t) $$

or, equivalently,

$$ Y' = A(t)\,Y, \qquad Y(0) = I, \qquad A(t) = -iH(t), $$

with $A(t) \in \mathbb{C}^{d\times d}$.

We can use time-dependent perturbation theory: the Dyson perturbative series

$$ Y(t) = I + \sum_{n=1}^{\infty} P_n(t), \qquad P_n(t) = \int_0^t dt_1 \int_0^{t_1} dt_2 \cdots \int_0^{t_{n-1}} dt_n\, A(t_1) A(t_2) \cdots A(t_n). $$

When the series is truncated, the unitarity is lost!

Theorem (Magnus 1954). Let $A(t)$ be a known function of $t$ (in general, in an associative ring), and let $Y(t)$ be an unknown function satisfying $Y' = A(t)\,Y$, with $Y(0) = I$. Then, if certain unspecified conditions of convergence are satisfied, $Y(t)$ can be written in the form

$$ Y(t) = \exp \Omega(t), $$

where

$$ \frac{d\Omega}{dt} = \sum_{n=0}^{\infty} \frac{B_n}{n!}\, \mathrm{ad}_{\Omega}^{n} A, \qquad \mathrm{ad}_{\Omega} A = [\Omega, A], $$

and $B_n$ are the Bernoulli numbers. The convergence is assured if […].

The Magnus expansion

$$ \Omega(t) = \sum_{k=1}^{\infty} \Omega_k(t), \qquad \Omega_1(t) = \int_0^t A(t_1)\,dt_1, \qquad \Omega_2(t) = \frac{1}{2} \int_0^t dt_1 \int_0^{t_1} dt_2\, [A(t_1), A(t_2)], $$

can be obtained by Picard iteration or using a recursive formula.

Convergence condition:

$$ \int_0^t \|A(s)\|_2\, ds < \pi. $$

This is a sufficient but NOT necessary condition.

F. Casas, Sufficient conditions for the convergence of the Magnus expansion, J. Phys. A: Math. Theor. 40 (2007), 15001-15017.

This theory allows us to build relatively simple methods at different orders.

A second order method is given by

$$ Y_{n+1} = \exp\big(\Omega^{[2]}\big)\, Y_n, \qquad \Omega^{[2]} = h\,A\big(t_n + \tfrac{h}{2}\big). $$

A fourth order method is given by

$$ Y_{n+1} = \exp\big(\Omega^{[4]}\big)\, Y_n, \qquad \Omega^{[4]} = \frac{h}{2}(A_1 + A_2) - \frac{\sqrt{3}\,h^2}{12}\,[A_1, A_2], $$

with

$$ A_1 = A\Big(t_n + \big(\tfrac{1}{2} - \tfrac{\sqrt{3}}{6}\big)h\Big), \qquad A_2 = A\Big(t_n + \big(\tfrac{1}{2} + \tfrac{\sqrt{3}}{6}\big)h\Big). $$

Methods using different quadratures and of higher order exist. These methods have been used for the SE beyond the convergence domain:

M. Hochbruck, C. Lubich, On Magnus integrators for time-dependent Schrödinger equations, SIAM J. Numer. Anal. 41 (2003) 945-963.
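A minimal sketch of second and fourth order Magnus integrators for $Y' = A(t)Y$ with skew-Hermitian $A(t)$ follows. It assumes the midpoint rule for order two and the two-point Gauss-Legendre form with one commutator for order four. The helper names (`expm_skew`, `magnus2_step`, `magnus4_step`, `integrate`) and the two-level test Hamiltonian are hypothetical, chosen here only for illustration.

```python
import numpy as np

def expm_skew(Omega):
    """exp(Omega) for skew-Hermitian Omega, via the Hermitian matrix i*Omega."""
    E, U = np.linalg.eigh(1j * Omega)            # i*Omega = U diag(E) U^*
    return (U * np.exp(-1j * E)) @ U.conj().T    # exp(Omega) = U exp(-iE) U^*

def magnus2_step(A, t, h, Y):
    """Second order: exponential of h times A at the midpoint."""
    return expm_skew(h * A(t + h / 2)) @ Y

def magnus4_step(A, t, h, Y):
    """Fourth order: two Gauss-Legendre nodes and one commutator."""
    c = np.sqrt(3) / 6
    A1, A2 = A(t + (0.5 - c) * h), A(t + (0.5 + c) * h)
    Omega = 0.5 * h * (A1 + A2) - (np.sqrt(3) / 12) * h**2 * (A1 @ A2 - A2 @ A1)
    return expm_skew(Omega) @ Y

def integrate(step, A, t_end, n):
    """Apply n equal steps of the given one-step map, starting from Y(0) = I."""
    h = t_end / n
    Y = np.eye(A(0.0).shape[0], dtype=complex)
    for k in range(n):
        Y = step(A, k * h, h, Y)
    return Y

def A(t):
    """Hypothetical two-level example: A(t) = -i H(t) with H real symmetric."""
    H = np.array([[1.0, np.cos(t)], [np.cos(t), -1.0]])
    return -1j * H

Y2 = integrate(magnus2_step, A, 1.0, 20)
Y4 = integrate(magnus4_step, A, 1.0, 20)
```

Because each step is the exponential of a skew-Hermitian matrix, both schemes are unitary for any step size, in contrast with a truncated Dyson series.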
A two level quantum system

The Rosen-Zener model. In the interaction picture, the problem depends on two parameters, ξ and γ, and the transition probability between the two levels is known in closed form.

[Figure: transition probability versus γ for ξ = 0.3 and ξ = 1. Numerical integration using 50 evaluations; methods: RK4, E1, M2, M4.]

[Figure: Log(Error) versus Log(Evaluations) for (ξ = 0.3, γ = 10) and (ξ = 0.3, γ = 100); methods: Euler, RK4, RK6, Magnus-2, Magnus-4, Magnus-6.]
Splitting-Magnus methods

For a non-autonomous separable linear system, we can approximate the solution by a composition of exponentials in which the time-dependent parts are replaced by averages over each step.

S. Blanes, F. Casas and A. Murua, Splitting methods for non-autonomous linear systems, Int. Jour. Comp. Math., 6 (2007), 713-727.

Conclusions

- Magnus and splitting methods are powerful tools for numerically solving the Schrödinger equation.
- But the performance strongly depends on the choice of the appropriate methods for each problem.
- Alternatively, one can build methods tailored for particular problems.

Basic References

S. Blanes, F. Casas, J.A. Oteo, and J. Ros, The Magnus expansion and some of its applications, Phys. Rep. 470 (2009), 151-238.

S. Blanes, F. Casas, and A. Murua, Splitting and composition methods in the numerical integration of differential equations, Boletín SEMA, 45 (2008), 89-145. (arXiv:0812.0377v1).

C. Lubich, From Quantum to Classical Molecular Dynamics: Reduced Models and Numerical Analysis, Zurich Lectures in Adv. Math., (2008).

E. Hairer, C. Lubich, and G. Wanner, Geometric Numerical Integration. Structure-Preserving Algorithms for Ordinary Differential Equations, Springer-Verlag, (2006).

A. Iserles, H.Z. Munthe-Kaas, S.P. Nørsett and A. Zanna, Lie group methods, Acta Numerica, 9 (2000), 215-365.

R.I. McLachlan and R. Quispel, Splitting methods, Acta Numerica, 11 (2002), 341-434.