Understanding Implicit Social Context in Electronic Communication

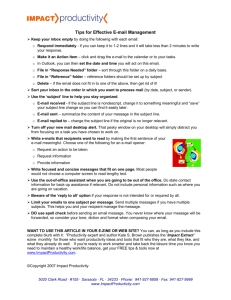
