Building Condition Monitoring SEP 01 2010

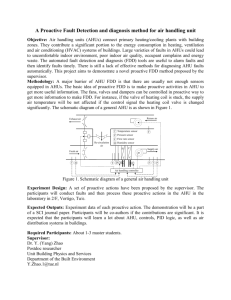
