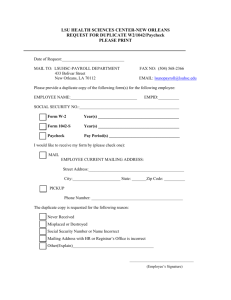

DUPLICATE ENTRY DETECTION IN AND NORCKAUER,
