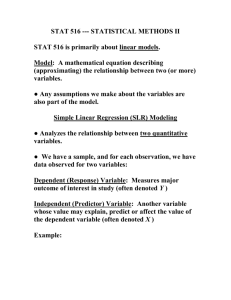

Chapter 8: A Closer Look at Assumptions for SLR


The simple linear regression (SLR) model is based on four assumptions: independence, normality, constant variance, and a linear relationship. We need practice in judging when these assumptions are violated, and we need to know how to handle data that do not fit them.

8.2 Robustness of Least Squares Inferences:

SLR Assumptions

1. Linearity:

• Violations:

(a) A straight line is not adequate (e.g., curvature)

(b) A straight line is appropriate for most of the data, but there are several outliers

• Implications:

– Estimated means and predictions can be biased

– Tests and CIs may be based on the wrong SE

– The severity of the consequences depends on the severity of the violation.

• Remedies:

– Transformations

– Including other terms in the model, for example a quadratic term (we will see this in Multiple Linear Regression).

2. Constant Variance:

• Violation:

– Spread around the regression line changes for different values of X

• Implications:

– Estimates are unbiased, but SEs are inaccurate (same as for one-way ANOVA)

• Remedies:

– Transformations, Weighted Regression

3. Normality:

• Violation:

– The distribution of Y at each value of X is not normal

• Implications:

– Estimates and SEs of coefficients are robust to nonnormality. Long-tailed distributions with small sample sizes present the only serious situation.

– Prediction intervals are not robust to nonnormality. Why?

• Remedies:

– Transformations


4. Independence:

• Violations:

– Cluster effects

– Serial effects

• Implications:

– Standard errors are seriously affected!

• Remedies:

– Incorporate dependence into analysis using more sophisticated models
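The constant-variance implication (estimates unbiased, SEs inaccurate) can be checked with a small simulation. The sketch below is illustrative only: the true line 1 + 2X, the error SD of 0.5X, the 50 X values, and the 1000 replications are assumed settings, not values from these notes. It compares the average slope estimate with the true slope, and the actual spread of the slope estimates with the average SE that `lm()` reports. The last lines sketch the weighted-regression remedy with weights 1/X², which is appropriate only under the assumed SD proportional to X.

```r
# Simulation sketch: least squares when Var(Y|X) is not constant.
# Assumed (illustrative) model: mu{Y|X} = 1 + 2X, SD(Y|X) = 0.5 * X.
set.seed(1)
n_sims <- 1000
x <- seq(1, 10, length.out = 50)
true_slope <- 2

slopes <- numeric(n_sims)
reported_se <- numeric(n_sims)
for (i in seq_len(n_sims)) {
  y <- 1 + true_slope * x + rnorm(length(x), sd = 0.5 * x)
  fit <- lm(y ~ x)
  slopes[i] <- coef(fit)[["x"]]
  reported_se[i] <- summary(fit)$coefficients["x", "Std. Error"]
}

mean_slope <- mean(slopes)         # close to true_slope: the estimate is unbiased
empirical_sd <- sd(slopes)         # actual sampling SD of the slope estimate
average_se <- mean(reported_se)    # what the constant-variance SE formula reports
print(c(mean_slope = mean_slope, empirical_sd = empirical_sd,
        average_se = average_se))

# Remedy sketch: weighted regression with weights proportional to 1 / Var(Y|X).
# Under the assumed SD of 0.5 * X, that means weights = 1 / x^2.
y <- 1 + true_slope * x + rnorm(length(x), sd = 0.5 * x)
wls_fit <- lm(y ~ x, weights = 1 / x^2)
print(summary(wls_fit)$coefficients)
```

How far `average_se` falls from `empirical_sd` depends on how the variance changes with X, so no particular size of discrepancy is implied by this sketch.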

8.3 Graphical Tools for Model Assessment

Scatterplot of Response (Y) vs. Explanatory variable (X)

• Study Display 8.6

Scatterplots of Residuals vs. Fitted Values

• Better for finding patterns because the linear component of variation in the response has been removed (see Display 8.7).

• Use to detect

– nonlinearity (look for curvature),

– nonconstant variance (look for a fan shape), and

– outliers (residuals far from 0).

• The horn-shaped pattern:

– Poor fit and increasing variability

– Transformations: logarithm, square root, reciprocal

– How to choose?

∗ Try one, re-fit the regression model, look at the residual plot

∗ Think about interpretation
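The "try one, re-fit, look at the residual plot" loop can be sketched in R as follows. The data are simulated under an assumed model with multiplicative errors (so log(Y) is linear in X with constant spread); the settings and the helper `spread_ratio()` are hypothetical, chosen only for illustration. With these settings one would expect the left panel to show increasing spread (plus some curvature) and the right panel to look like a patternless band.

```r
# Sketch: residual plots before and after a log transformation.
# Assumed (illustrative) model: log(Y) = 1 + 0.3 X + N(0, 0.3^2) error.
set.seed(2)
n <- 150
x <- runif(n, min = 1, max = 10)
y <- exp(1 + 0.3 * x + rnorm(n, sd = 0.3))

raw_fit <- lm(y ~ x)        # untransformed response
log_fit <- lm(log(y) ~ x)   # logged response

par(mfrow = c(1, 2))
plot(fitted(raw_fit), resid(raw_fit), main = "Y ~ X",
     xlab = "Fitted values", ylab = "Residuals")
abline(h = 0, lty = 2)
plot(fitted(log_fit), resid(log_fit), main = "log(Y) ~ X",
     xlab = "Fitted values", ylab = "Residuals")
abline(h = 0, lty = 2)
par(mfrow = c(1, 1))

# Hypothetical helper, a rough numerical check for a fan shape: ratio of
# residual SDs in the top and bottom thirds of the fitted values
# (near 1 when the spread is constant).
spread_ratio <- function(fit) {
  f <- fitted(fit)
  r <- resid(fit)
  cuts <- quantile(f, c(1/3, 2/3))
  sd(r[f >= cuts[2]]) / sd(r[f <= cuts[1]])
}
ratio_raw <- spread_ratio(raw_fit)
ratio_log <- spread_ratio(log_fit)
print(c(raw = ratio_raw, logged = ratio_log))
```

The numerical ratio is only a supplement; the plots, together with thought about interpretation, remain the main guide when choosing among the logarithm, square root, and reciprocal.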

8.4 Interpretation After Log Transformation

It depends on whether the transformation was applied to the response, the explanatory variable, or both.

Logged RESPONSE variable:

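If $\mu\{\log(Y)\mid X\} = \beta_0 + \beta_1 X$ and the distribution of log(Y) at each X is symmetric, then the mean of log(Y) is also its median, and because exp() preserves medians,

$$\text{Median}\{Y\mid X\} = \exp(\beta_0)\exp(\beta_1 X) \quad\Longrightarrow\quad \frac{\text{Median}\{Y\mid X+1\}}{\text{Median}\{Y\mid X\}} = \exp(\beta_1)$$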

• Wording: A one-unit increase in X is associated with a multiplicative change of exp(β1) in the median of Y, with an associated 95% confidence interval from exp(lower limit of CI for β1) to exp(upper limit of CI for β1).
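In R, the back-transformation is `exp()` applied to the slope and to both confidence limits. The sketch below uses simulated data (the model and settings are assumptions for illustration) and also checks the ratio-of-medians identity stated above: back-transformed predictions at X + 1 and X differ by the factor exp(β̂1). The helper `med_at()` is hypothetical.

```r
# Sketch: interpreting the slope when the response is logged.
# Simulated (illustrative) data: log(Y) = 0.5 + 0.2 X + error.
set.seed(3)
x <- runif(60, min = 0, max = 10)
y <- exp(0.5 + 0.2 * x + rnorm(60, sd = 0.4))
fit <- lm(log(y) ~ x)

b1 <- coef(fit)[["x"]]
ci <- confint(fit, "x", level = 0.95)   # 1 x 2 matrix: lower and upper limits

multiplier <- exp(b1)       # estimated multiplicative change in median Y per unit X
multiplier_ci <- exp(ci)    # back-transform both confidence limits
print(multiplier)
print(multiplier_ci)

# Hypothetical helper: exp(estimated mean of log(Y)) estimates the median of Y.
med_at <- function(x0) exp(predict(fit, newdata = data.frame(x = x0)))
ratio <- med_at(4) / med_at(3)   # equals exp(b1) for any one-unit step
```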

Logged EXPLANATORY variable:

If µ{Y | log(X)} = β0 + β1 log(X) then

µ{Y | log(2X)} − µ{Y | log(X)} = β0 + β1 log(2) + β1 log(X) − [β0 + β1 log(X)] = β1 log(2)

• Describes change in the mean of Y for a doubling (or another multiplicative change) of X

• Wording: A doubling of X is associated with a β1 log(2) unit change in the mean of Y, with an associated 95% confidence interval from log(2) × (lower limit of CI for β1) to log(2) × (upper limit of CI for β1).
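The same calculation in R, as a sketch on simulated data (the model and settings are assumptions for illustration). R's `log()` is the natural logarithm, matching the notes. The check at the end confirms that the estimated means at 2X and X differ by log(2)·β̂1 regardless of X; the helper `mean_at()` is hypothetical.

```r
# Sketch: interpreting the slope when the explanatory variable is logged.
# Simulated (illustrative) data: mu{Y | log(X)} = 3 + 1.5 log(X).
set.seed(4)
x <- runif(60, min = 1, max = 50)
y <- 3 + 1.5 * log(x) + rnorm(60, sd = 0.5)
fit <- lm(y ~ log(x))

b1 <- coef(fit)[["log(x)"]]
ci <- confint(fit, "log(x)", level = 0.95)

doubling_effect <- log(2) * b1    # change in mean Y for a doubling of X
doubling_ci <- log(2) * ci        # scale both confidence limits by log(2)
print(doubling_effect)
print(doubling_ci)

# Hypothetical helper: estimated mean of Y at a given X.
mean_at <- function(x0) predict(fit, newdata = data.frame(x = x0))
diff_10 <- mean_at(20) - mean_at(10)   # equals log(2) * b1
```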

Logged RESPONSE and EXPLANATORY variables:

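If $\mu\{\log(Y)\mid\log(X)\} = \beta_0 + \beta_1\log(X)$ (and the distribution of log(Y) is again symmetric), then

$$\text{Median}\{Y\mid X\} = e^{\beta_0}e^{\beta_1\log(X)} = e^{\beta_0}X^{\beta_1} \quad\Longrightarrow\quad \frac{\text{Median}\{Y\mid 2X\}}{\text{Median}\{Y\mid X\}} = 2^{\beta_1}$$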

• Wording: A doubling of X is associated with a multiplicative change of $2^{\beta_1}$ in the median of Y, with an associated 95% confidence interval from $2^{(\text{lower limit of CI for } \beta_1)}$ to $2^{(\text{upper limit of CI for } \beta_1)}$.
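When both variables are logged, the doubling multiplier is 2 raised to the slope and to each confidence limit. A sketch on simulated data (the model and settings are illustrative assumptions; `med_at()` is a hypothetical helper):

```r
# Sketch: both variables logged, mu{log(Y) | log(X)} = b0 + b1 log(X).
# Simulated (illustrative) data: Y = exp(0.2) * X^0.7 * multiplicative error.
set.seed(5)
x <- runif(60, min = 1, max = 50)
y <- exp(0.2 + 0.7 * log(x) + rnorm(60, sd = 0.3))
fit <- lm(log(y) ~ log(x))

b1 <- coef(fit)[["log(x)"]]
ci <- confint(fit, "log(x)", level = 0.95)

doubling_multiplier <- 2^b1   # multiplicative change in median Y when X doubles
doubling_ci <- 2^ci           # apply 2^(.) to both confidence limits
print(doubling_multiplier)
print(doubling_ci)

# Hypothetical helper: back-transformed prediction estimates median Y at X.
med_at <- function(x0) exp(predict(fit, newdata = data.frame(x = x0)))
ratio <- med_at(20) / med_at(10)   # equals 2^b1 for any X
```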


8.5 Assessment of Fit Using Analysis of Variance

Scenario: Replicate response values at several explanatory variable values.

Three Models for Population Means

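1. Separate-means model: $\mu\{Y\mid X\} = \mu_X$ (a separate mean for each distinct value of X)

2. Simple linear regression model: $\mu\{Y\mid X\} = \beta_0 + \beta_1 X$

3. Equal-means model: $\mu\{Y\mid X\} = \beta_0$ (a single common mean)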
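With replicate responses at each X, all three models can be fit with `lm()`. The sketch below simulates hypothetical data with five replicates at each of six X values (all settings are assumptions). Treating X as a factor gives the separate-means model, X as numeric gives SLR, and an intercept-only formula gives the equal-means model. Passing the nested fits to `anova()` compares them in sequence; the SLR-versus-separate-means line is the lack-of-fit F-test of 8.5.3.

```r
# Sketch: the three models for population means, on simulated replicate data.
set.seed(6)
dat <- data.frame(x = rep(c(10, 20, 30, 40, 50, 60), each = 5))
dat$y <- 2 + 0.1 * dat$x + rnorm(nrow(dat), sd = 1)

equal_means <- lm(y ~ 1, data = dat)           # one common mean
slr         <- lm(y ~ x, data = dat)           # means lie on a straight line
separate    <- lm(y ~ factor(x), data = dat)   # a separate mean for each X

# Number of mean parameters: 1, 2, and the number of distinct X values.
n_params <- sapply(list(equal_means, slr, separate), function(m) length(coef(m)))
print(n_params)

# Sequential comparisons: equal means vs. SLR, then SLR vs. separate means.
anova(equal_means, slr, separate)
```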

8.5.3 The Lack-of-Fit F-Test

• If we want a formal assessment of the goodness of fit of the SLR model, what two models might we compare? (This comparison is called a lack-of-fit F-test.)

• What is necessary in order to even think about comparing the above two models?

• The lack-of-fit F-test is a formal test of the adequacy of the straight-line regression model. In other words, can the variability among group means be adequately explained by the simple linear regression model?

• What numbers do we need to actually do the lack-of-fit F-test?


• Breakdown of insulating fluid

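```r
# Breakdown times of insulating fluid at several voltages
insulators <- read.table("data/insulatorBreakdown.txt", header = TRUE)

# Scatterplot of log(time) vs. voltage with the fitted SLR line (red)
plot(log(time) ~ voltage, data = insulators)
insl.linear.fit <- lm(log(time) ~ voltage, data = insulators)
abline(insl.linear.fit, col = 2)

# Overlay the mean of log(time) at each voltage (blue)
points(tapply(insulators$voltage, insulators$voltage, mean),
       tapply(log(insulators$time), insulators$voltage, mean),
       col = 4, pch = 10, cex = 1.5)
```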

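[Figure: log(time) vs. voltage for the insulating fluid data, with the fitted SLR line and the group means at each voltage]

```r
# Separate-means model: one mean of log(time) per voltage level
insl.group.fit <- lm(log(time) ~ factor(voltage), data = insulators)
anova(insl.group.fit)
#Analysis of Variance Table
#
#Response: log(time)
#                Df Sum Sq Mean Sq F value    Pr(>F)
#factor(voltage)  6 196.48  32.746  13.004 8.871e-10
#Residuals       69 173.75   2.518

# Simple linear regression model (fit above)
anova(insl.linear.fit)
#Analysis of Variance Table
#
#Response: log(time)
#          Df Sum Sq Mean Sq F value   Pr(>F)
#voltage    1 190.15 190.151  78.141 3.34e-13
#Residuals 74 180.07   2.433
```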

• Let’s combine the usual ANOVAs and the lack-of-fit test into a single table.

8.5.4 A Composite ANOVA Table:

| Source of Variation | d.f. | Sum of Squares | Mean Square | F-stat | p-value |
|---|---|---|---|---|---|
| Between Groups | 6 | 196.48 | 32.746 | 13.004 | 8.871e-10 |
| Regression | 1 | 190.15 | 190.15 | 75.5 | < 0.0001 |
| Lack-of-fit | 5 | 6.33 | 1.265 | 0.5024 | 0.77 |
| Within Groups | 69 | 173.75 | 2.518 | | |
| Total | 75 | 370.23 | | | |

Each F-statistic uses the within-groups mean square (2.518) as its denominator. That is why the regression F here (190.15/2.518 = 75.5) differs from the 78.141 reported by anova(insl.linear.fit), which divides by the SLR residual mean square (2.433). The lack-of-fit mean square is 6.3259/5 = 1.265.

The lack-of-fit row can be obtained directly in R by comparing the two nested fits:

```r
# Lack-of-fit F-test: SLR (model 1) vs. separate means (model 2)
anova(insl.linear.fit, insl.group.fit)
#Analysis of Variance Table
#
#Model 1: log(time) ~ voltage
#Model 2: log(time) ~ factor(voltage)
#  Res.Df    RSS Df Sum of Sq      F Pr(>F)
#1     74 180.07
#2     69 173.75  5    6.3259 0.5024 0.7734
```
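The lack-of-fit F-statistic needs only four numbers: the residual sum of squares and residual d.f. of the SLR fit (180.07 on 74 d.f.) and of the separate-means fit (173.75 on 69 d.f.), as reported by `anova()` for the insulator data. A minimal by-hand sketch in R using those reported values:

```r
# Lack-of-fit F-test computed by hand from the two residual sums of squares.
rss_slr <- 180.07; df_slr <- 74   # SLR model residuals
rss_sep <- 173.75; df_sep <- 69   # separate-means (within-groups) residuals

lof_ss <- rss_slr - rss_sep       # lack-of-fit sum of squares
lof_df <- df_slr - df_sep         # lack-of-fit degrees of freedom
f_stat <- (lof_ss / lof_df) / (rss_sep / df_sep)
p_value <- pf(f_stat, lof_df, df_sep, lower.tail = FALSE)
print(c(F = f_stat, p = p_value))
```

Because the inputs are rounded to two decimals, the result agrees with R's F = 0.5024 and p = 0.7734 only approximately.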

8.6 Related Issues

8.6.1 R-Squared: The Proportion of Variation Explained

• R-squared statistic (R² or r²) = the percentage of the total variation in the response explained by the explanatory variable:

$$R^2 = 100 \times \frac{\text{Total SS} - \text{Residual SS}}{\text{Total SS}}\,\%$$

• Interpretation: “R² percent of the variation in Y was explained by the linear regression on X.”

• What does an ESS (extra-sum-of-squares) F-test take into account that R² does not?

• What is a good R²?

• For SLR, R² is equal to the square of the sample correlation coefficient (r).

• R² should not be used to assess the adequacy of a straight-line model (R² can be quite large even when the SLR model is not adequate).
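The R² points above can be illustrated in R. Part 1 uses the regression and residual sums of squares reported by `anova(insl.linear.fit)` for the insulator data (their sum, 370.22, differs from the composite table's 370.23 only by rounding). Parts 2 and 3 use simulated or constructed data chosen purely for illustration: part 2 checks that R² equals r² for SLR, and part 3 fits a straight line to an exact quadratic, where R² is about 0.95 even though the straight line is clearly inadequate.

```r
# Part 1: R^2 (in percent) for the insulator SLR fit, from its ANOVA table.
ss_regression <- 190.15
ss_residual   <- 180.07
ss_total      <- ss_regression + ss_residual
r2_insulator  <- 100 * (ss_total - ss_residual) / ss_total

# Part 2: for SLR, R^2 equals the squared sample correlation (simulated data).
set.seed(7)
x <- runif(40)
y <- 1 + 2 * x + rnorm(40)
fit <- lm(y ~ x)
r2_lm  <- summary(fit)$r.squared
r2_cor <- cor(x, y)^2

# Part 3: a large R^2 does not mean a straight line is adequate.
# Y is an exact quadratic in X, so the residuals show pure curvature.
x_curve <- 1:10
y_curve <- x_curve^2
r2_curve <- summary(lm(y_curve ~ x_curve))$r.squared

print(c(insulator_pct = r2_insulator, r2_lm = r2_lm, r2_cor = r2_cor,
        curved = r2_curve))
```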

8.6.2 SLR or One-Way ANOVA?

• If the simple linear regression model fits, then it is preferred. Why?

• Advantages of SLR:

1.

2.

3.

4.


8.6.3 Other Residual Plots

• Residuals vs. time order (or location) (Display 8.12)

• Normal probability plots (Display 8.13)
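Both plots are one-liners in base R. The sketch uses simulated data; `obs_order` is a hypothetical stand-in for the order (or location) in which observations were collected, which would come from the study itself in practice.

```r
# Sketch: residuals vs. time order, and a normal probability plot.
# Simulated (illustrative) data; obs_order is a hypothetical collection order.
set.seed(8)
obs_order <- 1:50
x <- runif(50, 0, 10)
y <- 3 + 0.5 * x + rnorm(50)
fit <- lm(y ~ x)
res <- resid(fit)

par(mfrow = c(1, 2))
# Serial effects would appear as runs or trends in this plot.
plot(obs_order, res, type = "b", xlab = "Time order", ylab = "Residuals")
abline(h = 0, lty = 2)
# Roughly normal residuals should fall near the reference line.
qqnorm(res)
qqline(res)
par(mfrow = c(1, 1))
```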

8.6.4 Planning an Experiment: Balance

• Balance = same number of experimental units in each treatment group

• Balance is desirable but not essential. It plays a more important role when there is more than one factor (more than one grouping variable), because it allows for a straightforward decomposition of the sums of squares (SS).
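Checking balance amounts to counting experimental units per group. A minimal sketch with a hypothetical treatment assignment (not data from these notes):

```r
# Sketch: is a design balanced? Count units in each treatment group.
# 'groups' is a hypothetical assignment used only for illustration.
groups <- factor(c("A", "A", "A", "B", "B", "B", "C", "C"))
group_sizes <- table(groups)
print(group_sizes)
is_balanced <- length(unique(as.vector(group_sizes))) == 1
print(is_balanced)
```

For the insulator data, `table(insulators$voltage)` would give the group sizes in the same way.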
