An Operational Semantics of Cache Coherent Multicore Architectures

advertisement

UNIVERSITY OF OSLO

Department of Informatics

An Operational

Semantics of Cache

Coherent Multicore

Architectures

Research Report 449

Shiji Bijo,

Einar Broch Johnsen,

Ka I Pun, and

S. Lizeth Tapia Tarifa

ISBN 978-82-7368-414-1

ISSN 0806-3036

December 2015

An Operational Semantics of

Cache Coherent Multicore Architectures∗

Shiji Bijo, Einar Broch Johnsen, Ka I Pun, and S. Lizeth Tapia Tarifa

University of Oslo, Norway

{shijib, einarj, violet, sltarifa}@ifi.uio.no

ABSTRACT

This paper presents a formal semantics of multicore architectures

with private cache, shared memory, and instantaneous inter-core

communications. The purpose of the semantics is to provide an

operational understanding of how low-level read and write operations interact with caches and main memory. The semantics is

based on an abstract model of cache coherence and allows formal

reasoning over parallel programs that execute on any given number

of cores. We prove correctness properties expressed as invariants

for the preservation of program order, data-race free execution of

low-level operations, and no access to stale data. A prototype implementation is provided as a proof of concept interpreter for the

formal model.

Keywords

Formal semantics, multicore architectures, memory consistency,

cache coherence, correctness properties, observable behaviour.

1.

INTRODUCTION

Multicore architectures dominate today’s hardware design. In

these architectures, cache memory is used to accelerate program

execution by providing quick access to recently used data, but allowing multiple copies of data to co-exist during execution. Cache

coherence protocols ensure that cores do not access stale data. With

the dominating position of multicore architectures, system developers can benefit from a better understanding and ability to reason about interactions between programs, caches and main memory. For this purpose we need clear and precise operational models

which allow us to reason about such interactions.

In this paper, we propose a formalization of an abstract model of

cache coherent multicore architectures, directly connecting the parallel execution of programs on different cores to the movement of

data between caches and main memory. Similar to formal semantics for programming languages, we develop an operational semantics of parallel computations on cache coherent multicore architectures. Our purpose is not to evaluate the specifics of a concrete

cache coherence protocol, but rather to capture program execution

on shared data at locations with coherent caches in a formal way.

Consequently, we integrate the basic MSI protocol directly into the

operational semantics of our formal model, while abstracting from

the concrete communication medium (which could be, e.g., a bus

or a ring), and from the specifics of cache associativity and replacement policies. We show that this abstract model of cache coherent

∗Supported by the EU project FP7-612985 UpScale: From Inherent Concurrency to Massive Parallelism through Type-based Optimisations (http:// www.upscale-project.eu).

multicore architectures guarantees desirable properties for the programmer such as program order, absence of data races, and that

cores always access the most recent value of data. The technical

contributions of this paper are (1) a formal, operational model of

executions on cache coherent multicore architectures and (2) correctness properties for the formal model expressed as invariants

over any given number of cores.

Related work. Approaches to the analysis of multicore architectures include on the one hand simulators for efficiency and on the

other hand formal techniques for proving the correctness of specific

cache coherence protocols. We are not aware of work on abstract

models of execution on cache coherent multicore architectures and

their formalization, as presented in this paper.

Simulation tools allow cache coherence protocols to be specified to evaluate their performance on different architectures (e.g.,

gems [17] and gem5 [1]). These tools run benchmark programs

written as low-level read and write instructions to memory and perform measurements, e.g., the cache hit/miss ratio. Advanced simulators such as Graphite [18] and Sniper [3] can handle multicore

architectures with thousands of cores by running on distributed

clusters. A framework, proposed in [15], statically estimates the

worst-case response times for concurrent applications running on

multiple cores with shared cache.

Both operational and axiomatic formal models have been used

to describe the effect of parallel executions on shared memory under relaxed memory models, including abstract calculi [5], memory

models for programming languages such as Java [13], and machinelevel instruction sets for concrete processors such as POWER [16,

22] and x86 [23]. The behavior of programs executing under total store order (TSO) architectures is studied in [10, 24]. However,

work on weak memory models abstracts from caches, and is as such

largely orthogonal to our work that does not consider reordering of

source-level syntax. Cache coherence protocols can be formally

specified as automata and verified by (parametrized) model checking (e.g., [7, 11, 19, 21]), or in terms of operational formalizations

which abstract from the specific number of cores to prove the correctness of the protocols (e.g., [8, 9, 25]). In contrast to these approaches, our model allows the explicit representation of programs

executing on caches. In this sense, our approach is more similar to

the unformalized work on simulation tools discussed above.

Paper overview. Sect. 2 briefly reviews background concepts

on multicore architectures, Sect. 3 presents our abstract model of

cache coherent multicore architectures, Sect. 4 details the operational semantics for this model, and Sect. 5 the associated correctness properties. Sect. 6 discusses the prototype implementation,

and Sect. 7 concludes the paper.

2.

MULTICORE ARCHITECTURES

Modern multicore architectures consist of components such as

independent processing units or cores, small and fast memory units

or caches associated to one or more cores, and main memory. Cores

execute program instructions and interact with main memory to

load and store data. Cores use caches to speed up their executions. The current market offers different designs for integrating

these components. Cache memory keeps the most recently used

data accessed by the core available for quick reading or writing. A

core reads or writes data as words. The cache is organized in cache

lines. Each cache line contains several words, such that a specific

word can be accessed by the core using a memory reference. Multiple continuous words in main memory form a block, which has a

unique memory address.

An attempt to access data from the cache is called a hit if the

data is found in the cache and a miss otherwise. In the case of

a miss, the block containing the requested data must be fetched

from a lower level in the memory hierarchy (e.g., main memory).

Since caches are small compared to main memory, a fetch instruction may require the eviction of an existing cache line. In this case,

the selection of which cache line to evict depends on how the cache

lines are organized, the so-called cache associativity, and on the

replacement policy. In k-way set associative caches, the caches are

grouped as sets with k cache lines and the memory block can go

anywhere in a particular set. For direct mapped caches, associativity is one and the cache is organized in single-line groups. In fully

associative caches, the entire cache is considered as a single set and

memory blocks can be placed anywhere in the cache. Replacement

policies determine the line to evict from a full cache set when a new

block is fetched into that set. Typical policies are random, FIFO,

and LRU (Least Recently Used).

Multicore architectures use cache coherence protocols to keep

the data stored in different local caches and in main memory consistent. In particular, invalidation-based protocols are characterized

by broadcasting invalidation messages when a particular core requires write access to a memory address. Examples of invalidation

protocols are MSI and its extensions (e.g., MESI and MOESI).

An invalidation-based coherence protocol integrates a finite state

controller in each core, and connects the cores and memory using a broadcast medium (a bus, ring, or other topology). The controller responds to requests from its core and from other cores via

the medium. In the MSI protocol, a cache line can be in one of

the three states: modified, shared, invalid. For a line in a cache, a

modified state indicates that it is the most updated copy, and that all

other copies in the system are invalid, while a shared state indicates

that the copy is shared among one or more caches, and the main

memory and that all copies are consistent. When a core attempts to

access a line which is either invalid or does not exist in the cache,

i.e., a cache miss, it will broadcast a read request. Upon receiving

this message, the core which has a modified copy of the requested

cache line will flush it to the main memory and change the state of

the cache line to shared in both the core and the main memory. For

write operations, the cache line must be in either shared or modified

state. An attempt to write to a cache line in shared state will broadcast an invalidation message to the other cores and the main memory. The state of the cache line will be updated to modified if the

attempt succeeds. Upon the receipt of an invalidation message, a

core will invalidate its copy only if the state is shared. For more details on variations of multicore architectures, coherence protocols,

and memory consistency, the reader may consult, e.g., [6, 12, 20].

Queue of

tasks

{

…

}

Task

Core 1

Core 2

...............

Core N

Local

cache

Labels

{!Rd(n),?RdX(m)}

{!RdX(m),?Rd(n)}

. . . . . . . . . . . . . . . {?Rd(n),?RdX(m)}

Data

instructions

Abstract communication medium

{?Rd(n),?RdX(m)}

Main memory

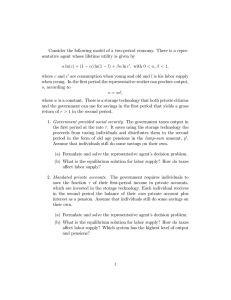

Figure 1: Abstract model of multi-core architecture (illustration).

3.

THE ABSTRACT MODEL

This section describes our abstract model of execution on architectures with shared memory, inter-core communications, and

where cores have a private one-level cache. Figure 1 depicts one

such architecture. The cores in our model execute low-level statements, given as tasks and scheduled by a task queue, reflecting the

read and write operations of a program. These statements interact with local caches and may trigger the movement of data between the caches and main memory, reflected as fetch and flush

data instructions. The exchange of messages between caches and

main memory is captured by an abstract communication medium,

abstracting from different concrete topologies such as bus, ring,

or mesh. Communication in this medium appears to be instantaneous and is captured by labels. If a core needs to access a block of

memory with address n, which is not available with the right permissions in its local cache, it will broadcast a !Rd(n) or !RdX(n)

message to all other components in the configuration to obtain read

or read exclusive permissions to n, respectively, and it will proceed

to fetch the data, if needed. Observe that a read exclusive message will invalidate all other copies of that memory block in other

caches, so the sender can perform a write operation. Consequently,

the consistency of copies of data in different caches in this abstract

model will be maintained by an abstracted version of the basic coherence protocol MSI. Technically, we let synchronization of dual

labels on parallel transitions capture the instantaneous exchange of

messages, as common in process algebra. This mechanism is used

to model the abstract communication medium; a component which

sends a message generates a label and the other components will instantaneously receive the dual labels ?Rd(n) and ?RdX(n) that the

medium automatically generates (e.g., as in Figure 1). For simplicity, the data contained in memory blocks and cache lines is ignored,

a cache line has the same size as a memory block, and data does not

move from one cache to another directly, but indirectly via the main

memory. The model guarantees sequential consistency [14].

Figure 2 contains the syntax of the runtime structure in the model.

The input language consists of tasks with source-level statements

sst. These statements are PrRd(r) for reading from a memory reference r, PrWr(r) for writing to r and commit(r) for flushing r.

Cores execute runtime statements rst, including sst, by interacting

with the local cache. The extra statements are explained as follows.

A core may be blocked during the execution due to a cache miss;

PrRdBl(r) and PrWrBl(r) represent the corresponding waiting

states while data is being fetched from main memory, and commit

forces the flushing of all modified data contained in a cache into

Syntactic

categories.

MM,C, Qu in id

n, m in address

r in reference

k in version

α in hk, statusi

Definitions.

Config ::= MM(M) Qu(sst) CR : H

CR ::=C(MLoc ` rst) : h

MLoc ::= M, ∼, d

M ::= 0/ | M[n 7→hk, statusi]

status ::= mo | sh | inv

d ::= ε | d; d | fetch(n) | flush(n)

sst ::= ε | sst; sst | PrRd(r) | PrWr(r) | commit(r)

rst ::= sst | rst; rst | PrRdBl(r) | PrWrBl(r)

| commit

H ::= ε | H; ev

h ::= ε | h; ev

ev ::=W (C, n) | R(C, n)

Figure 2: Syntax for the abstract model of parallel architectures,

where over-bar denotes sets (e.g., CR) and where ∼ represents the

associativity and replacement policy.

main memory, used at the end of a task to guarantee that all data is

flushed before another task is assigned to the core.

A runtime configuration Config expresses that the multicore

architecture has reached a given state after observing a trace H of

events. The configuration consists of a main memory MM(M), a set

Qu(sst) of tasks to be scheduled and executed in a core, multiple

cores CR, and a global history H of events. Memory maps M bind

memory addresses n to pairs hk, statusi, representing both memory

blocks in main memory and cache lines in cache memory. Each

core CR includes a local cache memory MLoc, a sequence rst of

statements to be executed, and a local history h. A cache memory

MLoc consists of a map M, a function ∼ expressing its cache associativity and replacement policy, and a sequence of data instructions d. In MSI, a cache line can be modified, shared or invalid;

this is captured in our formal syntax by a status mo, sh or inv, respectively. (Since data is first modified in a cache, blocks in main

memory only have status sh or inv, see Sect. 4 below.) We abstract

from the actual data contained in M, but keep the version number k

of the data such that the highest version number together with a sh

or mo status represents the most updated copy of data. The lookup

function M(n) returns the corresponding pair hk, statusi if n is in

the domain of M. Otherwise it returns ⊥, indicating n does not exist in M. Data instructions fetch(n) load memory blocks into

the cache when there is a cache miss and flush(n) store cache

lines in main memory.

Logs. The global history H logs the concurrent global execution

of statements in the cores CR, such that a successfully completed

read or write by a core to a reference r is reflected by an event

ev appended to H. Since many cores execute in parallel, multiple

events may be appended at the same time. In events R(C, n) for

reading and W (C, n) for writing, C is the id of the core and n the

block address in which r is located. The local history h logs the

execution of a core; it appends single events ev whenever a read or

write operation succeeds in the core. Thus, a local history h is a

projection over the global history H with respect to a given core.

4.

OPERATIONAL SEMANTICS

We develop a structural operational semantics (SOS) for our abstract model of cache coherent multicore architectures. In an initial

configuration, all memory blocks in the main memory MM have

status sh and version number 0, the task queue Qu contains a set

of tasks written in the source-level language sst, each core in CR

has an empty cache, and no data instructions as well as no runtime statements. Executions start from an initial configuration by

applying global transition rules, which in turn apply local transi-

∗

tion rules. Let Config → Config0 denote an execution starting from

Config and reaching configuration Config0 by applying zero or more

global transition rules, in which case we call Config0 reachable.

Global steps capture the abstract communication medium with

interactions to flush and fetch data to and from main memory, schedule tasks and follow a global protocol to guarantee data consistency. The communication medium, using labels for instantaneous

communication, allows many cores to request and access different memory blocks in parallel. Therefore, there may in general be

many interactions occurring at the same time and synchronization

of labels on transitions is over a possibly empty sets of labels. We

formally define the syntax for the label mechanism as follows:

W ::= !Rd(n) |!RdX(n)

S ::= 0/ | {W } | S ∪ S

Q ::= ?Rd(n) |?RdX(n)

R ::= 0/ | {Q} | R ∪ R

where S contains at most one label per block address n. Sect. 4.1

details the global rules.

Local steps capture the local transitions in main memory, the

local executions of statements in each core and the local actions

derived from the global protocol to keep local data coherent with

respect to the other components. Sect. 4.2 details the local rules.

4.1

Global Transition Rules

The global steps of the operational semantics are given in Figure 3. These transition rules describe the interactions and communications between the different components in the configuration,

and ensure data synchronization between cores and main memory.

Rule T OP -S YNCH captures the global synchronization for handling a non-empty set S of labels corresponding to broadcast messages. In this rule, R is the set of receiving labels dual to S. The

configuration is updated in two steps: the main memory must accept the set R and the cores must accept the set S.

Rule C ORE -C OMMUNICATION recursively decomposes the label set S into sets of sending and receiving labels distributed over

the cores CR, such that each set eventually contains at most one W

label. Each set of cores must accept the associated set of labels

in the premises of the rule. The decomposition ensures that only

receiving messages are shared between the transitions. The rule

ensures that a core which does not send a message W will receive

the dual message Q. The decomposition also applies to the global

history which projects to the sets CR1 and CR2 , respectively. If the

transitions generate events, these are merged into a set of events

which extends the global history H.

The rule T OP -A SYNCH captures parallel transitions in different components when the set of labels is empty. These transitions can be local to individual cores, parallel memory accesses

or scheduling of new tasks. There are four cases: CR1 perform local transitions without labels, CR2 access main memory, CR3 get

new tasks from the task queue, and CR4 are idle. The decomposition for local transitions, memory accesses, and scheduling of new

tasks is respectively handled by the rules PAR -I NTERNAL -S TEPS,

PAR -M EMORY-ACCESS, and PAR -TASK - S CHEDULER. Let the

predicate disjoint(CR1 ,CR2 , CR3 ,CR4 ) express that the sets of cores

involved in the parallel transitions are disjoint to each other.

Data transfer between a cache and main memory is described

in rules of the form MM(M); (MLoc) → MM(M 0 ); (MLoc0 ). They

capture the execution of data instructions fetch and flush. Here

the function select(M, ∼, n), used in the rules for fetching a data

block with address n, returns the address of the cache line that needs

to be evicted to give space to the data block being fetched. If no

eviction is needed, the select function returns n (cf. rule F ETCH1 ).

Rule F ETCH2 describes the case where we need to evict a nonmodified cache line m; rule F ETCH3 refers to the case where the

(C ORE -C OMMUNICATION )

S

0

(T OP -A SYNCH )

CR = CR1 ∪CR2 ∪CR3 ∪CR4 disjoint(CR1 ,CR2 ,CR3 ,CR4 )

0

0

0

0

CR = CR1 ∪CR2 ∪CR3 ∪CR4 H 0 = H; ev

0

CR1 : H/id(CR1 ) → CR1 : H/id(CR1 ); ev

0

0

MM(M) CR2 → MM(M 0 ) CR2 Qu(sst) CR3 → Qu(sst0 ) CR3

0

MM(M) Qu(sst) CR : H →

− MM(M 0 ) Qu(sst0 ) CR : H 0

(F ETCH1 )

m = select(M2 , ∼2 , n) m = n

M(n) = hk, shi M20 = M2 [n 7→hk, shi]

MM(M); (M2 , ∼2 , fetch(n); d2 )

→ MM(M); (M20 , ∼2 , d2 )

0

S2 ∪R1 ∪R

0

S∪R

0

CR2 : H/id(CR2 ) −−−−−→ CR2 : H/id(CR2 ); ev2

S = S1 ∪ S2 S1 ∩ S2 = 0/ H 0 = H; (ev1 ∪ ev2 )

R1 = {?RdX(n) |!RdX(n) ∈ S1 } ∪ {?Rd(n) |!Rd(n) ∈ S1 }

R2 = {?RdX(n) |!RdX(n) ∈ S2 } ∪ {?Rd(n) |!Rd(n) ∈ S2 }

MM(M) Qu(sst) CR : H →

− MM(M 0 ) Qu(sst) CR : H 0

0/

S ∪R ∪R

1 2

CR1 : H/id(CR1 ) −−

−−−→ CR1 : H/id(CR1 ); ev1

(T OP -S YNCH )

0

R

S

MM(M) →

− MM(M 0 ) CR : H →

− CR : H 0

S 6= 0/ {n |!RdX(n) ∈ S} ∩ {n |!Rd(n) ∈ S} = 0/

R = {?RdX(n) |!RdX(n) ∈ S} ∪ {?Rd(n) |!Rd(n) ∈ S}

0

CR1 CR2 : H −−→ CR1 CR2 : H 0

(PAR -I NTERNAL -S TEPS )

H 0 = H; (ev1 ∪ ev2 )

0

CR1 : H/id(CR1 ) → CR1 : H/id(CR1 ); ev1

0

CR2 : H/id(CR2 ) → CR2 : H/id(CR2 ); ev2

0

0

CR1 CR2 : H → CR1 CR2 : H 0

(F ETCH2 )

m = select(M2 , ∼2 , n) m 6= n M2 (m) 6= hk, moi

M(n) = hk, shi M20 = M2 [m 7→ ⊥, n 7→ hk, shi]

MM(M); (M2 , ∼2 , fetch(n); d2 )

→ MM(M); (M20 , ∼2 , d2 )

(F LUSH1 )

M2 (n) = hk, moi M 0 = M[n 7→hk + 1, shi] M20 = M2 [n 7→hk + 1, shi]

MM(M); (M2 , ∼2 , flush(n); d2 ) → MM(M 0 ); (M20 , ∼2 , d2 )

(PAR -TASK -S CHEDULER )

sst0 = sst\sst

0

Qu(sst0 ) CR → Qu(sst00 ) CR

Qu(sst) CR C(M, ∼, ε ` ε) : h

0

→ Qu(sst00 ) CR C(M, ∼, ε ` sst; commit) : ε

(PAR -M EMORY-ACCESS )

MM(M[n 7→ α]); (MLoc)

→ MM(M[n 7→ α 0 ]); (MLoc0 )

0

0

M = M[n 7→ ⊥] MM(M 0 ) CR → MM(M 00 )CR

MM(M[n 7→ α]) CR C(MLoc ` rst) : h

0

→ MM(M 00 [n 7→ α 0 ]) CR C(MLoc0 ` rst) : h

(F ETCH3 )

m 6= n

m = select(M2 , ∼2 , n) M2 (m) = hk, moi

MM(M); (M2 , ∼2 , fetch(n); d2 )

→ MM(M); (M2 , ∼2 , flush(m); fetch(n); d2 )

(F LUSH2 )

M2 (n) 6= hk, moi ∨ n 6∈ dom(M2 )

MM(M); (M2 , ∼2 , flush(n); d2 ) → MM(M); (M2 , ∼2 , d2 )

Figure 3: Global semantics for cache coherent multicore architectures

cache line m to be evicted has status mo, so it needs to be flushed before cache line n can be loaded. Rules F ETCH1 and F ETCH2 check

that the cache line has status sh in main memory, otherwise the

instruction is blocked until the data is flushed from another cache.

Rule F LUSH1 stores a cache line in main memory, incrementing

the version number and setting its status to sh both in the cache and

main memory. Rule F LUSH2 discards the flush(n) instruction if

the cache line n is no longer modified (or has been evicted).

4.2

Local Transition Rules

The rules in this section capture local transitions in either the

cores or the main memory, and are given in Figure 4. Local rules in

the cores reflect the statements being executed and the local finite

state controller enforcing the MSI protocol. Let addr(r) denote the

block address that contains the reference r, and status(M, n) the

status of cache line n in the map M.

In the main memory, the controller sets the status of a block to

inv in rule O NE -L INE -M AIN -M EMORY1 if exclusive access has

been requested. Rule O NE -L INE -M AIN -M EMORY2 will always

accept a shared read request. Rules M AIN -M EMORY1 and M AIN M EMORY2 are distribution rules for sets of labels.

Label sets are decomposed by the S END -R ECEIVE -M ESSAGE

(which has only one W label), R ECEIVE -E MPTY, and R ECEIVE M ESSAGE rules in order to feed the finite state controller. A core

can only receive an exclusive request ?RdX(n) for a cache line that

is not modified. Rule I NVALIDATE -O NE -L INE sets the status of

cache line n to inv if the cache line has status sh when the core

receives a ?RdX(n) message. Rule I GNORE -I NVALIDATE -O NE L INE ignores any read exclusive message for an invalid cache line,

or for a block which is not in the cache. For messages ?Rd(n), if the

cache line n has status mo, rule F LUSH -O NE -L INE adds a flush

to the head of the data instructions d (to avoid deadlock), otherwise

rule I GNORE -F LUSH -O NE -L INE ignores the message.

Read statements succeed if the cache line n containing the requested reference r is available in the cache, applying rule P R R D1 .

Otherwise, a fetch(n) instruction is added to the tail of the data

instructions d in rule P R R D2 . In this case, execution is blocked by

the statement PrRdBl(r). Execution may proceed once the block n

has been copied into the cache, captured by rule P R R D B LOCK1 . In

the parallel setting, the cache line may get invalidated while the

core is still blocked after fetch. Rule P R R D B LOCK2 captures

this situation and broadcasts the !Rd(n) message again.

Rule P RW R1 expresses that a write statement PrWr(r) succeeds

when the memory block has mo status in cache memory. If the

cache line is shared, the core needs to get exclusive access, captured by rule P RW R2 . If the cache line is invalid (or the block

is not in the cache), the core first needs to request the cache line

in rule P RW R3 . Similar to the case for read, we use a statement

PrWrBl(r) and the rule P RW R B LOCK1 to block repeated read

requests. Once the cache line has status sh, rule P RW R B LOCK2

requests exclusive access, as in rule P RW R2 .

The statements commit(r) and commit are respectively used

to force flushing of a single modified cache line and of the entire

cache. Rules C OMMIT1 and C OMMIT2 capture the single cache

line for modified and non-modified cache lines, respectively. Rules

C OMMIT-A LL1 and C OMMIT-A LL2 reduce a commit statement

to a sequence of flush-instructions. In order to ensure data consistency among main memory and individual caches, the final statement in a task should be commit (see rule PAR -TASK -S CHEDULER

in Figure 3), in this way all modified data in the cache will be

flushed before another task is assigned to the core.

5.

CORRECTNESS

We consider correctness properties for the proposed model, including the preservation of program order in cores, the absence of

data races, and successful accesses to memory locations always

retrieve the most recent value1 . We first define a function which

translates statements into event histories:

D EFINITION 1. Let addr(r) = n. Define rst ↓C inductively over rst:

(PrRd(r); rst) ↓C = R(C, n); rst ↓C

(PrRdBl(r); rst) ↓C = R(C, n); rst ↓C

(PrWr(r); rst) ↓C =W (C, n); rst ↓C

(PrWrBl(r); rst) ↓C =W (C, n); rst ↓C

(commit(r); rst) ↓C = rst ↓C

commit ↓C = ε

ε ↓C = ε

1 The proofs of the lemmas in this section can be found in Appendix A.

R

(M AIN -M EMORY1 )

Q

MM(M) →

− MM(M 0 ) MM(M 0 ) −

→ MM(M 00 )

R∪{Q}

MM(M) −−−−→ MM(M 00 )

(M AIN -M EMORY2 )

0/

MM(M) →

− MM(M)

(S END -R ECEIVE -M ESSAGE )

R

C(MLoc ` rst) : h →

− C(MLoc0 ` rst) : h

W

C(MLoc0 ` rst) : h −

→ C(MLoc00 ` rst0 ) : h0

R∪{W }

C(MLoc ` rst) : h −−−−→ C(MLoc00 ` rst0 ) : h0

(I NVALIDATE -O NE -L INE )

M(n) = hk, shi

M 0 = M[n 7→hk, invi]

C(M, ∼, d ` rst) : h

?RdX(n)

−−−−→ C(M, ∼, d ` rst) : h

?RdX(n)

(P R R D1 )

n = addr(r) status(M, n) 6= inv

C(M, ∼, d ` PrRd(r); rst) : h

→ C(M, ∼, d ` rst) : h; R(C, n)

(P R R D B LOCK2 )

n = addr(r) status(M, n) = inv

d = d; fetch(n) M 0 = M[n 7→ ⊥]

C(M, ∼, d ` PrRdBl(r); rst) : h

0

!Rd(n)

−−−→ C(M 0 , ∼, d0 ` PrRdBl(r); rst) : h

(P RW R3 )

n = addr(r) status(M, n) = inv ∨ n 6∈ dom(M)

0

d = d; fetch(n) M 0 = M[n 7→ ⊥]

C(M, ∼, d ` PrWr(r); rst) : h

!Rd(n)

−−−→ C(M 0 , ∼, d0 ` PrWrBl(r); rst) : h

(C OMMIT1 )

n = addr(r) status(M, n) = mo

0

d = d; flush(n)

C(M, ∼, d ` commit(r); rst) : h

→ C(M, ∼, d0 ` rst) : h

?RdX(n)

Q

C(MLoc0 ` rst) : h −

→ C(MLoc00 ` rst) : h

R∪{Q}

C(MLoc ` rst) : h −−−−→ C(MLoc00 ` rst) : h

(F LUSH -O NE -L INE )

n ∈ dom(M) status(M, n) = mo

d0 = flush(n); d

C(M, ∼, d ` rst) : h

?Rd(n)

−−−→ C(M, ∼, d0 ` rst) : h

!Rd(n)

−−−→ C(M 0 , ∼, d0 ` PrRdBl(r); rst) : h

(P RW R1 )

n = addr(r) status(M, n) = mo

C(M, ∼, d ` PrWr(r); rst) : h

→ C(M, ∼, d ` rst) : h;W (C, n)

(P RW R B LOCK1 )

n = addr(r) status(M, n) = inv

d = d; fetch(n) M 0 = M[n 7→ ⊥]

C(M, ∼, d ` PrWrBl(r); rst) : h

0

−−−→ C(M 0 , ∼, d0 ` PrWrBl(r); rst) : h

(C OMMIT2 )

n = addr(r)

status(M, n) 6= mo ∨ n 6∈ dom(M)

C(M, ∼, d ` commit(r); rst) : h

→ C(M, ∼, d ` rst) : h

?Rd(n)

MM(M) −−−→ MM(M)

0

(R ECEIVE -M ESSAGE )

R

C(MLoc ` rst) : h →

− C(MLoc0 ` rst) : h

(P R R D2 )

n = addr(r) status(M, n) = inv ∨ n 6∈ dom(M)

d0 = d; fetch(n) M 0 = M[n 7→ ⊥]

C(M, ∼, d ` PrRd(r); rst) : h

!Rd(n)

(O NE -L INE -M AIN -M EMORY2 )

MM(M) −−−−→ MM(M )

(R ECEIVE - EMPTY )

C(MLoc ` rst) : h

0/

→

− C(MLoc ` rst) : h

(I GNORE -I NVALIDATE -O NE -L INE )

n 6∈ dom(M) ∨ status(M, n) = inv

C(M, ∼, d ` rst) : h

−−−−→ C(M 0 , ∼, d ` rst) : h

(O NE -L INE -M AIN -M EMORY1 )

M 0 = M[n 7→hk, invi]

(I GNORE -F LUSH -O NE -L INE )

n 6∈ dom(M) ∨ status(M, n) 6= mo

C(M, ∼, d ` rst) : h

?Rd(n)

−−−→ C(M, ∼, d ` rst) : h

(P R R D B LOCK1 )

n = addr(r) status(M, n) = sh

C(M, ∼, d ` PrRdBl(r); rst) : h

→ C(M, ∼, d ` rst) : h; R(C, n)

(P RW R2 )

n = addr(r) M(n) = hk, shi M 0 = M[n 7→hk, moi]

C(M, ∼, d ` PrWr(r); rst) : h

!RdX(n)

−−−−→ C(M 0 , ∼, d ` rst) : h;W (C, n)

(P RW R B LOCK2 )

n = addr(r) M(n) = hk, shi M 0 = M[n 7→hk, moi]

C(M, ∼, d ` PrWrBl(r); rst) : h

!RdX(n)

−−−−→ C(M 0 , ∼, d ` rst) : h;W (C, n)

(C OMMIT-A LL1 )

status(M, n) = mo

flush(n) 6∈ d d0 = d; flush(n)

C(M, ∼, d ` commit) : h

→ C(M, ∼, d0 ` commit) : h

(C OMMIT-A LL2 )

∀n ∈ dom(M).status(M, n) 6= mo

C(M, ∼, d ` commit) : h

→ C(M, ∼, d ` ε) : h

Figure 4: Local semantics for cache coherent multicore architectures

Intuitively, rst ↓C reflects the expected program order of read and

write accesses when executing rst directly on main memory. Note

that ε; h = h. We show that execution with local cache preserves

this program order:

L EMMA 1

(P ROGRAM ORDER ).

If C(M, ∼, ε ` rst) : ε →∗ C(M 0 , ∼, d ` rst0 ) : h , then h; rst0 ↓C = rst ↓C .

The next lemma states properties about data races when accessing a memory block from main memory.

L EMMA 2 (N O DATA RACES ). The following properties hold for

all reachable configurations MM(M) Qu(sst) CR : H:

(a) ∀n ∈ dom(M).(status(M, n) = inv ⇔

∃ Ci (Mi , ∼i , di ` rsti ) : hi ∈ CR. status(Mi , n) = mo)

(b) ∀n ∈ dom(M). (status(M, n) = inv ⇔

(∃ CRi ∈ CR where CRi = Ci (Mi , ∼i , di ` rsti ) : hi . status(Mi , n) = mo)

∧ ∀ C j (M j , ∼ j , d j ` rst j ) : h j ∈ CR\CRi .

(status(M j , n) = inv ∨ n 6∈ dom(M j )))

(c) ∀n ∈ dom(M). (status(M, n) = sh ⇔

(∀ Ci (Mi , ∼i , di ` rsti ) : hi ∈ CR. status(Mi , n) 6= mo))

(d) ∀ Ci (Mi , ∼i , di ` rsti ) : hi ∈ CR, ∀n ∈ dom(Mi ).

(status(Mi , n) = sh ⇒ status(M, n) = sh)

Lemma 2 guarantees that there is at most one modified copy of

a memory block among the cores. This ensures single write access

and parallel read access to the memory blocks.

The following lemma shows that the shared copies of a memory

block n in different cores always have the same version number.

Let the function version(M, n) return the version number of block

address n in M.

L EMMA 3 (C ONSISTENT SHARED COPIES ). Given a reachable

configuration MM(M) Qu(sst) CR and n ∈ dom(M): If status(M, n) = sh,

and if for any C j (M j , ∼ j , d j ` rst j ) : h j ∈ CR such that status(M j , n) = sh,

then version(M, n) = version(M j , n).

We define the most recent value of a memory block as follows:

D EFINITION 2 (M OST RECENT VALUE ). For a global configuration MM(M)Qu(sst) CR, a memory location n, and a core CRi ∈ CR,

where CRi = Ci (Mi , ∼i , di ` rsti ) : hi . Mi (n) has the most recent value if the

following holds.

(a) If Mi (n) = hk, shi, and ∀ C j (M j , ∼ j , d j ` rsti ) : h j ∈ CR\CRi .

status(M j , n) = sh, then M j (n) = hk, shi, and M(n) = hk, shi.

(b) If status(Mi , n) = mo, then status(M, n) = inv, and

∀ C j (M j , ∼ j , d j ` rsti ) : h j ∈ CR\CRi . status(M j , n) = inv.

With Lemma 3 and Definition 2, the following lemma shows that

if a core succeeds to access a memory block, it will always get the

most recent value.

L EMMA 4 (N O ACCESS TO STALE DATA ). Let MM(M) Qu(sst)

CR be a reachable configuration such that CRi = Ci (Mi , ∼i , di ` rsti ) : hi for

CRi ∈ CR. Given a block address n and an event e ∈ {R(Ci , n),W (Ci , n)},

!RdX(n)

we have that: if CRi : hi → CR0i : hi ; e or CRi : h −−−−→ CR0i : hi ; e, then

Mi (n) has the most recent value.

6.

PROOF OF CONCEPT INTERPRETER

The proposed operational semantics has been implemented in

Maude [4], a high-level declarative language for executable specifications based on rewriting logic, which supports different analysis

strategies. Maude has been proposed as a semantic framework for

concurrent and distributed systems; a parallel system can be axiomatized using equational theory to model the states and rewrite

rules can be used to model the system’s dynamic behaviour. Maude

specifications can be executed using rewrite commands which implement top-down rule fair and depth-first position fair strategies.

Maude also supports searching through all reachable states for states

in which invariants are violated such that we can verify invariant

properties for all execution paths of concrete examples.

To implement the semantics as an executable specification2 , configurations are defined as multi-sets of Maude objects where each

object has a unique identity and the communications between objects are done by message passing. To make the operational semantics executable, we need to concretize the address-function and the

associativity and replacement policy of the caches. This is now a

configurable parameter to the cache objects in the implementation.

Local transition rules, for the execution of both low-level statements and data instructions, are specified as rewrite rules. Messages between the cores are identified operationally and not declared for the global transitions. The main challenge in the implementation was the C ORE -C OMMUNICATION rule, because Maude

does not support label synchronization on transitions as in the operational semantics. Instead we implemented a broadcast operation

using a sink to collect messages from different cores, which then

multicasts the messages to the other cores using equational logic.

The version numbers for cache lines and the histories in the semantics serve the purpose of book-keeping to prove Lemmas 1, 3 and 4

and have been omitted in Maude. Instead, we have added counters

for cache hits and cache misses per cache, to collect information

about the cache hit/miss ratio during simulations.

6.1

Simulations

This section looks closer at the Maude implementation of the

operational semantics, discussed above. We need to make the abstract associativity function ∼ executable. In the implementation,

the function Associative(k) is used to store the associativity of the

cache memory where k is the number of cache lines in each cache

set. For direct mapped caches, k is 1 while it is equal to the cache

size for fully associative caches. Each cache set has an associated

index which helps to find the particular cache set at the time of

fetching. Even though the implementation evicts cache lines randomly, first and second priority will be given for invalid and shared

cache lines, respectively.

Example. Fig. 5 shows an initial configuration {conf0} of the

Maude implementation of the operational semantics. Each core is

modelled by a Maude object with an identifier ci and a group of

attributes such as MLoc for the memory map M, CacheSize for

total number of cache lines in the private cache, ∼ for the cache

associativity, Rst for executing the task (rst), D for data instruction

queue, Misses for counting the local cache misses, and Hits for

counting the local cache hits.

The TBL object is used to concretize the address-function. Here,

the Addr attribute stores a map of bindings ref (r) 7→ n which de2 The Maude prototype is available at http://folk.uio.no/shijib/

musepat2016maude.zip

eq conf0 =

h c1 : CR | MLoc : empty, Rst : nil, D : nil, Misses : 0,

Hits : 0, CacheSize : 10, ~ : Associative(1) i

h c2 : CR | MLoc : empty, Rst : nil, D : nil, Misses : 0,

Hits : 0, CacheSize : 10, ~ : Associative(1) i

h c3 : CR | MLoc : empty, Rst : nil, D : nil, Misses : 0,

Hits : 0, CacheSize : 10, ~ : Associative(1) i

h sch : Qu | Sst : ( PrWr(0) PrRd(0) PrWr(1)), (PrWr(2)

PrWr(3)), (PrWr(2) PrRd(7)), (PrRd(6) PrWr(7)) i

h tbl : TBL | Addr : ref(0) 7→0, ref(1) 7→1, ref(2) 7→

2, ref(3) 7→3, ref(4) 7→4 , ref(5) 7→5, ref(6) 7→

6 ,ref(7) 7→7 i h sy : Sync | Data : nil i

h m : MM | M : 0 7→sh, 1 7→sh, 2 7→sh,3 7→sh, 4 7→sh,

5 7→sh, 6 7→sh, 7 7→sh, missCount : 0 i .

Figure 5: Example of an initial configuration.

rewrites : 5303 in 5ms cpu (5ms real) (925318

rewrites/second)result GlobalSystem : {

h c1 : CR | MLoc : 0 7→0 7→sh, 1 7→1 7→sh, 6 7→6 7→sh,

7 7→7 7→sh,Rst : nil,D : nil, Misses : 4, Hits : 1,

CacheSize : 10, ~ : Associative(1) i

h c2 : CR | MLoc : 2 7→2 7→inv, 7 7→7 7→sh, Rst : nil,

D : nil,Misses : 2, Hits : 0,CacheSize : 10,

~ : Associative(1) i

h c3 : CR | MLoc : 2 7→2 7→sh, 3 7→3 7→sh, Rst : nil,

D : nil,Misses : 2, Hits : 0,CacheSize : 10,

~ : Associative(1) i

h sch : Qu | Sst : empty i h sy : Sync | Data : nil i

h tbl : TBL | Addr : ref(0) 7→0, ref(1) 7→1, ref(2) 7→

2, ref(3) 7→3, ref(4) 7→4, ref(5) 7→5, ref(6) 7→

6, ref(7) 7→7 i

h m : MM | M : 0 7→sh, 1 7→sh, 2 7→sh, 3 7→inv, 4 7→sh,

5 7→sh, 6 7→sh, 7 7→sh,missCount : 8 i }

Figure 6: Example of a resulting final object configuration.

fines the address(r) function to find memory block where a

given reference is stored.

The MM object implements the main memory. Here, the M attribute stores a map of bindings n 7→ status. The attribute missCount

counts the number of fetch operations which have taken place in

the configuration during the execution.

The Sync object implements the label set synchronisation during message passing. Its Data attribute collects broadcast messages without causing any data race to the same memory address.

When a consistent set of messages has been collected, they are delivered to the system.

The sch object is the scheduler which allocates tasks (i.e., statement sequences) to each core when its executing task is empty.

There is no Version number in this Maude model.

The Maude model is executed by the command frew {conf0}

The output from a simulation shows one of the possible final object

configurations, as in Fig. 6. In this final configuration, all cores

have completed their task queue and committed all modified copies

to the main memory.

The Maude search command allows all possible states from an

initial configuration {conf0} to be explored. The search command is written search {conf0} −→ {C : Configuration}

where C is a variable of sort Configuration which matches

with all global configurations. We can also use search command to

prove safety properties by testing a predicate on the matches. For

example, the search condition in Fig. 7 detects violations of the in-

variant in Lemma 2(a), if any. Since no solutions are found, the

search shows that the invariant was never violated from this initial

state.

search {conf0} −→ ∗{C : Configuration

h A : Oid : CR | MLoc : (x : Int 7→(y : Int 7→stA : Status),

Atts i h B : Oid : CR | MLoc : (x : Int 7→(y : Int 7→

stB : Status), Atts i h C : Oid : CR | MLoc : (x : Int 7→

(y : Int 7→stC : Status), Atts i h O : Oid : MM |

M : (y : Int 7→stM : Status, D : DataSet), Atts i

h tbl : Oid : TBL | Atts i h sy : Oid : Sync | Atts i

h sch : Oid : Qu | Atts i }

such that (stM : Status = inv) and

((stA : Status = mo and stB : Status = mo) or

(stA : Status 6= mo and stB : Status 6= mo and

stC : Status 6= mo)) .

No solution.

Figure 7: Example for search.

7.

CONCLUSIONS

Slogans such as “move the processes closer to the data” reflect

how data location is becoming increasingly important in parallel

computing. To study how computations and data locations interfere, formal models which account for the location of data and the

penalties associated with data access may help the system developer. This paper proposes a basis for such formal models in terms

of an operational semantics of execution on cache coherent multicore architectures. The proposed model also opens for reasoning

about the proximity of processes and data using techniques from

programming languages research such as subject reduction proofs.

In this paper, the semantics incorporates the MSI cache coherence protocol. In the semantics, version numbers and histories are

only needed for correctness proofs, and have been omitted from the

prototype implementation. Obvious extensions to our work include

dynamic task creation, loops, and choice in the statement language,

which all extend the operational semantics with standard rules. Going beyond these language extensions, we plan to use the presented

semantics in future work to study data layout in main memory, as

well as synchronization mechanisms between tasks and scheduling

policies to improve the cache hit/miss ratio. The long term goal is

to feed such analyses into a compiler for a high-level programming

language which targets multicore architectures, such as Encore [2].

8.

REFERENCES

[1] N. L. Binkert, B. M. Beckmann, G. Black, S. K. Reinhardt,

A. G. Saidi, A. Basu, J. Hestness, D. Hower, T. Krishna,

S. Sardashti, R. Sen, K. Sewell, M. Shoaib, N. Vaish, M. D.

Hill, and D. A. Wood. The gem5 simulator. SIGARCH

Computer Architecture News, 39(2):1–7, 2011.

[2] S. Brandauer, E. Castegren, D. Clarke, K. Fernandez-Reyes,

E. B. Johnsen, K. I Pun, S. L. Tapia Tarifa, T. Wrigstad, and

A. M. Yang. Parallel objects for multicores: A glimpse at the

parallel language Encore. In Formal Methods for Multicore

Programming, LNCS 9104, pages 1–56. Springer, 2015.

[3] T. Carlson, W. Heirman, and L. Eeckhout. Sniper: Exploring

the level of abstraction for scalable and accurate parallel

multi-core simulation. In Intl. Conf. on High Performance

Computing, Networking, Storage and Analysis (SC’11),

pages 1–12, 2011.

[4] M. Clavel, F. Durán, S. Eker, P. Lincoln, N. Martí-Oliet,

J. Meseguer, and C. L. Talcott, editors. All About Maude - A

High-Performance Logical Framework, How to Specify,

Program and Verify Systems in Rewriting Logic, LNCS 4350.

Springer, 2007.

[5] K. Crary and M. J. Sullivan. A calculus for relaxed memory.

In Proc. POPL, pages 623–636. ACM, 2015.

[6] D. E. Culler, A. Gupta, and J. P. Singh. Parallel Computer

Architecture: A Hardware/Software Approach. Morgan

Kaufmann, 1997.

[7] G. Delzanno. Constraint-based verification of parameterized

cache coherence protocols. Formal Methods in System

Design, 23(3):257–301, 2003.

[8] D. L. Dill, A. J. Drexler, A. J. Hu, and C. H. Yang. Protocol

verification as a hardware design aid. In Proc. Intl. Conf. on

Computer Design (ICCD’92), pages 522–525. IEEE, 1992.

[9] D. L. Dill, S. Park, and A. G. Nowatzyk. Formal specification

of abstract memory models. In Proc. Symp. on Research on

Integrated Systems, pages 38–52. MIT Press, 1993.

[10] B. Dongol, O. Travkin, J. Derrick, and H. Wehrheim. A

high-level semantics for program execution under total store

order memory. In Theoretical Aspects of Computing (ICTAC

2013), LNCS 8049, pages 177–194. Springer, 2013.

[11] E. A. Emerson, and V. Kahlon. Rapid parameterized model

checking of snoopy cache coherence protocols. In Tools and

Algorithms for the Construction and Analysis of Systems

(TACAS 2003), LNCS 2619, pages 144–159. Springer, 2003.

[12] J. L. Hennessy and D. A. Patterson. Computer Architecture:

A Quantitative Approach. Morgan Kaufmann, 2011.

[13] R. Jagadeesan, C. Pitcher, and J. Riely. Generative

operational semantics for relaxed memory models. In Proc.

ESOP, LNCS 6012, pages 307–326. Springer, 2010.

[14] L. Lamport. How to make a multiprocessor computer that

correctly executes multiprocess programs. IEEE Trans.

Comput., 28(9):690–691, 1979.

[15] Y. Li, V. Suhendra, Y. Liang, T. Mitra, and A. Roychoudhury.

Timing analysis of concurrent programs running on shared

cache multi-cores. In Real-Time Systems Symp. (RTSS 2009),

pages 57–67. IEEE Press, Dec 2009.

[16] S. Mador-Haim, L. Maranget, S. Sarkar, K. Memarian,

J. Alglave, S. Owens, R. Alur, M. M. K. Martin, P. Sewell,

and D. Williams. An axiomatic memory model for POWER

multiprocessors. In Proc. CAV, LNCS 7358, pages 495–512.

Springer, 2012.

[17] M. M. K. Martin, D. J. Sorin, B. M. Beckmann, M. R. Marty,

M. Xu, A. R. Alameldeen, K. E. Moore, M. D. Hill, and

D. A. Wood. Multifacet’s general execution-driven

multiprocessor simulator (gems) toolset. SIGARCH Comput.

Archit. News, 33(4):92–99, Nov. 2005.

[18] J. E. Miller, H. Kasture, G. Kurian, C. G. III, N. Beckmann,

C. Celio, J. Eastep, and A. Agarwal. Graphite: A distributed

parallel simulator for multicores. In High-Performance

Computer Architecture (HPCA-16), pages 1–12. IEEE, 2010.

[19] J. Pang, W. Fokkink, R. F. H. Hofman, and R. Veldema.

Model checking a cache coherence protocol of a Java DSM

implementation. J. Log. and Alg. Prog., 71(1):1–43, 2007.

[20] D. A. Patterson and J. L. Hennessy. Computer Organization

and Design: The Hardware/Software Interface. Morgan

Kaufmann, 2013.

[21] F. Pong and M. Dubois. Verification techniques for cache

coherence protocols. ACM Comp. Surv., 29(1):82–126, 1997.

[22] S. Sarkar, P. Sewell, J. Alglave, L. Maranget, and

D. Williams. Understanding POWER multiprocessors. In

Proc. PLDI, pages 175–186. ACM, 2011.

[23] P. Sewell, S. Sarkar, S. Owens, F. Z. Nardelli, and M. O.

Myreen. X86-TSO: A rigorous and usable programmer’s

model for x86 multiprocessors. CACM, 53(7):89–97, 2010.

[24] G. Smith, J. Derrick, and B. Dongol. Admit your weakness:

Verifying correctness on TSO architectures. In 11th Intl.

Symp. on Formal Aspects of Component Softwares, LNCS

8997, pages 364–383. Springer, 2014.

[25] X. Yu, M. Vijayaraghavan, and S. Devadas. A proof of

correctness for the Tardis cache coherence protocol. arXiv

preprint, arXiv:1505.06459, 2015.

APPENDIX

A. SUPPLEMENTARY PROOFS

A.1

Proof of Lemma 1

or the runtime statements, the invariant holds for the configuration

after the step.

Case C OMMIT1 : C(M 0 , ∼, d0 ` commit(r); rst00 ) : h0 →

C(M 0 , ∼, d00 ` rst00 ) : h0

We are further given in the case that n = addr(r). Then by induction and by Definition 1, we have h0 ; (commit(r); rst00 ) ↓C =

h0 ; ε; rst00 ↓C = h0 ; rst00 ↓C , where the lemma holds. The other three

commit cases work analogously.

The rest of the rules in Figure 4 is not applicable because they

do not affect either the runtime statements or the local history.

A.2

Proof of Lemma 2

P ROOF. An initial configuration MM(M) Qu(sst) CR : H satisfies the lemma since all the memory blocks in the main memory

have the status shared, and all cores have empty caches and no data

instructions or runtime statements.

Next we are going to show the preservation of the invariant over

all transition steps:

S

P ROOF. It is trivial to see that the lemma holds for the initial

case where the number of transition step is zero, i.e., ε; rst ↓C =

rst ↓C . Next we are going to show the preservation of the local invariant over the transition steps. By induction hypothesis, we have

C(M, ∼, ε ` rst) : ε →k C(M 0 , ∼, d0 ` rst0 ) : h0 , where h0 ; rst0 ↓C =

rst ↓C . Note that k here refers to k transition steps. Next we have to

show the lemma still holds for the k + 1 th step.

Assume C(M 0 , ∼, d0 ` rst0 ) : h0 → C(M 00 , ∼, d00 ` rst00 ) : h00 , and

the step may be labelled. The proof proceeds by case distinction on

the rules for the local transition steps from Figure 4.

0

MM(M) Qu(sst) CR : H →

− MM(M 0 ) Qu(sst0 ) CR : H 0

(1)

where S is a set of sending messages. Remember that S contains

at most one message for each block address n. In the following,

the proof proceeds by case distinction on the rules for the transition

steps. By induction, the configuration MM(M) Qu(sst) CR : H

satisfies the lemma.

We first consider the case where S = 0.

/ Let CRi ∈ CR where

CRi = Ci (Mi , ∼i , di ` rsti ) : hi . By rule T OP -A SYNCH in Figure 3,

there are three possibilities of a reduction step when S = 0:

/ (1) it

can either be an internal transition in CRi (cf. rule PAR -I NTERNALS TEPS); or (2) a global step for the communication between CRi

Case P R R D1 : C(M 0 , ∼, d0 ` PrRd(r); rst00 ) : h0 →

and the main memory (cf. rule PAR -M EMORY-ACCESS); or (3) a

C(M 0 , ∼, d0 ` rst00 ) : h0 ; R(C, n)

global step for CRi to get a new task from the task queue (cf. rule

We are further given in the case that n = addr(r). Then by induction

PAR -TASK -S CHEDULER).

and Definition 1, we get h0 ; (PrRd(r); rst00 ) ↓C = h0 ; R(C, n); rst00 ↓C ,

For case (1), after the decomposition with rule PAR -I NTERNALwhich concludes the case. It is analogous for the case of P R R D B LOCK1 . S TEPS, the relevant internal transitions local in CRi include: P R R D1 ,

P R R D B LOCK1 , P RW R1 , and the four commit-rules in Figure 4.

!Rd(n)

Case P R R D2 : C(M 0 , ∼, d0 ` PrRd(r); rst00 ) : h0 −−−−→

These steps do not have any effect on the status of any memory

00

00

00

0

C(M , ∼, d ` PrRdBl(r); rst ) : h

block address in either the local cache or the main memory. By

We are further given in the case that n = addr(r). Then by induction

induction, the configuration before the step satisfies the invariant.

and Definition 1, we have h0 ; (PrRd(r); rst00 ) ↓C = h0 ; R(C, n); rst00 ↓C .

Hence, the invariant still holds after the transition, which concludes

For the configuration after the step, we have h0 ; (PrRdBl(r); rst00 ) ↓C

this case.

= h0 ; R(C, n); rst00 ↓C by Definition 1, which concludes the case.

For case (2), decomposing the global configuration with rule

The case of P R R D B LOCK2 works similarly.

PAR -M EMORY-ACCESS will ultimately lead to the application of

one of the rules for fetching/flushing a block address n from/to the

Case P RW R1 : C(M 0 , ∼, d0 ` PrWr(r); rst00 ) : h0 →

main memory in Figure 3.

C(M 0 , ∼, d0 ` rst00 ) : h0 ;W (C, n)

We are further given in the case that n = addr(r). By induction and

Definition 1, we have h0 ; (PrWr(r); rst00 ) ↓C = h0 ;W (C, n); rst00 ↓C ,

which concludes the case. The invariant holds for the cases of

P RW R2 and P RW R B LOCK2 in the similar way.

!Rd(n)

CaseP RW R3 :C(M 0 , ∼, d0 ` PrWr(r); rst00 ) : h0 −−−−→

C(M 00 , ∼, d00 ` PrWrBl(r); rst00 ) : h0

We are further given in the case that n = addr(r). Then by induction

and by Definition 1, we have h0 ; (PrWr(r); rst00 ) ↓C = h0 ;W (C, n);

rst00 ↓C . For the configuration after the step, we have h0 ; (PrWrBl(r);

rst00 ) ↓C = h0 ;W (C, n); rst00 ↓C by Definition 1, which concludes the

case.

!Rd(n)

Case P RW R B LOCK1 :C(M 0 , ∼, d0 ` PrWrBl(r); rst00 ) : h0 −−−−→

C(M 00 , ∼, d00 ` PrWrBl(r); rst00 ) : h0

We know by induction that the configuration before the step satisfies the invariant. Since the given step does not change either h0

Case F ETCH1 :

MM(M); (Mi , ∼i , fetch(n); di ) → MM(M); (Mi0 , ∼i , di0 )

We are further given in this case that M(n) = hk, shi, i.e., status(M, n)

= sh, and Mi0 = Mi [n 7→hk, shi] where n0 = select(Mi , ∼i , n) and

n0 = n. By induction, the invariant holds for the configuration before the step, and therefore status(M, n) = sh implies by part (c) of

the invariant that ∀ C j (M j , ∼ j , d j ` rst j ) : h j ∈ CR. status(M j , n) 6=

mo before the step. Note that there exists a core CR j such that

CR j = CRi . Since after the step status(Mi0 , n) = status(M, n) = sh,

the invariant is maintained. It is analogous for the case F ETCH2 .

Case F LUSH1 :

MM(M);Ci (Mi , ∼i , flush(n); di ) → MM(M 0 );Ci (Mi0 , ∼i , di0 )

In addition, we have in this case Mi (n) = hk, moi. Since by induction, the lemma holds for the configuration before the transition step, by part (a) of the invariant from the induction gives

status(M, n) = inv, and part (b) gives ∀ C j (M j , ∼ j , d j ` rst j ) : h j ∈

{?RdX(n)}

CR\CRi .(status(M j , n) = inv ∨ n 6∈ dom(M j ) before the transition.

We are additionally given that Mi0 = Mi [n 7→hk + 1, shi] and M 0 =

M[n 7→hk + 1, shi] for the configuration after the step, which satisfies part (c) and part (d) of the lemma, and therefore conclude the

case.

The premise MM(M) −−−−−−→ MM(M 0 ) correspond to the transition in the main memory. Then, by rules M AIN -M EMORY1 and

The cases F ETCH3 and F LUSH2 hold immediately because the

transition steps do not change the status of any memory location in

either the main memory or cache local in a core.

For the cores CR, rule T OP -S YNCH gives CR −−−−−−→ CR .

Then by recursive decomposition with rule C ORE -C OMMUNICATION,

Case (3) corresponds to the situation where CRi = Ci (Mi , ∼i , ε `

ε) : h. This case immediately holds because the step has no effect

on the status of any block address in any caches or main memory.

for all CR j ∈ CR\CRi and for some CR0j . For the step CRi −−−−−−→

CR0i , by rule S END -R ECEIVE -M ESSAGE in Figure 4, we get

Now we have to consider the set of sent messages S for a transition step in Equation (1) is not empty. The only applicable rule

for the case where S 6= 0/ is T OP -S YNCH in Figure 3, where updates

will be done to the main memory and to each core, respectively.

Consider a block address n and assume CRi sends a message W

for (exclusively) reading n in the following. Let us assume S = S0 ∪

{W }, where W can be either (i) !Rd(n) or (ii) !RdX(n). Note that

there is at most one message for each block address n in S. Because

of this property, and to keep the proof clear, we further assume

that S0 = 0;

/ that is, no other core sends any message apart from

CRi . The proof is applicable to all other cores in parallel which

send messages not relevant to address n. We further simplify the

proof for this case by omitting both the global and local histories in

the configurations because they do not affect the correctness of the

proof.

{!Rd(n)}

0

Case (i): MM(M) Qu(sst) CR −−−−−→ MM(M 0 ) Qu(sst0 ) CR

By rule T OP -S YNCH in Figure 3, we are given that R = {?RdX(n) |

!RdX(n) ∈ S} ∪ {?Rd(n) |!Rd(n) ∈ S}. In this case, we have R =

{?Rd(n)}.

{?Rd(n)}

The premise MM(M) −−−−−→ MM(M 0 ) correspond to the transition in the main memory. Then, by rules M AIN -M EMORY1 and

?Rd(n)

O NE -L INE -M AIN -M EMORY2 in Figure 4, we have MM(M) −−−−→

MM(M 0 ), where M 0 = M.

{!Rd(n)}

0

For the cores CR, rule T OP -S YNCH gives CR −−−−−→ CR . Then,

recursively decomposing with rule C ORE -C OMMUNICATION, we

{!Rd(n)}

{?Rd(n)}

get CRi −−−−−→ CR0i for some CR0i , and CR j −−−−−→ CR0j for all

{!Rd(n)}

CR j ∈ CR\CRi and for some CR0j . For the step CRi −−−−−→ CR0i ,

!Rd(n)

by rule S END -R ECEIVE -M ESSAGE in Figure 4, we get CRi −−−−→

CR0i , which is a step local in CRi . Consider the relevant rules in Figure 4, namely P R R D2 , P R R D B LOCK2 , P RW R3 and P RW R B LOCK1 .

The address n is either not in the local cache or its status is inv before the step, and is removed from the local cache in all these rules

after the transition.

{?Rd(n)}

For the step CR j −−−−−→ CR0j , by rule R ECEIVE -M ESSAGE in

?Rd(n)

Figure 4, we get CR j −−−−→ CR0j . Considering the relevant rules,

F LUSH -O NE -L INE and I GNORE -F LUSH -O NE -L INE in Figure 4,

the address n is not affected after the step. Since by induction, the

configuration before the step satisfies the lemma, and the configurations after the labelled step in the main memory as well as in all

cores satisfies the lemma, the global configuration after the transi0

tion, MM(M 0 ) Qu(sst0 ) CR , also satisfies the lemma.

{!RdX(n)}

0

Case (ii): MM(M) Qu(sst) CR −−−−−−→ MM(M 0 ) Qu(sst0 ) CR

By rule T OP -S YNCH in Figure 3, we are given that R = {?RdX(n) |

!RdX(n) ∈ S} ∪ {?Rd(n) |!Rd(n) ∈ S}. In this case, we have R =

{?RdX(n)}.

?Rd(n)

O NE -L INE -M AIN -M EMORY1 in Figure 4, we have MM(M) −−−−→

MM(M 0 ), where M 0 = M[n 7→hk, invi].

{!RdX(n)}

{!RdX(n)}

0

{?RdX(n)}

we get CRi −−−−−−→ CR0i for some CR0i , and CR j −−−−−−→ CR0j

{!RdX(n)}

!RdX(n)

CRi −−−−−→ CR0i , which is a step local in CRi . Consider CRi =

Ci (Mi , ∼i , di ), and the relevant rules in Figure 4, namely P RW R2

and P RW R B LOCK2 . The status of the address n in the cache local

in CRi is updated to mo after the step.

{?RdX(n)}

For the step CR j −−−−−−→ CR0j , by rule R ECEIVE -M ESSAGE in

?RdX(n)

Figure 4, we get CR j −−−−−→ CR0j . Considering the relevant rules,

I NVALIDATE -O NE -L INE and I GNORE -I NVALIDATE -O NE -L INE in

Figure 4, we have after the step the status of address n in all CR j

either inv or n does not exist in the domain. This together with having status(M 0 , n) = inv and status(Mi , , n) = mo after the transition

shows that part (a) and part (b) in the lemma hold, and therefore

concludes the case.

A.3

Proof of Lemma 3

P ROOF. An initial configuration MM(M) Qu(sst) CR : H satisfies the lemma since all the memory blocks in the main memory

have the status shared, and all cores have empty caches and no

data instructions or runtime statements. It is trivial that the invariant holds for the transition rules which are local to either the main

memory or a core because the transitions do not modify the version

number of a block address.

We consider in the following the cases where transitions are

communication steps between main memory and a core CRi ∈ CR.

The rules responsible for such communication steps are those for

fetching/flushing a memory block from/to the main memory in Figure 3. Let CRi = Ci (Mi , ∼i , di ` rsti ) : hi , and n be the block address.

Case F ETCH1 : MM(M); (Mi , ∼i , di ) → MM(M); (Mi0 , ∼i , di0 )

We are further given in this case that M(n) = hk, shi, i.e., status(M, n) =

sh, and Mi0 = Mi [n 7→hk, shi] where n0 = select(M2 , ∼2 , n) and n0 = n.

By Lemma 2(c), status(M, n) = sh implies status(M j , n) 6= mo for

all C j (M j , ∼ j , d j ` rst j ) : h j ∈ CR. We just have to consider those

cores Cl (Ml , ∼l , dl ` rstl ) : hl ∈ CR where status(Ml , n) = sh. (Note

that the cases where status(M j , n) = inv or n ∈ dom(M j ) are not relevant in this lemma.) By induction, version(M, n) = version(Ml , n) =

k. Since Mi0 = Mi [n 7→hk, shi], and the status of the address n in the

main memory and in all other cores remain unchanged, the configuration after the step satisfies the invariant. It is analogous for case

F ETCH2 .

Case F LUSH1 : MM(M); (Mi , ∼i , di ) → MM(M 0 ); (Mi0 , ∼i , di0 )

In addition, we have in this case Mi (n) = hk, moi, M 0 = M[n 7→hk +

1, shi], and M20 = M2 [n 7→hk +1, shi]. Mi (n) = hk, moi implies M(n) =

hi, invi by Lemma 2(a), and by Lemma 2(b) ∀ C j (M j , ∼ j , d j `

rst j ) : h j ∈ CR\CRi .(status(M j , n) = inv ∨ n 6∈ dom(M j ). The case

is concluded by having M 0 = M[n 7→hi+1, shi] and Mi0 = Mi [n 7→hi+

1, shi] after the step.

The cases F ETCH3 and F LUSH2 hold immediately because the

transition step does not change the status or version of any memory

location in either the main memory or cache local in a core.

A.4

Proof of Lemma 4

P ROOF. In order for the core CRi to read from (or write to) a

block address n successfully in its local cache, that is, to generate

the event R(Ci , n) (or W (Ci , n)), the relevant reduction rules include

P R R D1 , P R R D B LOCK1 , P RW R1 , P RW R2 and P RW R B LOCK2 in

Figure 4.

Consider the rule P R R D1 where status(Mi , n) 6= inv, that is, the

status of n is either mo or sh. We first consider the case where

status(Mi , n) = mo, it is trivial by Lemma 2(a) and (b) that Mi (n)

has the most recent copy according to Definition 2(b). For the case

where Mi (n) = hk, shi, it implies by Lemma 2(d) that status(M, n) =

sh which gives by Lemma 2(c), that ∀CR j ∈ CR where CR j =

C j (M j , ∼ j , d j ). status(M j , n) 6= mo. Similar to the proof of Lemma 3,

we just have to consider those cores Cl (Ml , ∼l , dl ` rstl ) : hl ∈ CR

where status(Ml , n) = sh. Then by Lemma 3, we get version(Mi , n) =

k = version(M, n) = version(Ml , n), which satisfies Definition 2(a)

conclude the case. The rest of rules mentioned above can be proven

analogously.